Poll: Nvidia shows full ray tracing at 1440p/30fps. Can we expect next-next-gen console games to do full RT? (52 votes)

check 19:47 marbles demo

@hardwenzen: damn lol any hope that the next consoles will pull it off in any capacity has been shattered to bits.

I want to know what the sample rate or the number of rays is. Just having ray tracing is not enough. We need higher-quality ray tracing.

To do it properly and in real time won't happen for years.

Dude has a sweet kitchen. That whole range hood sculpture-thing must have cost a bit.

I turned mine off in Control, game looks better now imo and the performance? GTFO, soooooo much better!

@rzxv04: The PS6 is inside the PS5 we just have to wait for GodCerny to unlock it.

The PS6 is of course hidden inside the custom SSD and already partially unlocked for more triangles, duh.

@mrbojangles25: whaaat? I'm playing control now and it definitely is worthwhile playing with RTX. But also turn on DLSS - no joke, it looks better with it on than off. Like, noticeably. I was surprised - I doubt that's normal.

And it turns a 30fps RTX experience into a 60fps one. Win-win

1440p? Ugh, that is so 2010. It's 8K now.

For consoles 1440p is very much 2020.

But that video is not from a PS or Xbox, it's from a PC, so yeah, my comment is spot on.

Let me guess: 1440p is now OK with full ray tracing, just like using upscaling methods like DLSS is.

It's actually a beauty seeing you people grasp this badly and accept now what you so fiercely downplayed in the past.

Upscaling is upscaling, no matter if DLSS is better or not. Your overpriced hardware was brought to its knees and an upscaling method was implemented, yeah, just like the checkerboarding that so many of you so fiercely attacked.

Now I/O improvement is great even if it comes at the expense of GPU resources. ¯\_(ツ)_/¯

What goes around comes around.

Dude just shut up, it's a demo lol.

1440p/30 is fine to showcase the path-tracing capabilities of the card. If this were a game, most PC gamers would drop it to get 60fps.

Nothing to be taken away, just like UE5, this shit is a demo. It looks cool and shows off nice features. Nothing more to it. Quit looking for this "ah ah, got you!" moment.

Control is my favorite Remedy game to date, and playing it with RT is totally worth it. WTH are you smoking? DLSS 2.0 has really helped the game, and using all the RT goodies is totally worth it. I'm sorry, but playing Control with RT off makes it less impressive, and the same goes for Shadow of the Tomb Raider. I've really been enjoying playing my games with RT.

1440p is still the gold standard on PC since it still looks great, and for next-gen consoles it's very much 2020, but that's a really good thing. No joke about it.

What does “full” ray tracing even mean? I thought you always had to take a small fraction of all rays and then don’t allow too many bounces 🤔

It means that the entire scene is rendered using ray tracing, i.e. path tracing. No rasterization. It's the rendering method used for CGI in movies, though that is done at much, much higher quality and sample counts.
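
To put "samples" and "bounces" in numbers, here is a rough back-of-the-envelope sketch in Python. It only counts path segments and ignores shadow rays and early termination; the sample and bounce counts are illustrative guesses, not figures from the Marbles demo.

```python
def rays_per_second(width, height, fps, samples_per_pixel, max_bounces):
    """Upper bound on path segments traced per second by a path tracer.

    Each sample follows one path of up to `max_bounces` segments, so the
    total is pixels * samples * bounces * frames per second.
    """
    pixels = width * height
    return pixels * samples_per_pixel * max_bounces * fps


# 1440p at 30 fps. The spp and bounce values below are assumptions.
realtime = rays_per_second(2560, 1440, 30, samples_per_pixel=1, max_bounces=4)
offline_style = rays_per_second(2560, 1440, 30, samples_per_pixel=1024, max_bounces=4)

print(f"{realtime / 1e9:.2f} Grays/s at 1 sample per pixel")
print(f"{offline_style / 1e9:.0f} Grays/s at 1024 samples per pixel")
```

The gap between the two numbers is why real-time path tracing leans on very low sample counts plus denoising, while film renders can spend minutes or hours per frame.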

Developers could push RT on consoles; they'll just have to scale back the visuals, is all. I see more games using tidbits of it, which IMO is good enough. I'm not that big on the feature, especially, like I've said, if visuals need to be downgraded to achieve it. Games can look amazing without it.

I will have to give it another go.

Just installed updated drivers, maybe that will help.

Is DLSS something I need to turn on or off?

@rzxv04: Seeing as how the PS3 was able to squeeze out some form of ray tracing way back in the day, I'm sure the new consoles will be able to as well. The GPU will be based on the new AMD RDNA 2 cards, which are already confirmed to have hardware-based ray tracing.

@mrbojangles25: i'd turn it on. It looks worse with it off - at least on my setup: all rt and visuals set to max at 1440p with dlss on vs off.

Yeah I just tried it out, definitely looks better and performs better.

Had RT to "medium" with DLSS off and was only getting 45 FPS.

Turned DLSS on then set it to "High" and now I'm getting about 70 FPS

Also 1440p, everything else maxed. Pretty incredible, really.

One question, though: when I turned on DLSS, it lowered my "render resolution" or something like that...what does that mean?

Yet the PS3 did not do any real-time ray tracing in any applicable situation. It was all BS hype and marketing. It took three PS3s to render a low-polygon car model using only primary rays and a single RT reflection, at a poor frame rate.

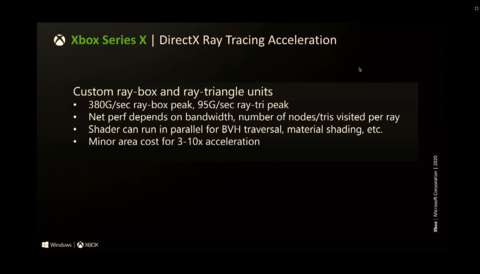

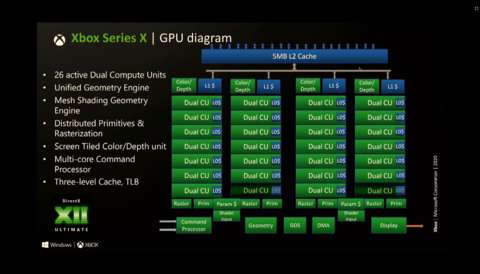

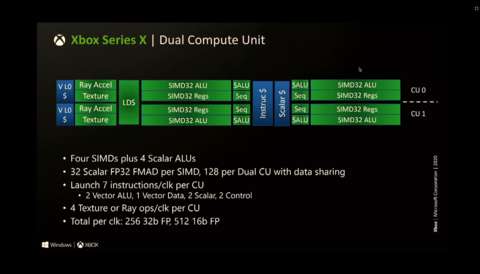

RDNA 2 does not have dedicated hardware for ray tracing, if the XSX is a prelude to the design of RDNA 2.0. It's using TMUs and CUs to do RT, which will eat into the overall performance of the GPU depending on how much RT is used. Devs are going to have to juggle and compromise on what to ray trace and what not to, especially on the consoles.

The consoles are not going to do full-scene ray tracing. It's going to be a hybrid method where you enhance normal rasterized lighting and shadowing, and/or ray trace only a few select items. Nvidia took a stab at the XSX with its equivalent of 13 RT TFLOPS. So if 52 CUs at 1.8 GHz can only do 13 RT TFLOPS, what do you expect a 72/80 CU, 2.1 GHz RDNA 2 part will do? Even if it's 3x that amount, it's still nowhere near the RT performance of the RTX 3080. I think RDNA 2 will compete quite well in non-RT games, but when it comes to doing both, anything under a 72 CU GPU is going to fall short.
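
For a rough sense of the scaling in question, here is a small Python sketch. It assumes RT throughput scales linearly with CU count and clock speed, which is an assumption and not a confirmed property of RDNA 2; the baseline is the 52 CU / 1.8 GHz / 13 "RT TFLOPS" figure quoted above, and the function name is made up for illustration.

```python
def scaled_rt_tflops(base_rt_tflops, base_cus, base_ghz, target_cus, target_ghz):
    """Scale an RT throughput figure linearly with CU count and clock.

    Linear scaling is an assumption; real hardware may scale worse
    (bandwidth limits) or differently (architectural changes).
    """
    return base_rt_tflops * (target_cus / base_cus) * (target_ghz / base_ghz)


# Baseline from the post: 52 CUs at 1.8 GHz, 13 "RT TFLOPS".
for cus in (72, 80):
    estimate = scaled_rt_tflops(13.0, 52, 1.8, cus, 2.1)
    print(f"{cus} CUs at 2.1 GHz: ~{estimate:.1f} RT TFLOPS")
```

Under that assumption the 72 and 80 CU parts land at less than twice the console figure, so the "3x" case above is already a generous one.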

Depending on the game and which DLSS setting you use, it will automatically lower the actual resolution the game is rendered at, and then it uses the tensor cores (DLSS) to upscale that lower-resolution render to your screen resolution. Basically, it sets the game resolution to 720p or whatever and then scales it up to 1440p.

@mrbojangles25: isn't it wild?! Feels like cheating somehow.

So the framerate gains are because of the lower render resolution. The "traditional" GPU pipeline is rendering at that lower resolution. But then the machine learning algorithm is using that lower fidelity info to create the true 1440p target image. The surprising thing to me is that it actually looks better than native. I *think* it's because the algorithm was trained on ultra fidelity info, and so its model of what the image should look like, given the clues provided from the lower fidelity render, is actually better than "reality". It looks to me like there's some artifacting that it cleans up. A bit like supersampling without the cost. But that's just my guess.
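
Here is a minimal Python sketch of the render-resolution math behind that. The per-axis scale factors are the commonly cited values for DLSS 2.x modes and are an assumption here; individual games can expose different values or let you pick the render resolution directly.

```python
# Per-axis render scale for each DLSS 2.x mode (commonly cited values,
# treated as an assumption; games can differ).
DLSS_SCALE = {
    "quality": 2 / 3,
    "balanced": 0.58,
    "performance": 0.5,
}


def render_resolution(out_w, out_h, mode):
    """Internal resolution the GPU renders before DLSS upscales it."""
    scale = DLSS_SCALE[mode]
    return round(out_w * scale), round(out_h * scale)


def pixel_fraction(out_w, out_h, mode):
    """Share of native pixels that are actually shaded each frame."""
    w, h = render_resolution(out_w, out_h, mode)
    return (w * h) / (out_w * out_h)


for mode in DLSS_SCALE:
    w, h = render_resolution(2560, 1440, mode)
    share = pixel_fraction(2560, 1440, mode)
    print(f"{mode}: {w}x{h}, {share:.0%} of native pixels")
```

At a 1440p output, performance mode works out to 1280x720, which matches the "720p scaled up to 1440p" description: the GPU shades a quarter of the pixels and the upscaler fills in the rest.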

This site says big navi will have hardware ray tracing for flagship video cards

https://www.techradar.com/news/amds-rdna-2-gpus-are-coming-this-year-but-they-wont-all-feature-ray-tracing-support

I can find other links that say the same thing, and they're more recent. It's possible AMD won't include it, but the rumors say otherwise. Unless you have some other information to disprove this, where are you getting your info on RDNA 2 not having hardware ray tracing?

Also, console manufacturers have always had custom GPUs that may be cut down in some ways but incredibly advanced in others. I wouldn't be surprised if the Xbox or PS5 had some way to do it. That's the power of mass manufacturing 200 million consoles.

63% of people clearly don't understand raytracing that well. Next-next gen is likely 7-14 years from now, which is a very, very long time away and allows a) GPUs to catch up and b) raytracing optimization to get better.

Also VR has this easily. Fully raytraced AAA games will happen in VR first because it will always be less demanding to do photorealism in VR when a full raytraced pipeline is viable.

Ray tracing support is not the same as having separate dedicated hardware. Even your link suggests only the top end may have RT and not the lower-end SKUs. The article is all speculation and no substance.

The info I'm getting for RDNA 2.0 is from the presentation MS gave on the XSX at Hot Chips. They explained how their GPU, which is based on RDNA 2.0, works. They are using a dual CU config to offload RT, but each CU block also shares TMUs to process RT. Notice how Nvidia's CUDA core count has doubled vs. Turing; the RDNA CU design is now double-pumped as well. But instead of using dedicated RT hardware, their RT and normal rasterization are both done on CUs and TMUs, which sounds like rasterization performance will most likely be affected more depending on RT usage. So RDNA 2.0 may be more flexible in some areas, but allocating a good chunk of its CUs to RT leaves normal rendering performance to suffer. Also, the RT method they're using is different from Nvidia's in that they're using a Bounding Volume Hierarchy, which basically means they're using a hybrid RT approach.

Now for upscaling, it's still going to be shader-based, using 4/8/16-bit FP upscaling, which means you are not going to see AMD achieve the same performance results as DLSS, since you have to allocate shader processors to up-res the image, while on Nvidia the tensor cores do the upscaling and the shader and RT cores are left alone.

Until we see AMD show their hand on the desktop versions, the consoles give us an idea of how they're doing things. That being said, the console versions of RDNA 2.0 are weaker and will have to be more conservative in what they ray trace. Also, the rumor of RDNA 2's RT performance scaling with CU count seems to be holding true as well.

Didn't know that. Can you link the slide (or a screenshot of it)? AMD is in big trouble if that's their approach to RT while Nvidia not only has dedicated HW but can also run it concurrently with shaders.

63% of people clearly don't understand raytracing that well.

You couldn't be more correct. I am reading some of the stuff here and it's astounding how people who think they know what they are talking about are just spewing nonsense. You know who you are.

Just do a Google search for XSX Hot Chips, but here are some of the slides. Yeah, the dual CU design allows them to do RT without designing special dedicated hardware. This is why the XSX can only pump out 116 gigapixels per second vs. the RX 5700 XT's 122 GP/s, even though the XSX has 3328 stream processors vs. the 5700 XT's 2560. It says that their hybrid method with the dual CU allows about 13 RT TFLOPS alongside its normal 12 TFLOPS of shader performance. So it's like having the RTX 2080 Super's shader performance but only RTX 2060-like RT performance.

Also, on the upsampling: if the XSX has no dedicated machine learning hardware, there's reason to believe AMD's RDNA 2 desktop cards won't have any either. So the task is going to be left up to the shader processors, using 4-, 8-, and 16-bit FP precision to upsample, which will take some of the shader processors away from rendering.

So even the dirt-cheap $299 Xbox Series S will have ray tracing support. This pretty much confirms all the consoles will be getting it. The question now is whether it will be hardware ray tracing on the higher-tier consoles. I doubt it, but we will see. Also, AMD is guaranteed to have hardware ray tracing on its flagship GPU, and at least DirectX Raytracing on mid-tier and lower-tier GPUs.

The problem is that with only a 4 TFLOP RDNA 2 GPU in the XSS, at best it will do 4-5 RT TFLOPS. At that point, implementing RT on such a low-performance model might be pointless. We are talking about RT performance well under RTX 2060 levels. Unless they render at 720p, upscale it to 1080p, and target only 30 fps, maybe.
