Xbox One OWNS the PS4 with a technique called "Tiled Resources"

This is a marketing gimmick. What they call "tiled resources" is really a sort of tessellation BUT instead of increasing the amount of detail in a 3d object, it removes objects you don't see. Current gen games like COD, Battlefield, and many other PC/PS3/Xbox games already utilize this type of technology and have since the PS1 days.

Ever try playing COD in wire frame mode? Go inside a building and you will see trees and other 3d models disappear from the scene until you can physically see them again. They are only adapting this technology to how close your view port is to something which replaces LOD models and textures. I wouldn't expect much or anything at all from this.

Don't be fooled by this marketing scheme.

TrooperManaic

[QUOTE="superclocked"]This doesn't take the move engines into account, nor the fact that the XBox One's custom texture compression hardware reduces the amount of bandwidth that is actually needed...Deevoshun

Bingo!

When you have 1/3 of the bandwidth of the competition you need all the compression you can get...

Like the heavily compressed pixelized cutscenes of the 360 vs the bluray quality video of the Ps3.

ERMAHGURD!!!!! MS has it all.... Tiled Resources... DAT CLOUD.... DOGZ, TV, EXPERIENCES, SPY CAM STUFF... and lets not forget CONTENT, they've got that in droves!!!

What can Sony possibly do to combat all this WIN!?

*facepalm*

sounds interesting but like the article points out, this isn't a novel concept.. maybe the Xbox One has a proprietary way to make this process easier and more efficient but the overall concept will surely be available to PS4 developed games as well.. it may turn out to be a small advantage to the XB1 (Microsoft is a software company after all) but i wouldn't claim ownage or anything..

Antwan3K

Considering that most developers say that PS4 devtools are at a more mature stage than the X1 devtools, i somehow doubt that.

[QUOTE="Krelian-co"]

[QUOTE="ronvalencia"] Your "underclocked 7770" for X1 is BS.ronvalencia

trying to care about your opinion, but i really couldn't, sorry :(

1. X1, 7850, PS4 has 2 primitives per cycle. 7770 has 1 primitive per cycle. This factor is important for DX11 titles.

2. 7850's 800mhz 32 ROPS would be underutilized as shown by 7950(non-BE)'s 800Mhz 32 ROPS results.

-----------------------

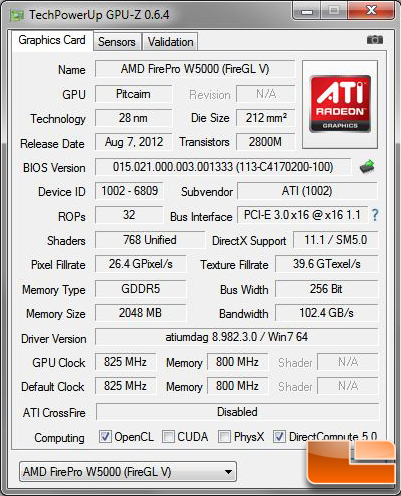

I'll post another 768 stream processor GCN, i.e. AMD FirePro W5000 SKU.

Notice FirePro W5000's 102 GB/s video memory bandwidth almost matches VGLeaks' eSRAM memory bandwidth.

Some gaming benchmarks for AMD FirePro W5000.

http://www.tomshardware.co.uk/workstation-graphics-card-gaming,review-32643-9.html

7850 = 45.

W5000 = 33.

7870 GE's 52.3 fps / 20 CUs = 2.615 x 10 CUs = 26.15 fps which roughly matches 7770's 25.9 fps result. My scale down theory and actual results works

FirePro W5000's 12 CUs (825 Mhz) scales down from Radeon HD 7850's 16 CUs (860 Mhz).

7850's 45.3 fps / 16 CUs (860Mhz) = 2.831 x 8 CUs = 22.65 fps which roughly matches 7750's 21.5 fps result. 7750 is clocked at 800Mhz. Again, my scale down theory and actual results works.

If we use the 7850 and 7750 as the two points for the "line of best fit", FirePro W5000 falls into the expected slot for scaled 12 CUs @ ~800 Mhz.

---------------------

If you scale 7750's 21.1 FPS result to 12 CUs you get 31.65 FPS which is close to W5000's 33 FPS result.

If you scale 7750's 21.1 FPS result to 16 CUs you get 42.2 FPS which is close to 7850's 45 FPS result. You got 60Mhz difference between 7750 and 7850.

If you scale 7750's 21.1 FPS result to 18 CUs you get 47.47 FPS which is between 7850's and 7870's FPS results.

The gap between 31.65 FPS and 47.47 FPS is about 33 percent i.e. 31.65 FPS has about 66 percent of 47.47 FPS performance.

or

The gap between 47.47 FPS and 31.65 FPS is about 49.9 percent i.e. 47.47 FPS has 49.9 percent extra performance over 31.65 FPS.

Both 31.65 FPS and 47.47 FPS plays the same game with the same settings.
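The scaling arithmetic in the post above can be reproduced in a few lines. This is only a sketch of the poster's linear "FPS per CU" estimate, taking the 7750's 21.1 FPS at 8 CUs as the base; the function name `scale_fps` is made up for illustration, and the model deliberately ignores clock speed, ROPs and memory bandwidth.

```python
def scale_fps(base_fps: float, base_cus: int, target_cus: int) -> float:
    """Linearly scale a frame rate by compute-unit count (ignores clocks, ROPs, bandwidth)."""
    return base_fps / base_cus * target_cus


# Base point taken from the post: 7750 result of 21.1 FPS with 8 CUs.
BASE_FPS, BASE_CUS = 21.1, 8

estimates = {cus: scale_fps(BASE_FPS, BASE_CUS, cus) for cus in (12, 16, 18)}
for cus, fps in estimates.items():
    print(f"{cus} CUs -> {fps:.3f} FPS")

low, high = estimates[12], estimates[18]
print(f"12 CU estimate as a share of the 18 CU estimate: {low / high:.1%}")
print(f"18 CU estimate's advantage over the 12 CU estimate: {high / low - 1:.1%}")
```

Because the model is linear, the two ratios depend only on the CU counts (12/18 and 18/12), so they come out as two thirds and one half; the 49.9 percent quoted in the post is the same figure computed from rounded FPS values.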

ill give you a small hint about your copy paste in every thread, no matter how you may want to put it xbone is a gimped gpu compared to ps4.

AMD PRT is designed smaller/fast memory pool. AMD PRT's value is less for single speed memory pool.ronvalencia

No is not that is your biased ass opinion and silly theories.

PRT will work the same with 4GB of memory that with 1GB or 2GB, or 7 GB,is a way to maximize memory,which will also works on PS4.

By the way WTF do you think GDDR5 is.? Slow ass memory.?

So what AMD GCN on PC have 32 MB of ESRAM.? with DDR3 memory.?

Because PRT is a GCN features and almost all GCN are GDDR5 which is very fast memory,and that i know non GCN has ESRAM in it..

The cloud is being used to create dynamic AI in games. Since the always online feature was removed, it is now very unlikely that all games will use the cloud power. But, once the entire world is connected through fiber optic connections, the cloud will indeed be able to much more amazing things than just advanced AI...superclocked

That is funny, Titanfall is a multiplayer-only game, so where the fu** is the AI in the game? Some bots? And you tell me that can't be done by the CPU already? Because bots have been around for ages too.

By the time the whole world is connected by fiber optics the Xbox One will be long gone and buried.

[QUOTE="dream431ca"]

[QUOTE="superclocked"]You make such a compelling argument...ronvalencia

Always :cool:

Kidding aside, the ESRAM is a convoluted way of enhancing the bandwidth issue. In the XB1, the DDR3 bandwidth alone is 68.3 GB/s, while the GDDR5 in the PS4 is around 176 GB/s. Pretty big gap. The ESRAM is an unknown at this point:

- Is the ESRAM a cache, or software managed memory?

- Can the ESRAM read and write at the same time?

- Do developers need to jump through hoops to access the ESRAM, potentially making development more complicated?

As of right now, the PS4 is in a much better position when it comes to bandwidth. It's also a very simple architecture to develop games for.
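For anyone wondering where the 68.3 GB/s and 176 GB/s figures come from, peak bandwidth is just bus width times transfer rate. The sketch below assumes a 256-bit bus on both consoles, DDR3-2133 on the XB1 and 5.5 Gbps GDDR5 on the PS4; those configurations are the commonly reported ones and are not stated in this thread, so treat them as assumptions. `peak_bandwidth_gb_s` is a made-up helper name.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, mega_transfers_per_sec: float) -> float:
    """Peak bandwidth = bytes per transfer * transfers per second (1 GB = 10**9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * mega_transfers_per_sec * 1e6 / 1e9


# Assumed configurations (not given in the thread).
xb1_ddr3 = peak_bandwidth_gb_s(256, 2133)   # DDR3-2133 on a 256-bit bus
ps4_gddr5 = peak_bandwidth_gb_s(256, 5500)  # 5.5 Gbps GDDR5 on a 256-bit bus

print(f"XB1 DDR3:  {xb1_ddr3:.1f} GB/s")
print(f"PS4 GDDR5: {ps4_gddr5:.1f} GB/s")
print(f"PS4 / XB1 main memory ratio: {ps4_gddr5 / xb1_ddr3:.2f}x")
```

These are theoretical peaks for main memory only; the ESRAM questions listed above are exactly what this calculation leaves out.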

1. Most likely a cache since it doesn't have the ROPs units like in Xbox 360's EDRAM.

AMD PRT's treatment on the fast memory domain.

2. SRAM has dual ports

3. less latency can have less cache size.

Nothing you quoted say PRT will not work on GDDR5 or that it will work better on ESRAM with slow DDR3.

By the way to bad that ESRAM will not inject 700 Gflops to compensate for the gap in GPU,or 2GB more of ram for video to compensate what the xbox one OS eat.

[QUOTE="superclocked"]This doesn't take the move engines into account, nor the fact that the XBox One's custom texture compression hardware reduces the amount of bandwidth that is actually needed...Deevoshun

Bingo!

Which mean nothing since the 1.23 TF the xbox one has don't need a 200GB/s bandwidth,to put things into perspective the 7770 has 1.28 TF and only has 72GB/s bandwidth why didn't AMD put a 153GB/s bandwidth on the 7770 like it is on the 7850.? Because it wasn't need it..

I love how arguments like that are based on the premise that PS4 developers wouldn't use texture compression if they felt the need to and cram a crapload more information to the framebuffer irregardless of a 32MB tile..

Lems are going from 0 to idiot at the speed of ridiculous to the power of moronic.

This is real computer science, not some fanboy pseudo-science.

Read this article: http://www.escapistmagazine.com/news/view/125435-Microsoft-Tiled-Resources-Key-To-Xbox-One-Graphics

So it looks like it will be another console gen of Sony's console being owned in the graphics department.

Baurus_1

Uh yeah man, PS4 has an AMD GPU that does this too, just like the XBone. Self-owned.

[QUOTE="ronvalencia"]

[QUOTE="Krelian-co"]

trying to care about your opinion, but i really couldn't, sorry :(

Krelian-co

ill give you a small hint about your copy paste in every thread, no matter how you may want to put it xbone is a gimped gpu compared to ps4.

I'll give you a small hint. My post actually illustrates the lower GPU vs higher GPU results (for CryEngine 3 DX11).

Your limited brain is set in console wars.

I love how arguments like that are based on the premise that PS4 developers wouldn't use texture compression if they felt the need to and cram a crapload more information to the framebuffer irregardless of a 32MB tile..

Lems are going from 0 to idiot at the speed of ridiculous to the power of moronic.

Shewgenja

AMD PRT has nothing to do with texture compression.

"Reducing the value" of AMD PRT doesn't mean PS4 is missing AMD PRT functions i.e. it's a shade of grey instead of black or white.

DX11 class GPUs already includes DX11 texture compression/decompression hardware. It would be silly not to use it.
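To make the PRT / tiled resources idea concrete: a large virtual texture is split into fixed-size tiles, and only the tiles actually being sampled are kept in a small pool of physical memory. The toy model below is a sketch of that residency idea only; it is not how any driver, console SDK or the DX11.2 API works. The `TilePool` class and the LRU eviction policy are assumptions for illustration, the 64 KB tile size is the one Direct3D tiled resources use, and the 32 MB pool simply borrows the ESRAM figure discussed in this thread.

```python
from collections import OrderedDict

TILE_BYTES = 64 * 1024            # tile size used by Direct3D tiled resources
POOL_BYTES = 32 * 1024 * 1024     # hypothetical fast-memory budget (figure borrowed from the thread)


class TilePool:
    """Toy model: a fixed pool of physical tiles backing a much larger virtual texture."""

    def __init__(self, capacity_tiles: int):
        self.capacity = capacity_tiles
        self.resident = OrderedDict()  # tile id -> placeholder for tile data
        self.loads = 0                 # number of tiles streamed in so far

    def request(self, tile_id) -> bool:
        """Return True on a hit. On a miss, stream the tile in, evicting the
        least recently used tile if the pool is full, and return False."""
        if tile_id in self.resident:
            self.resident.move_to_end(tile_id)  # mark as most recently used
            return True
        if len(self.resident) >= self.capacity:
            self.resident.popitem(last=False)   # evict least recently used tile
        self.resident[tile_id] = None
        self.loads += 1
        return False


pool = TilePool(capacity_tiles=POOL_BYTES // TILE_BYTES)
print(f"Pool holds {pool.capacity} tiles")

# Touch more distinct tiles than fit, then revisit the first one.
for i in range(pool.capacity + 10):
    pool.request(("albedo", i))
print("First tile still resident:", pool.request(("albedo", 0)))
print("Tiles streamed in so far:", pool.loads)
```

The same logic works with any pool size, which is the point both sides are circling: the technique is not tied to one memory layout, but how often you hit the eviction path depends on how small the fast pool is.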

[QUOTE="Deevoshun"]

[QUOTE="superclocked"]This doesn't take the move engines into account, nor the fact that the XBox One's custom texture compression hardware reduces the amount of bandwidth that is actually needed...tormentos

Bingo!

Which mean nothing since the 1.23 TF the xbox one has don't need a 200GB/s bandwidth,to put things into perspective the 7770 has 1.28 TF and only has 72GB/s bandwidth why didn't AMD put a 153GB/s bandwidth on the 7770 like it is on the 7850.? Because it wasn't need it..

What happens to 7770 with memory overclocking (e.g. 1.45Ghz, 5800Mhz effective)?

128bit PCB is targeted for particlar price segment.

If AMD needs a faster memory bus for ~1.26 TFLOPS class GCN, they'll config a SKU like W5000 (aka the gimped 7850 or prototype 7850 ES or unreleased 7830).

[QUOTE="ronvalencia"]AMD PRT is designed smaller/fast memory pool. AMD PRT's value is less for single speed memory pool.tormentos

No is not that is your biased ass opinion and silly theories.

PRT will work the same with 4GB of memory that with 1GB or 2GB, or 7 GB,is a way to maximize memory,which will also works on PS4.

By the way WTF do you think GDDR5 is.? Slow ass memory.?

So what AMD GCN on PC have 32 MB of ESRAM.? with DDR3 memory.?

Because PRT is a GCN features and almost all GCN are GDDR5 which is very fast memory,and that i know non GCN has ESRAM in it..

"Reducing the value" of AMD PRT doesn't mean PS4 is missing AMD PRT functions, i.e. it's "shades of grey" instead of black or white. There's less value for AMD PRT when you have 8 GB GDDR5 (which will be reduced for user-land games), i.e. you could load the entire texture data into a large GDDR5 memory pool before using AMD PRT/Tiled Resources. This POV is the same for 6 GB GDDR5 equipped 7970s/W9000/Titan. There's no bias in that.

X1 will be using Tiled Resources/AMD PRT sooner than PS4 or the gaming PCs. AMD PRT wouldn't change a GPU's potential, e.g. a GCN with 32 CUs would still be faster than lesser GCNs. Tiled Resources/AMD PRT is just extra insurance for when you run out of fast video memory or when you overcommit your fast video memory.

---------

On the PC-OEM embedded side, AMD is working on MCM GCN, e.g. "Venus PRO MCM", the first GCN with embedded/on-chip GDDR5 modules, i.e. X1's 32 MB eSRAM setup is not ideal for legacy PC support, since legacy PC apps don't use DX11.2.

still doesnt change the fact that the PS4 gpu has noticeably more horsepower then the Xb1 gpu, along with more GPU bandwidth

xboxiphoneps3

My posted Crysis 2 tomshardware benchmark illustrates that.

On games, there's no way W5000 level GCN would equal 7850 let alone "7860" (estimated 18 CU GCN placement), but X1 is no Wii U.

true, its fair to say both these consoles will produce some great looking graphics though (ps4 already has showed me quite impressive games... xbox one not so much yet) , but im even more excited for the edge that the the PS4 apu has over the XB1 apu

[QUOTE="ronvalencia"]

I'll give you a small hint. My post actually illustrates the lower GPU vs higher GPU results (for CryEngine 3 DX11).

Your limited brain is set in console wars.

tormentos

No your post illustrate how pathetic you are, you still tagging the W5000 to the xbox one which is a joke period,the xbox one is a gimped 7790,with 2 less computer units and lower clock speed.

All of of what your post do is prove that no matter what the PS4 will outperform the xbox one,even if by a miracle it was a W5000s,and not a 7790,because the W5000s is a 1.28 TF in fact 1.3 on ATI page,not 1.23 oh and the PS4 is not a 7850 is over it.

rons knowledge on GCN architecture >>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>> Tormentos. Stop trying so hard

[QUOTE="ronvalencia"]

I'll give you a small hint. My post actually illustrates the lower GPU vs higher GPU results (for CryEngine 3 DX11).

Your limited brain is set in console wars.

tormentos

No your post illustrate how pathetic you are, you still tagging the W5000 to the xbox one which is a joke period,the xbox one is a gimped 7790,with 2 less computer units and lower clock speed.

All of of what your post do is prove that no matter what the PS4 will outperform the xbox one,even if by a miracle it was a W5000s,and not a 7790,because the W5000s is a 1.28 TF in fact 1.3 on ATI page,not 1.23 oh and the PS4 is not a 7850 is over it.

You do realise that AIB(Add In Board) vendors and large PC-OEMs can set different GPU clock speeds?

If one follows AMD's website, my 7950 should have 800Mhz GPU instead of 900Mhz (which is set by AIB).

From http://pcpartpicker.com/parts/video-card/#c=71&sort=d3 7950 product list, more than half of the 7950 offerings don't follow the reference 800Mhz clockspeed.

From http://pcpartpicker.com/parts/video-card/#sort=d3&qq=1&c=112 7970 GE product list, more than half of the 7970 GE offerings doesn't follow the reference 1000Mhz e.g. using boost clock speed as base clock speed i.e. 1050Mhz

From http://pcpartpicker.com/parts/video-card/#sort=d3&qq=1&c=81 7850 product list, most 7850 offerings has non-reference clockspeeds e.g. 860Mhz and beyond.

From http://pcpartpicker.com/parts/video-card/#sort=d3&qq=1&c=122 7970 product list, one 7970 offering has underclocked 900Mhz clockspeed i.e. below 925Mhz reference.

W5000 is ONLY used as a guide for my 12 CU scale estimates.

----------------------

With the same primitive per cycle rate and same CU count, the difference between 1.3 TFLOPS and 1.23 TFLOPS is minor.
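The TFLOPS numbers being thrown around follow from CU count and clock. A GCN compute unit has 64 stream processors, and a fused multiply-add counts as two FLOPs per clock. The sketch below uses the 12 CU counts and the ~800 MHz / 825 MHz clocks mentioned earlier in the thread; the 1000 MHz clock for the 10 CU 7770 is an assumption (its reference clock, not stated here), and `gcn_peak_tflops` is a made-up helper name.

```python
def gcn_peak_tflops(compute_units: int, clock_mhz: float) -> float:
    """Peak single-precision TFLOPS for a GCN part."""
    lanes_per_cu = 64              # stream processors per GCN compute unit
    flops_per_lane_per_clock = 2   # one fused multiply-add = 2 FLOPs
    return compute_units * lanes_per_cu * flops_per_lane_per_clock * clock_mhz * 1e6 / 1e12


x1_estimate = gcn_peak_tflops(12, 800)   # 12 CUs at ~800 MHz, as discussed in the thread
w5000 = gcn_peak_tflops(12, 825)         # FirePro W5000: 12 CUs at 825 MHz
hd7770 = gcn_peak_tflops(10, 1000)       # 7770: 10 CUs, 1000 MHz clock assumed

print(f"12 CU @ 800 MHz: {x1_estimate:.4f} TFLOPS")
print(f"12 CU @ 825 MHz: {w5000:.4f} TFLOPS")
print(f"10 CU @ 1000 MHz: {hd7770:.4f} TFLOPS")
print(f"825 MHz vs 800 MHz at 12 CUs: {w5000 / x1_estimate - 1:.1%} more")
```

At equal CU count the gap is purely the clock ratio, which is the roughly 3 percent difference behind the "1.3 vs 1.23" figures.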

In general, 7850/7870's 32 color ROPs will NOT be fully utilized i.e. the existence of 7950/7970's results debunks any PS4 advantage in this area.

X1 has

1. Pitcairn's 256 bit wide memory controller and associated L2 cache. Assuming AMD didn't change memory controller and L2 cache setup. 7790 has memory controller and L2 cache setup from 7770.

2. Memory bandwidth issues are mitigated by the dual-port 32 MB ESRAM and JIT LZ compression/decompression hardware. X1's aggregate memory bandwidth is greater than the 7790's.

----------------------

I also used the following dev quotes (not being tied to PS4 exclusivity) as a rough guide

http://www.videogamer.com/news/xbox_one_and_ps4_have_no_advantage_over_the_other_says_redlynx.html

Speaking to VideoGamer.com at E3, Ilvessuo said: " Obviously we have been developing this game for a while and you can see the comparisons. I would say if you know how to use the platform they are both very powerful. I don't see a benefit over the other with any of the consoles."

----

http://www.videogamer.com/xboxone/metal_gear_solid_5_the_phantom_pain/news/ps4_and_xbox_one_power_difference_is_minimal_says_kojima.html

"The difference is small, and I don't really need to worry about it," he said, suggesting versions for Xbox One and PS4 won't be dramatically different.

----

http://gamingbolt.com/ubisoft-explains-the-difference-between-ps4-and-xbox-one-versions-of-watch_dogs

"Of course, the Xbox One isnt to be counted out. We asked Guay how the Xbox One version of Watch_Dogs would be different compared to the PC and PS4 versions of the game, to which he replied that, The Xbox One is a powerful platform, as of now we do not foresee a major difference in on screen result between the PS4 and the Xbox One. Obviously since we are still working on pushing the game on these new consoles, we are still doing R&D."

----

link

"We're still very much in the R&D period, that's what I call it, because the hardware is still new," Guay answered. "It's obvious to us that its going to take a little while before we can get to the full power of those machines and harness everything. But, even now we realise that both of them have comparable power, and for us thats good, but everyday it changes almost. Were pushing it and were going to continue doing that until [Watch Dogs] ship date."

http://www.eurogamer.net/articles/df-hardware-spec-analysis-durango-vs-orbis

"Other information has also come to light offering up a further Orbis advantage: the Sony hardware has a surprisingly large 32 ROPs (Render Output units) up against 16 on Durango. ROPs translate pixel and texel values into the final image sent to the display: on a very rough level, the more ROPs you have, the higher the resolution you can address (hardware anti-aliasing capability is also tied into the ROPs).16 ROPs is sufficient to maintain 1080p, 32 comes across as overkill, but it could be useful for addressing stereoscopic 1080p for instance, or even 4K. However, our sources suggest that Orbis is designed principally for displays with a maximum 1080p resolution."

OK I just realized something, near the end the guy said that "Tiled Resources" will be able to run on OpenGL platforms..... Seems that Tiled Resources will INDEED be available on the PS4 as well as the PC and Xbox One. And if you listen real close, they hint that it will work on Linux as well.

/Thread.

TrooperManaic

AMD's PRT demos were running on vendor-specific OpenGL extensions, i.e. non-standard API calls. NVIDIA has its own version. DX11.2 standardized the API call for Intel, NVIDIA and AMD.

[QUOTE="tormentos"]

[QUOTE="ronvalencia"]

I'll give you a small hint. My post actually illustrates the lower GPU vs higher GPU results (for CryEngine 3 DX11).

Your limited brain is set in console wars.

ronvalencia

No your post illustrate how pathetic you are, you still tagging the W5000 to the xbox one which is a joke period,the xbox one is a gimped 7790,with 2 less computer units and lower clock speed.

All of of what your post do is prove that no matter what the PS4 will outperform the xbox one,even if by a miracle it was a W5000s,and not a 7790,because the W5000s is a 1.28 TF in fact 1.3 on ATI page,not 1.23 oh and the PS4 is not a 7850 is over it.

Nothing you say there master of copy paste,refute what i say..

The xbox one using a W5000 is a theory invented by you, period, so your charts illustrating performance mean sh**. They are not real and don't represent the xbox one GPU. They are useless, even more so when you try to pretend the PS4 is a straight 7850.

And quoting developers is a joke they will remain neutral even if the PS4 does kick the living crap out of the xbox one.

Funny because UBIsoft basically confirmed that it can't push the PS4 much because the game they are doing is multiplatform,now i am more than sure that PC can keep up and surpass the PS4 so they don't have to worry for PC,so why they don't push the PS4 and do PS4 specific things.?

Because the xbox one will not be able to keep up,specially if heavy compute is use.

OK I just realized something, near the end the guy said that "Tiled Resources" will be able to run on OpenGL platforms..... Seems that Tiled Resources will INDEED be available on the PS4 as well as the PC and Xbox One. And if you listen real close, they hint that it will work on Linux as well.

/Thread.

TrooperManaic

But he also said it was exclusive to Windows 8.1 and next gen console(presumably Xbox One). So I don't believe it the PS4 will have this particular technique available to it.

[QUOTE="TrooperManaic"]

OK I just realized something, near the end the guy said that "Tiled Resources" will be able to run on OpenGL platforms..... Seems that Tiled Resources will INDEED be available on the PS4 as well as the PC and Xbox One. And if you listen real close, they hint that it will work on Linux as well.

/Thread.

GravityX

But he also said it was exclusive to Windows 8.1 and next gen console(presumably Xbox One). So I don't believe it the PS4 will have this particular technique available to it.

In fact he says next gen consoles... with an S at the end, and PRT has been part of OpenGL for quite a while. When Sony talked about the PS4 GPU they described it as having more features than DirectX 11.1+.

PRT will be used more on the X1. A lot of devs wouldn't put time and resources into using PRTs; now that it's available for them to use I think they might make use of it. It helps offset scenarios, I guess, where there is a high load of data flying down the pipe and the bandwidth can't keep up. You put a huge chunk of data and compress it into 32 bytes and transmit it over the ESRAM buffer..... Am I right RON????

It's available to both. I don't understand how MS would be able to even prevent a coding technique. It's nothing inherent to the chipsets utilized in either box. On top of that, it's customization that each dev will have to build into their pipeline. I know we haven't even started implementing anything like this.

I'm glad it gives some MS fans hope that they might get some more power out of their box. But again, it's nothing the PS4 wouldn't be able to do.
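For anyone wondering what the "coding technique" side of this looks like, here is a minimal sketch in Python. It is a toy model of the general idea only, not the Direct3D or OpenGL API: the class name `TiledTexture`, the 128-texel tile size, and the least-recently-used eviction are all assumptions made for illustration. The point is that a huge texture is split into tiles and only the tiles the camera actually needs are kept in memory.

```python
import math

TILE = 128  # tile edge in texels (assumed value, for illustration only)


class TiledTexture:
    """Toy model of a partially resident texture: a large virtual texture
    of which only a limited number of tiles are held in memory at once."""

    def __init__(self, width, height, max_resident_tiles):
        self.tiles_x = math.ceil(width / TILE)
        self.tiles_y = math.ceil(height / TILE)
        self.max_resident = max_resident_tiles
        self.resident = {}  # (tile_x, tile_y) -> frame in which it was last used

    def tiles_for_region(self, x0, y0, x1, y1):
        """Tiles overlapped by the visible texel rectangle [x0, x1) x [y0, y1)."""
        tx0, ty0 = x0 // TILE, y0 // TILE
        tx1, ty1 = (x1 - 1) // TILE, (y1 - 1) // TILE
        return [(tx, ty)
                for ty in range(ty0, ty1 + 1)
                for tx in range(tx0, tx1 + 1)]

    def request(self, visible, frame):
        """Mark visible tiles as used this frame, then evict the least
        recently used tiles until the pool fits. Returns newly loaded tiles."""
        loaded = []
        for tile in visible:
            if tile not in self.resident:
                loaded.append(tile)  # a real engine would stream this tile in
            self.resident[tile] = frame
        while len(self.resident) > self.max_resident:
            oldest = min(self.resident, key=self.resident.get)
            del self.resident[oldest]
        return loaded


# Hypothetical usage: a 16384 x 16384 texture with room for only 8 tiles.
tex = TiledTexture(16384, 16384, max_resident_tiles=8)
visible = tex.tiles_for_region(0, 0, 256, 256)
print("total tiles:", tex.tiles_x * tex.tiles_y)
print("tiles loaded for this view:", len(tex.request(visible, frame=0)))
```

Hardware support mostly changes who does the bookkeeping (the GPU can map and unmap tiles itself), which is why the same idea can also be done in software on any platform, at some cost.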

It's available to both. I don't understand how MS would be able to even prevent a coding technique. It's nothing inherent to the chipsets utilized in either box. On top of that, it's customization that each dev will have to build into their pipeline. I know we haven't even started implementing anything like this.

I'm glad it gives some MS fans hope that they might get some more power out of their box. But again, it's nothing the PS4 wouldn't be able to do.

Shensolidus

ok snake avatar dude

It's available to both. I don't understand how MS would be able to even prevent a coding technique. It's nothing inherent to the chipsets utilized in either box. On top of that, it's customization that each dev will have to build into their pipeline. I know we haven't even started implementing anything like this.

I'm glad it gives some MS fans hope that they might get some more power out of their box. But again, it's nothing the PS4 wouldn't be able to do.

Shensolidus

In fact, I remember something similar on the PS2.

[QUOTE="Shensolidus"]

It's available to both. I don't understand how MS would be able to even prevent a coding technique. It's nothing inherent to the chipsets utilized in either box. On top of that, it's customization that each dev will have to build into their pipeline. I know we haven't even started implementing anything like this.

I'm glad it gives some MS fans hope that they might get some more power out of their box. But again, it's nothing the PS4 wouldn't be able to do.

XBOunity

ok snake avatar dude

That makes me 'teh biased'? Cause I like a character whose games I loved growing up? Whose latest game also opened the MS E3 press conference? Get over yourself.

[QUOTE="XBOunity"][QUOTE="Shensolidus"]

It's available to both. I don't understand how MS would be able to even prevent a coding technique. It's nothing inherent to the chipsets utilized in either box. On top of that, it's customization that each dev will have to build into their pipeline. I know we haven't even started implementing anything like this.

I'm glad it gives some MS fans hope that they might get some more power out of their box. But again, it's nothing the PS4 wouldn't be able to do.

Shensolidus

ok snake avatar dude

That makes me 'teh biased'? Cause I like a character whose games I loved growing up? Whose latest game also opened the MS E3 press conference? Get over yourself.

I'm just joking. You've been pretty fair since I've been talking to you, but you do side with the PS4.

That makes me 'teh biased'? Cause I like a character whose games I loved growing up? Whose latest game also opened the MS E3 press conference? Get over yourself.

[QUOTE="Shensolidus"][QUOTE="XBOunity"]

ok snake avatar dude

XBOunity

I'm just joking. You've been pretty fair since I've been talking to you, but you do side with the PS4.

LOL I understand that. It's hard to say that I'm not, but I just know the two boxes intimately and I prefer one over the other. The thing is I'm just enjoying the PS4 more right now. That isn't to say Sony won't **** up, I know they probably will and soon. But right now, they're doing some amazing stuff and I can get behind that.

[QUOTE="XBOunity"]

[QUOTE="Shensolidus"] That makes me 'teh biased'? Cause I like a character whose games I loved growing up? Whose latest game also opened the MS E3 press conference? Get over yourself.

Shensolidus

I'm just joking. You've been pretty fair since I've been talking to you, but you do side with the PS4.

LOL I understand that. It's hard to say that I'm not, but I just know the two boxes intimately and I prefer one over the other. The thing is I'm just enjoying the PS4 more right now. That isn't to say Sony won't **** up, I know they probably will and soon. But right now, they're doing some amazing stuff and I can get behind that.

I'm getting a PS4, it's just not my primary console. I don't know if Sony is gonna f*ck up; Cerny is a good dude. I think both the Xbone and PS4 will be successes.

LOL I understand that. It's hard to say that I'm not, but I just know the two boxes intimately and I prefer one over the other. The thing is I'm just enjoying the PS4 more right now. That isn't to say Sony won't **** up, I know they probably will and soon. But right now, they're doing some amazing stuff and I can get behind that.

[QUOTE="Shensolidus"]

[QUOTE="XBOunity"]

I'm just joking. You've been pretty fair since I've been talking to you, but you do side with the PS4.

XBOunity

I'm getting a PS4, it's just not my primary console. I don't know if Sony is gonna f*ck up; Cerny is a good dude. I think both the Xbone and PS4 will be successes.

Yeah, I'd like to get an Xbone, but I don't like the idea of having an always-on camera in my living room. The moment they get rid of the camera being plugged in requirement, I'll snatch one up.

[QUOTE="XBOunity"][QUOTE="Shensolidus"] LOL I understand that. It's hard to say that I'm not, but I just know the two boxes intimately and I prefer one over the other. The thing is I'm just enjoying the PS4 more right now. That isn't to say Sony won't **** up, I know they probably will and soon. But right now, they're doing some amazing stuff and I can get behind that.

Shensolidus

I'm getting a PS4, it's just not my primary console. I don't know if Sony is gonna f*ck up; Cerny is a good dude. I think both the Xbone and PS4 will be successes.

Yeah, I'd like to get an Xbone, but I don't like the idea of having an always-on camera in my living room. The moment they get rid of the camera being plugged in requirement, I'll snatch one up.

I wouldn't get the Xbox One simply because of how Microsoft has been doing things. More importantly, a CEO left the company early on. I figure if a salesman doesn't have any faith in his product, then it will not sell. So what would you think if the CEO, the big guy, didn't have faith in his own console? Just a thought. But there are other reasons why I wouldn't get it.

I'm personally done with consoles and am moving to the portable 3DS XL. It may not be the best, but it's a gaming console that's simpler. :)
