Nothing new (June 2014), but it's pretty easy to follow and understand.

Xbox One vs PlayStation 4: Excellent video explaining the CPU/GPU (APU) differences

This topic is locked from further discussion.

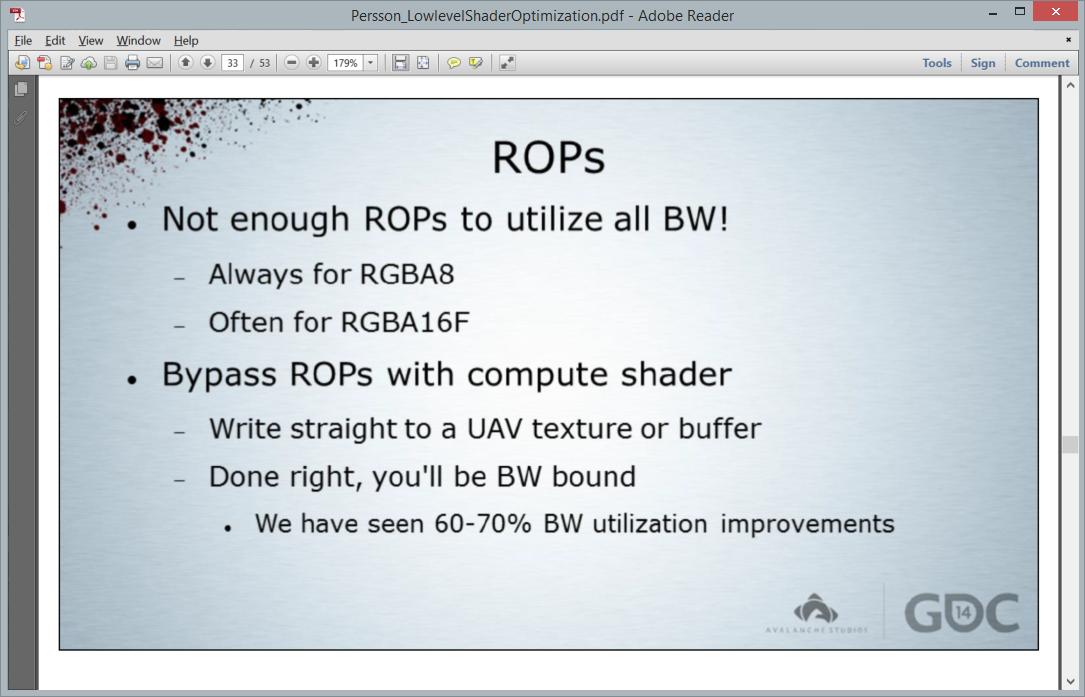

That guy's explanation of the importance of ROPs was pretty misleading, and the video is more than a year old.

"The Xbox One has 16 ROPs while the PlayStation 4 has 32. While this may look like it means 2x the performance, it's not quite that simple. ROPs stands for Render Output Pipelines. At the end of the GPU's calculation process, the ROPs draw out the final image. The trick, however, is that the ROPs are only as useful as the GPU is powerful, so you could have 2 billion ROPs (for example), but if your GPU is not powerful enough to utilize them, it means nothing. While the PlayStation 4's GPU probably needs a little more than 16 ROPs, it is not nearly powerful enough to utilize all 32. Just to demonstrate what I mean, AMD's new R9 280X graphics card has 4.1 TFLOPS of computing power, more than twice that of the PlayStation 4, and it gets by fine with just 32 ROPs. The Xbox One, on the other hand, has a slightly less powerful GPU, and so it probably has just the right amount of ROPs. The reason Sony could not just put in the exact number of ROPs they needed (like 20 or 18) is that ROPs are only made in chunks of 8, 16, 32, 48, and 64, so they figured it would be better to have too many ROPs with the 32 configuration than to bottleneck their bigger GPU with just 16 ROPs."

This is just gonna turn into a tormentos Vs. 04dcarraher plus everyone else debate. Thanks.

lol

@nyadc: That is all true, PS4 GPU is not twice as powerful as Xbox One GPU, so even though it has twice as many ROPs, it's likely that this setup is underutilized. However having more ROPs does give PS4 at least some significant advantage in the number of pixels per clock cycle the GPU can deliver. AKA pixel fill rate.

Peak pixel fillrate on PS4 is 25,600 million (25.6B) pixels/sec (32 ROPs * 800 MHz GPU clock)

On Xbox One it's 13,648 million (13.6B) pixels/sec (16 ROPs * 853 MHz GPU clock)
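To make the arithmetic explicit, here is a minimal Python sketch of the two peak figures above. It assumes the simplest possible model (one pixel written per ROP per clock), and the function name is only for illustration:

```python
def peak_pixel_fillrate_mpix(rops: int, gpu_clock_mhz: int) -> int:
    """Peak fill rate in millions of pixels per second.

    Assumes each ROP writes exactly one pixel per clock cycle.
    """
    return rops * gpu_clock_mhz


ps4_fillrate = peak_pixel_fillrate_mpix(rops=32, gpu_clock_mhz=800)
xbo_fillrate = peak_pixel_fillrate_mpix(rops=16, gpu_clock_mhz=853)
fillrate_ratio = ps4_fillrate / xbo_fillrate

print(f"PS4: {ps4_fillrate:,} Mpixels/s")
print(f"XBO: {xbo_fillrate:,} Mpixels/s")
print(f"PS4 / XBO: {fillrate_ratio:.2f}x")
```

Because the Xbox One GPU is clocked a bit higher, the peak ratio comes out slightly below 2x even though the ROP count is exactly doubled.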

While the PS4 GPU likely cannot take full advantage of the extra ROPs and pixel fillrate, I'm sure it can take advantage of some of the extra pixels per clock it delivers over and above what the Xbox One GPU outputs.

This has to be *part* of the reason why PS4 games can more easily reach native 1080p at similar levels of graphic detail and framerates compared to the Xbox One versions of the same game. ROPs & fillrate aren't the only reason, but they most likely contribute to that picture, no pun intended.

With all that said, I hope that the next generation Xbox and PS5 both have GPUs that are nearly equally as capable in power & performance, to handle 4K Ultra HD games when they arrive, probably by 2019.

1. (4:21) PC has Windows 10's DirectX 12 for console-like APIs.

2. (4:21) Claims of consoles matching high-end graphics cards are flawed.

3. (5:38) Slightly flawed comparison between 12 CUs and 18 CUs, since the clock speeds are different, i.e. XBO is at 71 percent of PS4, or PS4 is 1.4X over XBO.

Intel Haswell's AVX2 256-bit SIMD extensions have GPGPU-style gather and FMA3 instructions, hence rivaling AMD GCN/NVIDIA Maxwell's features in this area.

4. (6:48) Flawed "50% advantage" assertion, since the clock speeds are different. On TMUs (texture mapping units), write operations are bound by memory bandwidth.

5. (7:43) PS4's 32 ROPs advantage was mitigated by Avalanche Studios' workaround of repurposing the 48 TMUs for ROP operations. The recent Mad Max game was developed by Avalanche Studios.

MSAA is not suitable for most deferred rendering 3D engines.

PS4's 32 ROPs with 4X MSAA are memory bandwidth bound, e.g. The Order's 1920x800 limit. To make full use of 32 ROPs, you need a higher-grade AMD GCN GPU such as the Radeon HD 7950 or above.

6. (9:35)

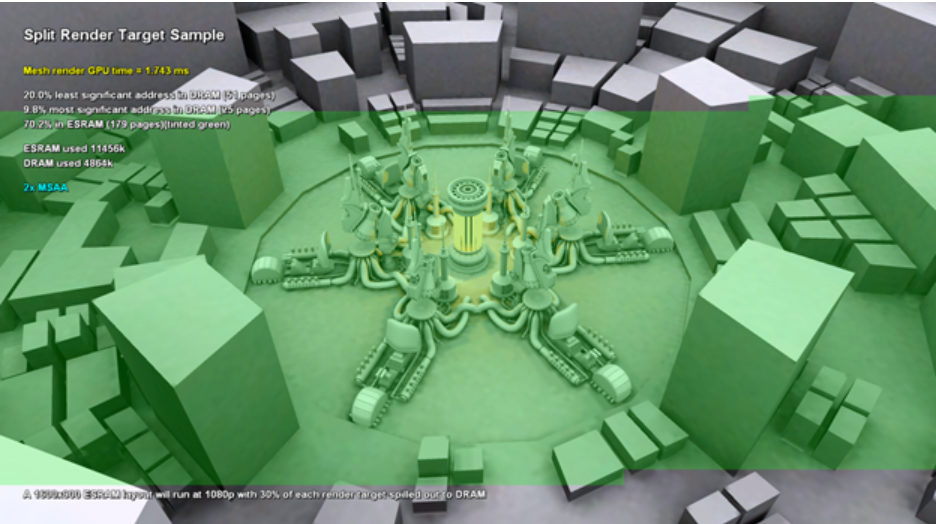

A split render target combines the 32 MB ESRAM and DDR3 memory pools into a single virtual frame buffer surface, which yields memory write bandwidth similar to PS4's.

For 1920x1080p, 32MB ESRAM contains 70 percent of the workload while DDR3 contains 30 percent of the workload.

Theoretical peak for frame buffer write operations

XBO, 108 + 68 = 176 GB/s

PS4, 176 GB/s
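The numbers in points 3 and 6 can be checked with a short Python sketch. It only uses figures quoted in this thread (CU counts, the 853 MHz and 800 MHz clocks, and the 108/68/176 GB/s peaks); the helper name is made up for illustration, and scaling by CU count times clock is a simplification that ignores everything else in the pipeline:

```python
def relative_throughput(cus_a: int, mhz_a: int, cus_b: int, mhz_b: int) -> float:
    """Ratio of (CU count x clock) between two GPUs of the same architecture."""
    return (cus_a * mhz_a) / (cus_b * mhz_b)


# Point 3: 12 CUs at 853 MHz (XBO) vs 18 CUs at 800 MHz (PS4).
xbo_vs_ps4 = relative_throughput(12, 853, 18, 800)
ps4_vs_xbo = relative_throughput(18, 800, 12, 853)

# Point 6: theoretical peak frame buffer write bandwidth in GB/s.
ESRAM_WRITE_GBPS = 108
DDR3_GBPS = 68
PS4_GDDR5_GBPS = 176
xbo_split_target_gbps = ESRAM_WRITE_GBPS + DDR3_GBPS

print(f"XBO is at {xbo_vs_ps4:.0%} of PS4; PS4 is {ps4_vs_xbo:.1f}x XBO")
print(f"XBO split render target peak: {xbo_split_target_gbps} GB/s")
print(f"PS4 peak: {PS4_GDDR5_GBPS} GB/s")
```

The sum only holds as a peak when both memory pools are being written at full rate at the same time, which is what the split render target is meant to arrange.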

Xbox has a faster CPU and DX12; it's more powerful. GDDR5 is a gimmick, folks, don't fall for Sony's lies.

And yet Xbone can hardly output 1080p

In the end PS4 has the best multiplats.

/thread

http://www.eurogamer.net/articles/digitalfoundry-2015-mad-max-performance-analysis

Even though the XBO reaches 176 GB/s, don't you think it's a pain to have information in two places rather than one?

I know the ESRAM has its advantages: it consumes less energy, is faster, and can store info for longer. But from a coding perspective, wouldn't it be easier with just one large pool like the PS4's 8GB of GDDR5 RAM?

Although devs should be used to it since the 360 also had something similar.

It's an old video, but it does capture the differences between the two. Some of it is slightly exaggerated or theoretical, but the essence is there. Obviously this type of thread is going to be covered extensively, with banners all over the place. If you go by multiplatform performance, whether you like the games or not, PS4 does tend to edge out Xbox One. It runs games at a higher resolution, sometimes with fps dips compared to Xbox One, and sometimes, ridiculously, runs games not only at a higher resolution but at a higher fps at the same time as well. It also seems like the trend still continues; mind you, for every Mad Max you're still going to get the Batman types. The divide is still there when you look at some demos and betas of the upcoming games.

In terms of really bringing the theories to life, first party will be where to go. The video made me think of Sucker Punch and their proclamation that "Asynchronous Processing is the future of ps4". I'm really interested to see what they do next, seeing how nobody else has time for that shit. Preferably they move away from Infamous after making a launch-type game out of it.

System Wars is over this topic in general, actually, unless MS says something about a "performance boost" or something, but then it dies down again.

Everyone knows the PS4 has better hardware.... but I still think people make the gap bigger than it is in SW.

WTF? Hahahahaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa

So do I declare you a full lemming now, or should I wait until you have the guts to admit that you are a lemming?

Just because the PS4 was held back on this game doesn't mean the PS4 can't run games better than the XBO. So when you are pushed you admit the PS4 will always be stronger, but then you go and quote a game where parity was the main focus as some kind of proof.

Fact is, he is right: the PS4 runs games better, but it is up to developers to make them run better.

Maybe you should look harder, since there are people who downplay the difference; some even claim parity will be achieved, which is downright a joke.

Also this is a bait thread.

Well I want it to stop honestly.... but I know that there will always be some who keep on rebelling against the truth.

I wouldn't take this as a bait thread since the account seems pretty new... May 2015. Unless he already knows how to bait in forums.

@tormentos:

The results speak for themselves, you stupid cow.

Yeah, it shows an unimpressive game having parity. What does that prove?

Let me break it down for you.

NFS 1080p 30FPS on both.

UFC 900p 30FPS on both.

Destiny 1080p 30FPS on both.

FIFA 1080p 60-30FPS on both.

Diablo 1080p 60FPS on both.

So what do they prove, other than that parity was set from the outset, rather than the games being equal because the Xbox One can match the PS4?

You are a hypocrite: on one side you claim the PS4 is more powerful, and on the other you try to give the impression that both consoles can be equal, when in reality the only way that will happen is if the PS4 is held back or screwed up.

Again, PS4 doesn't have enough power for 1920x1080p at 60 fps when XBO runs 1920x1080p at 30 fps.
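A rough Python sketch of the reasoning behind that claim. It assumes rendering cost scales linearly with pixels drawn per second and that the 1.4X figure quoted earlier in the thread stands in for the whole performance gap; both are simplifications, and the names are only for illustration:

```python
def pixels_per_second(width: int, height: int, fps: int) -> int:
    """Pixels that must be produced each second at a given resolution and frame rate."""
    return width * height * fps


xbo_load = pixels_per_second(1920, 1080, 30)
ps4_target_load = pixels_per_second(1920, 1080, 60)

# How much more work 1080p60 is than 1080p30 under the linear assumption.
required_ratio = ps4_target_load / xbo_load

# PS4's advantage over XBO as quoted earlier in the thread (18 CUs vs 12 CUs).
PS4_OVER_XBO = 1.4
fraction_of_target_covered = PS4_OVER_XBO / required_ratio

print(f"1080p30: {xbo_load:,} pixels/s")
print(f"1080p60: {ps4_target_load:,} pixels/s")
print(f"Needed: {required_ratio:.1f}x, available: {PS4_OVER_XBO:.1f}x")
print(f"Covers {fraction_of_target_covered:.0%} of a locked 60 fps target")
```

Under these assumptions a 1.4X advantage is not enough for a locked 60 fps at the same resolution and settings, which is consistent with games that only reach 60 fps in lighter scenes.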

Still with this crap? PS4 is stronger... the end. Lock due to the 500 post rule right?

Nah, that rule was dropped recently.

Oh, thanks man, I was unaware.

Can't say I entirely agree, but fair enough. Maybe 500 was a bit harsh, but maybe 100? I don't know. Not my decision I suppose. I thought the rule was to keep dupe accounts from spamming.

Then again, this makes things a whole heck of a lot more fun... lol

Even though the XBO reaches 176 GB/s, don't you think it's a pain to have information in two places rather than one? (1)

I know the ESRAM has its advantages: it consumes less energy, is faster, and can store info for longer. But from a coding perspective, wouldn't it be easier with just one large pool like the PS4's 8GB of GDDR5 RAM? (1)

Although devs should be used to it since the 360 also had something similar. (2)

1. It depends on the abstraction layer, e.g. with a PC's add-on cards, memory pools located on the add-on cards get mapped to the PCI address range.

Contrary to tormentos's claim that the CPU can't see the ESRAM memory pool, being non-cache-coherent from the CPU's POV doesn't mean that ESRAM can't be accessed by the programmer. Being non-cache-coherent means that the CPU doesn't need to worry about updating the data stored in its cache for every write update on ESRAM, hence reducing I/O traffic noise. For some workloads, the CPU doesn't need to know about multiple write updates until the desired result is completed.

2. X360 can't even do XBO's split render target method, since X360's ROPs are located on the EDRAM chip.

So basically it depends on how you code a game.... Allocation etc..

Again, PS4 doesn't have enough power for 1920x1080p at 60 fps when XBO runs 1920x1080p at 30 fps.

On the flipside, the game manages to hit 60fps pretty solidly in locations that have fewer effects at work, and in the more scripted action sequences where the rendering load is more predictable.

Yeah, tell that to DF. Tomb Raider had moments where the frame rates were 30 vs 60, or a consistent 60 FPS vs 30; not only does the PS4 version drop, the Xbox One version also drops from 30.

But it has the power to run a 20FPS gap quite steadily at the same resolution, or even more, since in both Tomb Raider and Sniper Elite the PS4 hits 60 while the Xbox One runs at 30 with v-sync.

Yeah, I didn't like this guy that much. I stopped pretty early on, when he mentioned developers would be more comfortable on x86 vs PowerPC. From a developer standpoint, unless you're writing a compiler or have a very strange situation where you're writing in assembly, there's literally no difference. Maybe messing with some compiler options early on... What matters is the APIs. And the 360, One, and PS4 APIs, I hear, are much more like PC (DirectX/OpenGL), which is what makes developing for those systems easier.

And further down from you (up from me) Rov is tearing it apart.

@tormentos:

Notice "gets mapped to PCI address range."

PC CPUs don't have direct access to memory pools on add-on PCI cards, i.e. the Northbridge's IO-MMU bridges between the two sides. If you have noticed in XBO's block diagram, the GPU has its own MMU block. Memory access for add-on PCI cards gets offloaded to the IO-MMU.

PCI address ranges are logically mapped into the CPU's address range. The same concept exists for XBO's ESRAM, which is why a CPU-generated command list can still perform memory writes to ESRAM.

If XBO's 32MB ESRAM were swapped for 4GB of HBM as in the Fury X, the basic concept would remain the same.

That black line was a lie. It was put there when the whole "not true hUMA" crap started to appear around the Hot Chips presentation. There is no connection between ESRAM and CPU.

ESRAM is dedicated RAM, it’s 32 megabytes, it sits right next to the GPU, in fact it’s on the other side of the GPU from the buses that talk to the rest of the system, so the GPU is the only thing that can see this memory.

There’s no CPU access here, because the CPU can’t see it, and it’s gotta get through the GPU to get to it, and we didn’t enable that

http://wccftech.com/microsoft-xbox-esram-huge-win-explains-reaching-1080p60-fps/#ixzz3lOCWw4V3

Who cares about the black line? The fact is, a CPU thread containing a generated command list drives frame buffer write operations on ESRAM. For frame buffer write operations, the CPU has to talk to the Host Guest GPU MMU.

True HSA requires the data to be seen by both CPU and GPU at the same time, all the time. When data is in ESRAM the CPU can't see it, therefore no true HSA.

Like I already told you, there are 3 gaps here: one is hardware related (the GPU), another is the memory structure, and the last one is API related.

That 29% difference has produced a 100% gap in pixels and frames.

Some workloads are not suitable for constant cache coherency, e.g. if a frame is being layered by multiple shader effects, the CPU doesn't need to know about the GPU's memory writes until the task is completed. The original goal of offloading is to "outsource" the job: give me back the finished results, and I do not need to know what you did to get them.

You already know the disadvantage of the pure shared memory architecture.

PC's R7-265 will keep its video memory bandwidth intact.

For XBO, ESRAM is a temporary memory space for the GPU, i.e. think of a CU's local data store (LDS). The problem with CELL's SPE local storage is its extremely one-sided feature set. The goal is the Goldilocks zone, i.e. a balanced design that is optimized for different workloads.

PS4's APU is not the ultimate APU design. That would be a design that contains aspects of both XBO and PS4, e.g. next year's 14 nm super APU that includes both HBM and shared DDR3/DDR4 memory pools, i.e. a gaming PC architecture without the PCI-E 16X Ver 3.0 bottleneck (32 GB/s) and which avoids PS4's mistakes.

That 29% difference has produced a 100% gap in pixels and frames.

XBO has additional problems beyond the 29 percent shader power gap, e.g. hard-to-use APIs for ESRAM.
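Part of why "29%" and "100%" look so far apart is that they are measured from opposite ends. A small Python sketch of the conversion, using only the 29 percent figure and the 30 vs 60 fps cases from this thread (the function names are made up for illustration):

```python
def higher_from_lower(fraction_lower: float) -> float:
    """If A is this fraction lower than B, return how much higher B is than A."""
    return 1.0 / (1.0 - fraction_lower) - 1.0


def lower_from_higher(fraction_higher: float) -> float:
    """If B is this fraction higher than A, return how much lower A is than B."""
    return 1.0 - 1.0 / (1.0 + fraction_higher)


# XBO has about 29% less shader power than PS4 ...
shader_gap_higher = higher_from_lower(0.29)

# ... while 60 fps vs 30 fps is a 100% gap measured from the XBO side.
frame_gap_higher = (60 - 30) / 30
frame_gap_lower = lower_from_higher(frame_gap_higher)

print(f"PS4 shader power is {shader_gap_higher:.0%} higher than XBO's")
print(f"30 fps is {frame_gap_lower:.0%} lower than 60 fps")
```

Put on the same base, the comparison is roughly 41% more shader power against 100% more frames, so the shader gap alone still does not account for the whole difference.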

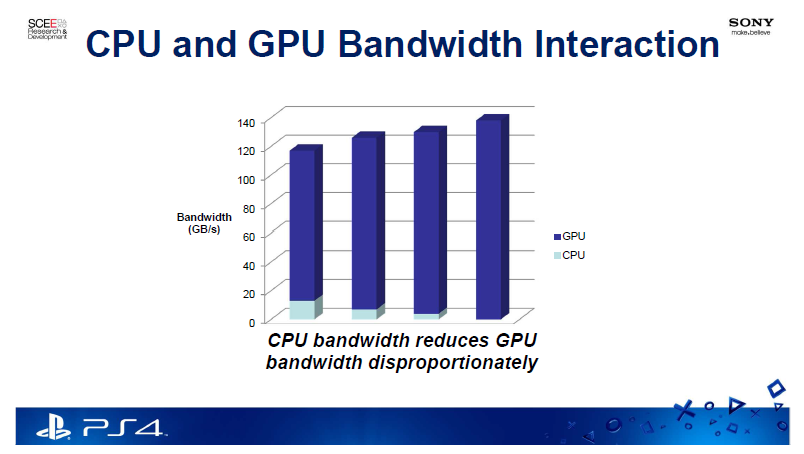

lol, so you keep using a Sony chart as some kind of proof. In no place does it say the maximum bandwidth is 140GB/s; that is what you assume.

Gilray stated that, “It means we don’t have to worry so much about stuff, the fact that the memory operates at around 172GB/s is amazing, so we can swap stuff in and out as fast as we can without it really causing us much grief.”

http://gamingbolt.com/oddworld-inhabitants-dev-on-ps4s-8gb-gddr5-ram-fact-that-memory-operates-at-172gbs-is-amazing#RfwwWQPOl9eihUoW.99

A developer already shot down your assumption long ago, as they were operating the PS4 at 172GB/s.

PS4's memory operates at 176 GB/s

Sony's 140 GB/s is in line with the efficiency of any AMD DDR3/GDDR5 memory controller. AMD's R9 Fury throws GDDR5 and its memory controllers in the bin.

You can restore some of the lost memory bandwidth with older compression/decompression data formats, but they are not as good as Tonga's delta compression/decompression improvements.
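For reference, the figures being argued over can be put side by side in a few lines of Python. The 256-bit bus and 5.5 Gbps per pin are the commonly cited PS4 GDDR5 specs, not numbers from this thread, so treat them as an assumption; the 140 and 172 GB/s values are the two claims quoted above:

```python
def peak_bandwidth_gbps(bus_width_bits: int, data_rate_gbps_per_pin: float) -> float:
    """Theoretical peak memory bandwidth in GB/s (bits per transfer / 8 bits per byte)."""
    return bus_width_bits * data_rate_gbps_per_pin / 8


# Assumed PS4 memory configuration: 256-bit GDDR5 at 5.5 Gbps per pin.
ps4_peak = peak_bandwidth_gbps(256, 5.5)

# Fraction of the theoretical peak implied by each claim in the thread.
chart_efficiency = 140 / ps4_peak
developer_efficiency = 172 / ps4_peak

print(f"Theoretical peak: {ps4_peak:.0f} GB/s")
print(f"140 GB/s is {chart_efficiency:.1%} of peak")
print(f"172 GB/s is {developer_efficiency:.1%} of peak")
```

So the disagreement is between roughly 80 percent and roughly 98 percent of the theoretical peak being reachable in practice.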

Nothing new indeed. PS4 remains the world's most powerful video game console in the history of video games.

And Forza 6 is still coming out on Sept 15th and I'm getting it. lol!! Also getting the Nathan Drake Collection in October. Great time to be a true gamer.

Yeah, I remember reading in a Eurogamer article a while back that 32 ROPs in the PS4 is total overkill.

I quoted a developer, period. In no place does that chart say maximum 140GB/s; that is what you assume. That chart was an example of how the CPU could eat bandwidth disproportionately, not a chart confirming the PS4 can only use 140GB/s, so yeah, you ASSUMED that, which means nothing.

I just quoted a developer who was operating his game at around 172GB/s, which is lower than the 176GB/s the unit has.

Not to mention the PS4 is a true HSA design, which means less copying back and forth between CPU and GPU, which usually happens with separate memory.

No, it's not. That was DF in one of the many damage control articles they ran for MS back in the day. Fact is, the 7850 and 7870 both come with 32 ROPs, and in certain scenarios they can be saturated.
