[QUOTE="Jag85"]

[QUOTE="nameless12345"]

Carmack said that the GC was actually less powerful than the PS2 (lower peak poly-counts).

But he also said that the PS2 had a backwards GPU in comparison to the GC and Xbox (far fewer features).

The only console in that generation that was powerful enough to run Doom 3 was the Xbox.

Also, the Xbox's Pentium 3-based CPU had its strengths over the PowerPC-based GC CPU.

For example, it was better at complex physics calculations (Half-Life 2) and complex AI calculations (Operation Flashpoint: Elite).

It was also a PC-like x86 architecture, so ports from the PC were easy on the Xbox.

Although there weren't exactly many physics- and AI-intensive games on the GC, so it's hard to say how it would handle them.

But the Xbox also had more RAM in its favour.

nameless12345

Are you sure Carmack said that? I just Googled it and haven't found him saying anything like that. As for peak polygon counts, benchmark tests showed the GameCube having the highest of that generation, especially with the Rogue Squadron games, which had polygon counts well beyond any Xbox game.

As for Doom 3, the reason why it wasn't brought over to the GameCube was because, like I said above, it lacked the Xbox's more advanced shading capabilities that the game heavily relied on. It was technically possible to recreate those shaders on the GameCube, but it would have been too difficult.

As for the Pentium III architecture, I'm not sure whether it really was better at physics & AI calculations, but the fact that Microsoft abandoned it in favour of the PowerPC architecture for the Xbox 360 suggests that, overall, the GameCube's PowerPC architecture had the edge over the Xbox's Pentium III architecture.

And finally, while the Xbox did have more overall RAM, the GameCube had faster 1T-SRAM.

Overall, the Xbox and GameCube were closely matched, with the GameCube offering more raw power while the Xbox offered a more advanced feature set... almost like the Mega Drive vs SNES, with the Mega Drive offering more raw power and the SNES offering a more advanced feature set.

Well, that's what I heard from some "sources" (that he said the PS2 had a better poly-count than the GC did).

MS picked the Power architecture because it was cheaper, not because it would be better than the x86 architecture in all aspects.

The PS4 and X1 are both using x86 now.

IBM G3 vs Intel P3 was an interesting match, but we haven't really seen many CPU-oriented games on the GC.

The P3 in Xbox was clocked higher, if anything.

I don't quite agree that the GC had more power than the Xbox did.

The GC's video chip was about on par with an AMD/ATi Radeon 7200, while the Xbox's was a GeForce 3-based chip with an improved feature set.

So basically their video chips were almost a gen apart.

The GC was just a very efficient design, I'll give it that.

I'd say "MD vs SNES" is more comparable to "PS3 vs 360".

The PS3 had the CPU edge (like the MD), but the 360 had the better GPU (like the SNES).

I looked online and didn't find Carmack saying anything of the sort. And if he did, then it must have been from before any benchmark tests were done, since Sony (as usual) initially claimed the PS2 had a theoretical peak polygon count of 60 million (in reality, the PS2's practical polygon count wasn't much higher than the Dreamcast's).

The performance (in terms of MIPS and FLOPS) of a PowerPC G3 clocked at 485 MHz is higher than that of a Pentium III clocked at 733 MHz. The PowerPC series was always known for providing higher performance at a lower clock rate than the Pentium series.

I'd say it wasn't until the Intel Core processors came along that the x86 architecture overtook the PowerPC architecture in terms of raw performance per clock, so it shouldn't be too surprising that the PS4 and X1 are going x86 now.

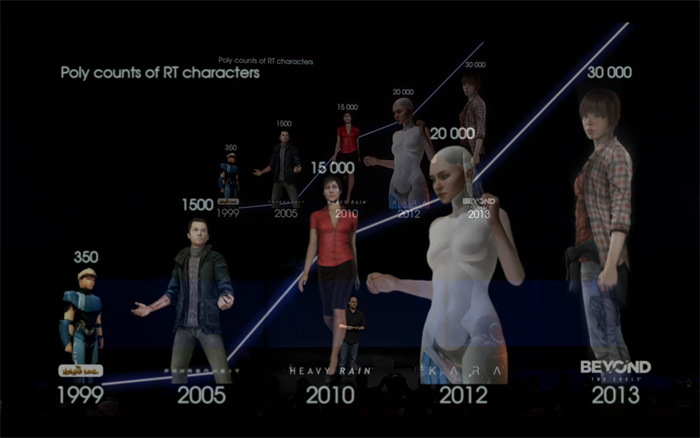

The GameCube did not use a Radeon-like GPU; its GPU was a custom chip designed by ArtX (later bought by ATI), so you cannot compare it directly to any Radeon GPU. Like I already said, the Xbox did have a more advanced feature set, but benchmark tests have shown that the GameCube was capable of performing at higher polygon counts than the Xbox:

The Old Xbox vs GameCube Graphics War

Also, it was actually possible to re-create the Xbox's more advanced feature set on the GameCube, but it was just very difficult to do. Only a handful of GameCube games were programmed well enough to be able to pull off those more advanced graphical features.

As with the MD vs SNES battle, what makes the GC vs Xbox comparison similar is that one had more raw performance while the other had more advanced graphical features. What also makes it similar is how often gamers simply assume, by default, that the SNES/Xbox was technically superior, overlooking how the MD/GC offered more raw performance than its rival.
