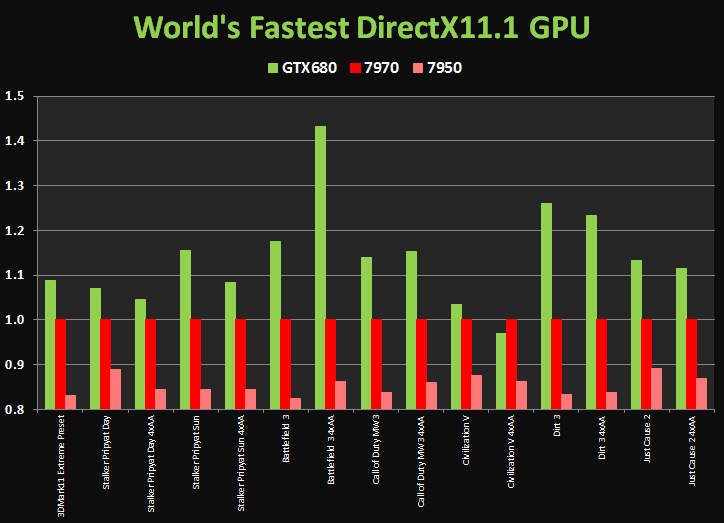

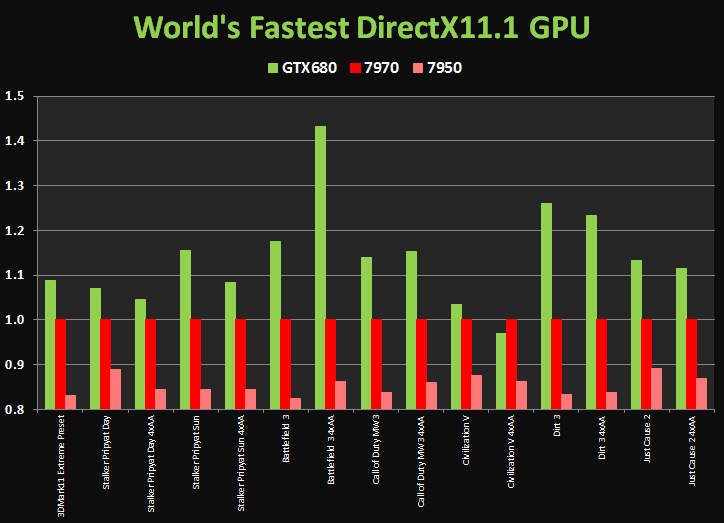

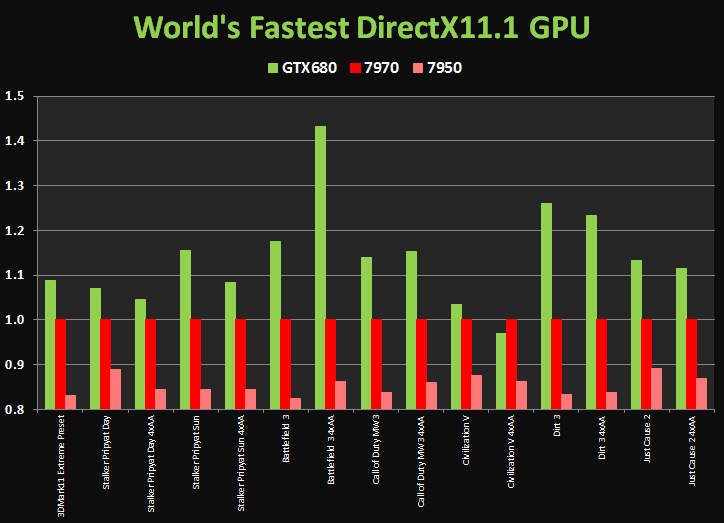

Some pretty hard-hitting numbers, especially against the newly released AMD 7970.


This topic is locked from further discussion.

I guess we are not going to get much of a price war... This is really disappointing. I'll wait for GK110 myself; maybe it will come by the end of the year.

I guess we are not going to get much of a price war... This is really disappointing. I'll wait for GK110 myself; maybe it will come by the end of the year.

GTSaiyanjin2

they're gonna have lower end models.

The gimped mobile version (which I want) will probably actually cost $999 >.>

All for $999.99 and a new house when it overheats and burns your place to the ground.

:P

Wasdie

[QUOTE="GTSaiyanjin2"]

I guess we are not going to get much of a price war... This is really disappointing. I'll wait for GK110 myself; maybe it will come by the end of the year.

wewantdoom4now

they're gonna have lower end models.

Yeah, but the way AMD and Nvidia are pricing their cards, I can't see them having anything of good value to offer over their existing lineup. The AMD 6850, 6870, and the Nvidia 560 Ti are still some of the best cards for the money right now.

If this ends up being true, that is absurd. What impresses me the most is the fact that it runs on a pair of 6-pin power connectors. 2 GB of VRAM is plenty, but the memory bandwidth would seem to be a little low for a flagship card at this point in the game, if prior reports are true. 192 GB/s of bandwidth is a LOT, but AMD has some cards on the market with more, if I'm not mistaken. If these specs are right, I'm very interested to see how it stacks up with so many cores and a relatively weak memory subsystem. VERY nice to see a TDP below 200 watts from a top-notch Nvidia GPU, if accurate.
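For reference, the 192 GB/s figure is consistent with simple bus arithmetic. This is only a rough sketch: the 256-bit bus width is mentioned elsewhere in this thread, but the 6.0 Gbps effective GDDR5 data rate is an assumption (it is not in the leak), the HD 7970 figures (384-bit, 5.5 Gbps) are assumed reference specs, and the function name is made up for illustration.

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s.

    bus_width_bits / 8 gives bytes moved per transfer across the whole bus;
    data_rate_gbps is the effective per-pin data rate in gigatransfers/s.
    """
    return bus_width_bits / 8 * data_rate_gbps


# Rumored GTX 680: 256-bit bus (from the thread), 6.0 Gbps (assumed).
rumored_680 = memory_bandwidth_gbs(256, 6.0)

# HD 7970: 384-bit bus at 5.5 Gbps (assumed reference specs).
hd_7970 = memory_bandwidth_gbs(384, 5.5)

print(f"Rumored GTX 680: {rumored_680:.0f} GB/s")
print(f"HD 7970:         {hd_7970:.0f} GB/s")
```

If those assumed data rates are right, the wider bus on the 7970 gives it roughly a third more raw bandwidth, which is the gap being described above.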

Rumor says it's the mid-range GPU made to look high-end with a massive price tag on it. All because AMD failed at making a competitive GPU. Won't buy it unless it's 50% faster than a GTX 580. Been waiting a longgg time for an upgrade.

Truth_Hurts_U

Huh, why do you think it might be only 50% faster? Look at the specs again: GTX 680 with 1536 CUDA cores vs the GTX 580's 512 CUDA cores, that's 3x the shader processing power alone, not counting the new architecture. Also note that there's no real difference between a 256-bit and a 384-bit memory interface at single-monitor resolutions. It's when you get into scalable multi-monitor resolutions that the 2+ GB, 384-bit bus helps.

Those statistics are so skewed looking lol. .2 difference is like 9999
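To put a number on how much a chart's axis can exaggerate a gap, here is a small sketch. The scores (100 and 120) and the axis starting point (80) are purely hypothetical and are not taken from the leaked slides; the function name is also just for illustration.

```python
def apparent_ratio(slower: float, faster: float, axis_start: float) -> float:
    """Ratio of drawn bar lengths when the axis begins at axis_start instead of 0."""
    return (faster - axis_start) / (slower - axis_start)


# Hypothetical benchmark scores, not real data.
slower, faster = 100, 120

true_ratio = apparent_ratio(slower, faster, axis_start=0)     # honest axis
skewed_ratio = apparent_ratio(slower, faster, axis_start=80)  # truncated axis

print(f"Actual difference:  {true_ratio:.2f}x")
print(f"Bars on the chart:  {skewed_ratio:.2f}x")
```

With those made-up numbers, a 20% lead is drawn as a bar twice as long, which is the kind of effect being joked about here.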

Huh, why do you think it might be only 50% faster? Look at the specs again: GTX 680 with 1536 CUDA cores vs the GTX 580's 512 CUDA cores, that's 3x the shader processing power alone, not counting the new architecture. Also note that there's no real difference between a 256-bit and a 384-bit memory interface at single-monitor resolutions. It's when you get into scalable multi-monitor resolutions that the 2+ GB, 384-bit bus helps.

04dcarraher

Why would you want a GPU that powerful if you weren't going to get into crazy resolutions...? (it's late here and I saw someone mention 1000 bucks)

Why would you want a GPU that powerful if you weren't going to get into crazy resolutions...? (it's late here and I saw someone mention 1000 bucks)

achilles614

We will have to wait and see if the 256-bit bus actually limits performance at multi-monitor resolutions like 5760 x 1080 or 7680 x 3200. A 384-bit bus ensures that there is more than enough headroom for three monitors, along with more than 2 GB of memory. If these specs are true about it having 2 GB, then most likely 5760 x 1080 will be an acceptable limit.
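As a rough way to see what a triple-monitor setup asks of the card, the sketch below compares pixel counts. The single 1920 x 1080 baseline and the 4 bytes per pixel are assumptions for illustration, and the helper names are made up; real VRAM use depends far more on textures, anti-aliasing, and render targets than on one color buffer.

```python
def pixel_count(width: int, height: int) -> int:
    """Number of pixels rendered per frame at a given resolution."""
    return width * height


def color_buffer_mib(width: int, height: int, bytes_per_pixel: int = 4) -> float:
    """Size of one color buffer in MiB (assumes 32-bit color, no anti-aliasing)."""
    return width * height * bytes_per_pixel / 2**20


single = pixel_count(1920, 1080)   # assumed single-monitor baseline
triple = pixel_count(5760, 1080)   # three 1080p monitors side by side
ratio = triple / single

print(f"Single monitor: {single:,} pixels")
print(f"Triple monitor: {triple:,} pixels ({ratio:.0f}x the work per frame)")
print(f"One color buffer at 5760 x 1080: {color_buffer_mib(5760, 1080):.1f} MiB")
```

The per-frame pixel load triples, so both shading work and memory traffic scale up with it, which is where bus width and memory size would be expected to matter most.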

i think i know what nvidias strategy is now against amd. basically make one of their cards specs really incredible, then make underground deals with devs to make games that are heavy on what nvidias card does and in this way make amd look bad. its pretty pathetic nvidia cant compete normally but use these underhanded tactics. the shader count on the 680 is ridiculous. nvidia did the same thing last gen by gimping tessellation in their favor for the 5xx series

i think i know what nvidias strategy is now against amd. basically make one of their cards specs really incredible, then make underground deals with devs to make games that are heavy on what nvidias card does and in this way make amd look bad. its pretty pathetic nvidia cant compete normally but use these underhanded tactics. the shader count on the 680 is ridiculous. nvidia did the same thing last gen by gimping tessellation in their favor for the 5xx series

blaznwiipspman1

wow :?: Like AMD does not do the same crap with AMD sponsored games? You need to let go of the fanboyism, because you're complaining about petty stuff and about a GPU that actually will outclass the 7970 while using nearly the same amount of power. And how did Nvidia gimp tessellation? another baseless claim.....

It's a win-win for everyone and the price war will start when these come out.

wow :?: Like AMD does not do the same crap with AMD sponsored games? You need to let go of the fanboyism, because you're complaining about petty stuff and about a GPU that actually will outclass the 7970 while using nearly the same amount of power. And how did Nvidia gimp tessellation? another baseless claim.....

It's a win-win for everyone and the price war will start when these come out.

04dcarraher

Don't worry, blazn likes to go around every Nvidia thread trying to trash them; he has been doing it for months.

All for $999.99 and a new house when it overheats and burns your place to the ground.

Wasdie

Only has 2 6-pin power connectors. Might have fairly low power consumption and therefore a somewhat low amount of heat generated. Fingers crossed on this, but it might be high.

Anyways, looks good.

wow :?: Like AMD does not do the same crap with AMD sponsored games? You need to let go of the fanboyism, because you're complaining about petty stuff and about a GPU that actually will outclass the 7970 while using nearly the same amount of power. And how did Nvidia gimp tessellation? another baseless claim.....

It's a win-win for everyone and the price war will start when these come out.

04dcarraher

AMD doesn't do that kind of stuff, they're in favour of open standards, and have been for a long time. Like I said, its an underhanded tactic, at least recognize it for what it is. Also, I didn't know the 680 was out already, where are you getting your insider information from? Nvidia gimped the tessellation in their favor, they slapped on so much of tessellation hardware to the 5xx series that devs even didn't know what to do with it. The games that did use tessellation to above normal levels were from devs that were in bed with nvidia. I'll call it now, the coming gen will see games slopped all over with an extra large portion of shaders.

I'm expecting it could cost that much money here (or even more). The 7970 costs almost that much atm.

All for $999.99 and a new house when it overheats and burns your place to the ground.

Wasdie

Ok, I wanna see the price on that thing. The HD 7970 is already U$1000 here. That thing will cost at least U$1200...

AMD doesn't do that kind of stuff, they're in favour of open standards, and have been for a long time. Like I said, its an underhanded tactic, at least recognize it for what it is. Also, I didn't know the 680 was out already, where are you getting your insider information from? Nvidia gimped the tessellation in their favor, they slapped on so much of tessellation hardware to the 5xx series that devs even didn't know what to do with it. The games that did use tessellation to above normal levels were from devs that were in bed with nvidia. I'll call it now, the coming gen will see games slopped all over with an extra large portion of shaders.

blaznwiipspman1

For someone who's really focused on tactics and their ethics, you should consider this: What AMD is doing can also be considered unethical, as they are making things open for others to work on them, and not their own driver team. Why else do you think everyone else is complaining about their drivers? Even when AMD claimed to be stepping up their efforts for their driver programming team, there were still a few caveats found when the 7970 was released (refer to Techpowerup's Crossfire test on BF3 at 2560x1600).

When a company makes something open-source, yes, it gives opportunities for others to tweak it and maximize its potential. However, it is obvious that is not the case, as developers are not embracing this opportunity, but rather leaving it aside in favour of Nvidia's sponsorships.

Push comes to shove, all these companies are in it for the money. Stop complaining when one company uses up more money to divert developers to favour their cards, and start looking at the possibility that maybe AMD is just plain stingy and lazy.

Around 10% faster than the 7970 in real-world gaming scenarios confirmed, after those Nvidia benchmarks showed a 40% difference. With aggressive pricing, it could be a good hit, but I was expecting something better. Hopefully the rumored 190 W TDP is true, and hopefully the true Kepler juggernaut, the GK110, comes soon as well, and is all we expected it to be.

Those specs are so high.... I mean, REALLY HIGH!!!!!

LIKE SUPER HIGH!!!!

The jump from the 580 to the 680 seems way too big to be true. That would leave ATI in the dark. I need to gather a few thousand $ for this monster!!!!

AMD doesn't do that kind of stuff, they're in favour of open standards, and have been for a long time. Like I said, its an underhanded tactic, at least recognize it for what it is. Also, I didn't know the 680 was out already, where are you getting your insider information from? Nvidia gimped the tessellation in their favor, they slapped on so much of tessellation hardware to the 5xx series that devs even didn't know what to do with it. The games that did use tessellation to above normal levels were from devs that were in bed with nvidia. I'll call it now, the coming gen will see games slopped all over with an extra large portion of shaders.

blaznwiipspman1

First you say that Nvidia "gimped" their hardware by making it better at tessellation than AMD bothered to, and then forget the fact that AMD are the ones who are heavy on cores and shaders, and have been for years now.

Saying that something was "gimped" indicates that it was somehow made weaker, not stronger.. at least anywhere I've ever heard it used. Don't whine because the GTX 400 and 500 series performed better in tessellation than their AMD counterparts.. especially the 5800's. That's the way the game works.

Secondly, even if Nvidia's new GTX 680 does have over 1500 Cuda cores, that's fewer than the number of stream processors that the AMD 5870 from 2 and a half years ago had. I'm not really sure where you're going with your arguments. They seem full of holes and obvious oversights.
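Since raw core counts keep coming up, here is a back-of-the-envelope sketch of theoretical peak shader throughput (cores x shader clock x 2 FLOPs per clock for a fused multiply-add). The 1536 and 512 core counts are from this thread; the clock speeds and the HD 5870's 1600 stream processors are assumed reference specs that nobody here has quoted, and the GTX 680 clock in particular is an assumption for an unreleased card. Peak FLOPS is not game performance, so treat this as a rough illustration only.

```python
def peak_gflops(cores: int, shader_clock_mhz: float, flops_per_clock: int = 2) -> float:
    """Theoretical single-precision peak in GFLOPS (one FMA = 2 FLOPs per core per clock)."""
    return cores * shader_clock_mhz * flops_per_clock / 1000


# Core counts for the two GeForce cards are from the thread; all clocks are assumptions.
# Fermi runs its shaders at twice the core clock, so the GTX 580's shader clock is used.
gtx_580 = peak_gflops(512, 1544)
gtx_680 = peak_gflops(1536, 1006)   # assumed: shaders run at the core clock
hd_5870 = peak_gflops(1600, 850)    # assumed: 1600 stream processors at 850 MHz

core_ratio = 1536 / 512
throughput_ratio = gtx_680 / gtx_580

print(f"GTX 580: {gtx_580:.0f} GFLOPS")
print(f"GTX 680: {gtx_680:.0f} GFLOPS")
print(f"HD 5870: {hd_5870:.0f} GFLOPS")
print(f"Core count ratio {core_ratio:.1f}x, peak throughput ratio {throughput_ratio:.2f}x")
```

Under those assumptions, three times the cores works out to roughly twice the peak throughput, because per-core clocks differ between the two architectures. That is why counting cores or stream processors across vendors or generations says little on its own.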

First you say that Nvidia "gimped" their hardware by making it better at tessellation than AMD bothered to, and then forget the fact that AMD are the ones who are heavy on cores and shaders, and have been for years now.

Saying that something was "gimped" indicates that it was somehow made weaker, not stronger.. at least anywhere I've ever heard it used. Don't whine because the GTX 400 and 500 series performed better in tessellation than their AMD counterparts.. especially the 5800's. That's the way the game works.

Secondly, even if Nvidia's new GTX 680 does have over 1500 Cuda cores, that's fewer than the number of stream processors that the AMD 5870 from 2 and a half years ago had. I'm not really sure where you're going with your arguments. They seem full of holes and obvious oversights.

hartsickdiscipl

AMD: More cores but each of them are slower. Nvidia: Less cores but each of them are faster.

Look at the scale of that graph. Unless it is priced the same, there is zero point.

AMD: More cores but each of them are slower. Nvidia: Less cores but each of them are faster.

ShadowDeathX

The Radeon HD 7870 follows the fewer-cores approach (i.e. 1280 SPs) with a faster reference clock speed (i.e. 1 GHz).

AMD could have designed their own GTX 680-type config from the HD 7870, i.e. 1.3 GHz core, 5 GHz (1.5 GHz ref) GDDR5 memory.

Look at the scale of that graph. Unless it is priced the same, there is zero point.

10% to 20% average. I see these numbers leveling out or even AMD passing Nvidia at very high resolutions.

AMD: More cores but each of them are slower. Nvidia: Less cores but each of them are faster.

ShadowDeathX

Yes, I know this. That doesn't change the fact that he was going off about shaders, and the fact is, AMD has a lot more by pure numbers.

For someone who's really focused on tactics and their ethics, you should consider this: What AMD is doing can also be considered unethical, as they are making things open for others to work on them, and not their own driver team. Why else do you think everyone else is complaining about their drivers? Even when AMD claimed to be stepping up their efforts for their driver programming team, there were still a few caveats found when the 7970 was released (refer to Techpowerup's Crossfire test on BF3 at 2560x1600).

When a company makes something open-source, yes, it gives opportunities for others to tweak it and maximize its potential. However, it is obvious that is not the case, as developers are not embracing this opportunity, but rather leaving it aside in favour of Nvidia's sponsorships.

Push comes to shove, all these companies are in it for the money. Stop complaining when one company uses up more money to divert developers to favour their cards, and start looking at the possibility that maybe AMD is just plain stingy and lazy.

ravenguard90

DO YOU UNDERSTAND WHAT ETHICS MEANS?

No, you do not.

Ethics is not the word you should use there. Policy is what you should have said, or ToS, as even open source things can come with a severe ToS. What you could also have said is dishonest or disreputable, because what they do is ethical.

I do hope AMD's drivers keep the lead they have. Except for the 12.2 fiasco with 2D games, so far they seem to be doing alright for most games, compared with the days of the ATI driver team and cards like the ATI HD 47xx/48xx or, worse, the ATI HD 58xx, which had so many issues according to a friend.

Although some of the ATI team are still there, I think they should invest twice the amount in updating drivers bi-weekly until their drivers are super stable. Meanwhile, they should keep upgrading their Crossfire application as needed to fix most of the issues; there are still a few to fix.

DO YOU UNDERSTAND WHAT ETHICS MEANS?

No, you do not.

GummiRaccoon

Apparently, you don't either if you haven't considered the subjective nature of it.

There's always two sides to an argument. What's ethical or "right" can be different depending on where one's perspective originates from.

If you take it from a utilitarian perspective, then Nvidia is in the wrong, as it would not benefit the majority of users out there by optimizing software to run better on their cards only. However, if you take it from a relativist perspective, then it is based entirely on the moral perspective that you take to judge the action. On one hand, Nvidia could be blamed for "bribing" the developers to make their software work better on their cards; on the other hand, you could say putting in the extra funding is essential in order to ensure all potential is used in their hardware. If it is the latter, then it's AMD that is in the wrong, as they refuse to ensure that resources are made available to ensure the same optimizations can be had on their cards.

What blazn was doing was proclaiming ethical violations in Nvidia's actions. I'm just saying it's not as absolute as he's claiming.

Apparently, you don't either if you haven't considered the subjective nature of it.

There's always two sides to an argument. What's ethical or "right" can be different depending on where one's perspective originates from.

If you take it from a utilitarian perspective, then Nvidia is in the wrong, as it would not benefit the majority of users out there by optimizing software to run better on their cards only. However, if you take it from a relativist perspective, then it is based entirely on the moral perspective that you take to judge the action. On one hand, Nvidia could be blamed for "bribing" the developers to make their software work better on their cards; on the other hand, you could say putting in the extra funding is essential in order to ensure all potential is used in their hardware. If it is the latter, then it's AMD that is in the wrong, as they refuse to ensure that resources are made available to ensure the same optimizations can be had on their cards.

What blazn was doing was proclaiming ethical violations in Nvidia's actions. I'm just saying it's not as absolute as he's claiming.

ravenguard90

There is nothing morally right or wrong about being inept, therefore it cannot be ethical nor unethical.
