I honestly don't think any of these companies will sell their main system at a loss anymore. I think the two-system approach, with the later model having the updated CPU/GPU, is here to stay.

Games will play the same as long as we don't see a true upgrade in CPU power next gen.

@ronvalencia: "Could have" and cut CUs have nothing to do with what it has to use. The simple fact is that 2 years is not enough for the industry to have a viable GPU option that fits console price/heat/power restrictions for a GPU with 96 CUs, price being the biggest factor.

@xboxps2cube: The X1X is being sold at a loss... The problem is that console gamers want to be wowed but faint at the sight of a $599 price tag. If the X1X was sold with a profit being made on the hardware, they would scrap the 4K Blu-ray and the RX 590 would probably end up being an RX 570.

The PS4 Pro is also breaking even, and it doesn't have a 4K Blu-ray and the GPU is an RX 470... If they wanted to make a profit they would need to drop the GPU down to an RX 460.

A $399 price point with the companies making a profit... You're looking at an entry-level PC. At $499 and selling at a loss, you're looking at an X1X, a mid to high end PC.

Again though, all this is moot: since the mid/late-generation hardware jump, the next generation doesn't have much of a chance to impress anyone unless a drastic price increase to $599 is introduced. There is an article on the PS4 Pro saying that they couldn't afford to put in a 2TB HDD... which should indicate how low the budget is. LINK: http://www.pocket-lint.com/news/138783-ps4-pro-ditches-4k-ultra-hd-blu-ray-player-for-cost-and-one-other-reason

If you guys expect 10 TFLOPS, 16 GB of GDDR6, 2 TB and a Ryzen CPU in two years... you guys are deluded (not you, but the OP).

Yes, the jump from the PS2 to the PS3 was significant, and it will be the last meaningful graphical jump for consoles. There is still a gameplay experience jump to be had, and VR/AR is on its way. The public and developers just have to stop resisting the inevitable.

"If you guys expect 10 TFLOPS (1), 16 GB of GDDR6 (2), 2 TB and a Ryzen CPU in two years... you guys are deluded (not you, but the OP)."

1. With 7 nm, it should nearly double the 14 nm RX-580's 6.17 TFLOPS, i.e. 10 to 12 TFLOPS, but the rasterization hardware needs to scale to match. This is assuming the APU doesn't have any distractions.

2. The X1X dev kit already has 24 GB of GDDR5-6800. The 2013 PS4's GDDR5-5600/6000 chips have moved to the 2016 PS4 Pro's GDDR5-7000. Two years after 2017, I expect GDDR5-9000. GDDR6 has a GDDR6-16000 starting point.
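For anyone who wants to check the 6.17 TFLOPS figure: peak FP32 throughput is usually estimated as shader count x 2 (one fused multiply-add per clock) x clock speed. This is only a rough sketch. The 2304 stream processors and 1340 MHz boost clock are the RX 580's published specs as I understand them, not numbers from this thread, and the doubled value is just the "nearly double" target, not a spec for any real part.

```python
def peak_tflops(stream_processors: int, clock_mhz: float) -> float:
    """Theoretical peak FP32 TFLOPS, counting one FMA (2 FLOPs) per shader per clock."""
    return stream_processors * 2 * clock_mhz / 1_000_000


# Assumed RX 580 figures (not stated in the thread): 2304 SPs at 1340 MHz boost.
rx580 = peak_tflops(2304, 1340)

# Hypothetical 7 nm part with exactly twice the throughput.
doubled = 2 * rx580

print(f"RX 580: {rx580:.2f} TFLOPS, doubled: {doubled:.2f} TFLOPS")
```

Note this is a paper number only; it says nothing about whether the rasterization side can keep the shaders fed.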

384 bit GDDR5-9000 would yield about 432 GB/s. VEGA-56 has 410 GB/s.
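The bandwidth numbers come from bus width in bytes times the per-pin data rate. A quick sketch, assuming "GDDR5-9000" means 9 Gbps per pin and assuming Vega 56 uses a 2048-bit HBM2 interface at 1.6 Gbps per pin (those Vega 56 memory figures are my assumption, not from the post above):

```python
def bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bytes moved per transfer times per-pin data rate."""
    return bus_width_bits / 8 * data_rate_gbps


# 384-bit bus with hypothetical GDDR5 at 9 Gbps per pin.
gddr5_9000 = bandwidth_gb_s(384, 9.0)

# Vega 56, assuming 2048-bit HBM2 at 1.6 Gbps per pin.
vega56 = bandwidth_gb_s(2048, 1.6)

print(f"384-bit GDDR5-9000: {gddr5_9000:.1f} GB/s")
print(f"Vega 56 HBM2:       {vega56:.1f} GB/s")
```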

Vega 56/64's quad rasterization units are a bottleneck at this TFLOPS level, e.g. the GTX 1080 Ti has six rasterization units at the 12.9 TFLOPS level.

PS4/PS4 Pro level hardware follows AMD's R7-2xx/RX-x70 memory solutions.

https://www.polygon.com/e3/2017/6/14/15804708/xbox-one-x-not-making-money

Xbox One X is not being sold at a loss, but it is not earning any money either. Phil Spencer rejects the claim that Xbox One X is "selling at a loss".

The XBO was designed around Kinect, while the X1X doesn't have the Kinect distraction.

If the X1X/PS4 design approach had been applied to 28 nm era GCN with a 363 mm2 chip, it would have about 28 CUs (two 14 CU Bonaires). Two 14 CU Bonaires would have a quad CU lane/quad rasterization setup, just like Tonga GCN.

The XBO GPU already has two GCP units, hence it's already faking two Bonaires in hyper-thread form. A standard PC Bonaire has a single GCP.

Kinect's $80 could have paid for a larger GPU and better memory. PS4's smaller 347 mm2 chip already has 20 CUs.

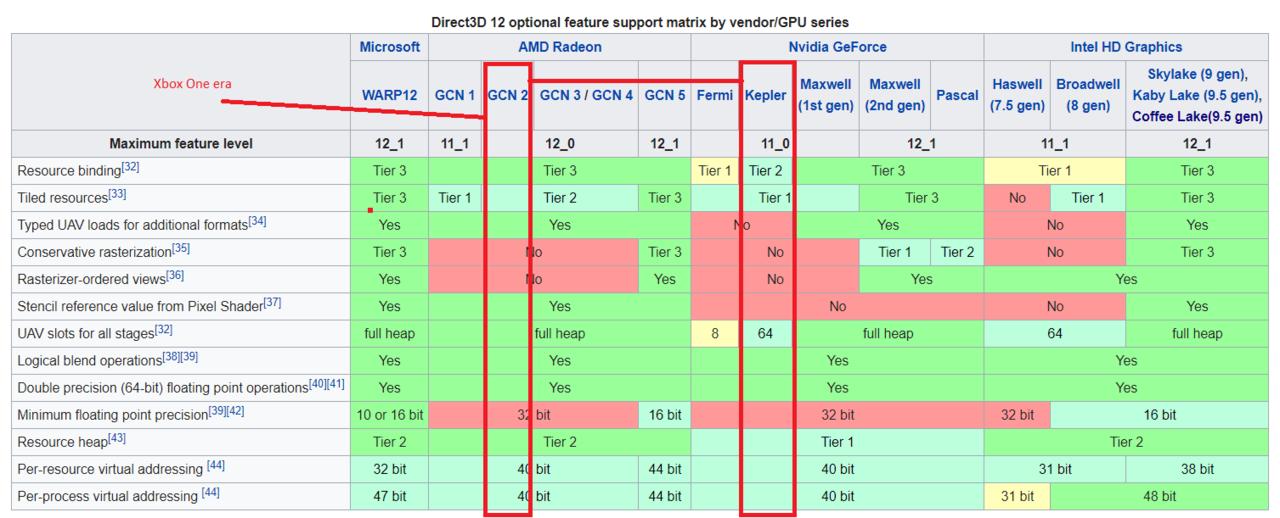

Vega 56/64 already has mature DirectX 12 Feature Level 12_1 support with most of the optional features.

https://www.globalfoundries.com/technology-solutions/cmos/performance/7nm-finfet

GF's 7nm FinFET delivers more than twice the logic and SRAM density, and either >40% performance boost or >60% total power reduction, compared to 14nm foundry FinFET offerings.

Vega 64 at 250 watts with 60 percent power reduction would land on 100 watts.

Vega 64 at 300 watts with 60 percent power reduction would land on 120 watts.
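The two wattage figures above are just the GF claim applied directly. A minimal sketch, assuming the full 60 percent reduction is taken as power savings at the same performance (GF's claim is either the performance boost or the power reduction, not both) and that the whole board scales like the chip, which is optimistic:

```python
def power_after_reduction(watts: float, reduction: float) -> float:
    """Power remaining after a fractional reduction (0.60 means 60 percent less)."""
    return watts * (1 - reduction)


# Assumption: the 60 percent figure applies to total board power.
for board_power in (250, 300):
    scaled = power_after_reduction(board_power, 0.60)
    print(f"{board_power} W -> {scaled:.0f} W")
```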

https://www.techpowerup.com/gpudb/2871/radeon-rx-vega-64

Vega 64's 484 mm2 die, fabbed on 14 nm FinFET, shrunk by 2X yields 242 mm2. Vega 64 also carries the high bandwidth cache (HBC) distraction.

X1X's GPU takes up about 283 mm2 of chip area without any distractions. Polaris 10/20 is 232 mm2.

From 2015 to 2019, that is about four years on the 14 nm/12 nm process nodes.
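To put the die sizes side by side, here is a rough sketch of the area math. It assumes the entire die shrinks by the 2X logic/SRAM density figure, which is optimistic since I/O and analog blocks generally scale worse. The 283 mm2 and 232 mm2 values are the ones quoted in this post.

```python
def shrunk_area_mm2(area_mm2: float, density_gain: float) -> float:
    """Die area after a process shrink, assuming every block scales with logic density."""
    return area_mm2 / density_gain


# Vega 64 at 14 nm is 484 mm2; assume a uniform 2X density gain at 7 nm.
vega64_at_7nm = shrunk_area_mm2(484, 2.0)

# Reference areas quoted in the thread.
x1x_gpu_area = 283
polaris10_area = 232

print(f"Vega 64 at 7 nm: {vega64_at_7nm:.0f} mm2")
print(f"Fits within X1X GPU area: {vega64_at_7nm <= x1x_gpu_area}")
print(f"Larger than Polaris 10:   {vega64_at_7nm > polaris10_area}")
```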

To me, the difference between $399 and $599 when it comes to immersive graphics is nothing. I don't consider an additional $200, for something I want, to be very much at all, and I'm certainly not rich, just a blue collar worker. I don't consider money to get serious until at least $2000; anything under that is basically petty cash in comparison to the real world.

I mean, I have a vet bill that costs more than the X1X, for crying out loud. That's honestly not much money at all, especially for something that can provide so much enjoyment, bragging rights, etc. I probably spend an average of $150 - $500 a year on PC part upgrades just because I want them. I won't tell anyone how much I've spent on headphones alone in the past 3 years.

We only get to live one life. And I want to experience whatever I possibly can out of it, preferably the best possible experience from it. I guess I just can't understand how anyone in 2017 would consider $599 for the most powerful console, to be too much to pay unless you are just content with the poor man's experience. You can mow lawns on Saturdays and come up with an extra $200 in a few weeks.

@ronvalencia: Nowhere does he say whether it is breaking even or selling at a loss...

"I don't want to get into all the numbers, but in aggregate you should think about the hardware part of the console business is not the money-making part of the business. The money-making part is in selling games."

As for your predictions, we will have to wait and see. I personally do not see a substantial upgrade over the X1X happening in the span of two years. It will be better than the original X1 and PS4, but those who bought the Pro and X1X will be harder to convince unless the companies have incentives other than raw power.

1. From the article

When asked if the console was losing money on each sale, Spencer didn’t further specify to the reporter.

He did have this to say, though, when it comes to the traditional route by which most game systems and their designers have made money:

"I don't want to get into all the numbers, but in aggregate you should think about the hardware part of the console business is not the money-making part of the business. The money-making part is in selling games."

Phil Spencer counters the question of whether the "console was losing money on each sale" with "hardware part of the console business is not the money-making part of the business", i.e. he denied making a loss (per the question), but MS is not making money on it either.

A full process node change usually leads to a jump, e.g.

28 nm R7-265 (Pitcairn) -----> 14 nm RX-470D

28 nm R9-370 (Pitcairn) -----> 14 nm RX-470 --> RX-570 (refinements)

28 nm R9-370X (Pitcairn) -----> 14 nm RX-480 --> RX-580 (refinements)

Dual rasterization units -----> quad rasterization units. Still the old school ROPS/RBE setup.

Before 7 nm, there's a minor step to 12 nm in 2018.

Mainstream Navi 11 is on 7 nm.

If Navi 11 still has quad rasterization units, then it would have major bottlenecks like Vega 56/64, with GP104 level classic GPU hardware. Any major TFLOPS increase must scale with rasterization power.

The major new feature for Vega and X1X is just the ROPS/RBE having a multi-MB L2-type cache, which was introduced with NVIDIA's Maxwell v1.
