The X1X should run it at 1440p @60fps at the very least. Bungie is full of crap and there's nothing they can say for anyone to take them seriously. They should just be truthful about it and say it's 100% because of parity that they locked it to 30 frames. There should be 2 options for X1X users: 1440p 60fps or 4K 30fps. Since leaving MS they've been a massive joke in the gaming industry, and it looks like they're continuing that with their second game.

If my GTX 970 can run Destiny 2 1080p @60fps then the X1X should be able to as well.

More and more Xbox One X threads! Keep em coming!

You're welcome homie. Be sure to click 'follow.'

The shitty Jaguar CPU is the problem. It's what I've been trying to tell clowns like Wrongvalencia since they announced the official XboneX specs. The XboneX is still bottlenecked by the shitty Jaguar CPU, no amount of optimizations will make up for the garbage CPU in the XBoneX. If you want 60fps at all times stick to PC. Destiny 2 won't run at 60fps on X1X even if they drop it down to 1080p, the CPU is that shitty, same with the PS4 Pro.

what CPU do you have paired with your 970? Makes a big difference.

an old ass i5 4750 which was a mid tier CPU even when it was new back in 2014

Yeah..... it's still better than what's in the X1X lol.

lmao yeah right.

Can the GPU in the X1X perform to the same level as a GTX 970?

lol the GTX 970 is trash compared to what the X1X is packing.

I think you're overestimating a bit. A 100 mhz bump in clock speed over the GTX 970 base (which nobody really owns, everybody has slightly higher clocked versions) isn't going to be as big of a performance bump as you think. This is assuming the Xbox One X's GPU isn't CPU limited, which it's sounding like it may be.

It's looking like the X1X is on par with about a GTX 980, not much more powerful than a GTX 970.

@Wasdie: Are you kidding me? It easily can. As Bungie has said, the CPU is the issue. I feel that the Pro and especially the X1X can just about do it. The concern is probably the base consoles' CPUs, since their user base is the main focus. They're holding back these premium consoles. We shall see how the CPU benchmarks stack up soon enough.

Yeah I just did a quick google and it looks like the GPU in the Xbox One X is comparable to that of a Radeon R9 390x which has about 100 mhz slower clock speed and 44 compute units compared to the Xbox One X's 40.

The Radeon R9 390X benchmarks a bit lower than the GTX 970, but if you bump the R9 390X's clock speed by 100 MHz it probably makes up the difference.

So I think it's safe to say the Xbox One X's GPU will be roughly similar to the GTX 970.
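As a rough sanity check on the compute unit and clock comparison above, here is a minimal sketch of the usual paper-spec calculation for GCN GPUs (64 shader lanes per compute unit, 2 FLOPs per lane per clock). The clock speeds are assumptions taken from the public spec sheets (1172 MHz for the X1X, 1050 MHz for the R9 390X), not figures quoted in this thread, and peak TFLOPS says nothing about CPU limits or how an AMD part stacks up against a GTX 970 in actual games.

```python
# Peak FP32 throughput for a GCN GPU:
#   compute units * 64 lanes * 2 FLOPs (one fused multiply-add) per clock.
# Clock speeds below are assumptions from public spec sheets, not from the thread.
LANES_PER_CU = 64
FLOPS_PER_LANE_PER_CLOCK = 2


def peak_tflops(compute_units: int, clock_mhz: float) -> float:
    """Return theoretical peak FP32 TFLOPS for the given CU count and clock."""
    flops_per_clock = compute_units * LANES_PER_CU * FLOPS_PER_LANE_PER_CLOCK
    return flops_per_clock * clock_mhz / 1e6  # MHz * FLOPs/clock -> TFLOPS


x1x = peak_tflops(40, 1172)      # 40 CUs, as stated in the post above
r9_390x = peak_tflops(44, 1050)  # 44 CUs, as stated in the post above

print(f"X1X: {x1x:.2f} TFLOPS, R9 390X: {r9_390x:.2f} TFLOPS")
```

On paper the two land within a couple of percent of each other, which is consistent with the "roughly similar" conclusion above.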

Go watch the DF analysis of the PC version. They basically say even a toaster can run D2 in at least 30 fps. Also the X1X is packing closer to a GTX 1070

@lifelessablaze: The specs don't line up with that. It looks like the card is going to perform around the same level as a GTX 970, not 1070.

I know Destiny 2 can be run on basically a toaster, but when you start running at 1440p or 4k, things change.

I have no doubt in my mind that Destiny 2 could run at 1080p60 on the Xbox One X. I'm just questioning your assertion that the GPU is more powerful than a single GTX 970. I don't believe that's the case. They are similar and the Xbox One X's GPU may outperform a GTX 970 by a little, but not by much.

I think you really need to revise your expectations for the Xbox One X. You're not going to be buying a $500 console that competes with $500 GPUs. It's just not going to happen.

The CPU in the X1X is only clocked at 2.3 GHz, and has considerably worse instructions per clock cycle than your i5 at 3.2 GHz.

But your 970 was about $330 alone when it launched, so why would a whole system outperform it for $100 more?

The 970s were more than that when they launched. More like $400-450.

http://www.anandtech.com/show/8526/nvidia-geforce-gtx-980-review/7

| Model | Launch | Release price (USD) |
|---|---|---|
| GeForce GTX 970 | September 18, 2014 | $329 |
| GeForce GTX 980 | September 18, 2014 | $549 |
| GeForce GTX 980 Ti | June 2, 2015 | $649 |

Actually, the X1X's GPU is a souped-up Radeon RX 580, with more shaders, more TMUs and a slightly higher clock. It is therefore about on par with or slightly better than the GTX 970.

The issue definitely seems to be the CPU. The cores are tiny and slow clocked. From what I can gather, the PS4's SoC consists of two quad-core Jaguars, each core about 3.1 mm². A Haswell i5 is 177 mm² (though this admittedly includes the integrated GPU), and clocked at 2.7 GHz or more. Even the X1X's Jaguar is clocked at only 2.3 GHz.

@Wasdie: The X1X runs Rise of the Tomb Raider in 4K/30fps and has settings comparable to the Very High(highest) preset on PC. A 970 can't do that.

The performance of this GPU places it between that of a GTX 980 and a 980 Ti, so very much 390X territory. I don't know what this dude is talking about, bleating on about the 970...

For all the people complaining, complain about the dumb mid gen upgrades. We all knew they have to satisfy their largest base first, and parity is a must at least in multiplayer, which this whole game is.(Even the story mode has other players around)

The CPU is weaker than an entry i3.

It really is a potato box.

Consoles only sell to consumers without tech knowledge, who succumb to buzzwords and think this is a monster piece of tech.

X1X's version is geared for Digital Foundry's XBO resolution gate.

For multiplayer without a dedicated server, the problem is that you can't mix machines running the game simulation at 30 Hz with machines running it at 60 Hz, so the slowest machine dictates the lowest common denominator.
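A minimal sketch of that lowest-common-denominator argument, assuming a peer-to-peer session where every machine has to step the simulation at one shared rate. The function name and the per-console rates are hypothetical illustrations, not Bungie's actual netcode or published figures.

```python
from typing import Iterable


def shared_tick_rate(supported_hz: Iterable[int]) -> int:
    """Pick the simulation rate every peer in the session can sustain.

    All peers must update the game state in lockstep, so the slowest
    machine sets the rate for everyone.
    """
    rates = list(supported_hz)
    if not rates:
        raise ValueError("a session needs at least one peer")
    return min(rates)


# Hypothetical maximum simulation rates per console, for illustration only.
lobby = {"Xbox One": 30, "PS4": 30, "PS4 Pro": 60, "Xbox One X": 60}

print(shared_tick_rate(lobby.values()))
```

With a base console in the lobby the whole session runs the simulation at 30 Hz, however fast the other machines are.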

@MonsieurX: huh

Damn Nvidia what happened with the GTX 1070s prices.

The mining craze is what happened. Once all the AMD GPUs flew off the shelves, miners had no choice but to grab GTX 1060s and 1070s to supplement.

FALSE,

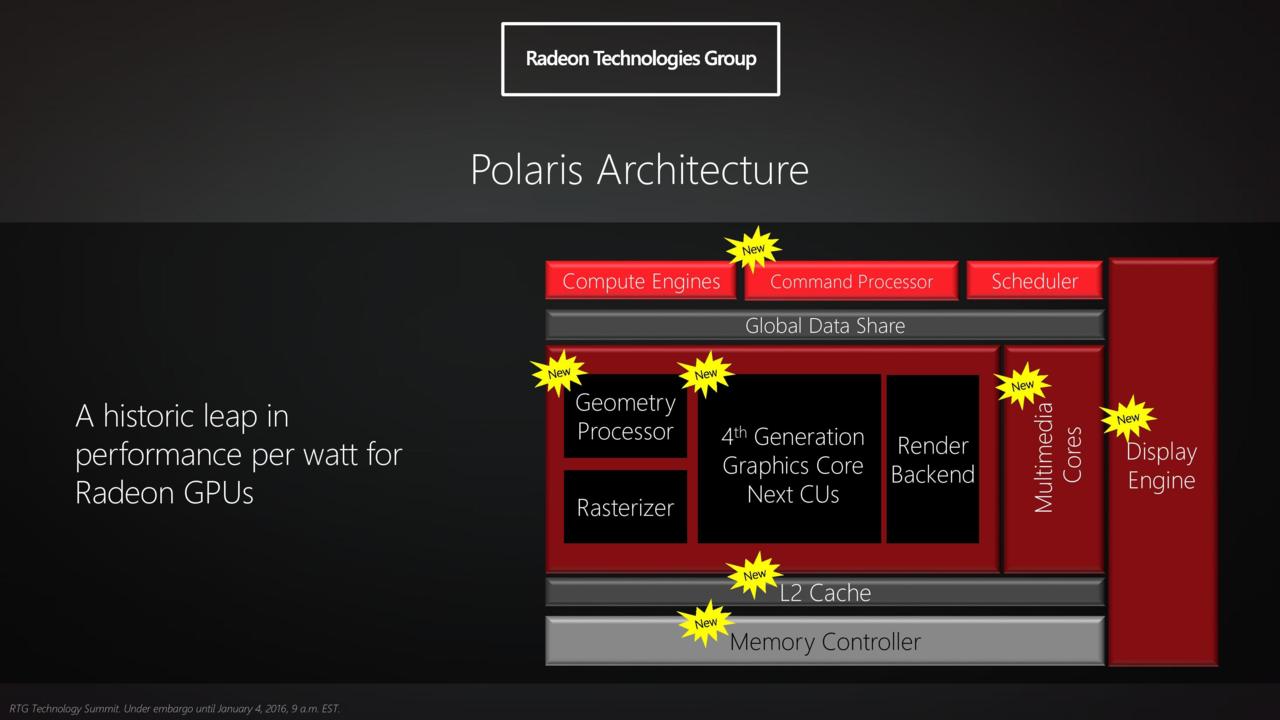

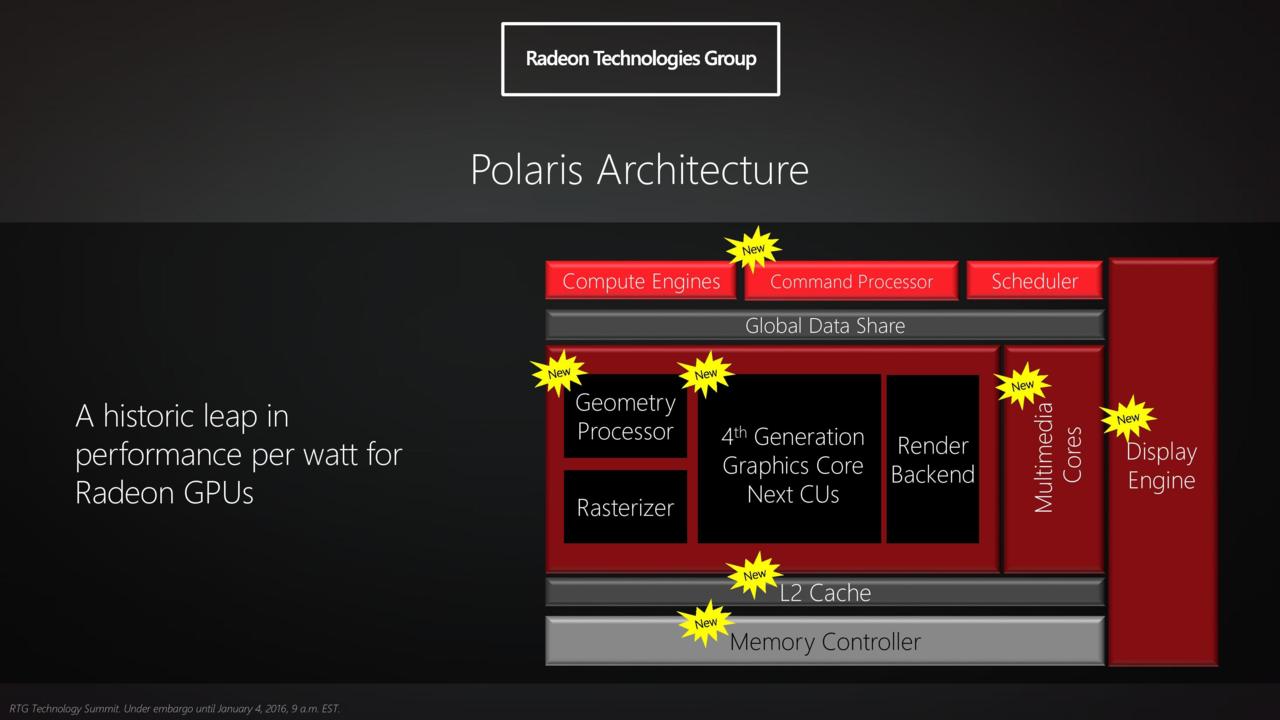

The following diagram is Polaris 10 for RX-480 which is the same for RX-580

Notice that RX-480/RX-580 lack updates to both the Rasterizer and the Render Backend (RBE). X1X's graphics pipeline was updated, e.g. the Rasterizer has conservative occlusion and the RBE has 2 MB render cache updates. There are 60 graphics pipeline changes for X1X.

Most of Polaris's updates are for compute shaders and the associated L2 cache, e.g. 2 MB, up from 1 MB.

From http://www.anandtech.com/show/11740/hot-chips-microsoft-xbox-one-x-scorpio-engine-live-blog-930am-pt-430pm-utc#post0821123606

12:36PM EDT - 8x 256KB render caches

12:37PM EDT - 2MB L2 cache with bypass and index buffer access

12:38PM EDT - out of order rasterization, 1MB parameter cache, delta color compression, depth compression, compressed texture access

X1X's GPU's Render Back Ends (RBEs) have a 256 KB cache each and there are 8 of them, hence a 2 MB render cache.

X1X's GPU has 2 MB L2 cache, 1 MB parameter cache and 2MB render cache. That's 5 MB of cache.

https://www.amd.com/Documents/GCN_Architecture_whitepaper.pdf

The old GCN's render back end (RBE) cache. Page 13 of 18.

Once the pixels fragments in a tile have been shaded, they flow to the Render Back-Ends (RBEs). The RBEs apply depth, stencil and alpha tests to determine whether pixel fragments are visible in the final frame. The visible pixels fragments are then sampled for coverage and color to construct the final output pixels. The RBEs in GCN can access up to 8 color samples (i.e. 8x MSAA) from the 16KB color caches and 16 coverage samples (i.e. for up to 16x EQAA) from the 4KB depth caches per pixel. The color samples are blended using weights determined by the coverage samples to generate a final anti-aliased pixel color. The results are written out to the frame buffer, through the memory controllers

GCN version 1.0's RBE cache size is just 20 KB. 8x RBE = 160 KB render cache (for 7970)

AMD R9-290X/R9-390X's aging RBE/ROPS comparison. https://www.slideshare.net/DevCentralAMD/gs4106-the-amd-gcn-architecture-a-crash-course-by-layla-mah

16 RBE with each RBE contains 4 ROPS. Each RBE has 24 bytes cache.

24 bytes x 16 = 384 bytes.

X1X's RBE/ROPS has 2048 KB or 2 MB render cache.

Each X1X RBE has 256 KB. 8x RBE = 2048 KB (or 2 MB) render cache. X1X can hold more rendering data on the chip than the Radeon HD 7970 and R9-290X/R9-390X.
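The cache arithmetic in this post can be checked with a few lines. This is only a sketch that takes the post's own reading of the sources at face value: 8 render back ends on both chips, 256 KB per RBE on X1X, and 16 KB color plus 4 KB depth cache per RBE on GCN 1.0.

```python
KB_PER_MB = 1024

# X1X figures as quoted from the Hot Chips live blog.
x1x_render_cache_kb = 8 * 256  # 8 RBEs x 256 KB each
x1x_l2_mb = 2
x1x_parameter_cache_mb = 1
x1x_total_mb = x1x_l2_mb + x1x_parameter_cache_mb + x1x_render_cache_kb / KB_PER_MB

# GCN 1.0 (HD 7970) figures as read from the whitepaper passage in this post.
gcn1_rbe_cache_kb = 16 + 4                    # color cache + depth cache
gcn1_render_cache_kb = 8 * gcn1_rbe_cache_kb  # 8 RBEs

ratio = x1x_render_cache_kb / gcn1_render_cache_kb

print(f"X1X render cache: {x1x_render_cache_kb} KB")
print(f"GCN 1.0 render cache: {gcn1_render_cache_kb} KB")
print(f"X1X total on-chip cache counted here: {x1x_total_mb} MB")
print(f"Render cache ratio: {ratio}x")
```

On those numbers the X1X render cache is 12.8 times the size of the HD 7970's.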

http://www.eurogamer.net/articles/digitalfoundry-2017-project-scorpio-tech-revealed

"We quadrupled the GPU L2 cache size, again for targeting the 4K performance."

X1X GPU's 2MB L2 cache can be used for rendering in addition to X1X's 2 MB render cache.

When X1X's L2 cache (for TMUs) and render cache (for ROPS) are combined, the total cache size is 4 MB, which is similar to Vega's shared 4 MB L2 cache for RBE/ROPS and TMUs.

This actually made sense as a reply, congrats.

It's the main reason for ForzaTech's X1X performance boost: it increases GCN's pixel shader and RBE performance, i.e. pixel shader and RBE math operations have access to a 2 MB render cache, just like the GTX 1070's version. It has some benefits for Unreal Engine 4's deferred renderer.

X1X's graphics pipeline improvements may not have any benefit for games already biased towards the compute shader and TMU path, i.e. those would only get X1X's effective bandwidth improvements.

GTX 1070's higher tessellation rate still stands as long as it's not gimped by heavy memory bandwidth workloads. This is not a major issue for X1X since its geometry workload would be budgeted.

A GPU is only as strong as its weakest point.

Polaris's update is half-baked, i.e. it is missing major graphics pipeline updates.

(Usual graphs and diagrams overload)

Yeah, but does he really have to do it in the form of a 4-browser-page ocular assault of graphs and diagrams? I mean, I did read all of that, and I was able to sum it up as: "You're wrong. The X1X's GPU has an updated graphics pipeline, the rasterizer has conservative occlusion and the render backends have 2 MB of cache, compared to GCN 1.0's 160 KB, among other improvements. Combine that with the GPU's 2 MB L2 cache, and the X1X has 4 MB of total cache, which is more similar to the Vega." Simple as that. Throw in a few links as sources and - Bam! - point made.

If he had posted that instead, I would have just said well shave my backside and call me "Served," because I stand corrected. Still, my point remains: these consoles are ridiculously GPU-heavy, and that's where their limitations lie. I was even sort of praising the X1X's GPU in my original post.

But no, I had to sift through all of that mess to see what he was saying. He even repeated a few of his points for... ...some reason? This is why I typically don't bother to reply to ronvalencia's posts any more. Too tired of reading them.

The shitty Jaguar CPU is the problem. It's what I've been trying to tell clowns like Wrongvalencia since they announced the official XboneX specs. The XboneX is still bottlenecked by the shitty Jaguar CPU, no amount of optimizations will make up for the garbage CPU in the XBoneX. If you want 60fps at all times stick to PC. Destiny 2 won't run at 60fps on X1X even if they drop it down to 1080p, the CPU is that shitty, same with the PS4 Pro.

Is that for real now?!

You're telling me the X1X, the $500 most super duper mega ultimate super ultra bomb power console in the world, can't play Destiny 2 at 1080p/60fps? Roooofl!

Is this a joke? When did I miss that?

No one in any official capacity anywhere has ever stated that. This seems to be a recurring trend surrounding this console, people literally making things up and peddling it as fact, things they made up...

No, really now: won't the new console be able to run Destiny 2, which is pretty well optimized (I played the PC open beta, the game is light!!), at 60fps at 1080p? I mean, that's the worst news I've heard in a while. So the Pro won't either!!

So what, people will buy Destiny 2, an FPS game that will run at 30fps at 1080p on the new consoles? 30fps?!!!!!! Man, I say no one should even touch this shit if it's true. Go for the PC version or bypass this shit altogether.

The game isn't 1080p on the Pro and Xbox One X, it's checkerboard rendered on the Pro (1920x2160) and will likely be native 4K on the Xbox One X.
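To put those resolutions side by side, here is a quick sketch of the pixel counts involved. The 1920x2160 figure is the checkerboard resolution quoted above; 3840x2160 is assumed for native 4K and 1920x1080 for 1080p.

```python
def pixel_count(width: int, height: int) -> int:
    """Number of pixels in a frame of the given dimensions."""
    return width * height


full_hd = pixel_count(1920, 1080)       # 1080p
checkerboard = pixel_count(1920, 2160)  # Pro's checkerboard render target
native_4k = pixel_count(3840, 2160)     # native 4K

print(f"1080p:        {full_hd:>9,} pixels")
print(f"Checkerboard: {checkerboard:>9,} pixels ({checkerboard / full_hd:.0f}x 1080p)")
print(f"Native 4K:    {native_4k:>9,} pixels ({native_4k / full_hd:.0f}x 1080p)")
```

So the Pro shades twice as many pixels per frame as 1080p, and native 4K shades four times as many.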

X1X was mostly designed for Digital Foundry's XBO resolution gate, hence GPU heavy. X1X's CPU quad core layout follows Ryzen CCX layout instead of flat layout.

X1X's CPU has some improvements over stock Jaguar.

https://en.wikipedia.org/wiki/Translation_lookaside_buffer

A translation lookaside buffer (TLB) is a memory cache that is used to reduce the time taken to access a user memory location.

The TLB is sometimes implemented as content-addressable memory (CAM). The CAM search key is the virtual address and the search result is a physical address. If the requested address is present in the TLB, the CAM search yields a match quickly and the retrieved physical address can be used to access memory. This is called a TLB hit. If the requested address is not in the TLB, it is a miss, and the translation proceeds by looking up the page table in a process called a page walk. The page walk is time consuming when compared to the processor speed, as it involves reading the contents of multiple memory locations and using them to compute the physical address. After the physical address is determined by the page walk, the virtual address to physical address mapping is entered into the TLB

Performance implications

The CPU has to access main memory for an instruction cache miss, data cache miss, or TLB miss. The third case (the simplest one) is where the desired information itself actually is in a cache, but the information for virtual-to-physical translation is not in a TLB. These are all slow, due to the need to access a slower level of the memory hierarchy, so a well-functioning TLB is important. Indeed, a TLB miss can be more expensive than an instruction or data cache miss, due to the need for not just a load from main memory, but a page walk, requiring several memory accesses.
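The hit/miss behaviour described in that excerpt can be sketched with a toy model. Everything here is a simplification for illustration: the class name, the 4 KB page size, the tiny capacity, the least-recently-used replacement policy and the flat dictionary standing in for the page walk are all assumptions, and none of it reflects how the X1X's Jaguar TLB is actually built.

```python
from collections import OrderedDict

PAGE_SIZE = 4096  # assumed 4 KB pages


class TinyTLB:
    """Toy TLB: caches virtual page -> physical frame translations."""

    def __init__(self, capacity: int, page_table: dict):
        self.capacity = capacity
        self.page_table = page_table  # stands in for the in-memory page table
        self.entries = OrderedDict()  # ordered from least to most recently used
        self.hits = 0
        self.misses = 0

    def translate(self, virtual_address: int) -> int:
        page, offset = divmod(virtual_address, PAGE_SIZE)
        if page in self.entries:
            # TLB hit: translation is available immediately.
            self.hits += 1
            self.entries.move_to_end(page)
        else:
            # TLB miss: fall back to the (slow) page walk, then cache the result.
            self.misses += 1
            frame = self.page_table[page]
            if len(self.entries) >= self.capacity:
                self.entries.popitem(last=False)  # evict least recently used
            self.entries[page] = frame
        return self.entries[page] * PAGE_SIZE + offset


page_table = {0: 7, 1: 3, 2: 9}  # hypothetical virtual page -> physical frame
tlb = TinyTLB(capacity=2, page_table=page_table)

addresses = [0x0010, 0x0020, 0x1004, 0x0030, 0x2008, 0x1000]
physical = [tlb.translate(address) for address in addresses]

print(f"hits={tlb.hits} misses={tlb.misses}")
```

Repeated accesses to the same page hit the TLB, while touching more pages than it can hold forces evictions and extra page walks, which is why a bigger or better-organized TLB helps.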

The only reason it will be 30fps is parity. The Xbox One X can do 60fps. Sony does not want their console to have the inferior version.

LOL, but not at 4K, and if it was 4K, it would be at the lowest settings. If they did a 1440p version it would most likely be at medium settings. Lemmings and their dreams of being equal to the master race lol.
