The game is optimised really well, but yeah, those CPUs in the consoles. Don't worry too much anyway: the game will look great and be fun if that's your thing. Consoles have always had horrid framerates; nothing new here.

If my GTX 970 can run Destiny 2 at 1080p @ 60fps, then the X1X should be able to as well.

This has more to do with Destiny's engine architecture than with the Xbox One X/PS4 Pro. There is no reason PvP in Destiny with 8 players should be CPU-bound, but their weird client-server structure means they have to manage a lot of things on the client. I fully believe that if they did not have the release-window cadence set by Activision, they would be able to refactor some of their session management to make better use of servers.

The X1X was mostly designed in answer to Digital Foundry's XBO resolution gate, hence it's GPU-heavy. The X1X CPU's quad-core cluster layout follows Ryzen's CCX layout instead of a flat layout.

The X1X's CPU has some improvements over a stock Jaguar.

https://en.wikipedia.org/wiki/Translation_lookaside_buffer

A Translation lookaside buffer (TLB) is a memory cache that is used to reduce the time taken to access a user memory location.

The TLB is sometimes implemented as content-addressable memory (CAM). The CAM search key is the virtual address and the search result is a physical address. If the requested address is present in the TLB, the CAM search yields a match quickly and the retrieved physical address can be used to access memory. This is called a TLB hit. If the requested address is not in the TLB, it is a miss, and the translation proceeds by looking up the page table in a process called a page walk. The page walk is time consuming when compared to the processor speed, as it involves reading the contents of multiple memory locations and using them to compute the physical address. After the physical address is determined by the page walk, the virtual address to physical address mapping is entered into the TLB.

Performance implications

The CPU has to access main memory for an instruction cache miss, data cache miss, or TLB miss. The third case (the simplest one) is where the desired information itself actually is in a cache, but the information for virtual-to-physical translation is not in a TLB. These are all slow, due to the need to access a slower level of the memory hierarchy, so a well-functioning TLB is important. Indeed, a TLB miss can be more expensive than an instruction or data cache miss, due to the need for not just a load from main memory, but a page walk, requiring several memory accesses.
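A toy model of the hit/miss/page-walk behaviour described in the quoted passage, as a sketch only. It assumes 4 KiB pages, a fully associative TLB with LRU replacement, and four memory reads per page walk (a 4-level page table). `ToyTLB` and `run` are hypothetical names, and none of this reflects how Jaguar's or the X1X's TLBs are actually organised.

```python
from collections import OrderedDict

PAGE_SIZE = 4096  # assumption: 4 KiB pages
WALK_READS = 4    # assumption: 4-level page table, one memory read per level


class ToyTLB:
    """Fully associative TLB with LRU replacement; a teaching model, not real hardware."""

    def __init__(self, entries: int, page_table: dict[int, int]):
        if entries < 1:
            raise ValueError("a TLB needs at least one entry")
        self.entries = entries
        self.page_table = page_table      # virtual page number -> physical frame number
        self.cache = OrderedDict()        # cached translations, least recently used first
        self.hits = 0
        self.misses = 0
        self.walk_reads = 0               # memory reads spent on page walks

    def translate(self, vaddr: int) -> int:
        vpn, offset = divmod(vaddr, PAGE_SIZE)
        if vpn in self.cache:             # TLB hit: translation already cached
            self.hits += 1
            self.cache.move_to_end(vpn)   # mark as most recently used
            pfn = self.cache[vpn]
        else:                             # TLB miss: walk the page table
            self.misses += 1
            self.walk_reads += WALK_READS
            pfn = self.page_table[vpn]
            self.cache[vpn] = pfn         # enter the mapping into the TLB
            if len(self.cache) > self.entries:
                self.cache.popitem(last=False)  # evict least recently used entry
        return pfn * PAGE_SIZE + offset


def run(entries: int, pages_touched: int, passes: int = 10) -> tuple[int, int, int]:
    """Sweep `pages_touched` pages `passes` times; return (hits, misses, walk_reads)."""
    table = {vpn: vpn + 100 for vpn in range(pages_touched)}  # arbitrary mapping
    tlb = ToyTLB(entries, table)
    for _ in range(passes):
        for vpn in range(pages_touched):
            tlb.translate(vpn * PAGE_SIZE + 8)
    return tlb.hits, tlb.misses, tlb.walk_reads


print(run(entries=8, pages_touched=4))   # (36, 4, 16): working set fits in the TLB
print(run(entries=8, pages_touched=16))  # (0, 160, 640): cyclic sweep thrashes LRU
```

When the working set fits, almost every translation hits; once it exceeds the entry count, this cyclic access pattern makes LRU miss every time, and each miss pays for a page walk. That per-miss cost is the kind of thing TLB improvements target.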

A little better. Anyway, I never said there weren't any improvements. That said, the core Jaguar architecture is the same, and the biggest core performance improvement is the 550 MHz clock boost. The rest of what you listed are incremental memory and cache improvements. I doubt this is the big leap in CPU performance people were looking for, and the console is still very GPU-heavy.
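To put the 550 MHz figure in proportion, a back-of-the-envelope sketch. It assumes the original Xbox One's Jaguar cores run at 1.75 GHz and the X1X's at 2.3 GHz (matching the 550 MHz difference), and that per-clock performance (IPC) is unchanged. `clock_only_speedup` is a hypothetical helper; it ignores the cache/TLB changes and assumes perfectly frequency-bound code.

```python
XBO_CPU_GHZ = 1.75  # original Xbox One Jaguar clock (assumed)
X1X_CPU_GHZ = 2.30  # Xbox One X clock (assumed; 1.75 GHz + 550 MHz)


def clock_only_speedup(old_ghz: float, new_ghz: float) -> float:
    """Speedup from clock alone, assuming identical IPC and linear scaling with frequency."""
    return new_ghz / old_ghz


boost_mhz = round((X1X_CPU_GHZ - XBO_CPU_GHZ) * 1000)
speedup = clock_only_speedup(XBO_CPU_GHZ, X1X_CPU_GHZ)
print(f"{boost_mhz} MHz boost -> at most {speedup:.2f}x ({(speedup - 1) * 100:.0f}% faster)")
# 550 MHz boost -> at most 1.31x (31% faster)
```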

Xbox's small-CPU/large-GPU ratio exists because MS disagrees with the large-CPU/small-GPU ratio PC OEMs use in the lower price segments.

This has more to do with Destiny's engine architecture than with the Xbox One X/PS4 Pro. There is no reason PvP in Destiny with 8 players should be CPU-bound, but their weird client-server structure means they have to manage a lot of things on the client. I fully believe that if they did not have the release-window cadence set by Activision, they would be able to refactor some of their session management to make better use of servers.

https://www.kotaku.com.au/2017/05/bungie-explains-how-theyre-improving-destiny-2-servers/

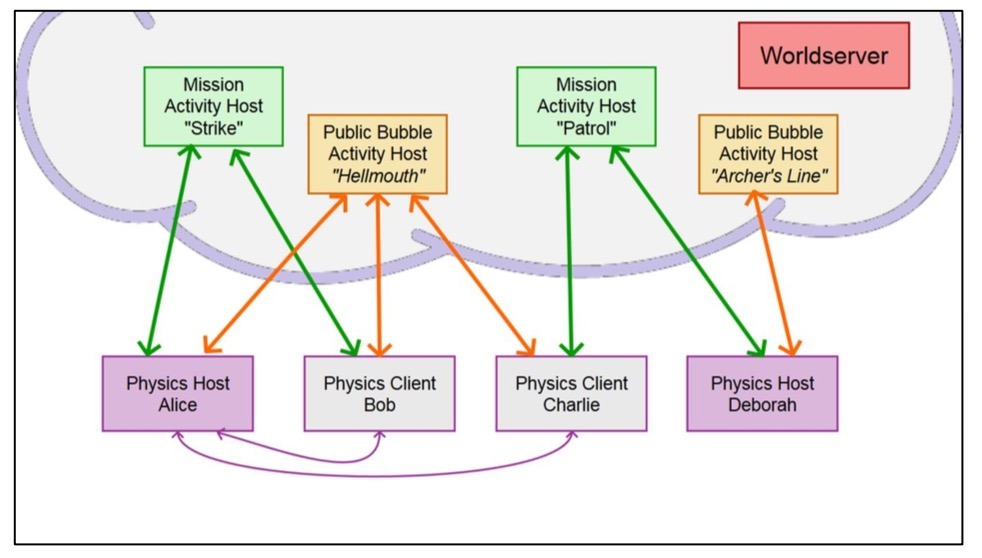

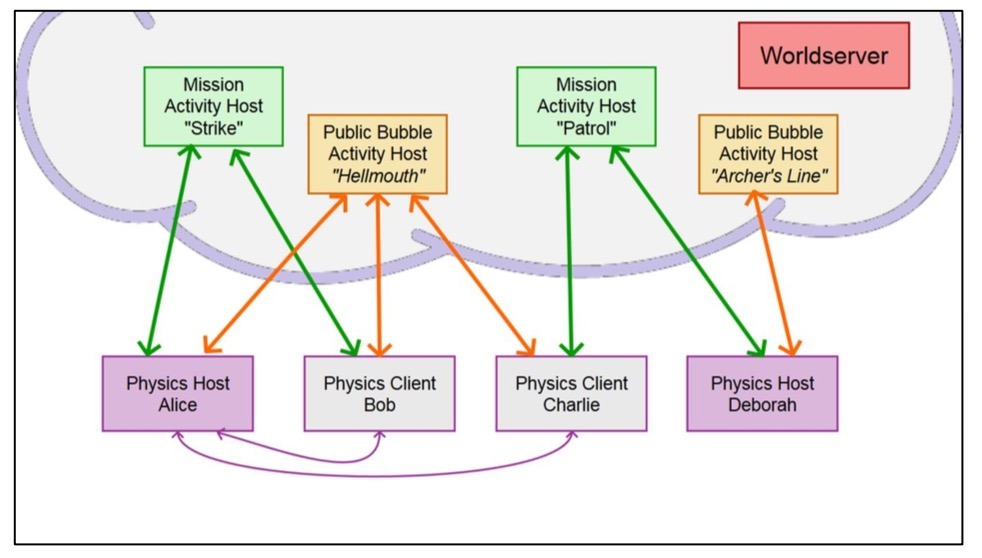

For D2, "Physics Host Alice" acts like a central game simulation server, which is different from the following diagram.

Above the dashed line, a dedicated server handles the game simulation.

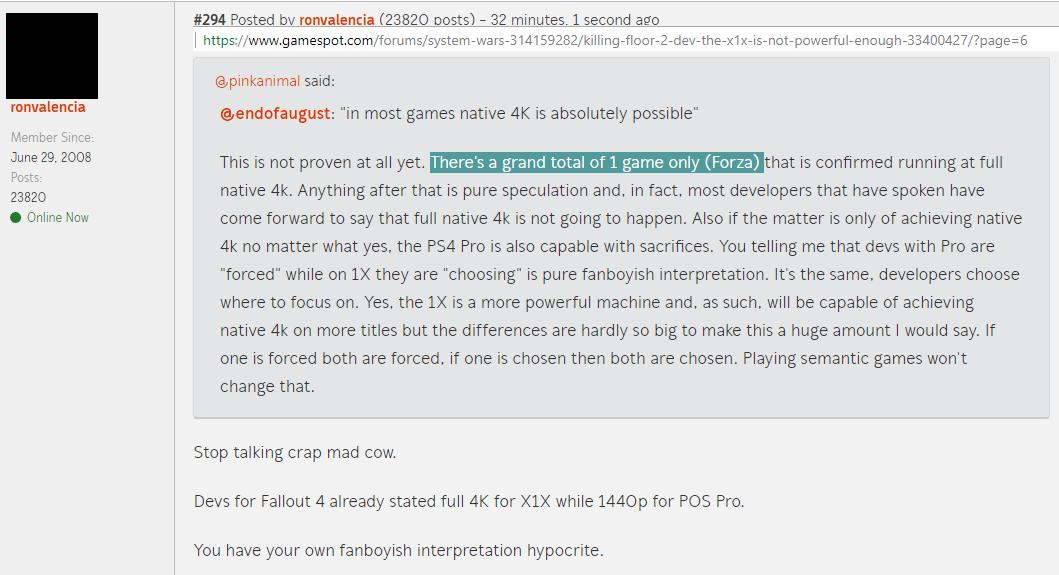

@endofaugust: "This seems to be a recurring trend surrounding this console, people literally making things up and peddling it as fact, things they made up..."

Agreed, for example the "uncompromised True 4k" BS.

That's not made up; things like that are hyperbolic, metaphorical statements.

lol you can call it as euphemistically as you like, it's still part of the BS.

The X1X should run it at 1440p @ 60fps at least. Bungie is full of crap, and there's nothing they can say that would make anyone take them seriously. They should just be truthful and admit they locked it to 30 frames 100% because of parity. There should be two options for X1X users: 1440p/60fps or 4K/30fps. Since leaving MS they've been a massive joke in the gaming industry, and it looks like they're continuing that with their second game.

You simply don't understand how computers work, like 99% of this forum and gaming forums in general.

No amount of GPU power is going to compensate for a weak CPU; this is why those boxes focus mainly on resolution increases, simply to tax the GPU.

Destiny 2 is heavily CPU-demanding, which the current consoles simply cannot deal with.

So Bungie isn't full of crap: tests have shown how much of an impact the CPU has on Destiny, and even the crappiest PC CPU trounces the current consoles.

@Wasdie: The X1X runs Rise of the Tomb Raider at 4K/30fps with settings comparable to the Very High (highest) preset on PC. A 970 can't do that.

You don't know what you are talking about.

It's not the Very High preset on PC, as they scaled effects back, so it's already a custom configuration.

Also, Tomb Raider is a totally different game from Destiny in terms of requirements. Destiny is way more CPU-demanding than Tomb Raider will ever be; Tomb Raider is more GPU-demanding than anything else.

Totally useless comparison.

Can the GPU in the X1X perform to the same level as a GTX 970?

lol the GTX 970 is trash compared to what the X1X is packing.

You clearly don't have even a remote clue how hardware works.

A 970 is very close to what an X1X packs at 1080p.

This has more to do with Destiny's engine architecture than with the Xbox One X/PS4 Pro. There is no reason PvP in Destiny with 8 players should be CPU-bound, but their weird client-server structure means they have to manage a lot of things on the client. I fully believe that if they did not have the release-window cadence set by Activision, they would be able to refactor some of their session management to make better use of servers.

https://www.kotaku.com.au/2017/05/bungie-explains-how-theyre-improving-destiny-2-servers/

For D2, "Physics Host Alice" acts like a central game simulation server, which is different from the following diagram.

Above the dashed line, a dedicated server handles the game simulation.

So after reading their GDC talk about networking, and their talk about creating the Tiger engine, it is clear that the combination of their component system and P2P/dedicated networking is the root cause of the problem. Moving physics hosting to the cloud will reduce host-migration exploits, but it will not win back CPU time from all the other background tasks running.

I think the whole debacle of the making of Destiny (Bungie teaming up with Activision, all the staff problems, and the Sony marketing deal) is way more interesting than the game itself.

Hope someone writes a book on it someday. Would make for a great read.

I have no doubt the X is being held back on purpose. Didn't I just hear somewhere that the Pro version is getting the HDR treatment but not the X? Maybe that was a different game Sony has a marketing deal on.

I am totally curious how much money Sony ponied up to have this much control. Must have been the biggest in console history.

You simply don't understand how computers work, like 99% of this forum and gaming forums in general.

No amount of GPU power is going to compensate for a weak CPU; this is why those boxes focus mainly on resolution increases, simply to tax the GPU.

Destiny 2 is heavily CPU-demanding, which the current consoles simply cannot deal with.

So Bungie isn't full of crap: tests have shown how much of an impact the CPU has on Destiny, and even the crappiest PC CPU trounces the current consoles.

You don't know what you are talking about.

It's not the Very High preset on PC, as they scaled effects back, so it's already a custom configuration.

Also, Tomb Raider is a totally different game from Destiny in terms of requirements. Destiny is way more CPU-demanding than Tomb Raider will ever be; Tomb Raider is more GPU-demanding than anything else.

Totally useless comparison.

You clearly don't have even a remote clue how hardware works.

A 970 is very close to what an X1X packs at 1080p.

Lots of talk. No evidence.

DF plans to investigate the Athlon 5350, i.e. a stock quad-core Jaguar at 2.05 GHz, and overclock it.

Can the GPU in the X1X perform to the same level as a GTX 970?

lol the GTX 970 is trash compared to what the X1X is packing.

I think you're overestimating a bit. A 100 MHz clock bump over the GTX 970's base clock (which nobody really owns; everybody has slightly higher-clocked versions) isn't going to be as big a performance bump as you think. And that's assuming the Xbox One X's GPU isn't CPU-limited, which it sounds like it may be.

It's looking like the X1X is roughly on par with a GTX 980, not much more powerful than a GTX 970.

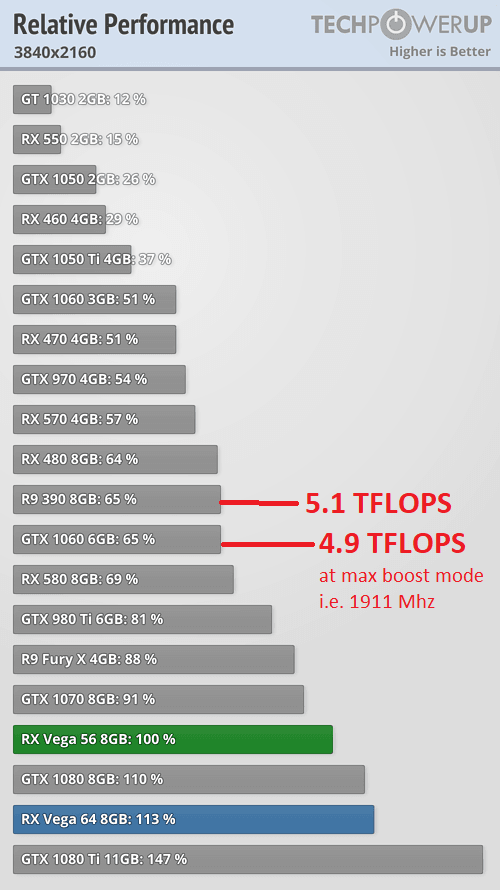

Go watch the DF analysis of the PC version. They basically say even a toaster can run D2 at 30 fps at least. Also, the X1X is packing something closer to a GTX 1070.

Wow, you're delusional. If that's what you think, then you believe the Xbox One X GPU could run Battlefield 1 at max settings at 80-100 FPS at 1080p? Or GTA V at around 70-90 fps at 1080p? That couldn't be farther from the truth.

The reality is that, from what we've seen (the XB1X running High PC-equivalent graphics at 60 fps at 1080p), the Xbox One X GPU will probably be a bit below a 1060 3GB; but with that CPU bottleneck, it doesn't matter how powerful the GPU is. Either way, it's not sniffing a GTX 1070 (you're basically saying that a GPU which costs $450 will perform as well as the GPU in a system that itself costs $500????); shoot, it's not even sniffing a 1060 6GB. Keep dreaming.

And before anyone goes on about teraflops: an RX 580 has 6.17 teraflops, yet its Nvidia equivalent, the 1060 3GB variant, gets only 3.9 teraflops. Your TF don't mean anything if you have a crappy architecture, which consoles usually do.

Only people who know nothing about gaming hardware are going around touting the teraflop numbers.
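For reference, the paper TFLOPS figures thrown around in this thread all come from one formula: shader ALUs × 2 FLOPs per clock (a fused multiply-add counts as two) × clock speed. A sketch, assuming the spec-sheet shader counts and reference clocks listed in the code (base clock for the GTX 970, boost clocks for the rest); factory-overclocked cards land higher. `GPUS` and `peak_tflops` are hypothetical names, and the result is peak throughput only, which is exactly why it can't settle an AMD-vs-Nvidia comparison on its own.

```python
# Peak FP32 throughput = shader ALUs x 2 FLOPs per clock (one FMA) x clock.
# Shader counts and clocks are assumed spec-sheet values; retail cards often boost higher.
GPUS = {
    # name: (shader ALUs, clock in MHz used for the paper figure)
    "RX 580": (2304, 1340),          # reference boost clock
    "GTX 1060 3GB": (1152, 1708),    # reference boost clock
    "GTX 1060 6GB": (1280, 1708),    # reference boost clock
    "RX 470": (2048, 1206),          # reference boost clock
    "GTX 970": (1664, 1050),         # base clock
    "Xbox One X GPU": (2560, 1172),  # 40 CUs x 64 ALUs
}


def peak_tflops(shaders: int, clock_mhz: float) -> float:
    """Theoretical FP32 TFLOPS; says nothing about how much of it a game can use."""
    return shaders * 2 * clock_mhz / 1e6  # MFLOPS -> TFLOPS


for name, (shaders, mhz) in GPUS.items():
    print(f"{name:15s} {peak_tflops(shaders, mhz):.2f} TFLOPS")
```

On paper the RX 580 sits about 1.8 TFLOPS above the GTX 1060 6GB; that gap is the number the posts here argue does not translate directly into game performance.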

@gamingpcgod: I see you're discovering the stupidity of lems. I've had numerous arguments with idiots like him and Wrongvalencia who claim the X1X is a GTX 1070 equivalent. I've tried to explain it to them exactly as you've done, and it's never worked. They keep going back to claiming that the X1X is as powerful as a $450 card that costs almost as much as the entire X1X. Look at the idiots here comparing the TFLOPS of an AMD card directly to an Nvidia card. The idiots think the performance of the cards depends on TFLOPS lol. They have no idea that Nvidia TFLOPS are far superior to AMD's thanks to architecture, and that cards from the two manufacturers can't be compared directly on TFLOPS alone.

They are a bunch of delusional morons; there's no point trying to explain it to them. Let them remain in their stupidity and ignorance.

@gamingpcgod: I see you're discovering the stupidity of lems. I've had numerous arguments with idiots like him and Wrongvalencia who claim the X1X is a GTX 1070 equivalent. I've tried to explain it to them exactly as you've done, and it's never worked. They keep going back to claiming that the X1X is as powerful as a $450 card that costs almost as much as the entire X1X. They are a bunch of delusional morons; there's no point trying to explain it to them. Let them remain in their stupidity and ignorance.

Remember, wrongvalencia also claimed it was equivalent to the GTX 1080 before he moved the goalposts to the GTX 1070 lol

@pinkanimal: I remember that; the guy is a complete idiot. He claims to be a PC gamer, but he's completely oblivious to how hardware works on PCs. The only thing he knows how to do is copy and paste charts he finds on the internet. He doesn't even understand 99% of what he posts.

Funny, he also claimed the X1X would have Ryzen. Damn fool!

Indeed. Every week he claims a new nonsense.

The game in question seems to be complete and utter shit and should not be purchased by anyone other than people who like shit and want more shit. Arguing about its graphics and how it runs on Xbox seems unnecessary.

Destiny for games is what Twilight is for movies.

@gamingpcgod: I see you're discovering the stupidity of lems. I've had numerous arguments with idiots like him and Wrongvalencia who claim the X1X is a GTX 1070 equivalent. I've tried to explain it to them exactly as you've done, and it's never worked. They keep going back to claiming that the X1X is as powerful as a $450 card that costs almost as much as the entire X1X. Look at the idiots here comparing the TFLOPS of an AMD card directly to an Nvidia card. The idiots think the performance of the cards depends on TFLOPS lol. They have no idea that Nvidia TFLOPS are far superior to AMD's thanks to architecture, and that cards from the two manufacturers can't be compared directly on TFLOPS alone.

They are a bunch of delusional morons; there's no point trying to explain it to them. Let them remain in their stupidity and ignorance.

Exactly. Though it's not as common, the same thing happens with CPU cores. Console lems go around touting their 8 cores, but the reality is that core counts don't mean a damn thing when comparing Intel CPUs to console/AMD CPUs.

@gamingpcgod: I see you're discovering the stupidity of lems. I've had numerous arguments with idiots like him and Wrongvalencia who claim the X1X is a GTX 1070 equivalent. I've tried to explain it to them exactly as you've done, and it's never worked. They keep going back to claiming that the X1X is as powerful as a $450 card that costs almost as much as the entire X1X. They are a bunch of delusional morons; there's no point trying to explain it to them. Let them remain in their stupidity and ignorance.

Remember, wrongvalencia also claimed it was equivalent to the GTX 1080 before he moved the goalposts to the GTX 1070 lol

Funny, he also claimed the X1X would have Ryzen. Damn fool!

I also speculated about alternatives to Ryzen on page 5 of the same topic, which you have omitted, e.g. mobile Excavator at 3.2+ GHz. Your narrative about me is a lie by omission.

It was AMD who claimed the X1X has an association with Ryzen, and I argued against it with the mobile Excavator alternative. AMD can claim some association with Ryzen, and MS claims latency improvements for the X1X's CPU. Parts of Ryzen's improvements were applied to the X1X's CPU.

Try again cow dung.

@gamingpcgod: I see you're discovering the stupidity of lems. I've had numerous arguments with idiots like him and Wrongvalencia who claim the X1X is a GTX 1070 equivalent. I've tried to explain it to them exactly as you've done, and it's never worked. They keep going back to claiming that the X1X is as powerful as a $450 card that costs almost as much as the entire X1X. They are a bunch of delusional morons; there's no point trying to explain it to them. Let them remain in their stupidity and ignorance.

Remember, wrongvalencia also claimed it was equivalent to the GTX 1080 before he moved the goalposts to the GTX 1070 lol

That's a false narrative.

To quote myself again

Vega NCU enabled Scorpio could gimp current PC GPUs without double rate FP16 feature.

The word enabled is conditional.

The word could is conditional.

https://www.gamespot.com/forums/system-wars-314159282/both-scorpio-and-pro-are-half-baked-4k-and-thats-p-33383816/?page=2

If Scorpio has Vega NCU, it would be faster than R9-390X.

That's conditional with an IF statement

And before anyone goes on about teraflops: an RX 580 has 6.17 teraflops, yet its Nvidia equivalent, the 1060 3GB variant, gets only 3.9 teraflops. Your TF don't mean anything if you have a crappy architecture, which consoles usually do.

Only people who know nothing about gaming hardware are going around touting the teraflop numbers.

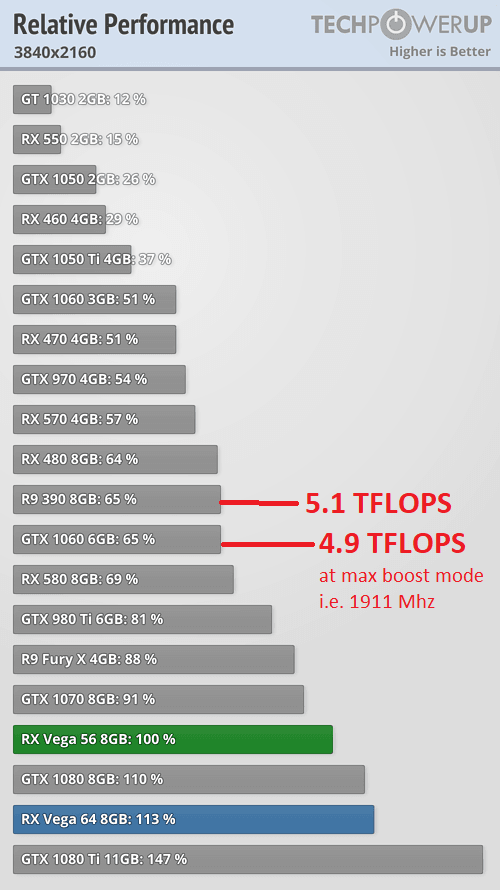

R9-390 has 5.1 TFLOPS

GTX 1060 has 4.9 TFLOPS at max boost mode.

http://www.anandtech.com/show/10540/the-geforce-gtx-1060-founders-edition-asus-strix-review/17

| GeForce GTX 1060 FE Overclocking | Stock | Overclocked |
| --- | --- | --- |
| Core Clock | 1506 MHz | 1706 MHz |
| Boost Clock | 1709 MHz | 1909 MHz |
| Max Boost Clock | 1911 MHz | 2100 MHz |
| Memory Clock | 8 Gbps | 9 Gbps |
| Max Voltage | 1.062 V | 1.093 V |

My MSI-branded GTX 1080 Ti FE usually holds its clock speed at 1800 MHz, with occasional 1900 MHz bursts during gaming. That's beyond the listed paper spec. NVIDIA has a stealth overclock mode.
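The 4.9 TFLOPS figure for the GTX 1060 comes from plugging its max boost clock into the usual paper formula (ALUs × 2 FLOPs per clock × clock). A sketch that evaluates the formula at every clock in the table shows how much the quoted number depends on which clock you pick. The 1280-core count for the GTX 1060 and the R9 390's 2560 shaders at 1000 MHz are assumed spec-sheet values; `tflops` and `clocks_mhz` are hypothetical names.

```python
GTX_1060_CORES = 1280                     # assumed spec-sheet CUDA core count
R9_390_SHADERS, R9_390_MHZ = 2560, 1000   # assumed reference values


def tflops(alus: int, mhz: float) -> float:
    """Paper FP32 TFLOPS: ALUs x 2 FLOPs per clock (FMA) x clock in MHz."""
    return alus * 2 * mhz / 1e6


# Clocks taken from the GTX 1060 FE table above.
clocks_mhz = {
    "stock core": 1506, "stock boost": 1709, "stock max boost": 1911,
    "OC core": 1706, "OC boost": 1909, "OC max boost": 2100,
}
for label, mhz in clocks_mhz.items():
    print(f"GTX 1060 @ {label:15s} ({mhz} MHz): {tflops(GTX_1060_CORES, mhz):.2f} TFLOPS")
print(f"R9 390 @ {R9_390_MHZ} MHz: {tflops(R9_390_SHADERS, R9_390_MHZ):.2f} TFLOPS")
```

The spread between the stock core clock and the overclocked max boost is more than 1.5 TFLOPS on the same card, so which clock a figure assumes matters as much as the figure itself.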

You're simply a liar, wrongvalencia

@ronvalencia said:

Vega NCU enabled Scorpio could gimp current PC GPUs without double rate FP16 feature.

1. 4X rate integer 8 bit = 24 TIOPS. Scorpio exceeds GTX 1080, GTX 1070, Fury X, 980 Ti

2. 2X rate integer 16 bit = 12 TIOPS. Scorpio exceeds GTX 1080, GTX 1070, Fury X, 980 Ti

3. 2X rate FP16 = 12 TFLOPS. Scorpio exceeds GTX 1080, GTX 1070, Fury X, 980 Ti
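The three numbered figures are the 6 TFLOPS FP32 number multiplied by packed-math rate factors. A sketch of that arithmetic: the 2x (FP16/INT16) and 4x (INT8) multipliers are taken from the list, and whether the X1X GPU actually supports them (Vega NCU) is precisely the condition under dispute. `PACKED_RATE` and `packed_throughput` are hypothetical names.

```python
# Rapid packed math: narrower types packed into 32-bit lanes multiply the op rate.
# Multipliers follow the list above; they only apply IF the hardware supports packed math.
FP32_TFLOPS = 6.0  # Scorpio / Xbox One X paper FP32 figure

PACKED_RATE = {"FP32": 1, "FP16": 2, "INT16": 2, "INT8": 4}


def packed_throughput(fp32_tera_ops: float, dtype: str) -> float:
    """Peak tera-ops/s for `dtype`, assuming packed-math support at the listed rate."""
    return fp32_tera_ops * PACKED_RATE[dtype]


for dtype in PACKED_RATE:
    unit = "TFLOPS" if dtype.startswith("FP") else "TIOPS"
    print(f"{dtype:5s} {packed_throughput(FP32_TFLOPS, dtype):5.1f} {unit}")
```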

To quote myself again

Vega NCU enabled Scorpio could gimp current PC GPUs without double rate FP16 feature.

The word enabled is conditional.

The word could is conditional.

https://www.gamespot.com/forums/system-wars-314159282/both-scorpio-and-pro-are-half-baked-4k-and-thats-p-33383816/?page=2

If Scorpio has Vega NCU, it would be faster than R9-390X.

That's conditional with an IF statement

Learn proper English.

I don't see the word "if" here, wrongvalencia. Stop being a hypocrite and a liar.

Yeah, my mistake: the RX 580 is closer to the 6GB variant than the 3GB. However, the 1060 still wins out, and it has far better overclocking headroom. Either way, that still means the RX 580 is being beaten by a card with 1.8 fewer teraflops (4.4 vs 6.2, and 6.2 is higher than the XB1X).

http://www.anandtech.com/show/10580/nvidia-releases-geforce-gtx-1060-3gb

https://www.gamespot.com/articles/amd-radeon-rx-580-and-rx-570-review/1100-6449402/

However, despite this huge teraflop gap, the 1060 is still better. This is because AMD has a shitty architecture, giving them slower speeds, and the architecture in consoles is even WORSE. So my statement still stands: TF don't mean crap if the architecture and GPU speeds are slow.

The RX 470, which has TF numbers similar to the 1060 6GB variant (but still 0.5 more), is absolutely trumped by the 1060 6GB.

Regardless, the notion that the new Xbox One X has a GTX 1070-class GPU is laughable. NOTHING has been shown (except for that stupid teraflops number) to suggest it's anywhere near that, and from what we've seen, it's more like a 1060 3GB that is being bottlenecked, which is absolutely pathetic.

Lots of talk. No evidence.

It's called logic; go disprove any of it. You can't, because this is how computers and hardware parts work. The fact that you react like that already showcases your ignorance.

DF plans to investigate the Athlon 5350, i.e. a stock quad-core Jaguar at 2.05 GHz, and overclock it.

Sadly, DF proves over and over again that they are not really competent to make those comparisons. They are great for some specific content, but for other content they are practically clueless about how stuff works.

A couple of good examples:

1) Let's test budget GPUs from AMD and Nvidia on extremely expensive parts (motherboard/CPU/memory) and draw conclusions for the people watching who are interested in that hardware, who then get completely misinformed.

2) Or their most recent gem, their latest clueless video about the 970 vs Destiny 2 at 4K: he just stares at a screen, moving sliders without any clue what he's doing, and jumps to random conclusions. The further it goes, the more embarrassing it becomes.

The whole issue with these testing outlets is that they are just a bunch of random dudes who watch each other's videos and copy each other to make the same content. Honestly, not a single one of them ever thinks for themselves for a second.

This is what made DF so interesting when they started: no nonsense, just a clear picture with the effects visible, pulled straight from the console/PC, with a framerate meter. Everything else, however, falls apart fast with them, probably because they need a bigger audience and more attention.

You clearly don't have even a remote clue how hardware works.

A 970 is very close to what an X1X packs at 1080p.

1080p at what measure?

A 970 is 3.5 TF

x1x is 6...

Because hardware is not just the TFLOPS of a GPU. And even TFLOPS mean nothing without context when comparing different companies.

Go put 1GB of memory into your PC and see how your 2x Titan X and your $2,000 nitrogen-cooled CPU handle any new-generation game. They won't, or it's going to be a slideshow.

That's why hardware balance matters.

That's why you don't buy a 16-core, 32-thread CPU at 3.2 GHz if you play a game that only uses 2 cores and profits from high clock frequencies.

You just burned a massive pile of cash for absolutely no reason, while another guy with a 4-core CPU at 4.5 GHz from the same generation gets more performance than you do and paid practically nothing for it.
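A crude sketch of the core-count vs. clock argument, using the numbers from the post. It assumes the game can only keep `game_threads` cores busy, that both CPUs have the same IPC (same generation), and that throughput scales linearly with clock. `relative_game_perf` is a hypothetical helper that ignores turbo behaviour, cache size, memory latency and background OS threads.

```python
def relative_game_perf(cores: int, ghz: float, game_threads: int) -> float:
    """Work per unit time, in arbitrary units: busy cores x clock (same IPC assumed)."""
    busy_cores = min(cores, game_threads)  # extra cores sit idle if the game can't use them
    return busy_cores * ghz


GAME_THREADS = 2  # the example game "only addresses 2 cores"
many_slow = relative_game_perf(cores=16, ghz=3.2, game_threads=GAME_THREADS)
few_fast = relative_game_perf(cores=4, ghz=4.5, game_threads=GAME_THREADS)
print(f"16 cores @ 3.2 GHz: {many_slow:.1f}")
print(f" 4 cores @ 4.5 GHz: {few_fast:.1f}  ({few_fast / many_slow:.2f}x)")
```

Raise `game_threads` to something like 32 and the 16-core part wins by a wide margin, which is the "hardware balance" point: the right CPU depends on what the software actually uses.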

Another example:

I have 2x 290's, overclocked by 15% each ( same goes for my 970 ). so that means over 10tflop of gpu performance on 290's, probably over 11 tlfops, vs 4tflops.

Witcher 3, 1080p.

10 TFLOPS of GPUs = 200 fps looking at the sky, 40 fps walking around in cities.

970 overclocked, 4 TFLOPS GPU = 100 fps looking at the sky, 60 fps walking in cities.

Both locked at 60 fps.

Result:

10 TFLOPS GPUs: drop to 40 fps in cities, stay at 60 outside.

4 TFLOPS GPU: stays at 60 fps at all times.

Welcome to the CPU/software bottleneck.
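A simple frame-time model of this: a frame is finished only when both the CPU side (game logic plus driver work) and the GPU side are done, so the slower one sets the frame rate, and a 60 fps lock can only lower it further. The per-frame millisecond costs below are hypothetical, picked only to reproduce the uncapped numbers above; the higher CPU-side cost on the 290s stands in for the claimed driver/CPU overhead and is not measured:

```python
from typing import Optional


def delivered_fps(cpu_ms: float, gpu_ms: float, cap_fps: Optional[float] = None) -> float:
    """Frames per second when each frame needs both its CPU work and its GPU work done.

    Simplified model: ignores pipelining, frame pacing and sync stalls.
    """
    frame_ms = max(cpu_ms, gpu_ms)  # the slower side sets the pace
    fps = 1000.0 / frame_ms
    if cap_fps is not None:
        fps = min(fps, cap_fps)  # a frame-rate lock can only lower the result
    return fps


# Hypothetical per-frame costs in ms (cpu_ms, gpu_ms), chosen to match the uncapped numbers above.
SCENES = {
    "2x 290, sky": (5.0, 5.0),       # 200 fps uncapped
    "2x 290, city": (25.0, 6.0),     # CPU/driver side holds it to 40 fps
    "970, sky": (5.0, 10.0),         # 100 fps uncapped, GPU-bound
    "970, city": (1000 / 60, 10.0),  # about 60 fps uncapped, CPU-bound
}

for scene, (cpu_ms, gpu_ms) in SCENES.items():
    uncapped = delivered_fps(cpu_ms, gpu_ms)
    locked = delivered_fps(cpu_ms, gpu_ms, cap_fps=60)
    print(f"{scene:13s} uncapped {uncapped:6.1f} fps, locked {locked:5.1f} fps")
```

With the lock on, the GPU headroom in the sky scenes disappears entirely, and only the CPU-side cost in the city decides who holds 60.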

It's the same reason the comments from PlayStation users were absolutely hilarious when the PS4 came out with its 8 GB of GDDR5. A GTX 1080, I think, also ships with 8 GB, so it must perform the same as the original PS4, right?

Because RAM decides how your system performs, right?
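Capacity is only one number. A sketch of peak memory bandwidth (bus width in bytes × per-pin data rate), assuming public spec-sheet figures: the PS4 with a 256-bit bus of 5.5 Gbps GDDR5, the GTX 1080 with a 256-bit bus of 10 Gbps GDDR5X. Same 8 GB, very different bandwidth, and bandwidth still isn't performance either:

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s = bus width in bytes x per-pin data rate in Gbit/s."""
    return bus_width_bits / 8 * data_rate_gbps


# (bus width in bits, per-pin data rate in Gbps); spec-sheet figures, assumed.
MEMORY = {
    "PS4, 8 GB GDDR5 (shared with CPU)": (256, 5.5),
    "GTX 1080, 8 GB GDDR5X (GPU only)": (256, 10.0),
}

for name, (bus_bits, rate) in MEMORY.items():
    print(f"{name:34s} {peak_bandwidth_gbs(bus_bits, rate):6.1f} GB/s")
```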

People, even DF, read simple numbers on the internet and draw conclusions from them without knowing why the hardware actually behaves that way.

1) What does a game actually address: cores / memory / GPU / VRAM?

2) Does it benefit from higher frequencies or not, on which components, and what exactly does it do or require from them to run?

3) Does it run advanced stuff on the CPU, which can favor Nvidia, but AMD not so much?

4) How much does driver overhead affect performance on different grades of hardware?

5) How does the OS address the hardware for a given title?

6) Which engine/OS version gets used, and how does it relate to the software and hardware it runs on from different companies?

7) Etc., etc.

I can go on and on and on.

But no: 6 TFLOPS vs 3.5 TFLOPS, because that's what a forum told you. A bunch of posters assumed how things were, and it was a number you could easily pick up and compare, even though it's utterly useless.

Why do you think people like RON, with his endless charts from sites that don't even understand how half the framerates they actively test end up on those charts, have any clue what is going on?

It's like, "Hey, look at this chart for this game. They're close, so they must perform the same." Makes sense, right? WRONG.

Anyway, gotta go now. I could go on for days about this, but it's useless.

Time will teach these people that a PS4 with 8 GB of GDDR5 isn't equal to a PC with a GPU that has 8 GB of GDDR5, that the insane Cell CPU isn't magic and actually has massive pop-in on screen for a reason (and there's a reason nobody in the industry uses it now, not even Sony for the PS4), and that Xbox's cloud solution for endless processing isn't going to deliver true 4K games at 200 fps until the end of time.

Actual true 4K (DCI) = 4096 × 2160. (They like to shift numbers around to hype their stuff up, but that doesn't make it true 4K, even if the label is technically accepted.)

It's just 2160p in the end.

I'm out; I've overstayed my welcome way too long.
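For reference, the pixel counts behind these labels. UHD "4K" is exactly 2160p (four times 1080p), DCI 4K is slightly wider at 4096 × 2160, and 8192 × 4320 is the DCI 8K format:

```python
BASE_1080P = 1920 * 1080  # 2,073,600 pixels

RESOLUTIONS = {
    "1080p": (1920, 1080),
    "UHD 4K / 2160p": (3840, 2160),
    "DCI 4K": (4096, 2160),
    "DCI 8K": (8192, 4320),
}

for name, (width, height) in RESOLUTIONS.items():
    pixels = width * height
    print(f"{name:15s} {width}x{height}  {pixels:>11,d} pixels  {pixels / BASE_1080P:5.2f}x 1080p")
```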

LOL dumbass...

You say the 970 is equivalent because of something unrelated to the GPU, and then ignore that PCs can also have shitty CPUs... your entire rebuttal is stupid.

The xonex's GPU is far more powerful than a 970... it completely and utterly destroys it, and that's a fact. Your entire argument for equivalence depends on removing the GPU as a bottleneck by lowering the resolution to something the 970 can handle, so that other bottlenecks surface (i.e., not utilizing the xonex at all)...
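The resolution point can be sketched with a max(CPU, GPU) frame-time model: if GPU time grows with pixel count while CPU time doesn't, a much faster GPU looks identical at 1080p and only pulls ahead at 2160p. All costs here are hypothetical, and linear scaling with pixel count is a simplification:

```python
# Hypothetical costs: both systems share the same CPU cost per frame,
# while GPU cost is given per megapixel rendered.
CPU_MS = 16.0
GPU_MS_PER_MEGAPIXEL = {"faster GPU": 2.5, "slower GPU": 4.0}
PIXELS = {"1080p": 1920 * 1080, "2160p": 3840 * 2160}


def frame_ms(cpu_ms: float, gpu_ms_per_mpix: float, pixels: int) -> float:
    gpu_ms = gpu_ms_per_mpix * pixels / 1e6  # assume GPU time scales linearly with pixels
    return max(cpu_ms, gpu_ms)               # the slower side sets the frame time


for res, pixels in PIXELS.items():
    for gpu, cost in GPU_MS_PER_MEGAPIXEL.items():
        ms = frame_ms(CPU_MS, cost, pixels)
        print(f"{res} {gpu:10s} {ms:5.1f} ms ({1000 / ms:4.1f} fps)")
```

At 1080p both cases sit at the 16 ms CPU floor; at 2160p the GPU difference finally shows.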

Even at 1080p the x1x can do several things better: texture fillrate, geometry fillrate, more compute resources, etc.
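Texture fillrate is also a paper number: texture units × clock. A sketch assuming 104 TMUs at 1050 MHz base for the GTX 970 and 40 GCN CUs × 4 TMUs at 1172 MHz for the Xbox One X GPU (spec-sheet figures; real throughput also depends on bandwidth, caches and filtering mode):

```python
def texture_fillrate_gtexels(tmus: int, clock_mhz: float) -> float:
    """Peak texture fillrate in GTexels/s = texture units x clock (MHz -> GHz)."""
    return tmus * clock_mhz / 1000


# (texture units, clock in MHz); assumed spec-sheet figures.
GPUS = {
    "GTX 970 (base clock)": (104, 1050),
    "Xbox One X GPU": (40 * 4, 1172),  # 40 GCN CUs x 4 texture units each
}

for name, (tmus, mhz) in GPUS.items():
    print(f"{name:22s} {texture_fillrate_gtexels(tmus, mhz):6.1f} GTexels/s")
```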
