How is the 2070 Super "outdated"?

The problem is: if the PS5 matches an upper-mid-tier GPU released in 2018-2019, what do we call the PS5 in 2025-2027, when it's later in its cycle and matching a GPU that will by then be the bare minimum required to run new AAA games? That's the problem with console gaming: it holds the industry back. Consoles handcuff developers and consumers to decade-old technology.

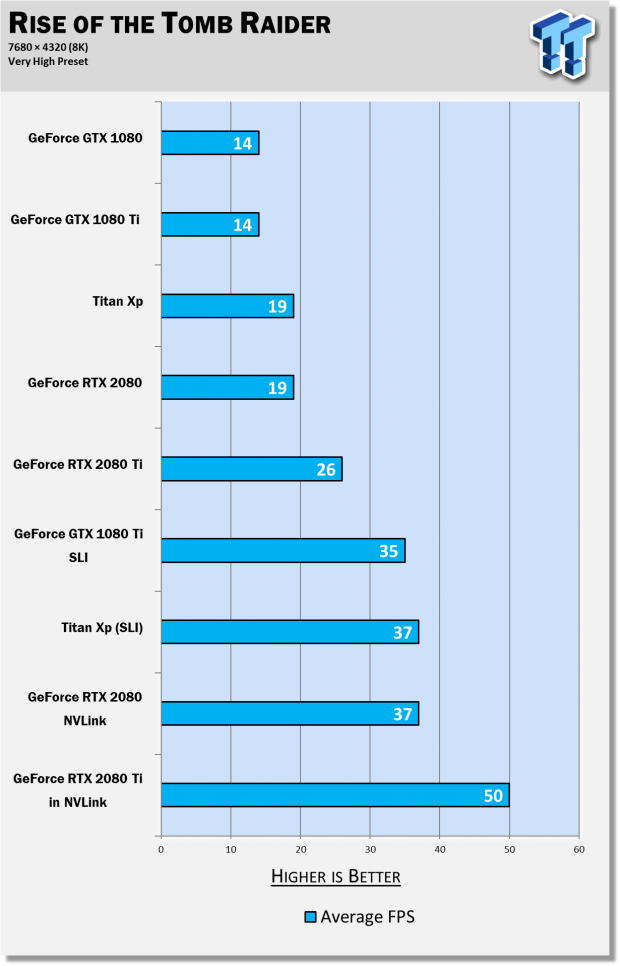

Firstly, this "holding back" argument is exactly that: nonsense. Please refrain from spreading falsehoods. Another fact that is routinely missed is that performance advances have slowed to an all-time crawl. The difference between the 1080 Ti and the 2080 Ti is 50% if you are lucky, and that's roughly two years between generations. The gap is shrinking. Diminishing returns are in full effect. I'm not sure why so many folks have their heads in the sand. By the time 2025-2027 arrives, there will be a newer system, and even then the advances will be less significant than they are now.
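To put the "50% over roughly two years" figure in per-year terms, here is a quick compound-growth calculation. The 50% and two-year values are the post's own rough estimates, not benchmark measurements, and the function name is just illustrative.

```python
def annualized_gain(total_gain: float, years: float) -> float:
    """Convert a total fractional gain over `years` into a compound per-year rate."""
    return (1.0 + total_gain) ** (1.0 / years) - 1.0

# The post's rough estimate: ~50% faster (1080 Ti -> 2080 Ti) over ~2 years.
rate = annualized_gain(0.50, 2.0)
print(f"{rate:.1%} per year")
```

Compounding matters here: 50% over two years works out to about 22% per year, not 25%.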

The answer is that the GTX 1080 Ti, RTX 2080 Super and RTX 2080 Ti all have six GPC/raster engine front ends in hardware.

Block diagram for RTX 2080 Ti

Just as AMD hit diminishing returns by adding CUs to its quad-shader-engine, quad-raster-engine GPU layout, NVIDIA has been adding CUDA cores to a six-GPC/raster-engine GPU layout, and it is hitting diminishing returns too.
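One way to see the point is to divide each card's CUDA core count by its six GPCs. The core counts below are the publicly listed specs; the one-raster-engine-per-GPC assumption follows the post's own "GPC/raster engine" framing. This is a ratio for illustration, not a performance model.

```python
# Publicly listed CUDA core counts for three cards that all use six GPCs.
# Assumption: one raster engine per GPC, as the post describes.
CARDS = {
    "GTX 1080 Ti": {"gpcs": 6, "cuda_cores": 3584},
    "RTX 2080 Super": {"gpcs": 6, "cuda_cores": 3072},
    "RTX 2080 Ti": {"gpcs": 6, "cuda_cores": 4352},
}

def cores_per_raster_engine(gpcs: int, cuda_cores: int) -> float:
    """CUDA cores sharing each GPC's raster engine (one raster engine per GPC assumed)."""
    return cuda_cores / gpcs

for name, spec in CARDS.items():
    ratio = cores_per_raster_engine(spec["gpcs"], spec["cuda_cores"])
    print(f"{name}: {ratio:.0f} CUDA cores per raster engine")
```

With the GPC count fixed, every added CUDA core sits behind the same six front ends, so the cores-per-raster-engine ratio keeps climbing.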

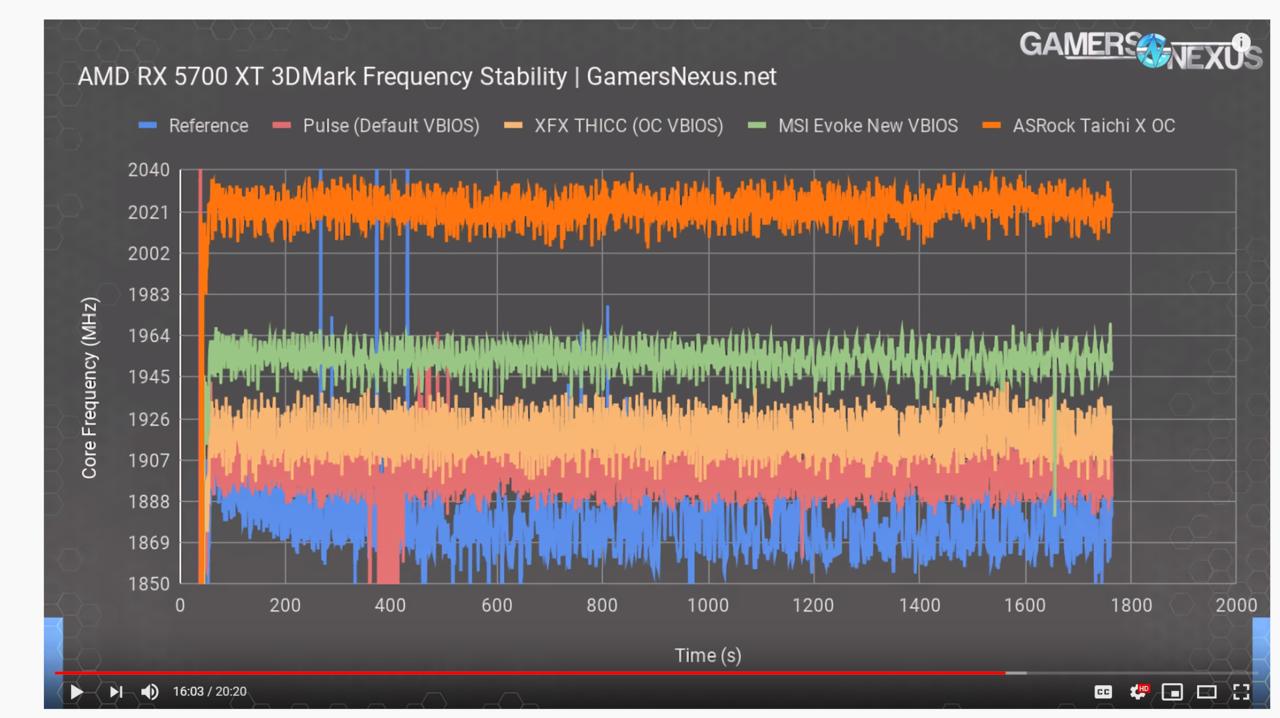

The workaround is to increase the clock speed, which raises the throughput of both the CUDA cores and the raster engines at the same time.
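A toy bottleneck model makes the clock-speed argument concrete. It assumes frame time is set by whichever stage is slower (front end or shaders), which real GPUs only approximate since the stages overlap. The `Gpu` class, the work values, and the 5376-core configuration are all hypothetical illustration, not real chip data.

```python
from dataclasses import dataclass

@dataclass
class Gpu:
    raster_engines: int  # one per GPC (assumption)
    cuda_cores: int
    clock_ghz: float

def frame_time(gpu: Gpu, raster_work: float, shader_work: float) -> float:
    """Toy model: the slower stage sets the frame time. Work units are arbitrary."""
    raster_time = raster_work / (gpu.raster_engines * gpu.clock_ghz)
    shader_time = shader_work / (gpu.cuda_cores * gpu.clock_ghz)
    return max(raster_time, shader_time)

RASTER_WORK, SHADER_WORK = 12.0, 5000.0  # hypothetical, front-end-limited workload

base = frame_time(Gpu(6, 4352, 1.5), RASTER_WORK, SHADER_WORK)
more_cores = frame_time(Gpu(6, 5376, 1.5), RASTER_WORK, SHADER_WORK)   # more cores only
higher_clock = frame_time(Gpu(6, 4352, 1.8), RASTER_WORK, SHADER_WORK) # higher clock only

for label, t in [("base", base), ("more cores", more_cores), ("higher clock", higher_clock)]:
    print(f"{label}: {t:.3f}")
```

In this front-end-limited case, extra cores leave the frame time unchanged, while a higher clock shortens both stages.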

From https://wccftech.com/nvidia-ampere-gpu-geforce-rtx-3080-3070-specs-rumor/

Credit: https://twitter.com/kopite7kimi

- GA100: 8 GPC × 8 TPC × 2 SM, 6144-bit (HBM2)

- GA102: 7 GPC × 6 TPC × 2 SM, 384-bit

- GA103: 6 GPC × 5 TPC × 2 SM, 320-bit

- GA104: 6 GPC × 4 TPC × 2 SM, 256-bit

- GA106: 3 GPC × 5 TPC × 2 SM, 192-bit

- GA107: 2 GPC × 5 TPC × 2 SM, 128-bit
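The rumored configurations can be multiplied out to SM counts. The inputs are the rumor's numbers, not confirmed specs. Treating GPC count as raster-engine count is the same one-per-GPC assumption used above. CUDA core totals are left out because the rumor doesn't give cores per SM.

```python
# Rumored Ampere configs (kopite7kimi via wccftech): (GPCs, TPCs per GPC, bus width in bits)
RUMORED = {
    "GA100": (8, 8, 6144),
    "GA102": (7, 6, 384),
    "GA103": (6, 5, 320),
    "GA104": (6, 4, 256),
    "GA106": (3, 5, 192),
    "GA107": (2, 5, 128),
}
SMS_PER_TPC = 2  # from the "*2SM" term in each rumored config

def sm_count(gpcs: int, tpcs_per_gpc: int) -> int:
    """Total SMs = GPCs x TPCs per GPC x SMs per TPC."""
    return gpcs * tpcs_per_gpc * SMS_PER_TPC

for chip, (gpcs, tpcs, bus) in RUMORED.items():
    # Raster engines assumed equal to GPC count (one per GPC).
    print(f"{chip}: {gpcs} raster engines, {sm_count(gpcs, tpcs)} SMs, {bus}-bit bus")
```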

The rumored GA102 has a 7-GPC design, hence seven raster engine front ends. NVIDIA is not AMD/RTG! Ampere combines higher clock speeds with horizontal GPC expansion.

I plan to buy the next MSI RTX 3080 Ti Gaming X.

AMD would need to glue two RX 5700 XTs (each with dual RDNA shader engines) together to reach a Hawaii-style quad-shader-engine configuration with RDNA.
