Nvidia Corp.'s chief executive officer said this week that the company is designing a broad lineup of solutions for different applications, powered by its next-generation “Pascal” graphics processor architecture and other technologies from the company. Jen-Hsun Huang seems to be impressed by the prospects of the products in the company’s pipeline, but he naturally revealed no details. The CEO advises observers to “wait a little longer” to find out more about them.

“I cannot wait to tell you about the products that we have in the pipeline,” said Jen-Hsun Huang, chief executive officer of Nvidia, at the company’s quarterly conference call with investors and financial analysts. “There are more engineers at Nvidia building the future of GPUs than just about anywhere else in the world. We are singularly focused on visual computing, as you guys know.”

In fact, Nvidia’s “Pascal” graphics processing architecture ought to be impressive, and there are many reasons why the chief exec of Nvidia is excited about it. Thanks to major architectural enhancements, the company’s next-gen GPUs will support numerous new features introduced by the DirectX 12, Vulkan and OpenCL application programming interfaces. Moreover, with more engineers working on GPUs than “just about anywhere else in the world,” Nvidia will probably add a lot of exclusive capabilities to future GPUs.

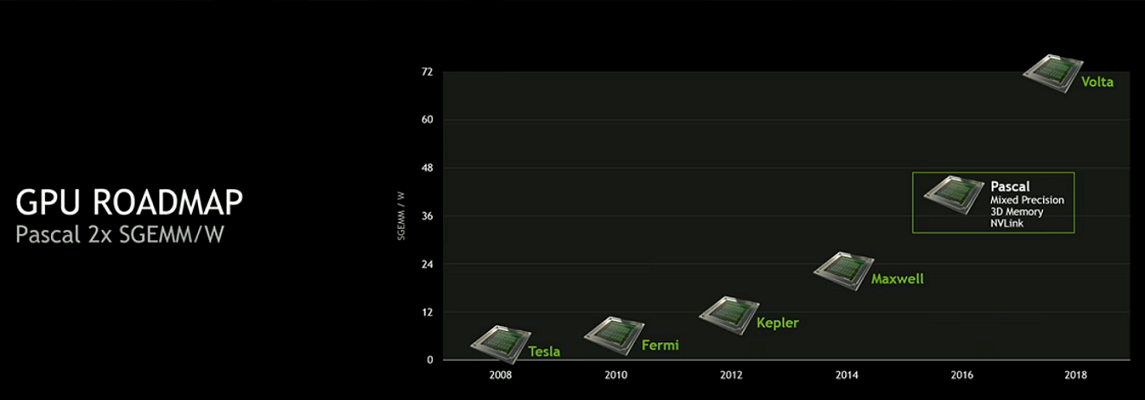

The upcoming PK100 and PK104 graphics processing units will not only feature major architectural innovations, but they will also be made using either 14nm or 16nm manufacturing technology with fin-shaped field-effect transistors (FinFETs). “Pascal” GPUs will be the first graphics chips from Nvidia to be made using an all-new process technology since “Kepler” in 2012. A finer fabrication process should permit Nvidia engineers to considerably increase the number of stream processors and other units inside the company’s future GPUs, dramatically increasing their performance.

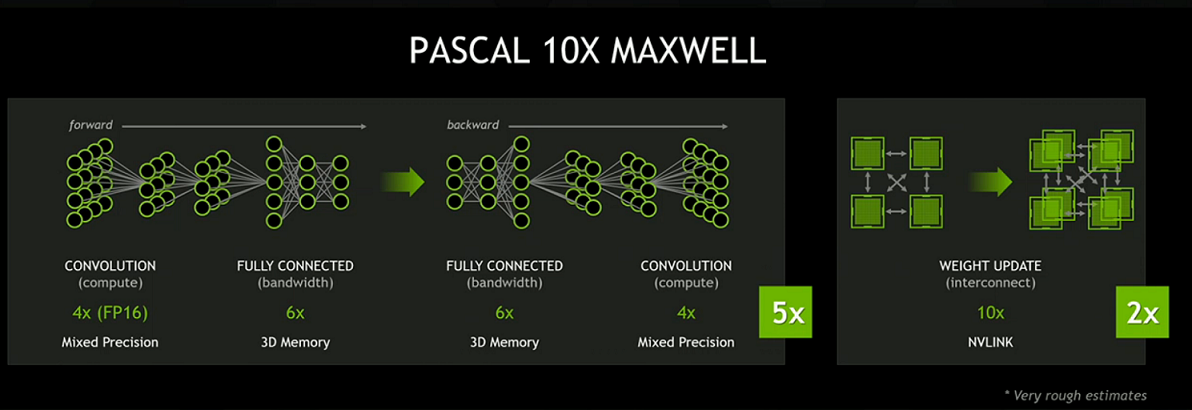

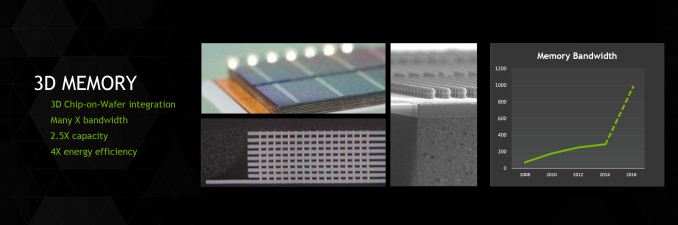

In addition, next-generation graphics processing units from Nvidia will support second-generation stacked high-bandwidth memory (HBM2). HBM2 will let Nvidia and its partners build graphics cards with up to 32GB of onboard memory and 820GB/s to 1TB/s of bandwidth. Performance of such graphics adapters at ultra-high-definition (UHD) resolutions like 4K (3840×2160, 4096×2160) and 5K (5120×2880) should be extremely high.

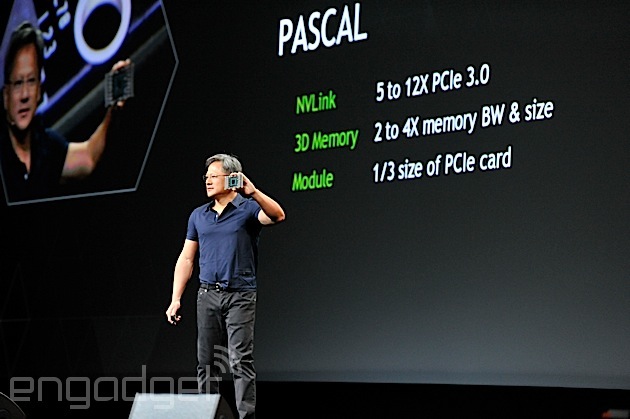

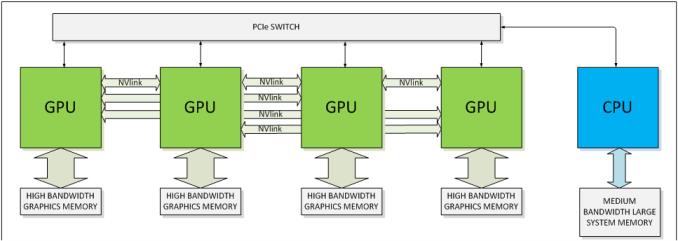

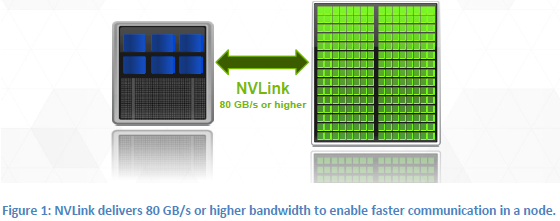

For supercomputers, the “Big Pascal” chip will integrate NVLink interconnection tech with 80GB/s or higher bandwidth, which will significantly increase performance of “Pascal”-based Tesla accelerators in high-performance computing (HPC) applications. Moreover, NVLink could bring major improvements to multi-GPU technologies thanks to increased bandwidth for inter-GPU communications.

Graphics and compute cards based on “Pascal” will offer breakthrough performance, but the new architecture will be used not only for gaming and HPC markets. Nvidia will use its “Pascal” technology for a variety of solutions, including those for mobile and automotive applications.

“We have found over the years to be able to focus on just one thing, which is visual computing, and be able to leverage that one thing across PC, cloud, and mobile, and be able to address four very large markets with that one thing: gaming, enterprise, cloud, and automotive,” said Mr. Huang. “We can do this one thing and now be able to enjoy all and deliver the capabilities to the market in all three major computing platforms, and gain four vertical markets that are quite frankly very exciting.”

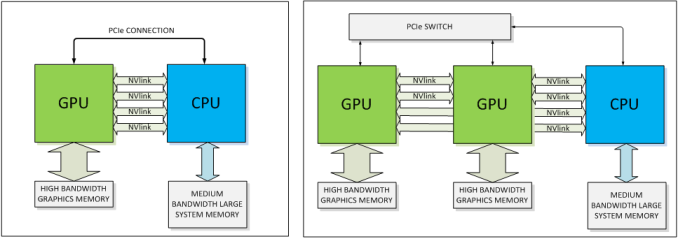

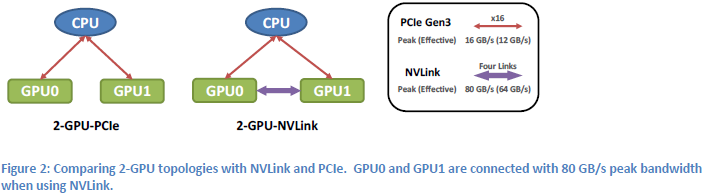

NVLink, in a nutshell, is NVIDIA’s effort to supplant PCI-Express with a faster interconnect bus. From the perspective of NVIDIA, which is looking at what it would take to allow compute workloads to scale better across multiple GPUs, the 16GB/sec made available by PCI-Express 3.0 is hardly adequate, especially when compared to the 250GB/sec+ of memory bandwidth available within a single card. PCIe 4.0 will eventually bring higher bandwidth still, but even that is not enough. As such, NVIDIA is pursuing its own bus to achieve the kind of bandwidth it desires.

The end result is a bus that looks a whole heck of a lot like PCIe, and is even programmed like PCIe, but operates with tighter requirements and a true point-to-point design. NVLink uses differential signaling (like PCIe), with the smallest unit of connectivity being a “block.” A block contains 8 lanes, each rated for 20Gbps, for a combined bandwidth of 20GB/sec. In terms of transfers per second this puts NVLink at roughly 20 gigatransfers/second, as compared to an already staggering 8GT/sec for PCIe 3.0, indicating just how high a frequency this bus is planned to run at.
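The block arithmetic above can be checked with a short sketch. It uses only the figures quoted in this article (8 lanes per block, 20Gbps per lane, 16GB/sec for PCIe 3.0 x16); the function names are hypothetical, and the calculation assumes raw signaling rates with no encoding or protocol overhead.

```python
# Figures quoted in the article; everything else is an illustrative assumption.
LANES_PER_BLOCK = 8      # lanes in one NVLink block
GBPS_PER_LANE = 20       # gigabits per second per lane
PCIE3_X16_GBYTES = 16    # GB/sec quoted for PCI-Express 3.0 x16


def block_bandwidth_gbytes(lanes=LANES_PER_BLOCK, gbps_per_lane=GBPS_PER_LANE):
    """Raw bandwidth of one block in GB/sec (8 bits per byte, overhead ignored)."""
    return lanes * gbps_per_lane / 8


def teamed_bandwidth_gbytes(blocks):
    """Raw bandwidth of several blocks teamed between the same two devices."""
    return blocks * block_bandwidth_gbytes()


if __name__ == "__main__":
    one_block = block_bandwidth_gbytes()
    print(f"one block: {one_block} GB/sec")
    print(f"four teamed blocks: {teamed_bandwidth_gbytes(4)} GB/sec")
    print(f"one block vs PCIe 3.0 x16: {one_block / PCIE3_X16_GBYTES:.2f}x")
```

Four teamed blocks work out to the 80GB/s figure cited earlier for the “Big Pascal” chip.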

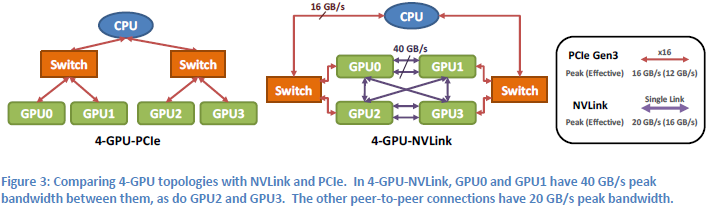

Multiple blocks in turn can be teamed together to provide additional bandwidth between two devices, or those blocks can be used to connect to additional devices, with the number of blocks depending on the SKU. The actual bus is purely point-to-point (no root complex has been discussed), so we’d be looking at processors directly wired to each other instead of going through a discrete PCIe switch or the root complex built into a CPU. This makes NVLink very similar to AMD’s HyperTransport or Intel’s QuickPath Interconnect (QPI), including the NUMA aspects of not necessarily having every processor connected to every other processor.
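To illustrate the NUMA-like consequence of a point-to-point bus, here is a minimal sketch that counts hops between processors in a topology where not every pair is directly wired. The four-GPU ring, the node names and the `hop_count` helper are all hypothetical; the article does not describe any specific topology, nor how traffic would reach a non-adjacent processor.

```python
from collections import deque


def hop_count(links, src, dst):
    """Breadth-first search for the fewest point-to-point hops from src to dst.

    `links` maps each processor to the processors it is directly wired to.
    Returns None if dst cannot be reached.
    """
    seen = {src}
    queue = deque([(src, 0)])
    while queue:
        node, hops = queue.popleft()
        if node == dst:
            return hops
        for neighbour in links.get(node, ()):
            if neighbour not in seen:
                seen.add(neighbour)
                queue.append((neighbour, hops + 1))
    return None


# Hypothetical ring: each GPU is wired to two neighbours, not to all three peers.
RING = {
    "gpu0": ["gpu1", "gpu3"],
    "gpu1": ["gpu0", "gpu2"],
    "gpu2": ["gpu1", "gpu3"],
    "gpu3": ["gpu2", "gpu0"],
}

if __name__ == "__main__":
    print(hop_count(RING, "gpu0", "gpu1"))  # directly wired neighbour
    print(hop_count(RING, "gpu0", "gpu2"))  # opposite side of the ring
```

In this hypothetical ring, a neighbour is one hop away while the GPU on the opposite side is two, which is the kind of non-uniform distance the NUMA comparison refers to.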

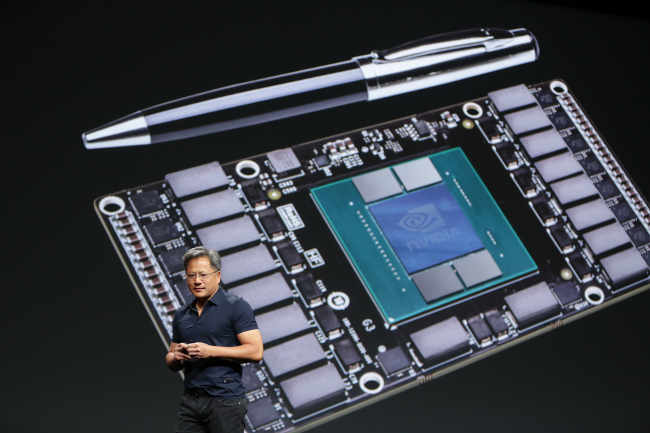

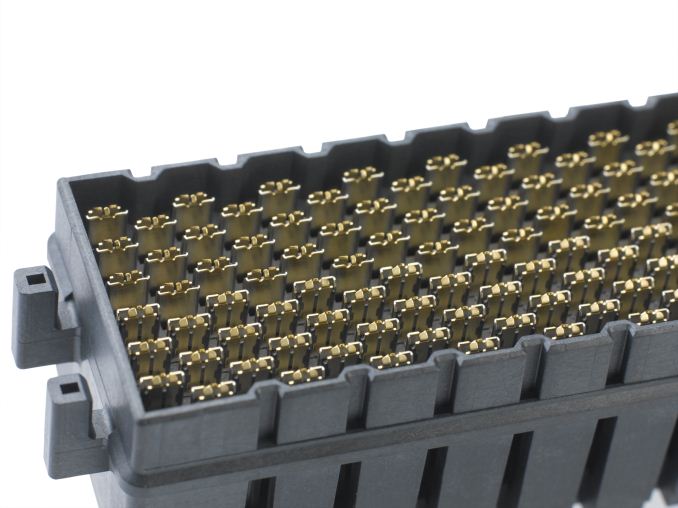

But the rabbit hole goes deeper. To pull off the kind of transfer rates NVIDIA wants to accomplish, the traditional PCI/PCIe style edge connector is no good; if nothing else the lengths that can be supported by such a fast bus are too short. So NVLink will be ditching the slot in favor of what NVIDIA is labeling a mezzanine connector, the type of connector typically used to sandwich multiple PCBs together (think GTX 295). We haven’t seen the connector yet, but it goes without saying that this requires a major change in motherboard designs for the boards that will support NVLink. The upside of this however is that with this change and the use of a true point-to-point bus, what NVIDIA is proposing is for all practical purposes a socketed GPU, just with the memory and power delivery circuitry on the GPU instead of on the motherboard.

NVIDIA’s Pascal test vehicle is one such example of what a card would look like. We cannot see the connector itself, but the basic idea is that the card will lie flat on a motherboard, parallel to the board (instead of perpendicular like PCIe cards), with each Pascal card connected to the board through the NVLink mezzanine connector. Besides reducing trace lengths, this has the added benefit of allowing such GPUs to be cooled with CPU-style cooling methods (we’re talking about servers here, not desktops) in a space-efficient manner. How many NVLink mezzanine connectors are available would of course depend on how many the motherboard design calls for, which in turn will depend on how much space is available.

One final benefit NVIDIA is touting is that the new connector and bus will improve both energy efficiency and energy delivery. When it comes to energy efficiency NVIDIA is telling us that per byte, NVLink will be more efficient than PCIe – this being a legitimate concern when scaling up to many GPUs. At the same time the connector will be designed to provide far more than the 75W PCIe is spec’d for today, allowing the GPU to be directly powered via the connector, as opposed to requiring external PCIe power cables that clutter up designs.

With all of that said, while NVIDIA has grand plans for NVLink, it’s also clear that PCIe isn’t going to be completely replaced anytime soon on a large scale. NVIDIA will still support PCIe – in fact the blocks can talk PCIe or NVLink – and even in NVLink setups there are certain command and control communiques that must be sent through PCIe rather than NVLink. In other words, PCIe will still be supported across NVIDIA's product lines, with NVLink existing as a high performance alternative for the appropriate product lines. The best case scenario for NVLink right now is that it takes hold in servers, while workstations and consumers would continue to use PCIe as they do today.

Meanwhile, though NVLink won’t even be shipping until Pascal in 2016, NVIDIA already has some future plans in store for the technology. Along with a GPU-to-GPU link, NVIDIA’s plans include a more ambitious CPU-to-GPU link, in large part to achieve the same data transfer and synchronization goals as with inter-GPU communication. As part of the OpenPOWER consortium, NVLink is being made available to POWER CPU designs, though no specific CPU has been announced. Meanwhile the door is also left open for NVIDIA to build an ARM CPU implementing NVLink (Denver perhaps?) but again, no such product is being announced today. If it did come to fruition though, then it would be similar in concept to AMD’s abandoned “Torrenza” plans to utilize HyperTransport to connect CPUs with other processors (e.g. GPUs).

Finally, NVIDIA has already worked out some feature goals for what they want to do with NVLink 2.0, which would come on the GPU after Pascal (which by NV’s other statements should be Volta). NVLink 2.0 would introduce cache coherency to the interface and processors on it, which would allow for further performance improvements and the ability to more readily execute programs in a heterogeneous manner, as cache coherency is a precursor to tightly shared memory.

Wrapping things up, with an attached date for Pascal and numerous features now billed for that product, NVIDIA looks to have set the wheels in motion for developing the GPU they’d like to have in 2016. The roadmap alteration we’ve seen today is unexpected to say the least, but Pascal is on much more solid footing than old Volta was in 2013. In the meantime we’re still waiting to see what Maxwell will bring NVIDIA’s professional products, and it looks like we’ll be waiting a bit longer to get the answer to that question.

Nvidia: ‘Pascal’ architecture’s NVLink to enable 8-way multi-GPU capability

The compute performance of modern graphics processing units (GPUs) is tremendous, but so are the needs of modern applications that use such chips to display beautiful images or perform complex scientific calculations. Nowadays it is practically impossible to install more than four GPUs into a computer box and get adequate performance scaling. But brace yourself, as Nvidia is working on eight-way multi-GPU technology.

The vast majority of personal computers today have only one graphics processor, but many gaming PCs integrate two graphics cards for increased framerate. Enthusiasts who want unbeatable performance in select games and benchmarks opt for three-way or four-way multi-GPU setups, but these are pretty rare because performance scales poorly beyond two GPUs. Professionals who need high-performance GPUs for simulations, deep learning and other applications also benefit from four graphics processors and could use even more GPUs per box. Unfortunately, that is virtually impossible because of limitations imposed by today’s PCI Express and SLI technologies. However, Nvidia hopes that with the emergence of the code-named “Pascal” GPUs and the NVLink bus, it will be considerably easier to build multi-GPU machines.

Today even the top-of-the-range Intel Core i7-5960X processor has only 40 PCI Express 3.0 lanes (up to 40GB/s of bandwidth) and thus can connect up to two graphics cards using a PCIe 3.0 x16 bus or up to four cards using a PCIe 3.0 x8 bus. In these two cases, the maximum bandwidth available for GPU-to-GPU communications will be limited to 16GB/s or 8GB/s, respectively (useful bandwidth will be around 12GB/s and 6GB/s), in the best-case scenarios, since GPUs need to communicate with the CPU too.
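The lane budget above can be sketched in a few lines. The 40-lane total and the 16/8GB/s and 12/6GB/s figures come from the paragraph above; the roughly 1GB/s-per-lane rate and the 75% useful fraction are assumptions inferred from those figures, and the function names are hypothetical.

```python
CPU_LANES = 40           # PCIe 3.0 lanes quoted for the Core i7-5960X
GBYTES_PER_LANE = 1.0    # assumption: ~1GB/s per lane, implied by 40 lanes ~ 40GB/s
USEFUL_FRACTION = 0.75   # assumption: implied by 12GB/s of 16GB/s and 6GB/s of 8GB/s


def fits_lane_budget(cards, lanes_per_card, cpu_lanes=CPU_LANES):
    """True if `cards` graphics cards at the given link width fit in the CPU's lanes."""
    return cards * lanes_per_card <= cpu_lanes


def link_bandwidth(lanes_per_card):
    """Return (peak, useful) bandwidth in GB/s for one card's PCIe link."""
    peak = lanes_per_card * GBYTES_PER_LANE
    return peak, peak * USEFUL_FRACTION


if __name__ == "__main__":
    for cards, width in [(2, 16), (3, 16), (4, 8)]:
        peak, useful = link_bandwidth(width)
        print(f"{cards} cards at x{width}: fits={fits_lane_budget(cards, width)}, "
              f"peak={peak}GB/s, useful~{useful}GB/s")
```

Note that the lane count alone is not the only limit in practice: the four-card ceiling quoted above also reflects slot and platform constraints that this sketch does not model.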

In a bid to considerably improve communication speed between GPUs, Nvidia will implement support for its proprietary NVLink bus in its next-generation “Pascal” GPUs. Each NVLink point-to-point connection will support 20GB/s of bandwidth in both directions simultaneously (16GB/s effective bandwidth in each direction), and each high-end “Pascal” GPU will support at least four such links. In a system with NVLink, two GPUs would get a total peak bandwidth of 80GB/s (64GB/s effective) per direction between them. Moreover, PCI Express bandwidth would be preserved for CPU-to-GPU communications. In the case of a four-GPU sub-system, graphics processors would get up to 40GB/s of bandwidth to communicate with each other.
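As a rough check of these numbers, the sketch below multiplies the per-link figures by the number of links teamed between a pair of GPUs. The 20GB/s peak and 16GB/s effective per-link rates are from the paragraph above; the helper name is hypothetical, and treating the four-GPU case as two links per peer is an assumption that happens to reproduce the quoted 40GB/s, not a topology confirmed by Nvidia.

```python
PEAK_PER_LINK = 20       # GB/s per direction, per NVLink connection (from the article)
EFFECTIVE_PER_LINK = 16  # GB/s effective per direction (from the article)
LINKS_PER_GPU = 4        # "at least four" links per high-end Pascal GPU


def pair_bandwidth(links_between_pair):
    """Return (peak, effective) GB/s per direction between two GPUs."""
    return (links_between_pair * PEAK_PER_LINK,
            links_between_pair * EFFECTIVE_PER_LINK)


if __name__ == "__main__":
    # Two GPUs: all four links teamed between the pair.
    print("two GPUs:", pair_bandwidth(LINKS_PER_GPU))
    # Four GPUs: assumed split of two links per directly connected peer.
    print("four GPUs (assumed 2 links per peer):", pair_bandwidth(2))
```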

According to Nvidia, NVLink is projected to deliver up to two times higher performance in many applications simply by replacing the PCIe interconnect for communication among peer GPUs. It should be noted that in an NVLink-enabled system, CPU-initiated transactions such as control and configuration are still directed over a PCIe connection, while any GPU-initiated transactions use NVLink, which preserves the PCIe programming model.

Additional bandwidth provided by NVLink could allow one to build a personal computer with up to eight GPUs. However, to make it useful in applications beyond technical computing, Nvidia will have to find a way to efficiently use eight graphics cards for rendering. Since performance scaling beyond two GPUs is generally low, it is unlikely that eight-way multi-GPU technology will actually make it to the market. However, if Nvidia manages to improve efficiency of current multi-GPU technologies in general by replacing SLI [scalable link interface] with NVLink, that could further boost popularity of the company’s graphics cards among gamers.

Performance improvement could be even more significant in systems that completely rely on NVLink instead of PCI Express. IBM plans to add NVLink to select Power microprocessors for supercomputers and the technology will be extremely useful for high-performance servers powered by Nvidia Tesla accelerators.
