@scatteh316: I distinctly remember PS3 having numerous issues running Skyrim. The game would lock up and lag like crazy. Xbox 360 never had any of those issues.

The Cell and its hidden secrets

@scatteh316: You mean like when every multiplatform game under the sun ran better on the Xbox 360? A handful late in the gen that ran better on the PS3 just proves my point that the thing was too complicated for developers to bother wasting the time to make it perform as well as the 360 version.

The only games where the devs bothered were the exclusives. Those were few and far between. Naughty Dog pushing the PS3 to its absolute limits is the exception, not the rule. The real world proved that the Cell and the entire PS3 was a pain in the ass to develop on and hindered development on the platform constantly. It rarely translated to better performance in practice because it was too difficult to code for in the little amount of time game devs have to build and launch a game.

What's the point of having some super powerful chip if only a handful of games over the entire life of the machine are going to use it? There is no logic behind that. Winning some internet argument on which is technically more powerful doesn't mean jack shit when the 360, which launched a year earlier for $100 less, had comparably good-looking games throughout its entire lifespan. It's a pathetic waste of time that cost the PS3 a lot of potential sales early on to the 360 because of the bad PR gained from its noticeably bad ports.

Why do you think they dumped that piece of crap in favor of x86?

Once again you are wrong..... multiplatform games HAD to use Cell to pick up the slack so RSX had enough head room for GPU tasks.

Culling, lighting, skinning.... plus a crap load more was moved from RSX to Cell to enable PS3 to even get into the same ballpark for graphics and performance as the 360 on multiplatform games.

This is WELL DOCUMENTED in various developer white papers.

So yes Cell DID bring real world performance improvements to PS3 in pretty much every game.

there are no hidden secrets. the hidden power has been revealed and it turned out to be a bit meh in the end. it never pulled off any sort of massive leap in the visual department over the 360 (and what it did can be put down more to the skill of developers working on one set of hardware for a decade than to any sort of technical greatness of the hardware itself).

the entire approach to the PS3's design was incorrect and sony paid a high price for it. it was so wrong that the head of PS lost his job and they scrapped the entire thing for the PS4. so then we got the vita and PS4, which are much, much better gaming devices.

the cell itself was interesting but, due to the advent of GPGPU, it was an evolutionary dead end. the kind of number crunching the SPEs could do...well, it turns out GPUs do it a lot better. this idea was not inspired by the cell either. it was in the pipeline for a long, long time.

in a modern machine with a modern GPU architecture, using the cell (even an updated one with more SPEs) would simply make no sense. the things the cell used to do: some texturing, post process AA and so on...that's stuff for the GPU now.

@tormentos: lol. A few run better, and those often ran better because of longer dev time dedicated to the PS3 version. You are the guy who, when Oblivion came out, ignored the fact that it had an extra year of dev time and that the 360 version simply needed a patch to match the PS3 version... The version which still to this day goes sub 10 fps even after the 14 patches it's received.

There is really only one point that needs to be made: the Cell is one of the chief reasons Sony is still in dire straits financially. They wasted a large amount of funds on Cell only to learn that no one wanted anything to do with it, which is why nothing uses it.

You can argue hidden power, but when a developer has to sacrifice their firstborn just to access that power intermittently, the added dev time isn't worth it. "Devs had to juggle effects often because the SPUs would only handle certain effects singularly, so the hidden power was intermittent."

Several run better, and it is obvious that development time was a problem. The PS3 took more time due to its more complex hardware, in an industry where timing is crucial for many games; games were taken up to a point and not pushed further on PS3, as simple as that.

The PS3 was more powerful, period. The combination of Cell + RSX > Xenon + Xenos; what the 360 had was ease of use.

GT6: 1440x1080, day and night and weather.

Forza 4: 1280x720, no day/night racing, no weather because of the frame rate impact.

Unfortunately for the cows that played games (not you, since you don't), the OVERWHELMING majority of games performed better on the 360. It wasn't even close, El Tormented, but keep defending Sony at all costs. It only makes you look even more pathetic.

A gaming PC system comes with a CPU and a discrete GpGPU. An AMD APU includes a CPU and a GpGPU.

GpGPU handles data-intensive parallel processing.

A long time ago, the DSP was a separate chip.

The decision to combine DSP and CPU is mostly for cost and latency reduction reasons. The only buffoon is you.

The PC did have a quad-SPU based add-on PCI card, and it flopped since data-intensive parallel processing is already handled by the GpGPU.

PS3 was released around November 2006, NOT 2005.

Pure 3.2 GHz clock speed argument is meaningless, MORON.

That is now, after APUs were created. When Cell came out there was no APU, you brain-dead fool.

Cell is a CPU, and all comparison with other systems is done CPU vs CPU, regardless of the SPUs.

No, you are the fool. The G80 is a GPU, not a CPU, not an APU; your comparison is total bullshit.

The PS3 was released around 2006; the CPU was ready in 2005, ass.

IBM, Toshiba and Sony have unveiled software developer kits for the Cell processor, a move aimed at ramping up the volume of applications and utilities for the multi-core chip.

https://www.electronicsweekly.com/news/products/micros/cell-processor-ready-any-takers-2005-11/

BY 2005 it was ready.

How the fu** is it meaningless? What GPU has 3.2 GHz speed? Since you are comparing Cell to the G80, Cell must be a GPU or at least like one, so how come the G80 was what, 575 MHz? The DSPs had what, 5 times that speed? You are comparing the G80 vs Cell because you can't fu**ing find a CPU that is even close to Cell in 2006.

Oh look, how pathetic: using a what, 2014 APU vs a 2005 Cell? See why I say you are a dishonest joke of a poster? Since you can't find a 2006 CPU that beat Cell in what it does, you use a 2014 APU.

By the way, that APU has 128 SP at 600 MHz... lol. Cell's DSPs are clocked at 3.2 GHz, once again proving my point: Cell is not a GPU and should not be compared to one, as DSPs are not GPUs, dishonest joke.

The Cell doesn't have any DSPs (although DSP functions can be emulated).

The SPE cores aren't "bad" at branch prediction. They don't have any branch prediction at all. Branch prediction has to be emulated (predicted) in the compiler which isn't nearly as efficient as hardware branch prediction.

You are actually right, it doesn't, but the SPUs are DSP-like, which is why people call them that.

That's the reason why I say it is bad for branching, yet jocker in DF claims that if you want to do branching you move it to the SPU.

Yes, the PS3 was powerful but too complicated. In fact, time to triangle for a game was far, far longer on PS3 than even on PS2, which is incredible since the PS2 used 2 custom-made processors, the EE and GS, whereas on the PS3 only Cell was custom, since the RSX was taken from PC.

The Cell is overrated, and it served as a crutch for PS3's weak GPU. The Xbox 360 had a much better GPU, more RAM and was a better console to program for overall.

That's the point of the discussion: the Power of The Cell was never fully unlocked, which makes us wonder what kind of secrets may be hidden within the CPU.

It's a pointless discussion for a product that's no longer in use in any of the current gen consoles. It's history and should stay that way.

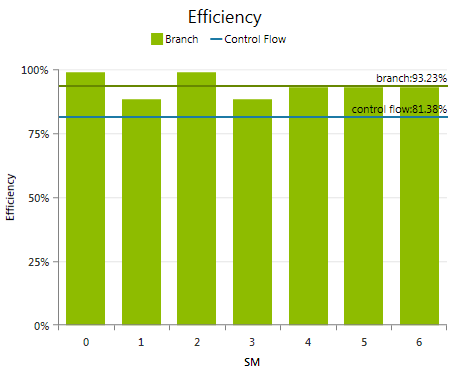

RSX's branch was very bad.

SPU's branching was a bit better than very bad.

Neither of those devices even support branch prediction.

You are using words you don't know.

Refer to static branch.

SPU has static branch prediction and prepare-to-branch operations. https://www.research.ibm.com/cell/cell_compilation.html

You are using words you don't know.

-------------

http://docs.nvidia.com/gameworks/content/developertools/desktop/analysis/report/cudaexperiments/kernellevel/branchstatistics.htm

Note why NVIDIA has PhysX CUDA.

/facepalm holyfuckingfuckfuck

Branching and predicting the branch are two separate functions.

Branching is like walking through a maze.

Branch prediction is like calculating the most efficient path from the beginning to the end of a changing maze.

Saying that SPUs have branch prediction is like saying that you have a GPS because you printed out instructions to grandma's house from Mapquest.com.

Again, IBM claims SPU has static branch prediction and prepare-to-branch operations.

Static prediction is the simplest branch prediction technique because it does not rely on information about the dynamic history of code executing. Instead it predicts the outcome of a branch based solely on the branch instruction.

Dynamic branch prediction uses information about taken or not taken branches gathered at run-time to predict the outcome of a branch.
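To make the difference between those two definitions concrete, here is a minimal sketch in Python. It is a generic textbook model and not a description of how the SPU or any other specific chip works: the static rule used here (backward taken, forward not taken) and the 2-bit saturating counter are standard examples I am assuming for illustration, and the branch trace is made up.

```python
def static_btfn(is_backward):
    """Static rule: guess from the instruction alone.
    Backward branches (loops) are predicted taken, forward ones not taken."""
    return is_backward


class TwoBitCounter:
    """Dynamic predictor: a saturating counter in 0..3 that learns at run time.
    Predicts taken when the counter is 2 or 3."""

    def __init__(self):
        self.state = 1  # start in "weakly not taken"

    def predict(self):
        return self.state >= 2

    def update(self, taken):
        if taken:
            self.state = min(3, self.state + 1)
        else:
            self.state = max(0, self.state - 1)


def accuracy_static(outcomes, is_backward):
    """Fraction of outcomes the fixed static guess gets right."""
    guess = static_btfn(is_backward)
    return sum(guess == taken for taken in outcomes) / len(outcomes)


def accuracy_dynamic(outcomes):
    """Fraction of outcomes the 2-bit counter gets right while it learns."""
    predictor = TwoBitCounter()
    hits = 0
    for taken in outcomes:
        hits += predictor.predict() == taken
        predictor.update(taken)
    return hits / len(outcomes)


# Hypothetical trace: one forward branch, taken 90 times, then not taken 10 times.
trace = [True] * 90 + [False] * 10
```

On this made-up trace the static rule is right 10% of the time, because it can never change its guess for a forward branch, while the counter is right 97% of the time. A different trace can easily flip the gap, so treat the numbers as an illustration of the mechanism only.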

A two-level adaptive predictor with globally shared history buffer and pattern history table is called a "gshare" predictor if it xors the global history and branch PC, and "gselect" if it concatenates them. Global branch prediction is used in AMD processors, and in Intel Pentium M, Core, Core 2, and Silvermont-based Atom processors
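The xor versus concatenate distinction is easier to see as index arithmetic. This is a rough sketch under my own assumptions: the function names and bit widths are hypothetical, and real predictors usually drop the low alignment bits of the PC first, which is skipped here.

```python
def gshare_index(pc, history, bits):
    """gshare: xor the branch PC with the global history, keep `bits` bits."""
    mask = (1 << bits) - 1
    return (pc ^ history) & mask


def gselect_index(pc, history, pc_bits, hist_bits):
    """gselect: concatenate the low PC bits with the low history bits."""
    pc_part = pc & ((1 << pc_bits) - 1)
    hist_part = history & ((1 << hist_bits) - 1)
    return (pc_part << hist_bits) | hist_part


# Example with a 4-bit table index: PC bits 1100, global history 1010.
idx_share = gshare_index(0b1100, 0b1010, bits=4)                    # 0b0110
idx_select = gselect_index(0b1100, 0b1010, pc_bits=2, hist_bits=2)  # 0b0010
```

Both produce an index into the same size of pattern history table; gshare mixes all 4 bits of each input, while gselect only has room for 2 bits of each.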

The Intel Core i7 has overriding branch prediction with two branch target buffers and possibly two or more branch predictors.

The AMD Ryzen processor, previewed on December 13th, 2016, revealed its newest processor architecture using a neural network based branch predictor to minimize prediction errors.

Read https://en.wikipedia.org/wiki/Branch_predictor

Facepalm yourself.

NVIDIA also had G80 engineering samples prior to its release, you stupid cow. NVIDIA made sure their G80 was released a few weeks ahead of PS3 and fought back against the GFLOPS PR war that IBM started in the PC industry.

Again, the pure clock speed argument is useless for parallel workloads, i.e. what's important is clock speed and instruction count per cycle.

PS3, which includes CELL, is competing against a PC gaming system which includes G80. The record shows CELL died while the NVIDIA CUDA idea lives on as the scaled-up NVIDIA GP100 and numerous Maxwell/Pascal GPUs, which include the Tegra X1 SoC that powers the Nintendo Switch.

The facts: an APU is made up of a CPU and a GpGPU. Again, the main reasons for the APU are mostly cost and latency reduction.

The main differences between a CUDA GpGPU and the SPU are the following:
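A back-of-the-envelope sketch of that point, using clock figures quoted in this thread. The per-cycle numbers are my assumptions: 8 single-precision flops per cycle per SPE (a 4-wide fused multiply-add), 2 flops per cycle per G80 stream processor (a multiply-add), 7 SPEs and 128 stream processors. These are theoretical peaks, so they say nothing about what real game code achieved.

```python
def peak_gflops(units, clock_ghz, flops_per_cycle):
    """Theoretical peak: parallel units x clock (GHz) x flops per unit per cycle."""
    return units * clock_ghz * flops_per_cycle


# Assumed figures, see the note above; not measured results.
cell_spes = peak_gflops(units=7, clock_ghz=3.2, flops_per_cycle=8)
g80_shaders = peak_gflops(units=128, clock_ghz=1.35, flops_per_cycle=2)

# The part with the far lower clock still has the higher peak,
# because it has many more units working in parallel.
g80_wins_on_peak = g80_shaders > cell_spes
```

Under these assumptions the SPEs come out at roughly 179 GFLOPS and the G80 shader array at roughly 346 GFLOPS, even though 3.2 GHz is more than double 1.35 GHz.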

1. GpGPU has thousands of hyper-threads.

2. GpGPU has thousands of registers. The RISC camp talks about the superiority of 32 to 128 registers, while the GpGPU camp has thousands of registers. The GpGPU camp took the RISC camp's register-count narrative to its extreme.

3. GpGPU has many load-store units, aka TMUs (texture mapping units), far more than CELL's 7 units from 7 SPUs. GpGPU's TMUs have very robust gather/scatter functions.

4. GpGPU has additional texture filter integer/floating point processors for each TMU. Each TMU has compression/decompression processors. GpGPU TMUs have complex functions, which runs contrary to the RISC camp's simple instruction ideology.

5. GpGPU has decoupled hyper-threading from load fetch tasks. SPU doesn't have hyper-threading to switch out stalled threads waiting for data fetch.

6. GpGPU has additional load-store units from ROPs.

7. GpGPU's ROPs have complex built-in functions such as blend, alpha, MSAA, compression/decompression, etc. GpGPU ROPs have complex functions, which runs contrary to the RISC camp's simple instruction ideology.

8. GpGPU's TMUs and ROPs have powerful move units.

9. GpGPU is optimized for 3D handling with Z-Depth resolve processing.
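Point 5 in the list above, switching out threads that are stalled on a data fetch, can be sketched with a toy model. The model and its numbers are hypothetical: every thread is assumed to compute for a fixed number of cycles, then wait a fixed number of cycles for memory, and switching threads is assumed to be free.

```python
def utilization(threads, compute_cycles, stall_cycles):
    """Fraction of cycles the execution units stay busy when `threads`
    identical threads take turns; a stalled thread is swapped out for free."""
    busy = threads * compute_cycles
    period = compute_cycles + stall_cycles
    return min(1.0, busy / period)


# Hypothetical workload: 10 cycles of math, then a 90 cycle wait for data.
one_thread = utilization(1, compute_cycles=10, stall_cycles=90)    # 10% busy
four_threads = utilization(4, compute_cycles=10, stall_cycles=90)  # 40% busy
ten_threads = utilization(10, compute_cycles=10, stall_cycles=90)  # fully busy
```

With one thread the units sit idle 90% of the time; with enough threads in flight the wait is hidden completely. Real hardware has switching costs and uneven stalls, so this only shows the direction of the effect.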

The only CPU inside the CELL is the PPE, NOT the SPUs, and it's a shit CPU. G80 can smash the SPUs on the workloads that matter for 3D gaming.

AMD Jaguar is effectively a slightly cut-down K8 with 128-bit AVX/SSE4.x units, hence the technology is not 100 percent "new". AMD copy-and-pasted 8 Jaguar cores.

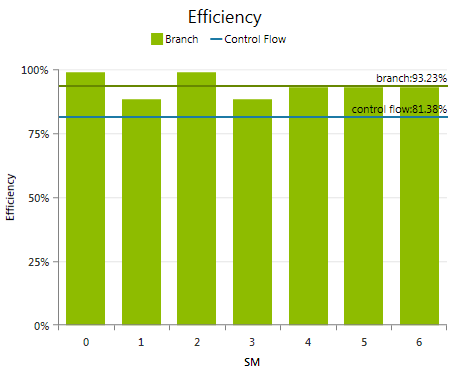

Wow, it took you a long time to read through the wiki article - and you still didn't learn anything :)

You claimed SPU doesn't have "branch prediction" while IBM claimed SPU has static branch prediction. Try again.

The reason why I use a wiki source is due to your failure with the basics.

@ronvalencia: Cell definitely has branch prediction, but it is difficult to code for due to how advanced the architecture is. You both are wrong and right in a sense.

Sorry, you are wrong. CELL's in-order processing with static branch prediction design is worse than the Intel Atom "Bonnell" architecture.

CELL is closer to a 1st gen PowerPC 601 with a 128-bit AltiVec unit and a very long pipeline for high clock speed.

Think of a Pentium 4 without out-of-order processing and with worse branch prediction technology, but with a 128-bit AltiVec unit, copied 8 times on a chip, where one of the processing cores is incompatible with the other 7 processor cores.

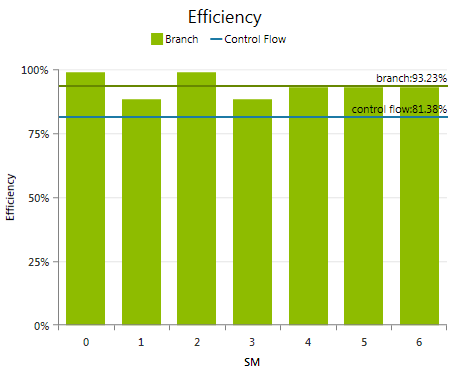

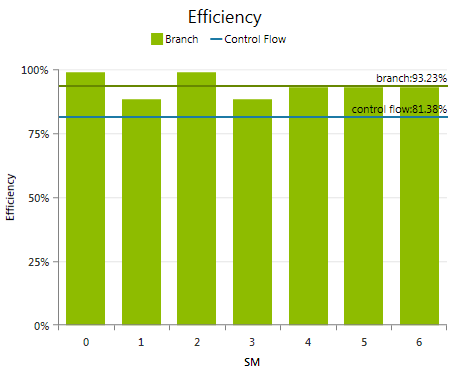

You are wasting all this time and you don't even bother to read what I wrote:

"They don't have any branch prediction at all. Branch prediction has to be emulated (predicted) in the compiler"

Static branch prediction is monolithically compiled into the binary.

SPU has static branch prediction hardware.

The assertion "They don't have any branch prediction at all. Branch prediction has to be emulated (predicted) in the compiler" is fake news.

The facts: CELL's SPU has neither dynamic branch prediction nor any more advanced branch prediction design.

There are other design problems with IBM's SPU that resulted in inferior IPC relative to AMD Jaguar.

@ronvalencia said: NVIDIA also had G80 engineering samples prior to its release, you stupid cow. NVIDIA made sure their G80 was released a few weeks ahead of PS3 and fought back against the GFLOPS PR war that IBM started in the PC industry.

OK, this is all I am going to say to you, since it is easy to see that you don't have the slightest comprehension of the English language and you simply can't follow an argument. Not only that, you invent wars between IBM and Nvidia, which is a total joke: the PS3 has a damn Nvidia GPU, you freaking MORON.

1-Cell was ready in 2005. So I am right.

2-Cell is a CPU with DSP-like processors as well.

3-DSPs are not GPUs.

4-CPUs are not GPUs.

5-GPUs don't have 3.2 GHz speed; in fact, in 2006 the top-of-the-line GPU was about halfway to 1 GHz: the 8800 GTX was 575 MHz.

6-Kabini is a relatively new APU; comparing it with a 2005 design and claiming some kind of victory is a joke.

LINK me to a 2006 CPU beating Cell.

1. PS3 was NOT available during 2005 and your argument is a red herring.

2. Integration is for cost and latency reduction which is important for game consoles.

3. GpGPU can do DSP work.

4. So what? Integration is for cost and latency reduction.

5. Pure clock speed argument is useless. The 8800 GTX's stream processor clock speed can reach 1350 MHz while the fixed-function GPU hardware stays at 575 MHz. You don't know shit about PC hardware.

6. Kabini's Jaguar (dual x86 instructions per cycle, out-of-order processing) is effectively a slightly cut-down K8 (3 x86 instructions per cycle, out-of-order processing) with 128-bit SSE4.x/AVX units. An x86 instruction can decode to two or more RISC instructions, i.e. x86's instruction compression advantage, hence increasing the effective work per cycle when compared to pure RISC competitors. AMD was in the process of taking over ATI around year 2005. Jaguar has gimped x87/MMX/3DNow legacy functions to help reduce size.

X87/MMX/3DNow are not important for X86-64 specs.

A gaming PC system with a Core 2 Duo and a G80 can beat the PS3 system.

There's nothing new about NVIDIA CUDA processors reaching 1.5 GHz clock speed. My MSI Gaming 980 Ti easily reached a 1.5 GHz overclock with 2816 stream processors, while the 1080 Ti's automatic stealth overclock reached 1.8 GHz with 3584 stream processors. AMD has had about 11 years to prepare for NVIDIA's clock speed advantage and they still failed.

That's the point of the discussion: the Power of The Cell was never fully unlocked, which makes us wonder what kind of secrets may be hidden within the CPU.

it was. GG and naughty dog had it mostly tapped out with killzone 2 and uncharted 2. it was pretty much entirely maxed out with U3 and KZ3.

the jump from U2 to U3 was small. the jump from U3 to the last of us was negligible. games like killzone 3, GT6 and the last of us...that's the cell maxed out. there is no more hidden power.

1. PS3 was NOT available during 2005 and your argument is a red herring.

2. Integration is for cost and latency reduction which is important for game consoles.

3. GPGPU can do DSP work.

4. So what? Integration is for cost and latency reduction.

5. A pure clock speed argument is useless. The 8800 GTX's stream processor clock can reach 1350 MHz while the fixed-function GPU hardware stays at 575 MHz. You don't know shit about PC hardware.

6. Kabini's Jaguar (out-of-order, two x86 instructions per cycle) is effectively a slightly cut-down K8 (out-of-order, three x86 instructions per cycle) with 128-bit SSE4.x/AVX units. One x86 instruction can decode into two or more RISC-like operations, i.e. x86 has an instruction-compression advantage, so each instruction counted in IPC does more effective work than on pure RISC competitors. AMD was in the process of taking over ATI around 2005. Jaguar has cut-down x87/MMX/3DNow legacy functions to help reduce size.

x87/MMX/3DNow are not important for the x86-64 spec.

A gaming PC with a Core 2 Duo and a G80 can beat a PS3.

There's nothing new about NVIDIA CUDA processors reaching 1.5 GHz. My MSI Gaming 980 Ti easily reached a 1.5 GHz overclock with 2816 stream processors, while the 1080 Ti's automatic stealth overclock reached 1.8 GHz with 3584 stream processors. AMD has had about 11 years to prepare for NVIDIA's clock speed advantage and they still failed.
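
To put the clock-speed points in this post into numbers, here is a rough sketch of the usual peak-throughput arithmetic. It assumes each stream processor retires one multiply-add (2 FLOPs) per shader clock, which is the common convention for quoting peak FP32 rates and is not something the post states; the name `peak_gflops` is made up for illustration. The unit counts and clocks are the ones quoted in the post.

```python
def peak_gflops(units, shader_clock_mhz, flops_per_unit_per_clock=2):
    """Theoretical peak in GFLOPS: units x clock x FLOPs per unit per clock.

    Assumption: 2 FLOPs per unit per clock (one multiply-add).
    """
    return units * shader_clock_mhz * flops_per_unit_per_clock / 1000


# Figures quoted in the post.
gtx_980_ti = peak_gflops(2816, 1500)   # overclocked 980 Ti
gtx_1080_ti = peak_gflops(3584, 1800)  # boosting 1080 Ti

# On the 8800 GTX the shader domain (1350 MHz) runs faster than the
# fixed-function core domain (575 MHz), so quoting 575 MHz understates
# the programmable units' clock by this factor.
shader_to_core_ratio = 1350 / 575

print(f"980 Ti:  {gtx_980_ti:.1f} GFLOPS")
print(f"1080 Ti: {gtx_1080_ti:.1f} GFLOPS")
print(f"8800 GTX shader/core clock ratio: {shader_to_core_ratio:.2f}")
```

These are theoretical peaks only; they say nothing about how much of that rate a real game sustains.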

1-No, it's not, as the CPU was ready in 2005; in fact by mid-2005 it was already READY. Sony, unlike MS, didn't send developers a G5 with an X800 GPU to make 360 games. PS3 games actually used dev kits with final hardware; the games released in 2006 were in the making in 2005 on actual dev kits. Development of Cell began in 2000-2001.

So yeah it is a 2005 CPU.

2-Irrelevant again.

3-GPGPU is using the GPU to do compute tasks. In fact GPGPU is still in its infancy, and in 2006 it was basically nowhere to be found: there were no GPU-accelerated apps or programs in 2006. Hell, few programs, or games for that matter, even used 2 cores.

So hiding behind GPGPU to compare DSPs vs GPUs is a joke. DSPs are good at some jobs that GPUs are also good at; the similarity ends there.

DSPs are not GPUs, and the speed of the SPEs certainly tells the whole story.

4-Irrelevant. You can't compare CPU vs GPU only on whatever serves you best. Now let's see how great the G80 was at running Windows vs a CPU? Oh yeah, it can't. A GPU needs a CPU, whereas a CPU doesn't need a GPU. It is the CPU that feeds the GPU; without one your GPU does nothing.

Want to test it? Take the CPU out of your PC, turn it on with just the GPU, and see what you get. lol

5-Bullshit, the clock speed of the 8800 GTX was 575 MHz; the rest is irrelevant shit, and even so I still don't see 3.2 GHz there.

6-Kabini is newer than Cell by 9 years, so stop your bullshit, period. Find me a 2006 CPU that could beat Cell, I dare you.. lol. Link me to it. None were even close, and there was no APU back then to use compute and help itself.

Fact is you use the G80 because it serves you best. Compare it to an Intel Core 2 Duo or Pentium D of that time and see what you get on Folding@home: not even the $999 Extreme Edition was close to Cell.

Your hate for anything Sony is a joke and clouds what little judgement you have.

@ronvalencia: Cell definitely has branch prediction, but it is difficult to code for due to how advanced the architecture is. You both are wrong and right in a sense.

Sorry, you are wrong. CELL's in-order design with static branch prediction is worse than Intel Atom's "Bonnell" architecture.

CELL is closer to a 1st gen PowerPC 601 with a 128-bit AltiVec unit and a very long pipeline for high clock speed.

Think of a Pentium 4 without out-of-order processing and with worse branch prediction, but with a 128-bit AltiVec unit, copied 8 times on a chip, where one of the processor cores is incompatible with the other 7.

I completely disagree, but to each his own. Cell's branching allowed developers to optimize the processing order so that it could beat the latest and greatest processors of the time. The only issue was that the tech was so new and complex that it took some time to actually harness the power.
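
For readers following the branch-prediction argument: with static prediction the hardware guesses a branch direction without runtime history, so on an in-order core a wrongly guessed data-dependent branch stalls the pipeline. One common workaround on such cores is to compute both outcomes and pick one with a bit mask instead of branching. Below is a minimal sketch of that idea, in Python purely for illustration (real SPU code would use vector intrinsics; the function names are hypothetical and the values are treated as unsigned 32-bit integers).

```python
MASK32 = 0xFFFFFFFF


def clamp_branchy(values, limit):
    """Reference version: one data-dependent branch per element."""
    out = []
    for value in values:
        if value > limit:
            out.append(limit)
        else:
            out.append(value)
    return out


def select_bits(mask, if_set, if_clear):
    """Take bits from if_set where mask is 1, otherwise from if_clear."""
    return (if_set & mask) | (if_clear & ~mask & MASK32)


def clamp_branchless(values, limit):
    """Same result, but the choice is made with a mask, not a branch."""
    out = []
    for value in values:
        # All ones when value > limit, all zeros otherwise.
        mask = -(value > limit) & MASK32
        out.append(select_bits(mask, limit, value))
    return out


samples = [1, 50, 200, 100]
print(clamp_branchy(samples, 100))
print(clamp_branchless(samples, 100))
```

Both functions return the same list; the second form trades extra arithmetic for a predictable instruction stream, which is the kind of restructuring the "optimize the processing order" comment is pointing at.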

Your disagreement is not based on facts.

The reborn AMD K8 with 128-bit SSE4.x/AVX, aka Jaguar, says hi, i.e. Jaguar has superior IPC (instructions per cycle) over the 3.2 GHz IBM PPE and SPUs.

1. Again, the PS3 wasn't ready in 2005, i.e. a PS3 without RSX is worse than the released PS3. Good luck emulating the graphics command processor, texture filtering, texture move/copy, transparencies, blending, z-depth context tracking and MSAA workloads with just 6 SPUs.

The only core inside a CELL BE capable of self-hosting a Unix-type OS is the shitty PPE.

2. Integration is irrelevant for a large-scale discrete GPGPU with a high purchase-price limit.

3. Bullshit, the PS3 wasn't available in 2005.

For Battlefield 3's tiled deferred renderer, light compute runs on:

PS3 = SPUs

Xbox 360 = Xenos in micro-code compute mode.

Later, the Xbox 360 Slim got the XCGPU, which fuses 3 PPE cores with ATI Xenos.

AMD has the patent for the unified shader GPGPU, not IBM, Sony or Toshiba. AMD is currently suing Android vendors who ship ARM's unified shader Mali and Imagination's unified shader PowerVR GPUs (deferred tile render mode).

The GameCube/Wii's ATI IGP with its embedded RAM already has a software immediate tile render mode.

Try again.

4. SPUs can't self-host a Unix-type OS.

5. Wrong again. The 8800 GTX's 575 MHz refers to the clock speed of the non-SM units. The >1 GHz clock speed refers to the SM units, i.e. the programmable compute units with a similar role to the SPUs, you stupid cow.

6. There's nothing new about a recycled K8 modified for 128-bit SSE/AVX with cut-down MMX/x87/3DNow units. K8 already has 128-bit SSE1/2 FADD units.

Jaguar was updated with:

a. 128-bit SSE1/2/3/4/AVX FADD and FMUL units,

b. MMX/x87/3DNow units removed, with the SSE/AVX units emulating the legacy MMX/x87/3DNow instruction sets,

c. the 3rd x86 decoder/ALU/AGU set removed.

Unlike IBM, AMD didn't strip their game console CPU solution down to PowerPC G1 level cost cutting.

They knew multithreading and multicore were the future, so they invested in it instead of waiting for someone to develop a multicore CPU. Intel didn't launch its first quad core CPU until 2007. Remember, the X360 got its 3 core CPU because of the development of the Cell.

It's hard to call the Cell a big failure since developers learned quite a lot from it. Would Guerrilla Games be where they are now if they hadn't worked on the Cell? Or Naughty Dog? They are still doing stuff few others can, even after the move to x86.

The biggest faults of the PS3 hardware were the slow and old Nvidia GPU, the split pools of RAM, and only having one PPU (the main CPU core on the Cell). If it had had two cores, porting games from PC/X360 would probably have been a lot easier with that fix alone.

The multi core in the 360 had nothing to do with the multi core in the Cell; IBM already had multi core CPUs back in 2001 (POWER4). They borrowed some of the technology developed for the CELL but left the Cell's async arch on the table.

Naughty Dog/Guerrilla would likely be in the same position even without the Cell. The things they learned from it probably ended up being tossed out. They pushed workloads onto the CELL, and now they are pushing workloads onto the GPU... Although they probably had a head start with good threaded game engines.

If it had two cores it probably still would have been difficult because of the split RAM. The multi threaded design focus was beneficial on the 360 anyway when porting over, but when Red Faction: Guerrilla was released, the developer struggled a lot to get that sucker on there due to the memory constraints.

It had something to do with it, since they borrowed some of the technology in the CPU. I think having 3 cores to do general purpose processing was easier for developers, and Sony couldn't implement some additional functions since it had no extra cpu power available. But that may not have been a problem if it had had a better GPU instead, which ends up being the biggest fault of the PS3, not the Cell. Unified shaders and memory would have fixed a lot. But we can also say that the Cell was a failure because it cost too much to develop and forced them to take the shortcuts in the architecture.

Right, the actual core was mostly developed for the PS3 and was repurposed for the Xbox, but that wasn't why it got 3 cores. IBM owns the core and it was developed with Sony. The CELL was designed more like 6 DSP processors attached to a single core; its multi core architecture was totally different from what the Xbox had. They shared similar stripped down PPC cores but had completely different multi core architectures.

The CELL is one of the biggest faults of the PS3. The Cell was a handicap in the end, whereas the Xbox was designed for ease of development and flexibility in almost all aspects. The Cell made development more complex, with separate compilers/SDKs. Unified shaders/memory wouldn't have solved the issues that this caused, and I don't think it would have been easy to implement unified memory with the SPUs because of this. Having a better GPU would have just been a way to circumvent the CELL problem, but you probably would have ended up with a system costing much more than $600 because of the GPU R&D for tech that didn't really exist yet. Basically Sony banked on this technology and otherwise had a more standard CPU/GPU/memory setup. The Cell was a bad choice for them.

Forced them to take shortcuts in the architecture? I'm not sure what you mean.

But don't you think the PS3 would have benefited from another PPU? Only having one SPU for the OS wasn't enough. The X360 had a whole PPU for that, and 2 available to games, just like a PC.

The Cell was a handicap because a lot of work had to be moved off the GPU, since the RSX wasn't as efficient as the GPU in the X360. And the tools weren't properly developed.

Imagine if Sony had been stuck with a simpler CPU and that awful GPU alone? Fact is, AMD was ahead of Nvidia in GPU technology, so if Sony had not had the Cell, they would have been a lot worse off. And we would not have had all those fantastic games on the PS3. KZ2, UC2, TLoU, RDR, GTA5 would probably not have been possible on the PS3. We can agree that it wasn't an ideal CPU, but what other choice did they have if they were ending up with a bad GPU anyway?

I do think they were forced to take some shortcuts, like the separate RAM pools and the slow GPU. They were developing the Cell with the XDR memory in mind to keep the speed up. But then when they found out later in development that they needed a GPU, they had to go with two different RAM pools. They also apparently had to lower the processing power of the Cell when adding the GPU.

I mean your argument relies on the CELL not being the primary source of power, which means their R&D would have been focused elsewhere. Have you ever heard of the concept of opportunity cost? Their R&D budget was spent on a technology that didn't pay off. The CPU/GPU and memory setup weren't shortcuts; they were standard technologies at the time. The problem with the memory was that 512 MB just wasn't enough, and the OS budget and lack of flexibility hurt them in the long run. The separate pools weren't necessarily the problem, but the Xbox was a bit more flexible because of its unified architecture: it could do more with less, along with having more to work with since its OS had a lower memory budget. The Xbox just happened to have a very innovative design. AMD was ahead, but it had literally just released those technologies; they were NEW tech based on years of R&D and a focus on developer friendliness.

Anything the PS3 could do graphically, the 360 could do better. The CELL was a pos, that's why no other devices utilized it. Despite Sony claiming it was a supercomputer on a chip.

The R&D money for CELL's SPUs could have bought an NVIDIA CUDA-based GPU, e.g.:

RSX's 24 pixel shader + 8 vertex shader pipelines, hence a 32 shader pipeline budget, could have ended up as a GeForce 8600 GT/8600 GTS with 32 CUDA shader cores. G80 was released a few weeks ahead of the PS3.

A GeForce 8600 GT/8600 GTS can play Xbox 360 ports at a level similar to the Xbox 360. G80 has some DX10.1 features, not just pure DX10.0 features. ATI was ahead for about 1 year, then ATI was beaten by NV's CUDA GPUs. ATI hasn't recovered since the 8800 GTX's release. NVIDIA hasn't changed its game plan with +1 GHz GPGPUs.

Sony's R&D budget was split between CELL (PPE and SPU) and RSX. Sony paid for the cheaper G7X/RSX IP instead of the higher priced CUDA IP.

With the PS4, the focus is on the largest GPU for the given chip budget.

The alternative timeline would be:

ATI Xenos (DX9L kitbash) + NEC EDRAM + IBM PPE 3X = Xbox 360

vs

NV GF 8600 GT (DX10.1 kitbash) + IBM PPE 3X = PS3 alternative. Replace the expensive XDR memory with 768 MB of cheaper GDDR3 on a 192-bit bus. The 128-bit GDDR3 (GPU) part can be split from the 64-bit GDDR3 (CPU) part, or the two can operate in unified mode as 192-bit GDDR3 (mixed CPU/GPU mode).
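
The split versus unified bus idea is easy to check with the standard bandwidth formula (bus width in bytes times transfer rate). A minimal sketch follows; the 1400 MT/s data rate is a purely hypothetical figure picked for illustration, since the post gives no memory clock, and the function name is made up.

```python
def bandwidth_gb_per_s(bus_width_bits, transfers_mt_per_s):
    """Peak bandwidth in GB/s = (bus width in bytes) x (mega-transfers/s) / 1000."""
    return bus_width_bits / 8 * transfers_mt_per_s / 1000


# Hypothetical GDDR3 data rate; NOT taken from the post.
ASSUMED_RATE_MT_S = 1400

unified = bandwidth_gb_per_s(192, ASSUMED_RATE_MT_S)    # mixed CPU/GPU mode
gpu_share = bandwidth_gb_per_s(128, ASSUMED_RATE_MT_S)  # split mode, GPU side
cpu_share = bandwidth_gb_per_s(64, ASSUMED_RATE_MT_S)   # split mode, CPU side

print(f"unified 192-bit: {unified:.1f} GB/s")
print(f"split: GPU {gpu_share:.1f} GB/s + CPU {cpu_share:.1f} GB/s")
```

Whatever rate is plugged in, the two split shares add up to the unified figure; the difference is only whether either processor can borrow the other's share.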

With enough $$$$, NVIDIA gave the original Xbox NV's best tech (at that time), the tech that powered the GeForce 4 Ti, i.e. NV2A has the same pipeline count and features as the GeForce 4 Ti.

Yup, we can always count on ron to lose sight of the simplicity of the conversation and blow his load on tech specs.

You're missing the point as always. The alternatives don't matter. Was the CELL a problem? Yes. Being the focus and the premier technology that SONY chose to run with, it was a problem for developers, expensive for consumers, and not a good solution for a gaming machine. Your statements are kind of pointless, as with @Martin_G_N... with the power of hindsight we can easily see better solutions for Sony... but at the time that's what they spent the tech budget on, in R&D and in the product. With the Xbox being released early, they weren't redesigning shit unless it was a truly terrible idea.

The CELL idea was born from the PS2's EE and GS, while PC GPUs were evolving into general purpose vector processor monsters.

The original PS3 was a dual CELL setup.

The extra SPUs would be used to emulate a GPU's fixed-function math processors.

You can't let it die, can you? You keep spewing tech nonsense when the point doesn't need to be all that technical. The CELL was not an optimal/flexible/easy to code for solution and the PS3 suffered for that. With all your knowledge of tech you're barely scraping that point while tossing in tons of superfluous facts. Great, the CELL was an iteration on the Emotion Engine... that's not telling me or anyone else why that's a failed philosophy.

The Cell is more than just a processor. It was a very unique entity; its own life force in a sense. Its mysterious power has never fully been harnessed, which makes it a bit of an enigma. The Cell 100% carried the PS3 to a 1st place finish last gen.

Do you think CELL's CPU + 8 DSP-like vector units were a brand new idea?

The N64's Reality Signal Processor has a scalar processor (MIPS 4 CPU) with 8 16-bit integer vector co-processors. Two 16-bit integer vector units can be paired for 32-bit operations.

Background: The team who created the N64's RSP founded the company ArtX, which was later bought out by ATI and went on to contribute to the Radeon 9700. The ability to pair units to process larger data types still exists in the Radeon HD 7970, where two 32-bit ALUs join for one 64-bit operation.
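
The pairing trick is ordinary multi-word arithmetic: the low halves are combined first and the carry is fed into the high halves. A minimal sketch of a 32-bit add built from two 16-bit lanes is below; it illustrates the general idea only and is not a description of how the RSP or the Radeon ALUs actually wire it up. The function name is hypothetical.

```python
MASK16 = 0xFFFF


def add32_with_16bit_lanes(a, b):
    """Add two unsigned 32-bit values using only 16-bit wide additions."""
    # Low lane: add the low halves; bit 16 of the sum is the carry out.
    low_sum = (a & MASK16) + (b & MASK16)
    carry = low_sum >> 16
    # High lane: add the high halves plus the carry from the low lane.
    high_sum = ((a >> 16) & MASK16) + ((b >> 16) & MASK16) + carry
    # Recombine, discarding any carry out of bit 31 (wrap-around).
    return ((high_sum & MASK16) << 16) | (low_sum & MASK16)


print(hex(add32_with_16bit_lanes(0x0001FFFF, 0x00000001)))
print(hex(add32_with_16bit_lanes(0xFFFFFFFF, 0x00000001)))
```

The same pattern scales up: two 32-bit units plus a carry give one 64-bit operation.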

The Reality Signal Processor also includes fixed-function units such as the rasterizer (triangles/geometry transformation), texture memory load, texture filter, color combiner and blender units.

6 SPUs can be abstracted by SwiftShader-type software so that they act like a Direct3D 9.0c GPU from the programmer's POV.

Background: SwiftShader uses just-in-time recompiler technology similar to PC GPU drivers and respects the CPU's cache boundaries, i.e. the tile render loop stays inside the cache.

A SwiftShader-type + 6 SPUs solution would be slower, since SwiftShader has to emulate the GPU's fixed-function units.

SPUs can be made easy to program and fast when you have a large driver team like AMD's or NVIDIA's. IBM is incapable of creating a fast OpenGL driver for CELL.

A naked GPU without an abstraction layer and driver is just as hard to program.

http://techreport.com/review/31224/the-curtain-comes-up-on-amd-vega-architecture/3

To help the DSBR do its thing, AMD is fundamentally altering the availability of Vega's L2 cache to the pixel engine in its shader clusters. In past AMD architectures, memory accesses for textures and pixels were non-coherent operations, requiring lots of data movement for operations like rendering to a texture and then writing that texture out to pixels later in the rendering pipeline. AMD also says this incoherency raised major synchronization and driver-programming challenges.

To cure this headache, Vega's render back-ends now enjoy access to the chip's L2 cache in the same way that earlier stages in the pipeline do. This change allows more data to remain in the chip's L2 cache instead of being flushed out and brought back from main memory when it's needed again, and it's another improvement that can help deferred-rendering techniques.

That's AMD criticizing its own current GPU design. AMD's driver team is doing the hard work to turn their GPU into a Direct3D 11 and 12 compliant GPU.

The SPU's local memory is also non-coherent with the rest of the system, just as the XBO's ESRAM is non-coherent.
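
In practice, non-coherent local memory means the program has to copy data in and out explicitly, and the usual way to hide the copy time is double buffering: fetch the next chunk while working on the current one. The sketch below simulates that pattern with plain Python lists; `dma_get`, `dma_put` and `process_stream` are hypothetical stand-ins, not real SDK calls, and the "transfers" here run sequentially rather than overlapping with compute.

```python
def dma_get(main_memory, start, size):
    """Copy a chunk from main memory into a fresh local buffer."""
    return main_memory[start:start + size]


def dma_put(main_memory, start, local_buffer):
    """Copy a local buffer back out to main memory."""
    main_memory[start:start + len(local_buffer)] = local_buffer


def process_stream(main_memory, chunk_size, kernel):
    """Apply kernel to every element in place, one chunk at a time.

    chunk_size must be positive; it stands in for the local store budget.
    """
    total = len(main_memory)
    buffers = [dma_get(main_memory, 0, chunk_size), None]
    current = 0
    offset = 0
    while offset < total:
        next_offset = offset + chunk_size
        if next_offset < total:
            # On real hardware this transfer would run while the kernel
            # below is busy; here it simply happens first.
            buffers[1 - current] = dma_get(main_memory, next_offset, chunk_size)
        result = [kernel(value) for value in buffers[current]]
        dma_put(main_memory, offset, result)
        offset = next_offset
        current = 1 - current


data = list(range(10))
process_stream(data, 4, lambda value: value * value)
print(data)
```

Nothing in the kernel ever touches `main_memory` directly, which is the discipline a non-coherent local store forces on the programmer.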

What's special about CELL? Is it a wannabe half-baked GPU without an abstraction layer and drivers?

The PS4 gives game programmers the option of an easy DX11-like API with PSSL (similar to MS HLSL 5.x) or near-metal programming access just above the GPU driver.

For Battlefield 3, EA DICE implemented a tiled deferred renderer on both the SPUs and the Xenos ALUs in microcode mode (aka hit-the-metal). EA DICE is known for heavily promoting low level APIs such as DirectX 12, Vulkan and Mantle.
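
For anyone unfamiliar with the tiled deferred technique mentioned here: the screen is cut into small tiles, each light is assigned to the tiles it can touch, and shading then loops only over the lights in that tile's list. The sketch below shows the binning step in its simplest form. It is an illustration under stated assumptions (integer screen-space light positions, a conservative bounding-square test, made-up function names) and not DICE's implementation.

```python
def bin_lights_to_tiles(width, height, tile_size, lights):
    """Return {(tile_x, tile_y): [light indices]} for a screen split into tiles.

    Each light is (center_x, center_y, radius) in integer pixels. The overlap
    test uses the light's bounding square, so it is conservative.
    """
    tiles_x = (width + tile_size - 1) // tile_size
    tiles_y = (height + tile_size - 1) // tile_size
    bins = {(tx, ty): [] for ty in range(tiles_y) for tx in range(tiles_x)}
    for index, (cx, cy, radius) in enumerate(lights):
        first_x = max(0, (cx - radius) // tile_size)
        last_x = min(tiles_x - 1, (cx + radius) // tile_size)
        first_y = max(0, (cy - radius) // tile_size)
        last_y = min(tiles_y - 1, (cy + radius) // tile_size)
        for ty in range(first_y, last_y + 1):
            for tx in range(first_x, last_x + 1):
                bins[(tx, ty)].append(index)
    return bins


def shade_tile(bins, tile, light_intensity):
    """Sum only the lights binned to one tile (stand-in for per-pixel shading)."""
    return sum(light_intensity[i] for i in bins[tile])


lights = [(8, 8, 4), (32, 16, 10)]
bins = bin_lights_to_tiles(64, 32, 16, lights)
print(bins)
```

Each tile is independent of the others, which is why the work maps naturally onto a handful of SPUs or onto GPU compute units.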

CELL idea was born from PS2's EE and GS idea, while PC GPUs are evolving into general purpose vector processor monsters.

The original PS3 was a dual CELL setup.

Extra SPUs would be used to emulate GPU's fix function math processors.

You can't let it die, can you? You keep spewing tech nonsense when the point doesn't need to be all that technical. The CELL was not an optimal/flexible/easy-to-code-for solution, and the PS3 suffered for that. With all your knowledge of tech, you're barely scraping that point and tossing in tons of superfluous facts. Great, the CELL was an iteration on the Emotion Engine... that's not telling me or anyone else why that's a failed philosophy.

The Cell is more than just a processor. It was a very unique entity; its own life force in a sense. Its mysterious power has never fully been harnessed, which makes it a bit of an enigma. The Cell 100% carried the PS3 to a 1st place finish last gen.

A naked Radeon HD GCN without a driver and abstraction layer is just as hard to program. Remove DirectX 12, DirectX 11 and the Radeon driver, and you get AMD's SPUs with GPU fixed-function units.

*snip*

I didn't actually want to know, and it's still all relatively useless information; some of it is too much detail. Like the first half about the N64, pointless.

"SPUs can be made easy to program and fast when you have a large driver team like those at AMD and NVIDIA. IBM is incapable of creating a fast OpenGL driver for CELL.

A naked GPU without an abstraction layer and driver is just as hard to program."

That is probably all you needed. The detail about the memory isn't very clear.

The Cell is more than just a processor. It was a very unique entity; its own life force in a sense. Its mysterious power has never fully been harnessed, which makes it a bit of an enigma. The Cell 100% carried the PS3 to a 1st place finish last gen.

Yes, one that forces a person to design their own layer on top of the CPU and isn't all that flexible. And it has certainly been harnessed, either with Folding@home, which is likely easier to do since it's just tons of number crunching, so it's easy to see... or with games, which would likely be 99% of the way there even if there were a few optimizations left to do.

What GPU runs at 3.2 GHz, you MORON...

Even better, what 2005 GPU runs at 3.2 GHz?

Gotta love it when the OP leads with blaming "lazy developers"

Gamers should just stick to gaming.

The developers clearly didn't want to program for the PS3 because it was too different for them. Like so many people in the world, they fear what they don't understand. The developers didn't know how to harness the full power of The Cell, so they basically just said "screw it" and were lazy with their development. Shame on them. The Cell could probably still hang with the latest gaming rigs had its True Form actually been unlocked. A very mystic piece of hardware.

CELL's SPUs are obsolete. The PS4 GPU's SPUs (aka GCN CUs) obliterate CELL's SPUs.

GCN's 8 ACE units were designed for Sony's SPU-ported middleware.

It's probably 2x more powerful than the CPU in the Switch, a 2017 console.

Well, a cell phone can keep up with the Switch... lolz

Keep up? The new Galaxy S8 will already be more powerful than the Switch when it launches. If you're talking tablets, the Pixel C released in 2015 is also more powerful than it.
