I don't aggregate any numbers. The fact is Microsoft is using this new tech and it will be implemented in the newer Intel chips. AnandTech said it themselves: the fast on-chip RAM can counter the GDDR5 speed advantage. They just don't know for sure, because they don't know how it's implemented.

And the cloud is not some hollow promise (this isn't Sony with their Emotion Engine and the Cell). They have the servers to pull this off; the only thing you will need is an ultra-fast internet connection, and that will become more common in the coming years.

It seems you keep forgetting this is Microsoft; they've been making game operating systems for 20 years. DirectX is their invention. Sony was still making basic 3D games while you could already play 3D accelerated games like Half-Life, Unreal and System Shock 2. Sony has been lagging behind all these years. I mean, they had to make a system that was nearly twice the price of the X360 back in 2006, and it was released a year later.

On paper the PS3 was a lot stronger than the X360, and we all know how that turned out. It's like you're reviewing unified shaders 6 months before the X360's release. If you think Sony is just going to surpass them out of nowhere, you've got another thing coming. The cloud could very well be the solution to console lifecycles and the ultimate solution to piracy.

However, Sony has its advantages: their first-party support is better than Microsoft's (imo), and owning a game on a PS4 will not be the same as a cloud-powered game on the Xbox One. But the hardware advantage of the PS4 smells a lot like history repeating itself.

And you're forgetting about the Kinect. Maybe I'm dreaming, but Microsoft is the only one that brings us one step closer to Star Trek's holodeck. Whether it's going to be successful is another question, but it's the reason the Xbox One costs more than the PS4. Without the Kinect it would be like 2005-2006 all over again.

evildead6789

Misleading joke..

First of all, the 7870 at 150 GB/s is not bandwidth starved, and neither is the 7850, which means the PS4 isn't either.

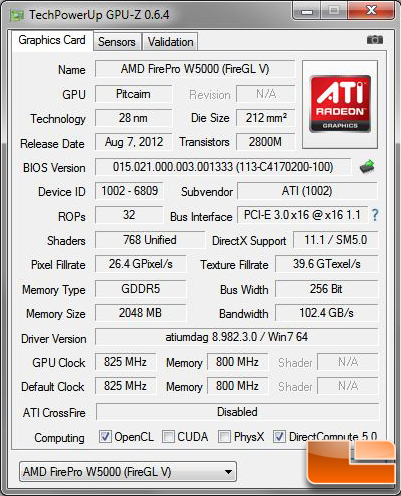

The Xbox One can have 250 GB/s of bandwidth, but it doesn't have a damn GPU powerful enough to saturate that bandwidth. The Xbox One has a 12 CU, 768 SP GPU. The closest GPU out there that Ronvalencia thinks fits this criteria is the FirePro W5000, which has the exact same number of CUs and SPs (that doesn't mean it is the same GPU, by the way). The FirePro has 102 GB/s of bandwidth, and I am sure it is not bandwidth limited; it is just 1.3 TF.

So putting more bandwidth on something that doesn't have the power to fill it means sh**. Yeah, it just means that the GPU will not be bandwidth starved. By the way, the EDRAM on the Xbox 360 has 256 GB/s and the PS3 has barely 23 GB/s, so maybe now you know that those fixed pieces of hardware are not the be-all and end-all; they are quick fixes rather than enhancements.

So even if somehow, by a miracle, ESRAM can counter GDDR5 byte for byte (which I know it won't), that doesn't make up for the 6 extra CUs and 384 more SPs the PS4 has over the Xbox One. More CUs, more SPs, more performance: it is very simple, and it is a line you can follow on GCN from the very weakest 7xxx to the strongest.
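For anyone who wants to check the CU and SP numbers, here is a rough Python sketch. It assumes 64 SPs per GCN compute unit, which matches the 12 CU / 768 SP figure quoted earlier; the names are made up for illustration only.

```python
# Assumption: each GCN compute unit (CU) holds 64 stream processors (SPs).
SP_PER_CU = 64


def stream_processors(compute_units: int) -> int:
    """Total SP count for a GCN GPU with the given number of CUs."""
    return compute_units * SP_PER_CU


xbox_one_cus = 12           # CU count quoted in the post
ps4_cus = xbox_one_cus + 6  # "6 extra CU" quoted in the post

xbox_one_sps = stream_processors(xbox_one_cus)
ps4_sps = stream_processors(ps4_cus)

# Prints the two SP totals and the gap between them.
print(xbox_one_sps, ps4_sps, ps4_sps - xbox_one_sps)
```

Under that assumption the gap comes out to the same 384 SPs mentioned above.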

Cloud is a hoax. Look at the damn Titanfall: it looks like sh**, it is not impressive by any means, and it uses the damn cloud. Want to bet who will have the better-looking version between PC and Xbox One? Now don't hide behind the fact that the PC has a stronger GPU, because we all know the power of the cloud as sold by MS is infinite, right?

40 times the Xbox 360's power, right?

230 GFLOPS × 40 = 9200 GFLOPS, in other words 9.2 TF of performance from the cloud that MS will deliver over a dirty stinking 50MB internet connection.

According to MS, the Xbox One with cloud will have more performance than a Titan GPU... :lol:
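The multiplication above is easy to reproduce. A minimal sketch, using only the 230 GFLOPS and 40x figures from this post (the function name is hypothetical, and this says nothing about how MS actually measures cloud performance):

```python
XBOX_360_GFLOPS = 230  # Xbox 360 figure used in the post
CLOUD_MULTIPLIER = 40  # the "40 times the Xbox 360" claim


def claimed_cloud_gflops(base_gflops: float, multiplier: float) -> float:
    """Scale a base GFLOPS figure by the claimed multiplier."""
    return base_gflops * multiplier


total_gflops = claimed_cloud_gflops(XBOX_360_GFLOPS, CLOUD_MULTIPLIER)
total_tflops = total_gflops / 1000  # 1 TFLOPS = 1000 GFLOPS

print(f"{total_gflops} GFLOPS = {total_tflops} TFLOPS")
```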

You are an idiot. The PS3 and Xbox 360 have similar power, with the PS3 edging it a bit: the 360 has a stronger GPU, the PS3 a stronger CPU. Both units' specs were BS; 1 TF for the Xbox 360 and 2 TF for the PS3 were both fake, and it was MS who started it, not Sony.

And thanking MS for 3D accelerated games is a joke. MS doesn't sell GPUs and didn't invent them, idiot.
