How is it not? The cheapest 7970 model is around $379, the cheapest GTX 680 is around $459, and both perform basically the same. The RSX in the PS3 was a piece of crap compared to the Xenos, and yet it cost Sony more than what MS paid for the Xenos. Better drivers are a lame excuse to try to justify Nvidia's lame-ass overpriced crap. MS dropped them because of that, Sony dropped them because of that, and I don't think any console maker will ever work with them again. Nvidia, like Intel, sells way overpriced products; the only difference is that at least Intel has the upper hand on CPUs, while Nvidia doesn't really.[QUOTE="tormentos"][QUOTE="Cranler"] Bang for the buck on console isn't the same as bang for the buck on PC. I prefer the Nvidia experience on PC. I gladly pay an extra $50 for the better drivers you get with Nvidia.Cranler

A GPU is nothing without its drivers. New drivers are constantly in the works, and lots of money is spent on them.

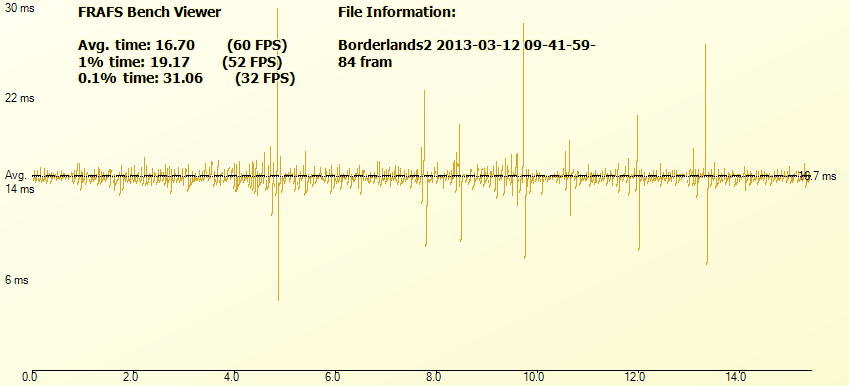

Maybe this will shed some light on why people are willing to spend more on Nvidia: http://hardforum.com/showthread.php?t=1683315

This poll indicates otherwise: http://www.overclock.net/t/1351071/crossfire-7970-or-sli-680s
