And developers only tell the truth when it comes to Sony?

Fanboy at its best

You mean like how I fought Mark Cerny's claims about FP16 making the PS4 Pro an 8.4 TF machine?

Your PC built in 2014/2015 is missing double rate 'float16' feature.

Vega 11 with 6 TFLOPS float32 yields 12 TFLOPS float16.

That is total bullshit. The PS4 Pro has the same feature and is not pumping out 8.4 TF of power; not every process can be float16.

And even so, it's not like the GPU will double its performance. Stop making shitty-ass assumptions, man, you are like the new misterXmedia..

The same shit was said about DX12 and nothing happened.

I call it like I see it, unlike you lemmings...

If I were like you I would be riding FP16 and 8.4 TF, which even Ronvalencia was hyping when he believed the Xbox One X would have that feature. As soon as it was confirmed that it didn't, the downplay campaign on his part started, to the point of calling FP16 useless..lol

So everyone lies, got you.

Sensible people take multiple sources before starting to believe things, and even then they understand it's gaming and people lie.

We have already seen more 4K games than non-4K games coming for Xbox One X at Gamescom, and multiple developers have said how good it is. But I can't be arsed talking speculation. So I'm happy to wait for it to come out before laughing at how much better it is.

Lol, this back and forth is pretty entertaining.

Is it though? It's just constant spamming of the same charts. I do love how heated they all get but

That's why it's so funny. I started just skimming over posts after a while. Not sure how they could possibly waste so much time on something so trivial. Lol

When you genuinely look at it, it's quite impressive how passionate they are.

You're not addressing system topics but engaging in a poster war.

8.4 TFLOPS without a proper memory bandwidth increase has diminishing returns, and the dev for Mantis Burn Racing (which used the double-rate FP16 feature) made his own comments on Xbox One X vs PS4 Pro.

https://mspoweruser.com/mantis-burn-racing-will-feature-better-effects-xbox-one-x-playstation-4-pro/

Yeah, it’s a pretty cool piece of kit. It’s substantially more powerful than the PlayStation 4 Pro…but this really is the most powerful games console on the planet. Microsoft are really throwing a lot more into the development kit itself too, really going that extra step to make development a breeze, which is great to see…We did have to drop a few minor things on PlayStation 4 Pro, such as some of the particle effect detail, and lower precision shading…We can go the other way on Xbox One X, adding extra detail. We already scale both ways for a range of hardware specs on PC, so it’s relatively straightforward for us to take that over to console too. As an added extra, we’re throwing in some anti-aliasing on Xbox One X at 4K. We had to drop this on PlayStation 4 Pro–we were happy to do that because with pixels so small, a bit of aliasing isn’t a problem. But when we can do it, it adds a whole extra layer to the crispness and clarity of the image. It’s still early days yet, so we are looking at other areas we may be able to ramp up too–we have some interesting options here, but I don’t want to promise anything specific just yet.

When I made the PS4 Pro Mantis Burn Racing 4K vs MSI Gaming GeForce GTX 980 Ti (stock factory) comparison topic, I was very conservative, i.e. the maxed-details GTX 980 Ti version vs the lower-quality PS4 Pro version. When an apples-to-apples comparison is used, the gap between the GTX 980 Ti and the PS4 Pro will be larger.

Furthermore, the CUs are NOT the only source of GPU FLOPS.

From http://www.anandtech.com/show/11740/hot-chips-microsoft-xbox-one-x-scorpio-engine-live-blog-930am-pt-430pm-utc#post0821123606

12:36PM EDT - 8x 256KB render caches

12:37PM EDT - 2MB L2 cache with bypass and index buffer access

12:38PM EDT - out of order rasterization, 1MB parameter cache, delta color compression, depth compression, compressed texture access

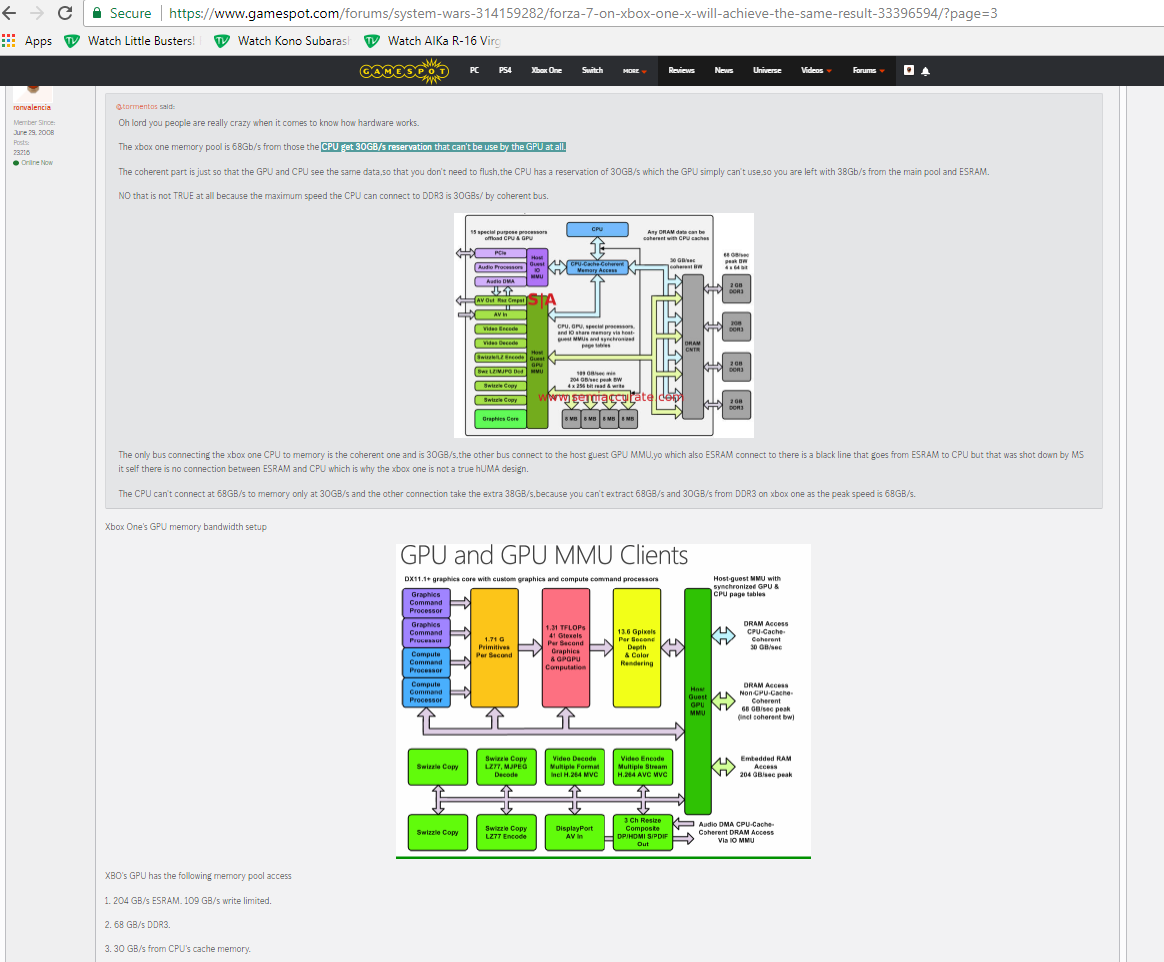

X1X's GPU Render Back Ends (RBEs) have a 256 KB cache each and there are 8 of them, hence a 2 MB render cache.

X1X's GPU has a 2 MB L2 cache, a 1 MB parameter cache and a 2 MB render cache. That's 5 MB of cache.

https://www.amd.com/Documents/GCN_Architecture_whitepaper.pdf

The old GCN's render back end (RBE) cache. Page 13 of 18.

Once the pixels fragments in a tile have been shaded, they flow to the Render Back-Ends (RBEs). The RBEs apply depth, stencil and alpha tests to determine whether pixel fragments are visible in the final frame. The visible pixels fragments are then sampled for coverage and color to construct the final output pixels. The RBEs in GCN can access up to 8 color samples (i.e. 8x MSAA) from the 16KB color caches and 16 coverage samples (i.e. for up to 16x EQAA) from the 4KB depth caches per pixel. The color samples are blended using weights determined by the coverage samples to generate a final anti-aliased pixel color. The results are written out to the frame buffer, through the memory controllers

GCN version 1.0's RBE cache size is just 20 KB (16 KB color cache + 4 KB depth cache). 8x RBE = 160 KB render cache (for the 7970).

AMD R9-290X/R9-390X's aging RBE/ROPS comparison. https://www.slideshare.net/DevCentralAMD/gs4106-the-amd-gcn-architecture-a-crash-course-by-layla-mah

16 RBEs, with each RBE containing 4 ROPS. Each RBE has 24 bytes of cache.

24 bytes x 16 = 384 bytes.

X1X's RBEs have 256 KB each. 8x RBE = 2048 KB (or 2 MB) of render cache, so X1X can hold more rendering data on chip than the Radeon HD 7970.
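The cache arithmetic above can be checked with a quick sketch. The figures are the ones quoted in this post (the Hot Chips live blog and the GCN whitepaper); the function and variable names are only illustrative.

```python
# Sanity check of the cache figures quoted in the post.
# Sizes are in KB; names are illustrative, not from any AMD or Microsoft tool.

def render_cache_kb(rbe_count: int, cache_per_rbe_kb: int) -> int:
    """Total render cache across all Render Back Ends (RBEs)."""
    return rbe_count * cache_per_rbe_kb

# X1X: 8 RBEs x 256 KB each (Hot Chips live blog).
x1x_render_kb = render_cache_kb(8, 256)

# Radeon HD 7970 (GCN 1.0): 8 RBEs x (16 KB color + 4 KB depth).
hd7970_render_kb = render_cache_kb(8, 16 + 4)

# X1X total on-chip cache: render cache + 2 MB L2 + 1 MB parameter cache.
x1x_total_kb = x1x_render_kb + 2 * 1024 + 1 * 1024

print(x1x_render_kb, hd7970_render_kb, x1x_total_kb)
print(x1x_render_kb / hd7970_render_kb)
```

That works out to 2048 KB against 160 KB of render cache, and 5120 KB (5 MB) for the X1X total.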

http://www.eurogamer.net/articles/digitalfoundry-2017-project-scorpio-tech-revealed

"We quadrupled the GPU L2 cache size, again for targeting the 4K performance."

X1X GPU's 2MB L2 cache can be used for rendering in addition to X1X's 2 MB render cache.

RX-480's RBE (Render Back Ends)

It seems RX-480's RBEs don't have any improvements.

When X1X's L2 cache (for TMUs) and render cache (for ROPS) are combined, the total cache size is 4 MB, which is similar to Vega's shared 4 MB L2 cache for RBE/ROPS and TMUs.

This is the main reason why X1X's ForzaTech has a performance boost relative to R9-390X and RX-480 OC.

@tormentos: Dude just STOP! Look at the amount you post and what you post. Your entire life is just being anti-Microsoft. Every time I come here and see an Xbox thread, I see 50% of the thread is you trying to minimize whatever positive news there is for Xbox. You do not even spend close to this much time in Sony threads. Your entire life is hating something.

Look dude, I am really not trying to hate, but your life seems to just be geared towards hating Microsoft. I get that we are on System Wars, but it is not like you are even some Sony fanboy, you're just anti-Microsoft.

Did Bill Gates kick your dog or something?

You're not addressing system topics but engaging in a poster war.

8.4 TFLOPS without a proper memory bandwidth increase has diminishing returns, and the dev for Mantis Burn Racing (which used the double-rate FP16 feature) made his own comments on Xbox One X vs PS4 Pro.

I don't need to address anything. You are a joke, a total and complete hypocrite, and you are on record DEFENDING FP16 and using Mark Cerny's argument to piggyback for Scorpio. When you BELIEVED that Vega was going to be inside Scorpio you validated Cerny's claim, and I refuted your CLAIM.

What I quoted you saying just now is a small part of a long-ass post of you defending FP16, claiming a 6 TF Vega would equal 12 TF without a proper bandwidth increase, you HYPOCRITE. You claimed Scorpio would exceed a GTX 1080 based on 12 TF delivered by FP16, and not once did you bring up an argument about bandwidth; not once did you claim Scorpio's 326 GB/s is not enough for 12 TF. Oh, but as soon as you were told that Scorpio didn't have FP16, you started a smear campaign to downplay it, and when I used your own argument against you, you simply hid behind bandwidth, which you didn't do with Scorpio.

In fact 8.4 TF in FP16 would not require more bandwidth than 4.2 TF at FP32, because FP16 allows you to run two 16-bit floats in the space of one 32-bit float, which means no additional bandwidth is required. That is why you never made the bandwidth claim about Scorpio's 12 TF: you know FP16 not only increases performance, it does so without requiring extra bandwidth.
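The "two 16-bit floats in the space of one 32-bit float" point is easy to check on storage size alone. Here is a minimal sketch using Python's `struct` module, which supports IEEE 754 half precision through the `e` format code; the bandwidth number is hypothetical and only there to show the ratio. It says nothing about whether a given shader is actually bandwidth bound.

```python
import struct

# IEEE 754 half precision is the "e" format, single precision is "f".
FP16_BYTES = struct.calcsize("<e")  # 2 bytes per value
FP32_BYTES = struct.calcsize("<f")  # 4 bytes per value

# Two FP16 values occupy exactly the storage of one FP32 value.
pair = struct.pack("<2e", 1.5, -0.25)
single = struct.pack("<f", 1.5)

def values_per_second(bandwidth_gb_s: float, bytes_per_value: int) -> float:
    """How many values a given bandwidth can move per second."""
    return bandwidth_gb_s * 1e9 / bytes_per_value

# Hypothetical bandwidth, used only to show the 2:1 ratio.
bw = 100.0
ratio = values_per_second(bw, FP16_BYTES) / values_per_second(bw, FP32_BYTES)

print(len(pair), len(single), ratio)
```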

@ronvalencia: Yeah cause 16 bit floating point is going to make all the difference.

GeForce FX's float16 shader path existed before DX10's float32 hardware changes.

Tegra X1 and GP100 have double-rate float16 shaders. GP102 and GP104 have broken float16 shaders, i.e. 1/64 the speed of the float32 rate, which is exposed by CUDA 7.5. Float32 units can emulate float16 without any performance benefit.

VooFoo Dev Was Initially Doubtful About PS4 PRO GPU’s Capabilities But Was Pleasantly Surprised Later

“I was actually very pleasantly surprised. Not initially – the specs on paper don’t sound great, as you are trying to fill four times as many pixels on screen with a GPU that is only just over twice as powerful, and without a particularly big increase in memory bandwidth,” he explained, echoing the sentiment that a lot of us seem to have, before adding, “But when you drill down into the detail, the PS4 Pro GPU has a lot of new features packed into it too, which means you can do far more per cycle than you can with the original GPU (twice as much in fact, in some cases). You’ve still got to work very hard to utilise the extra potential power, but we were very keen to make this happen in Mantis Burn Racing.

“In Mantis Burn Racing, much of the graphical complexity is in the pixel detail, which means most of our cycles are spent doing pixel shader work. Much of that is work that can be done at 16-bit rather than 32-bit precision, without any perceivable difference in the end result – and PS4 Pro can do 16 bit-floating point operations twice as fast as the 32-bit equivalent.”

Mantis Burn Racing's PS4 Pro version reached 4K/60 fps, which is 4X the pixel rate of the original PS4 version's 1080p/60 fps.
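The 4X figure comes straight from the pixel counts. A small sketch, assuming 4K means 3840x2160 (UHD). The base PS4 figure of 1.84 TFLOPS is not from this thread; it is the commonly cited spec and is included only to illustrate the "just over twice as powerful" remark in the quote.

```python
def pixels(width: int, height: int) -> int:
    """Pixels per frame at a given resolution."""
    return width * height

uhd = pixels(3840, 2160)   # assumed meaning of "4K"
fhd = pixels(1920, 1080)   # 1080p
pixel_ratio = uhd / fhd

# FP32 compute ratio: 4.2 TF (PS4 Pro, from the thread) vs 1.84 TF
# (base PS4, an assumption not stated in the thread).
compute_ratio = 4.2 / 1.84

print(uhd, fhd, pixel_ratio)
print(round(compute_ratio, 2))
```

So the Pro has to fill four times the pixels at the same frame rate with a bit over twice the FP32 throughput, which is the gap the VooFoo dev is describing.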

FP16 optimisation is similar to the GeForce FX era's The Way It's Meant to be Played optimisation paths.

On the original PS4, developers were already using FP16 for raster hardware, e.g. Killzone Shadow Fall.

Sebbbi a dev on Beyond3D had this to say about FP16

Originally Posted by Sebbbi on Beyond3D 2 years ago

Sometimes it requires more work to get lower precision calculations to work (with zero image quality degradation), but so far I haven't encountered big problems in fitting my pixel shader code to FP16 (including lighting code). Console developers have a lot of FP16 pixel shader experience because of PS3. Basically all PS3 pixel shader code was running on FP16.

It is still very important to pack the data in memory as tightly as possible as there is never enough bandwidth to lose. For example 16 bit (model space) vertex coordinates are still commonly used, the material textures are still DXT compressed (barely 8 bit quality) and the new HDR texture formats (BC6H) commonly used in cube maps have significantly less precision than a 16 bit float. All of these can be processed by 16 bit ALUs in pixel shader with no major issues. The end result will still be eventually stored to 8 bit per channel back buffer and displayed.

Could you give us some examples of operations done in pixel shaders that require higher than 16 bit float processing?

EDIT: One example where 16 bit float processing is not enough: Exponential variance shadow mapping (EVSM) needs both 32 bit storage (32 bit float textures + 32 bit float filtering) and 32 bit float ALU processing.

However EVSM is not yet universally possible on mobile platforms right now, as there's no standard support for 32 bit float filtering in mobile devices (OpenGL ES 3.0 just recently added support for 16 bit float filtering, 32 bit float filtering is not yet present). Obviously GPU manufacturers can have OpenGL ES extensions to add FP32 filtering support if their GPU supports it (as most GPUs should as this has been a required feature in DirectX since 10.0).

#33 sebbbi, Oct 18, 2014 (last edited by a moderator: Oct 18, 2014)
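Sebbbi's EVSM example comes down to FP16's limited precision and range. The sketch below round-trips values through IEEE 754 half precision with Python's `struct` module. The exponent values are illustrative and not taken from his post, and this is only a rough picture of why an exponential warp runs out of room in 16 bits, not an EVSM implementation.

```python
import math
import struct

def to_half(x: float) -> float:
    """Round-trip a Python float through IEEE 754 half precision."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def fits_in_half(x: float) -> bool:
    """True if x can be stored as a finite FP16 value."""
    try:
        struct.pack("<e", x)
        return True
    except (OverflowError, struct.error):
        return False

# Precision: FP16 keeps roughly three decimal digits.
print(to_half(0.1))

# Range: the largest finite FP16 value is 65504, so exp(k) stops
# fitting once k goes past about 11 (illustrative exponents).
for k in (5, 11, 12):
    print(k, fits_in_half(math.exp(k)))
```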

http://wccftech.com/flying-wild-hog-ps4-pro-gpu-beast/

What do you think of Sony’s PlayStation 4 Pro in terms of performance? Is it powerful enough to deliver 4K gaming?

PS4 Pro is a great upgrade over base PS4. The CPU didn’t get a big upgrade, but the GPU is a beast. It also has some interesting hardware features, which help with achieving 4K resolution without resorting to brute force.

PS4 Pro’s architect Mark Cerny said that the console introduces the ability to perform two 16-bit operations at the same time, instead of one 32-bit operation. He suggested that this has the potential to “radically increase the performance of games” – do you agree with this assessment?

Yes. Half precision (16 bit) instructions are a great feature. They were used some time ago in Geforce FX, but didn’t manage to gain popularity and were dropped. It’s a pity, because most operations don’t need full float (32 bit) precision and it’s a waste to use full float precision for them. With half precision instructions we could gain much better performance without sacrificing image quality.

MS, AMD, Sony & Intel think it will work for graphics

GCN 3/4 has single-rate native float16, which reduces memory bandwidth usage, i.e. in memory-bandwidth-bound situations, native float16 shader support can double the effective floating point operations within the same memory bandwidth. Double-rate float16 occurs at the GPU level.

With SM6's native float16, AMD is going to do another async-compute-style gimping of existing NVIDIA GPUs.

AMD is using their relationship with MS to drive SM6's direction.

https://www.gamespot.com/forums/system-wars-314159282/cross-platform-is-a-gamechanger-xbox-pc-brotherhoo-33379398/?page=1

This is you YOU YOU YOU.. hyping FP16, because back then you BELIEVED Scorpio would be VEGA BASED, so you were on BOARD.

Allow me to highlight some of your hypocrisy and flip flopping.

Your PC built in 2014/2015 is missing double rate 'float16' feature.

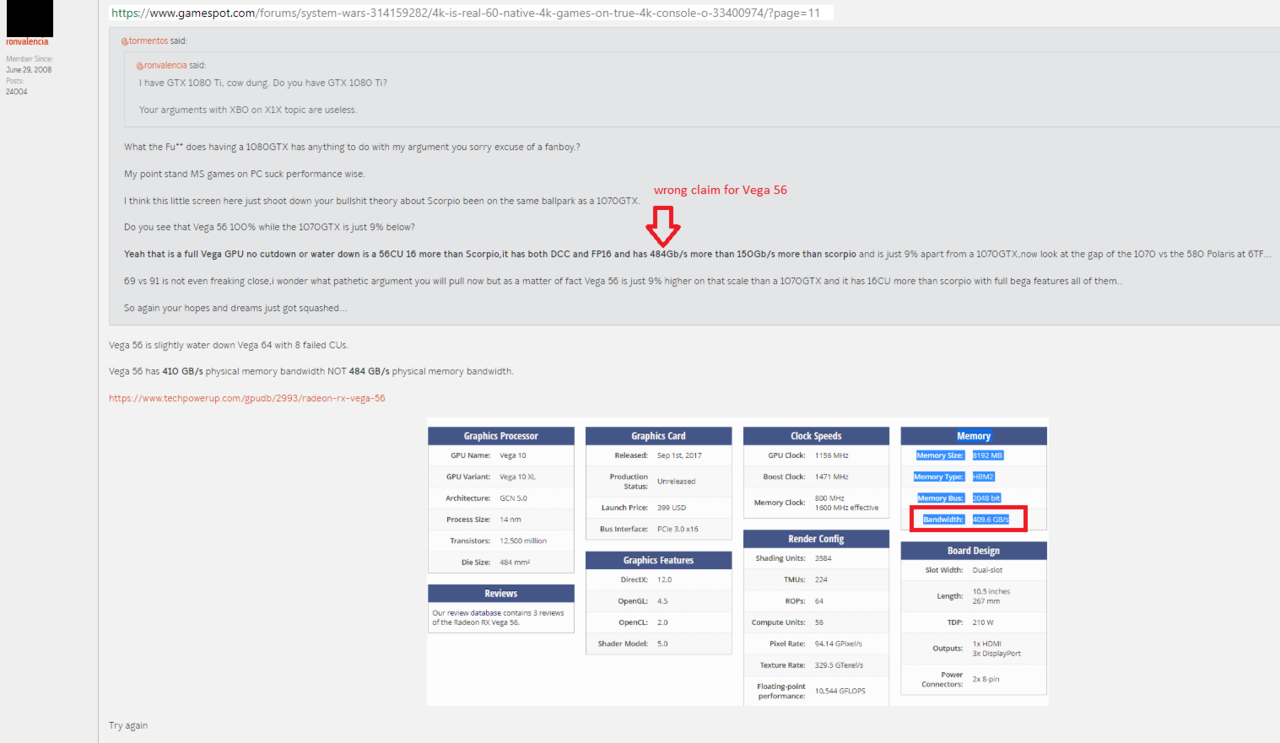

Vega 11 with 6 TFLOPS float32 yields 12 TFLOPS float16.

This ^^ was in response to a @GarGx1 post. See how you downplay his argument about his PC being more powerful than Scorpio? You use FP16, which you claim his PC doesn't have, and you claim Vega 11 with 6 TF yields 12 TF. Odd, I don't see any concern from you about bandwidth here. Why? 326 GB/s is not enough for 12 TF, especially when it is shared and not just GPU memory.
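One way to frame the "is 326 GB/s enough for 12 TF" question is a simple roofline estimate: attainable throughput is the smaller of the peak rate and bandwidth times FLOPs per byte. This is a standard back-of-envelope model, not something either poster used, and the intensity values below are hypothetical. The 6 TF, 12 TF and 326 GB/s figures are the ones quoted in the thread.

```python
def attainable_tflops(peak_tflops: float,
                      bandwidth_gb_s: float,
                      flops_per_byte: float) -> float:
    """Roofline estimate: min(compute peak, bandwidth-bound rate)."""
    bandwidth_bound = bandwidth_gb_s * flops_per_byte / 1000.0  # GFLOPS -> TFLOPS
    return min(peak_tflops, bandwidth_bound)

BANDWIDTH_GB_S = 326.0  # figure quoted in the thread

# Hypothetical arithmetic intensities (FLOPs per byte of memory traffic).
for intensity in (10, 30, 60):
    fp32 = attainable_tflops(6.0, BANDWIDTH_GB_S, intensity)
    fp16 = attainable_tflops(12.0, BANDWIDTH_GB_S, intensity)
    print(intensity, fp32, fp16)
```

At low intensity both peaks are capped by bandwidth, and the double-rate peak only shows up once the shader does enough math per byte. FP16 operands are also half the size, which raises FLOPs per byte for the same shader; this sketch does not model that.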

8.4 TFLOPS without proper memory bandwidth increase has diminished returns

This is you on this very same thread.

GCN 3/4 has single-rate native float16, which reduces memory bandwidth usage, i.e. in memory-bandwidth-bound situations, native float16 shader support can double the effective floating point operations within the same memory bandwidth. Double-rate float16 occurs at the GPU level.

This ^^ is the equivalent of you spitting in your own face. This ^^ is why you never EVEEEEEEEEEEEERRRRRRRRR brought up a bandwidth argument when you BELIEVED that Scorpio had FP16: because you KNOW FP16 doesn't require more bandwidth. What you are doing here is being dishonest in order to defend the Xbox One X.

My argument hasn't changed: FP16 will not make the Pro beat the Xbox One X. You, on the other hand, are a total hypocrite, a flip-flopping joke of a poster....

MS, AMD, Sony & Intel think it will work for graphics

See how you introduced MS there?

Oh we all know why you inserted MS there.

@ronvalencia said:

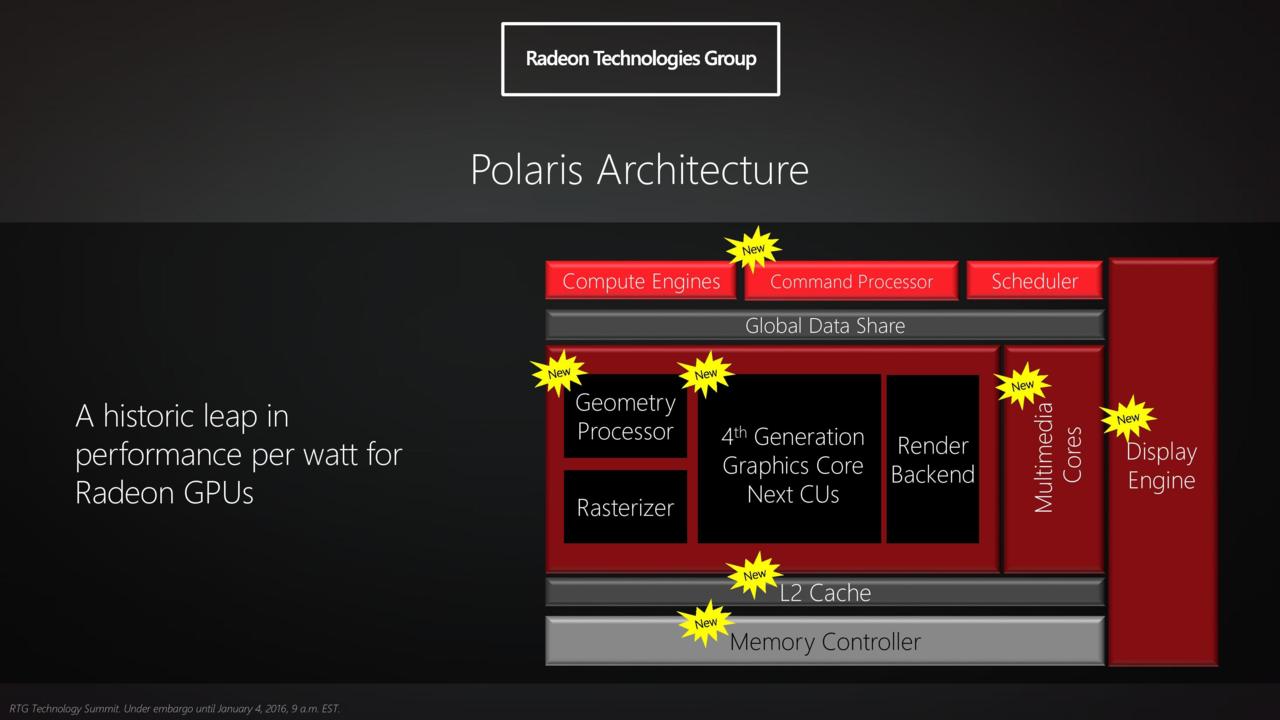

The major difference is one box has Polaris based transistor design maturity and other box has Vega based transistor design maturity.

Also, MS gave more time for AMD to complete the Vega IP while Sony rushed out half baked Polaris with 2 features from Vega.

Hahahahaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa...

Your buffoonery and double standard have been exposed. You even quoted Sebbbi from Beyond3D, a programmer, to defend FP16 when you blindly believed that Scorpio had a Vega GPU.

The only person as sad as you here is NyaDC..

Once again the fraud that is wrongvalencia has been exposed. Good job man

Oh look who's talking, one of the biggest PS haters and MS suck-ups this place has ever seen..
