I'm guessing this is including "playstation"

What do you mean?

@ronvalencia: 1. Well aware of who made the cpu.

2. Well aware of who made the gpu.

3. Got it from a Microsoft technical fellow who posted on NeoGAF.

He basically said they tested the GPU with 14 GCN compute units and found it more beneficial to bump up the clock speed and disable 2 of the CUs.

1. your memory type was not correct.

2. your memory type was not correct.

3. Increasing the GPU clock speed also increased the ESRAM's and ROPs' clock speed.

I have shown you that a 69 GB/s memory bandwidth limit reduced my R9-290 (1 GHz core) to near the X1's GPU.

Xbox One's primary memory is rated at around 68 GB/s, and it would need to use tiling tricks to make use of ESRAM as a texture cache for the GPU's TMUs. Both ROPs and TMUs (texture mapping units) are bound by memory bandwidth.
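For anyone wondering where a figure like 68 GB/s comes from, here is a rough sketch of the usual peak-bandwidth arithmetic. The memory configurations (DDR3-2133 on a 256-bit bus for Xbox One, GDDR5 at 5500 MT/s on a 256-bit bus for PS4) are publicly reported specs and are assumptions here, not something stated in this thread. The function name is made up for illustration.

```python
def peak_bandwidth_gbs(transfer_rate_mts: float, bus_width_bits: int) -> float:
    """Theoretical peak bandwidth in GB/s (1 GB = 10**9 bytes).

    transfer_rate_mts: effective transfer rate in mega-transfers per second.
    bus_width_bits:    total memory bus width in bits.
    """
    bytes_per_transfer = bus_width_bits / 8
    return transfer_rate_mts * 1e6 * bytes_per_transfer / 1e9


# Assumed configurations (publicly reported, not taken from this thread).
xbox_one_ddr3 = peak_bandwidth_gbs(2133, 256)
ps4_gddr5 = peak_bandwidth_gbs(5500, 256)

print(f"Xbox One DDR3: {xbox_one_ddr3:.1f} GB/s")
print(f"PS4 GDDR5:     {ps4_gddr5:.1f} GB/s")
```

These are theoretical peaks only; real-world throughput is lower, and this ignores ESRAM entirely.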

No one from Microsoft has said yet whether this will benefit Xbox One. I think it is rather premature to assume it will have any meaningful impact at this point in time. Now, if Microsoft comes out and says that this will help the Xbox, then great for the people who own it. It will only help multiplatform releases. The common denominator is always a drag if it is lagging behind.

It's rather silly, though, to think that the PS4 will not have advancements in their OpenGL. Sorry Xbox fans, it might improve, but hardware has its limitations. Not saying the One is bad; it is just a machine after all, and not horribly behind the PS4, but that is its spot this gen.

@ronvalencia:

The PS4's current advantage over the One, and the GTX 770's memory and core clock speeds vs. the GTX 780's, throw that Microsoft argument out of the water.

For those who don't know, the stock 770 has a higher GPU clock and memory that runs 1 GHz (with a G) faster than the 780's, but the 780's higher CUDA core count and higher memory bandwidth more than make up for it.

Current PC games don't target the NVIDIA 770/780's weaknesses, i.e. if a game uses 64 UAVs with all shader types, it will gimp all Kepler GPUs.

A similar problem occurred with the Radeon X1950 XTX: it lacks unified shader features, so it stopped running games like Battlefield 3 (which uses GPGPU mode on Xbox 360). The Radeon X1950 XTX's 302 GFLOPS is greater than Xbox 360's 240 GFLOPS, but its raw power was rendered useless because it is missing a certain feature set.

From http://en.wikipedia.org/wiki/Direct3D#Feature_levels

DX feature level 11_1 = AMD HD 7000/8000/Rx 200s, Intel Haswell and Qualcomm Adreno 420.

The oddball is NVIDIA.

PS: Tegra 4's feature level 9_1 is LOL.

Again, I don't see where ANY Xbox fan has claimed that PS4 will not have advancements in OpenGL. Why the hell are you guys so damn insecure? DX12 news for X1 does not = bad news for Sony... it simply implies good news for X1 development going forward. The hell is wrong with you guys?

PS4 doesn't have OpenGL.

Read https://twitter.com/Wolfire/status/408656394271744000

"PS4 uses its own low-level rendering API called GNM, and a higher-level wrapper called GNMX. There is no OpenGL or DX support."

@ronvalencia: on a scale of 1 to 10, how bad does it bother you knowing the PS4 is more powerful than the XOne?

0. I couldn't care less... it's just frustrating and annoying when people say PS4 is superior in every way, because it's not in so many ways. Just because it runs multiplatform games slightly better now, and I mean SLIGHTLY.

graphics don't bother me at all this gen, pretty much every game looks great.

by cow logic, the Wii U is more powerful than the X1.

Where would you consider the XBO superior to the PS4 in the hardware department?

@ronvalencia: on a scale of 1 to 10, how bad does it bother you knowing the PS4 is more powerful than the XOne?

I don't have an Xbox One console, hence your question is not applicable to my gaming PCs.

Who are "you guys"? Also, did you read my post? I said it would benefit multiplatform releases, which would also be on PS4. How did I indicate that it would be bad? Because I called out some Xbox fans (not necessarily directed at you) for throwing around the super SDK updates that would pwnzers the PS4? Seems you are the insecure one for replying to my post like that if it did not apply to you.

Ok, Ok, you came back and said your piece...I can respect that.

Here is a comment from a poster on Reddit about the potential impact of this news:

"It amazes me how while most of you seem to have a grasp on this... A lot of you guys (and Sony haters) do not.

No. X1 having DX12 will not "make" a game 1440p or any other silly metric. We don't know what is in DX12 yet, but we can assume like DX11 there will be specific hardware acceleration for certain techniques. Without getting too far into it, a developer might now have access to DX12 ray tracing, Dx12 SuperFog, DX12 ultraMegaConcentricityRendering, or you can make up your own effect for example. That dev can then use that effect that saves GPU time they would have otherwise implemented in a less efficient way. This frees up resources for things like rendering in 1080p or whatever extra effects/AI/gameplay/whatever are desired.

We would not "have to wait" for PC Hardware to start shipping before seeing DX12 in X1 games. If anything, DX12 on X1 should improve the adoption on PC. There is commonality in 9/10/11/12 often with a software switch enabling the DX version. If MS is good with their dev tools, they will have DX12 features asap - regardless of what the PC market is doing.

That said... Developers are going to love commonality. If a company wants to release a PC, X1, PS4 game, they're going to be able to write very similar code on the X1/PC using DX11/12; the PS4 will be a port to OpenGL. This could have been a potential issue with Thief. Add in commonality with PC, WinMobile, X1, and I bet we start seeing high-level 3-platform indie titles soon as well.

Yes, possible performance increases aside... This PROVES that the X1 GPU is not the same 7000 series as the PS4. We knew MS had a large custom deal with AMD and we know the PS4 is extremely similar to production parts (an advantage for them at launch for sure). But... The inclusion of DirectX 12 means that MS is still holding some cards close to their chest, that we don't know 100% of the story, and that while MS obviously launched early - there is a long term plan in place."

Where would you consider the XBO superior to the PS4 in the hardware department?

The dual tessellation and dual rasterizer units (not referring to ROPs) run at a slightly higher clock speed.

As my Tomb Raider 2013 benchmark showed, an R9-290 limited to 69 GB/s memory bandwidth fell to near X1 non-tiling results.

So it's Xbox One exclusive, this is not coming to PS4

You're an idiot, nuff said. That said, either this will be very good for the Xbox One or it will turn out like DX 10 for Xbox 360

The Reddit post is LOL since PS4 doesn't have OpenGL.

Read https://twitter.com/Wolfire/status/408656394271744000

"PS4 uses its own low-level rendering API called GNM, and a higher-level wrapper called GNMX. There is no OpenGL or DX support."

----------------

http://www.tomshardware.com/news/amd-mantle-opengl-directx-gdc-2014,26188.html

"AMD Supports Possible Lower Level DirectX" -tomshardware

From http://www.anandtech.com/show/7818/low-level-graphics-api-developments-gdc-2014

Microsoft: “You asked us to do more,” the DirectX session reads. “You asked us to bring you even closer to the metal and to do so on an unparalleled assortment of hardware. You also asked us for better tools so that you can squeeze every last drop of performance out of your PC, tablet, phone and console.”

AMD: “AMD would like you to know that it supports and celebrates a direction for game development that is aligned with AMD’s vision of lower-level, ‘closer to the metal’ graphics APIs for PC gaming,” reports an AMD rep. “While industry experts expect this to take some time, developers can immediately leverage efficient API design using Mantle, and AMD is very excited to share the future of our own API with developers at this year’s Game Developers Conference.”

Notice the word "console" alongside DirectX 12, i.e. MS's current game console is Xbox One, which runs on AMD GCN. DirectX 12 is aligned with AMD's vision of "lower-level, ‘closer to the metal’ graphics APIs for PC gaming". The Xbox One being the constant would be the clue as to whether current PC GPU hardware will run DirectX 12.

The main goal for DirectX 12 is to get "closer to the metal" and better hardware control.

------------------------

DirectX: Direct3D Futures (Presented by Microsoft) Max McMullen | Development Lead, Windows Graphics, From http://schedule.gdconf.com/session-id/828181

Location: Room 2002, West Hall Date: Thursday, March 20 Time: 4:00pm-5:00pm

"Come learn how future changes to Direct3D will enable next generation games to run faster than ever before! In this session we will discuss future improvements in Direct3D that will allow developers an unprecedented level of hardware control and reduced CPU rendering overhead across a broad ecosystem of hardware. If you use cutting-edge 3D graphics in your games, middleware, or engines and want to efficiently build rich and immersive visuals, you don't want to miss this talk."

Direct3D and the Future of Graphics APIs (Presented by AMD), From http://schedule.gdconf.com/session-id/828412

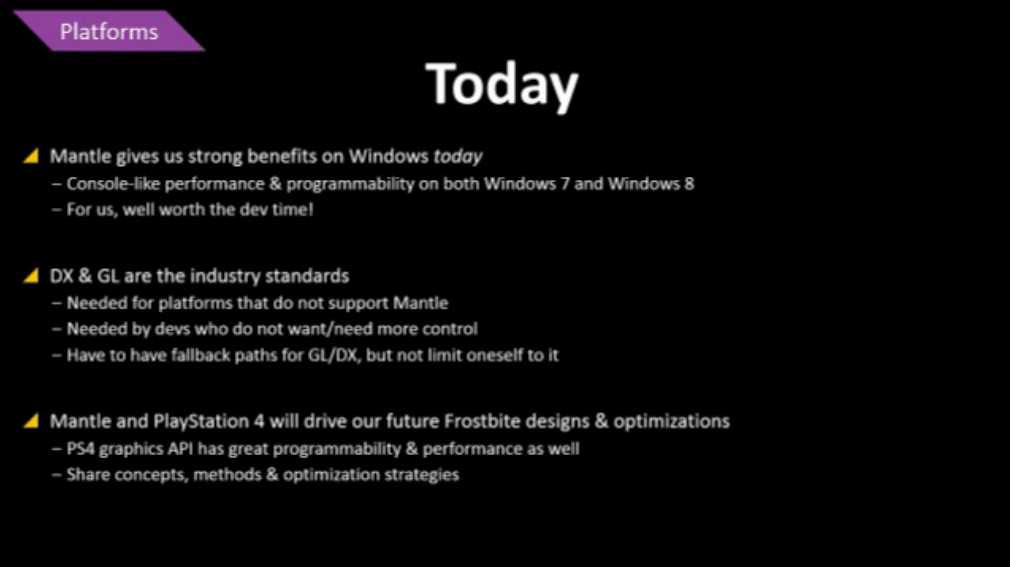

Johan Andersson | Technical Director, Frostbite

Dan Baker | Partner, Oxide Games

Dave Oldcorn | Software Engineering Fellow, AMD

Location: Room 3020, West Hall Date: Thursday, March 20 Time: 5:30pm-6:30pm

"In this session AMDs Dave Oldcorn, Frostbite technical Director Johan Andersson and Oxides Dan Baker will look at how new Direct3D advancements enhance efficiency and enable fully-threaded building of command buffers. They will demonstrate how AMD is using its recent experience in efficient graphics API design and its partnership with Microsoft to provide developers with the infrastructure to render next-generation graphics workloads at full performance. This presentation also discusses the best ways to exploit AMD hardware under heavy load and will invite developers to influence driver and hardware development."

AMD/EA DICE's work on Mantle gets recycled for DirectX 12.

To be fair I think dx10 was announced a year after the 360 launched.....

That is true but even at that, I know MS tried but why even announce it if the 360 could not handle it, it was a little silly.

Ignore the PS4 and OpenGL portion of that Reddit post. I was posting that to get a fundamental understanding of how DX12 could possibly affect X1 development going forward. Anything PS4 and OpenGL related is not the concern of this thread.

Major Nelson says otherwise about the eDRAM bandwidth

http://majornelson.com/2005/05/20/xbox-360-vs-ps3-part-4-of-4/

There's a 32 GB/s connection between Xbox 360's GPU and eDRAM.

The eDRAM has 256 GB/s to its ROPs, i.e. the ROPs were placed with the eDRAM. Xbox 360 has 8 ROPs at 500 MHz, which will limit its raster operations.

The main GPU can't access eDRAM at 256 GB/s, i.e. it is bottlenecked by the 32 GB/s connection.
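To put the bottleneck point in concrete terms, here is a minimal sketch: the usable bandwidth along a path is capped by its slowest link. The two figures are the ones quoted above; the function and constant names are made up for illustration.

```python
def effective_bandwidth_gbs(*link_bandwidths_gbs: float) -> float:
    """Bandwidth of a chain of links is limited by the slowest link."""
    return min(link_bandwidths_gbs)


GPU_TO_EDRAM_LINK = 32.0    # GB/s, main GPU die to the eDRAM die
EDRAM_TO_ROPS_LINK = 256.0  # GB/s, between the eDRAM and its ROPs

# Path seen by the main GPU: it must cross the 32 GB/s link first.
main_gpu_path = effective_bandwidth_gbs(GPU_TO_EDRAM_LINK, EDRAM_TO_ROPS_LINK)

# Path seen by the ROPs sitting next to the eDRAM.
rops_path = effective_bandwidth_gbs(EDRAM_TO_ROPS_LINK)

print(f"Main GPU to eDRAM: {main_gpu_path:.0f} GB/s")
print(f"ROPs to eDRAM:     {rops_path:.0f} GB/s")
```

So the 256 GB/s number only applies to work done by the ROPs next to the eDRAM, not to anything the main GPU die reads or writes.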

That is true but even at that, I know MS tried but why even announce it if the 360 could not handle it, it was a little silly.

Not sure what you're asking. DX10 was announced as MS's latest DirectX software for developers at the time, mainly used for the PC. The Xbox 360 happened to be out during that time frame, so many assumed that MS would have built a GPU that could have taken advantage of DX10... it couldn't. All it could do was mimic some of the basic DX10 features, and that was about it.

This situation (in my opinion) is slightly different because both DX12 and Xbox One were being created during the same time frame. I think DX12 was not ready, and in an effort to compete with Sony, MS went ahead and launched the system early (and it shows). This time around, though, I suspect they built their GPU to fully support the latest iteration of DX.

The Reddit poster doesn't know a thing, i.e. the poster didn't read GDC's summaries. DirectX 12's goal is similar to AMD's Mantle, and it doesn't follow the old DirectX heavy layer model.

DirectX 11.2 is the last DirectX release with the heavy layer model.

X1 has its own SDK/driver improvements.

Expect Games to run at sub 720p then.

It will probably provide a small performance boost to the Xbox One, since there may be features in DX12 that make the Xbox One's driver software slightly better, or features that are currently locked in the Xbox One will be unlocked and the Xbox One will be able to use them.

I think one of the cool things happening at the moment is that hardware is becoming less of a problem.

For example, Tiled Resources (OpenGL and DirectX): 6 GB textures into 16 MB...

More ideas like this and hardware would become less relevant, and the focus would be on what the software can do to make a game look good without actually having decent specs....
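A quick back-of-the-envelope sketch of what that claim implies. It assumes the 64 KB tile size used by Direct3D 11.2 tiled resources and reads the figures above as 6 GB of virtual texture and a 16 MB physical tile pool (binary units); both readings are assumptions, and the names are made up for illustration.

```python
KIB = 1024
MIB = 1024 * KIB
GIB = 1024 * MIB

TILE_SIZE_BYTES = 64 * KIB  # assumed tile size (Direct3D 11.2 tiled resources)


def tile_count(size_bytes: int, tile_size_bytes: int = TILE_SIZE_BYTES) -> int:
    """Number of whole tiles that fit in a region of the given size."""
    return size_bytes // tile_size_bytes


total_tiles = tile_count(6 * GIB)      # tiles in the full virtual texture
resident_tiles = tile_count(16 * MIB)  # tiles that fit in the physical pool
resident_fraction = resident_tiles / total_tiles

print(f"Virtual texture: {total_tiles} tiles")
print(f"Physical pool:   {resident_tiles} tiles")
print(f"Resident at any one time: {resident_fraction:.2%}")
```

Under those assumptions only a small fraction of the texture is resident at once, so the technique relies on streaming the right tiles in as the camera moves. It saves memory; it does not add bandwidth or shader throughput.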

PS4 does not use OpenGL -___-

DX12, you guys! Watch Dogs might even reach 980p! Ignoring the fact that most games don't even utilise DX11.

That'll be the lemming mantra throughout the year then. "W-wait for DirectX 12!!!" same as it was "W-wait for the dGPU!!!" last year.

Everyone seems to have been bitching about Direct X for a fair while now (gamers and developers) when it comes to the resources it uses and the effect it has on game performance. But when MS does something about it, people are still upset, I don't understand.

Do people want an improved Direct X or not?

As for the X1, anything that can improve optimization is a good thing.

@gamemediator: Some great logic you speak of. It is nice to have another person who sees things as they are and doesn't just resort to fanboy remarks.

I think the X1 has the hardware already in place for DX12 and the benefits should be very useful.

There is a lot we do not know about THE ONE. I think from a tech stand point, it is far more sophisticated than the PS4 and we are only scratching the surface.

But the X1 is so weak, it doesn't really matter.

its like extracting the last bit of juice from a very very tiny orange

People act like the PS4 GPU is an equivalent to a GeForce Titan. It's not that much better.

Also from what I've heard recently, low level apis benefit entry level computers more so anyway. It's not like people with decent i5s and i7s give a shit about the CPU overhead. And it's likely to have a smaller impact on mid to higher end GPUs.

Doesn't matter, the reason new generations of DirectX mean better graphics on PC is because of new hardware that supports the features of the new DirectX runtime. If the hardware doesn't support the new features of DirectX, then having it is like tits on a boar.

@blackace: Yeah, those new SDKs are really helping. Just look at MGS, Watch Dogs and The Witcher.

Oh wait...

You do realize the SDK was just released recently, right? The Witcher 3, Watch Dogs and all those other games that have been in development for over a year won't be using it. MGSV is a port. Hideo didn't do any programming on XB1. lol!! He just ported that shit over. That game isn't even using XB1's upscaler. Complete crap.

Let's see how Halo 5 and Sunset Overdrive turn out.

Lazy devs, remember that?

@ronvalencia:

Thank you, Ron, for saying what I have been saying since Mantle was first shown: it is DX software and Oxide middleware (hardware tiling, PRT). Mantle showed its last benchmarks right before the update of the X1's SDK. DX12 is X1, and the X1 and the Kaveri SoC are just part of the new embedded-RAM GPUs that are coming.

1. your memory type was not correct.

2. your memory type was not correct.

3. Increasing the GPU clock speed also increased the ESRAM's and ROPs' clock speed.

I have shown you that a 69 GB/s memory bandwidth limit reduced my R9-290 (1 GHz core) to near the X1's GPU.

Xbox One's primary memory is rated at around 68 GB/s, and it would need to use tiling tricks to make use of ESRAM as a texture cache for the GPU's TMUs. Both ROPs and TMUs (texture mapping units) are bound by memory bandwidth.

1 and 2. I switched the C and the G in GPU and CPU and left out a G in GDDR3.

Wow, that really takes away from the argument there. It's corrected now. It was typed on a smartphone.

3. The same applies to the increased ROP speed of the 770 versus the 780. The 780 has more of them running at a slower speed.

Your GPU is still faster than the xbox 1 gpu with the reduced memory bandwidth. Reduce your benchmark settings to what the xbox one version runs at. You don't know what the xbox one version settings are at.

Hmm, I wonder which console has twice the number of ROPs running at a lower speed....

Assuming linear scaling, the speed bump the Xbox One GPU received over the PS4 wouldn't make up for the 100% more ROPs the PS4 has over the Xbox One (32 vs 16).
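To put rough numbers on that linear-scaling argument, here is a minimal Python sketch of theoretical peak fill rate. It assumes one pixel per ROP per clock and ignores memory bandwidth entirely. The 853 MHz (Xbox One) and 800 MHz (PS4) clocks are assumptions for illustration, and `peak_fill_gpixels` is a made-up helper name.

```python
def peak_fill_gpixels(rops: int, clock_mhz: float) -> float:
    """Theoretical peak fill rate in Gpixel/s, assuming 1 pixel per ROP per clock."""
    return rops * clock_mhz / 1000.0


# Assumed GPU clocks: 853 MHz for Xbox One (after the bump), 800 MHz for PS4.
xbox_one = peak_fill_gpixels(16, 853)
ps4 = peak_fill_gpixels(32, 800)

print(f"Xbox One: {xbox_one:.2f} Gpixel/s")
print(f"PS4:      {ps4:.2f} Gpixel/s")
print(f"PS4 / Xbox One: {ps4 / xbox_one:.2f}x")
```

On paper the clock bump only narrows a 2x ROP gap to a bit under 1.9x. Whether either console can actually reach those peaks depends on memory bandwidth, which this sketch does not model.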

The 360 and One are more similar than they are different.

Developers have had almost 10 years dealing with the ESRAM/EDRAM. Why are multiplatform games suffering issues? The only excuse available is the 384 stream processor difference and 16 ROPs vs 32.

The PS4 has more hardware dedicated for graphics processing resources. No amount of API fixes will change that. Any low level feature of the DX12 can be ported to any API that has low level access. Even OPENGL has features added to make porting Direct3D easier.

This kind of sucks considering DirectX 11 is still very fresh and new, and now we can't get too used to it since MS is going to want everyone to move on to 12.

DX12 is the one that took the longest to come out. No idea why people wish for stagnation.

Because then they are gonna force you to upgrade to Windows 8 or maybe Windows 9 (coming next year), plus you'll have to buy new cards that support the API.

This DirectX thing is just a marketing ploy.

Using 3DMark Vantage's color fill rate test, described as: "test draws frames by filling the screen multiple times. The color and alpha of each corner of the screen is animated with the interpolated color written directly to the target using alpha blending".

From http://www.anandtech.com/show/5625/amd-radeon-hd-7870-ghz-edition-radeon-hd-7850-review-rounding-out-southern-islands/16

3DMark Vantage pixel fill rate (from AnandTech and Tech Report), listed with each card's memory bandwidth:

Radeon HD 7850 (32 ROPS at 860 MHz) = 8 Gpixel/s with 153.6 GB/s

Radeon HD 7870 (32 ROPS at 1 GHz) = 8 Gpixel/s with 153.6 GB/s (7.9 Gpixel/s from Tech Report)

Radeon HD 7950-800 (32 ROPS at 800 MHz) = 12.1 Gpixel/s with 240 GB/s

Radeon HD 7970-925 (32 ROPS at 925 MHz) = 13.2 Gpixel/s with 260 GB/s (from Tech Report)

----

The increase from 153.6 GB/s to 240 GB/s is 1.56X

The increase from 8 Gpixel/s to 12.1 Gpixel/s is 1.51X

----

The increase from 153.6 GB/s to 260 GB/s is 1.69X

The increase from 8 Gpixel/s to 13.2 Gpixel/s is 1.65X

----

There's a near-linear relationship between increased memory bandwidth and pixel fill rate.
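The ratios behind that claim can be re-derived in a few lines of Python. This is only a sketch using the figures quoted in this post; the `CARDS` table and the `scaling` helper are made up for illustration.

```python
# (memory bandwidth in GB/s, 3DMark Vantage fill rate in Gpixel/s), as quoted in this post
CARDS = {
    "7850": (153.6, 8.0),
    "7950-800": (240.0, 12.1),
    "7970-925": (260.0, 13.2),
}


def scaling(name: str, baseline: str = "7850") -> tuple[float, float]:
    """Return (bandwidth ratio, fill-rate ratio) of a card relative to the baseline."""
    bandwidth, fill = CARDS[name]
    base_bandwidth, base_fill = CARDS[baseline]
    return bandwidth / base_bandwidth, fill / base_fill


for card in ("7950-800", "7970-925"):
    bw_ratio, fill_ratio = scaling(card)
    print(f"{card}: bandwidth x{bw_ratio:.2f}, fill rate x{fill_ratio:.2f}")
```

All four cards have 32 ROPS, so the fill-rate gain tracking the bandwidth gain this closely is what suggests the test is bandwidth bound rather than ROP bound.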

Let's assume the 7950-800 represents the top capability of 32 ROPS. For 16 ROPS, dividing the 7950-800's 240 GB/s by 2 yields ~120 GB/s write.

Let's assume the 7970-925 represents the top capability of 32 ROPS. For 16 ROPS, dividing the 7970-925's 260 GB/s by 2 yields ~130 GB/s write.

For an estimate of 16 ROPS at 853 MHz, it's (853/800) x 120 = 127.95 GB/s. That is roughly equivalent to 17 ROPS at 800 MHz.
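The 16 ROPS estimate can be written out as a small Python sketch. It assumes, as the post does, that ROP write throughput scales linearly with both ROP count and clock, and it takes the 7950-800 as the reference. The function names are hypothetical.

```python
def write_bandwidth_gbs(rops: int, clock_mhz: float,
                        ref_bw_gbs: float = 240.0,
                        ref_rops: int = 32,
                        ref_clock_mhz: float = 800.0) -> float:
    """Scale a reference card's bandwidth linearly by ROP count and clock."""
    per_rop_per_mhz = ref_bw_gbs / ref_rops / ref_clock_mhz
    return per_rop_per_mhz * rops * clock_mhz


def equivalent_rops(rops: int, clock_mhz: float, at_clock_mhz: float = 800.0) -> float:
    """How many ROPS at `at_clock_mhz` match `rops` running at `clock_mhz`."""
    return rops * clock_mhz / at_clock_mhz


x1_bw = write_bandwidth_gbs(16, 853)
print(f"16 ROPS at 853 MHz: {x1_bw:.2f} GB/s")
print(f"Equivalent ROPS at 800 MHz: {equivalent_rops(16, 853):.2f}")
```

Swapping the reference arguments for the 7970-925 figures (260 GB/s, 32 ROPS, 925 MHz) gives a different number, so the result is only as good as the choice of reference card.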

Adding another 16 ROPS on X1 would be almost pointless.

To maximise eSRAM usage, Xbox One would need to use tiling tricks for TMUs (i.e. AMD PRT/Tiled Resource) and render targets.

---

For PS4's 32 ROPS estimate, 176 GB/s ÷ 7.5 GB/s per ROP = 23.47 ROPS, or about 24 ROPS (7.5 GB/s per ROP is the 7950-800's 240 GB/s divided by its 32 ROPS).

PS4's 32 ROPS would not be fully used, i.e. the 7950-800's 32 ROPS with 240 GB/s bandwidth give superior results to PS4's 32 ROPS with 176 GB/s bandwidth.
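The same per-ROP figure can be turned around to ask how many ROPS a given memory bandwidth can keep busy. This is a rough Python sketch under the post's own assumption of 7.5 GB/s per ROP at 800 MHz (240 GB/s over 32 ROPS); `rops_fed` is a made-up name.

```python
import math

# Assumption taken from the 7950-800 reference: 240 GB/s spread over 32 ROPS.
GBS_PER_ROP = 240.0 / 32


def rops_fed(bandwidth_gbs: float) -> float:
    """Number of 800 MHz ROPS that the given bandwidth can keep saturated."""
    return bandwidth_gbs / GBS_PER_ROP


ps4_rops = rops_fed(176.0)
print(f"PS4 at 176 GB/s feeds about {ps4_rops:.2f} ROPS "
      f"(rounded up: {math.ceil(ps4_rops)}) out of 32")
```

By this estimate roughly a quarter of PS4's ROPS would sit idle in a pure fill-rate test, though real games mix reads, writes and compression, so the actual figure will differ.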

Microsoft's ROPs math includes both read and write, which will saturate X1's ESRAM bandwidth, i.e. "eight bytes write, four bytes read". You're not comparing like with like.

My claim: 16 ROPS at 853 MHz is sufficient for 1920x1080p when given at least 128 GB/s of memory bandwidth.

My Radeon R9-290 at 1 GHz (via 290X firmware) with 128 GB/s memory bandwidth, Tomb Raider 2013 at ultimate settings:

At 128 GB/s memory bandwidth (~= 16 ROPS at 853 MHz), it was still able to drive 1920x1080p resolution at a 54 fps average.

Pixel fill rate is a function of ROPS and memory bandwidth.

The AMD Catalyst 14.2 beta 1.3 driver restores the user's memory clock speed control as a clock speed slider bar, i.e. I can set memory bandwidth anywhere from 32 GB/s to >320 GB/s.

The AMD Catalyst 13.12 driver's memory control has a percentage-based slider bar, i.e. the lowest setting is 40 percent of 320 GB/s = 128 GB/s.
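For anyone wanting to check the bandwidth figures, here is a minimal Python sketch of the usual formula: bus width in bytes times the effective data rate per pin. The bus widths and data rates below (512-bit at 5 Gbps for the R9-290, 256-bit at 5.5 Gbps for PS4, 256-bit at 2.133 Gbps for Xbox One's DDR3) are assumptions and are not stated in this post.

```python
def memory_bandwidth_gbs(bus_width_bits: int, gbps_per_pin: float) -> float:
    """Peak memory bandwidth in GB/s from bus width and effective data rate per pin."""
    return bus_width_bits / 8 * gbps_per_pin


# Assumed configurations (not taken from this thread).
r9_290_stock = memory_bandwidth_gbs(512, 5.0)
ps4 = memory_bandwidth_gbs(256, 5.5)
xbox_one_ddr3 = memory_bandwidth_gbs(256, 2.133)

# Catalyst 13.12's lowest slider setting, 40 percent of stock.
r9_290_lowest = r9_290_stock * 40 / 100

print(f"R9-290 stock:  {r9_290_stock:.1f} GB/s")
print(f"R9-290 at 40%: {r9_290_lowest:.1f} GB/s")
print(f"PS4:           {ps4:.1f} GB/s")
print(f"Xbox One DDR3: {xbox_one_ddr3:.1f} GB/s")
```

Under those assumptions the results line up with the 320, 128, 176 and ~68 GB/s figures used in this discussion.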

-----------------------

The main reason why many of Xbox One's games are running at less than 1080p:

Read http://gamingbolt.com/xbox-ones-esram-too-small-to-output-games-at-1080p-but-will-catch-up-to-ps4-rebellion-games#SBX8QyXmrlJEyBW1.99

Bolcato stated that, “It was clearly a bit more complicated to extract the maximum power from the Xbox One when you’re trying to do that. I think eSRAM is easy to use. The only problem is…Part of the problem is that it’s just a little bit too small to output 1080p within that size. It’s such a small size within there that we can’t do everything in 1080p with that little buffer of super-fast RAM.

“It means you have to do it in chunks or using tricks, tiling it and so on...

...

Will the process become easier over time as understanding of the hardware improves? “Definitely, yeah. They are releasing a new SDK that’s much faster and we will be comfortably running at 1080p on Xbox One. We were worried six months ago and we are not anymore, it’s got better and they are quite comparable machines.

“Yeah, I mean that’s probably why, well at least on paper, it’s a bit more powerful. But I think the Xbox One is gonna catch up. But definitely there’s this eSRAM. PS4 has 8GB and it’s almost as fast as eSRAM [bandwidth wise] but at the same time you can go a little bit further with it, because you don’t have this slower memory. That’s also why you don’t have that many games running in 1080p, because you have to make it smaller, for what you can fit into the eSRAM with the Xbox One.”
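To see why the eSRAM size matters for 1080p, here is a rough Python sketch of render target sizes. It assumes 32 MiB of eSRAM and a hypothetical deferred-rendering layout of four colour targets plus one depth target at 4 bytes per pixel each; neither the size nor the layout comes from the interview.

```python
ESRAM_BYTES = 32 * 1024 * 1024  # assumed eSRAM capacity: 32 MiB


def target_bytes(width: int, height: int, bytes_per_pixel: int = 4) -> int:
    """Size of one uncompressed render target in bytes."""
    return width * height * bytes_per_pixel


def fits_in_esram(width: int, height: int, num_targets: int,
                  bytes_per_pixel: int = 4) -> bool:
    """True if all targets fit in eSRAM at once, with no tiling tricks."""
    return num_targets * target_bytes(width, height, bytes_per_pixel) <= ESRAM_BYTES


# Hypothetical layout: 4 colour targets + 1 depth target.
for width, height in ((1920, 1080), (1600, 900), (1280, 720)):
    total_mib = 5 * target_bytes(width, height) / (1024 * 1024)
    verdict = "fits" if fits_in_esram(width, height, 5) else "does not fit"
    print(f"{width}x{height}: {total_mib:.1f} MiB, {verdict}")
```

With that layout the full set of targets only fits once the resolution drops below 1080p, which is the situation where a developer either lowers the resolution or splits the frame into tiles.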

----------------------

Note that tiling tricks don't overcome ALU-bound issues, i.e. refer to the prototype 7850 with 12 CUs (768 stream processors with 48 TMUs) at 860 MHz and 153.6 GB/s memory bandwidth.

PS4's GCN (1.84 TFLOPS with 176 GB/s memory bandwidth) solution is very close to Radeon HD R7-265 (1.89 TFLOPS with 179 GB/s memory bandwidth) which is faster than retail 7850 with 16 CUs (1.76 TFLOPS with 153.6 GB/s memory bandwidth).

For prototype 7850 with 12 CUs, read http://www.tomshardware.com/reviews/768-shader-pitcairn-review,3196.html

Remember, X1 doesn't have prototype 7850's 2GB GDDR5 with 153.6 GB/s bandwidth which doesn't need any tiling tricks for current PC games.

PS4's GCN solution slots in alongside the highest mid-range Radeon R7 model, i.e. the R7-265.
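The TFLOPS figures quoted for these GCN parts follow from CU count and clock. Here is a minimal Python sketch, assuming 64 stream processors per CU and 2 FLOPs per stream processor per clock (one fused multiply-add). The CU counts and clocks for PS4 (18 CUs at 800 MHz), the R7-265 (16 CUs at 925 MHz) and Xbox One (12 CUs at 853 MHz) are assumptions and are not all stated in this post.

```python
def gcn_tflops(compute_units: int, clock_mhz: float,
               sp_per_cu: int = 64, flops_per_sp_per_clock: int = 2) -> float:
    """Peak single-precision TFLOPS for a GCN GPU."""
    return compute_units * sp_per_cu * flops_per_sp_per_clock * clock_mhz / 1e6


# (CUs, clock in MHz); several of these are assumed, see the note above.
GPUS = {
    "PS4": (18, 800),
    "R7-265": (16, 925),
    "Retail 7850": (16, 860),
    "Prototype 7850": (12, 860),
    "Xbox One": (12, 853),
}

for name, (cus, clock) in GPUS.items():
    print(f"{name}: {gcn_tflops(cus, clock):.2f} TFLOPS")
```

On these assumptions the prototype 12 CU 7850 and Xbox One land within about 1 percent of each other in ALU throughput, which is why the prototype makes a convenient stand-in for ALU-bound comparisons.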

-------------

OpenGL is not available on PS4

But the X1 is so weak, it doesn't really matter.

its like extracting the last bit of juice from a very very tiny orange

People act like the PS4 GPU is an equivalent to a GeForce Titan. It's not that much better.

Also, from what I've heard recently, low-level APIs benefit entry-level computers more anyway. It's not like people with decent i5s and i7s give a shit about the CPU overhead. And it's likely to have a smaller impact on mid-range to high-end GPUs.

Low-level API access enables other algorithm optimisations, as shown by Intel's Instant Access demos. A direct port doesn't usually show this type of algorithm optimisation gain.

If PC gains low level API access via DirectX 12, then PC's codebase can change with algorithm optimisations that would work on Xbox One and PS4.

DirectX 12 enables a game to be created from ground up with better algorithm optimisations. Atm, PC (i.e. Microsoft's fault) is the bottleneck for deep algorithm optimisations.

AMD/EA DICE's "Mantle For Windows" forced Microsoft to fix their Direct3D/WDM issues on the Windows PC platform.

Notice that AMD, Intel, NVIDIA and Qualcomm are all supporting DirectX 12 and these are USA semiconductor companies with zero count from the UK (i.e. Imagination Technologies's PowerVR and ARM's Mali).

@ronvalencia:

Thank you RON for saying what I have been saying since Mantle was first shown, it is DX software, oxide middleware (hardware tiling PRT,) When mantle showed its last benchmarks right before update of the SDK of the x1. Dx12 is X1 and the x1 and kaveri Soc is just part of the new embedded ram gpus that's coming.

Maybe AMD and MS collaborated on the next gen gpu and Mantle and DX12 are the same, beta tested in the future...

@GravityX: If you read the R&D docs, that's exactly what it is. And if you look at the X1 GPU, it's like no other at this moment: Intel, ARM, NVIDIA, AMD and MS designed the GPU. Just look at what the X1 is doing inside; it's a little piece of each of those companies' latest movements.

Another example on AMD GCN ISA vs DX and GL from http://timothylottes.blogspot.com.au/2013/08/notes-on-amd-gcn-isa.html

"DX and GL are years behind in API design compared to what is possible on GCN. For instance there is no need for the CPU to do any binding for a traditional material system with unique shaders/textures/samplers/buffers associated with geometry. Going to the metal on GCN, it would be trivial to pass a 32-bit index from the vertex shader to the pixel shader, then use the 32-bit index and S_BUFFER_LOAD_DWORDX16 to get constants, samplers, textures, buffers, and shaders associated with the material. Do a S_SETPC to branch to the proper shader.

This kind of system would use a set of uber-shaders grouped by material shader register usage. So all objects sharing a given uber-shader can get drawn in say one draw call (draw as many views as you have GPU perf for). No need for traditional vertex attributes either, again just one S_SETPC branch in the vertex shader and manually fetch what is needed from buffers or textures..."
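As a loose CPU-side analogy for the scheme in that quote, here is a Python sketch in which each object carries only a material index, the material data lives in one table, and objects are grouped by uber-shader so each group could go out in a single draw call. Everything here (the `Material` fields, the table contents, `batch_by_uber_shader`) is hypothetical; it does not model GCN instructions such as S_BUFFER_LOAD_DWORDX16 or S_SETPC.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Material:
    uber_shader: str   # which uber-shader draws this material
    constants: tuple   # stand-in for per-material constants
    texture: str       # stand-in for a texture descriptor


# Hypothetical material table; on the GPU this would live in a buffer
# and be fetched by index from inside the shader.
MATERIALS = [
    Material("opaque", (1.0, 0.0), "rock"),
    Material("opaque", (0.5, 0.2), "wood"),
    Material("skin", (0.8, 0.1), "face"),
]


def batch_by_uber_shader(objects):
    """Group (object_name, material_index) pairs by the uber-shader they need."""
    batches = defaultdict(list)
    for name, material_index in objects:
        shader = MATERIALS[material_index].uber_shader
        batches[shader].append((name, material_index))
    return dict(batches)


scene = [("boulder", 0), ("crate", 1), ("hero", 2), ("cliff", 0)]
batches = batch_by_uber_shader(scene)
for shader, draws in batches.items():
    print(f"{shader}: one draw call covering {draws}")
```

The point of the analogy is that the CPU never binds per-object state; it only hands over indices, and the lookup happens where the material is used.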

--------------------

More hardware details on NVIDIA "Maxwell" from https://devblogs.nvidia.com/parallelforall/5-things-you-should-know-about-new-maxwell-gpu-architecture/

On Maxwell, each SMM (128 stream processors) has 64 KB of shared local memory. On GCN, each CU (64 stream processors) has 64 KB of shared local memory.

On Kepler, each SMX (192 stream processors) has 64 KB of shared local memory that is shared with the L1 cache, which reduces the effective shared local memory. For Maxwell, NVIDIA separated the L1 cache functions from the 64 KB shared local memory and combined the L1 cache with the texture cache.

On GCN, the CU's L1 cache is separate from the 64 KB local shared memory. The GCN CU's L1 cache is tied to the texture units and supports compressed data formats.

In terms of basic design for GPGPU, NVIDIA's Maxwell is closer to AMD's GCN.
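A quick Python sketch of shared local memory per stream processor, using only the per-block figures quoted in this post. The Kepler value is an upper bound, since its 64 KB is shared with the L1 cache; `BLOCKS` and `bytes_per_sp` are made-up names.

```python
# (shared local memory in KB, stream processors) per block, as quoted in this post
BLOCKS = {
    "Maxwell SMM": (64, 128),
    "GCN CU": (64, 64),
    "Kepler SMX": (64, 192),  # upper bound: the 64 KB is shared with L1 cache
}


def bytes_per_sp(block: str) -> float:
    """Shared local memory available per stream processor, in bytes."""
    kilobytes, stream_processors = BLOCKS[block]
    return kilobytes * 1024 / stream_processors


for block in BLOCKS:
    print(f"{block}: {bytes_per_sp(block):.0f} bytes per stream processor")
```

Per stream processor, GCN still has twice Maxwell's shared local memory, so "closer" here refers to the layout (dedicated shared memory, L1 paired with the texture path) rather than to the capacity ratio.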

I was actually trying to reference an article that was written on a tech website recently, but I can't find it. The article said that technology like Mantle would have a much bigger impact on entry-level CPUs/GPUs, like AMD's APUs for example.

My point was that people in this thread are saying DX12 will have a negative impact on the X1 performance and I was pointing out, actually it's probably most suited to current gen consoles.

Of course it's needed across the board though. The optimizations are things that probably should have been looked into a long time ago. It probably shouldn't have needed AMD to force it.

This kind of sucks considering DirectX 11 is still very fresh and new, and now we can't get too used to it since MS is going to want everyone to move on to 12.

DX12 is the one that took the longest to come out. No idea why people wish for stagnation.

Because then they are gonna force you to upgrade to Windows 8 or maybe Windows 9 (coming next year), plus you'll have to buy new cards that support the API.

This DirectX thing is just a marketing ploy.

Hardware evolves, software evolves. Just because you don't want to upgrade for 10 years doesn't mean everyone feels the same.

Plus, do you really think games are going to be DX12-only the moment DX12 launches? It's going to take years before it becomes the norm. The reason I'm excited is not because DX12 games are going to come out this year (that's not going to happen) but because I want to see where gaming is going in the long run. For example, DX10 and 11 didn't see much use over their lifespan, but the research and knowledge gathered back then is used today in modern console and PC games.

Let MS throw money at that research; nothing bad will come out of it.
