They got a $400 console version running next to literally a $3000 PC version and you still can't tell the difference lmfao! They even tried to zoom in 1000X on some pebble in the distance to show off dat PC powah! Lol! Whew. Hermits must be butthurt as a MF this gen. All that money down the drain just for indiscernible differences on garbage Ubisoft ports. It's like you paid for a high end hooker and got stuck with a TS. HAHAHAHA! Ahhh so good. Lemmings watch out. It'll be even worse for Xbone 2. Lol!

For Honor on ps4 Pro..1440p 30fps low/med settings

Nah, I ain't mad. I've got AAA games to play on my PS4 Pro. When was the last time you played a AAA game on your PotatoBox?

Yes! You are mad. You mad at For Honor for running like shit and you now feel like the PS4 Pro is performing as shitty as the Xbox One "teh fail" system. You said all of the in your "I am not angry I am just mad" posts that followed your "I am not mad just mad" post. LMAO.

Ya'll better pray that thing has actual exclusive games

"We said we're not going to have console-exclusive games for Project Scorpio. It's one ecosystem--whether you have an Xbox One S or Project Scorpio, we don't want anyone to be left behind"

http://www.gamespot.com/articles/xbox-scorpio-wont-have-exclusive-games-except-for-/1100-6442754/

Ubishit trash game runs like shit, no surprise.

Where is the Switch version of this shit game?

It runs better than DowngradeCharted 4 and also looks more 4K than Sony First Party offerings, LMAO!

Stop peasant..is 4k 60FPS ultra or nothing and your 970 doesn't cut it..hahahahaha

They got a $400 console version running next to literally a $3000 PC version and you still can't tell the difference lmfao! They even tried to zoom in 1000X on some pebble in the distance to show off dat PC powah! Lol! Whew. Hermits must be butthurt as a MF this gen. All that money down the drain just for indiscernible differences on garbage Ubisoft ports. It's like you paid for a high end hooker and got stuck with a TS. HAHAHAHA! Ahhh so good. Lemmings watch out. It'll be even worse for Xbone 2. Lol!

resolutionwise you need to be another foot closer to the tv.

but the ambient occlusion, shadows and draw distance are clear....as well as particle effects and other small things are all more vibrant on pc. watch the vid.

not to mention the 30fps.

Nah, I ain't mad. I've got AAA games to play on my PS4 Pro. When was the last time you played a AAA game on your PotatoBox?

Yes! You are mad. You mad at For Honor for running like shit and you now feel like the PS4 Pro is performing as shitty as the Xbox One "teh fail" system. You said all of the in your "I am not angry I am just mad" posts that followed your "I am not mad just mad" post. LMAO.

? You didn't answer my question and went on a a butthurt lemming tirade. I didn't buy my PS4 Pro expecting PC performance from it and that's why I have a PC. You however bought your PotatoBox expecting some games to play on it and it's been eons since the FlopBone got a AAA game. In fact the only real AAA game on it is Forza Horizon 3 which also happens to be on PC (runs and looks better on PC too).

Horizon comes out in less than a week and is a certified AAA game only available on PS4, and the PS4 Pro version just happens to be the best version of it. NiOh another certified AAAE game on PS4 came out a few weeks ago. These games already more than justify my purchase. Can you say the same for your PotatoBox? It's hilarious when lems like you claim ownage, you can only claim ownage from a position of strength. Not when you have the weaker console and no games to play on it. At least Sheep have some exclusives to justify their weak hardware. ? What do lems like you have?

Another fanboy that only looks at review scores to judge games. That is so damn sad you are missing out on games that you really might enjoy, but you have to put up your fanboy glasses before doing so. Do you ever enjoy any movies that the critics hate, I would bet money that you do. Do you only listen to music that critics only give 4 or 5 stars, I bet you don't. Please stop this fanboy bullshit and I only play games that are 85 or higher because that is the only way they are any good. *cry*

@Xabiss: ? "Review scores don't matter when your games are flopping" - Lems 2017

Thanks for proving my point by again not answering my question and trying to deflect. Do you like movies that critics hate? Do you only listen to music with 4 or 5 stars? So why do you only play games that get top reviews? Like I said before, it is a damn shame you are missing out on some games that you might actually enjoy, but keep deflecting like an idiot. It is the only thing you ever do in your posts!

Not a Lem BTW and definitely not a damn fanboy like you!

@Xabiss: ? "Review scores don't matter when your games are flopping" - Lems 2017

BTW my shiny new PS4 Pro will be here on Monday just in time for me to dig into Horizon on release day! Oh what life is like not to be a fanboy or a hater, but to be a TRUE GAMER!!!!

Awwww yeahhhhh! Welcome to the Pro club my friend. You will not be disappointed.

Yes! You are mad. You mad at For Honor for running like shit and you now feel like the PS4 Pro is performing as shitty as the Xbox One "teh fail" system. You said all of the in your "I am not angry I am just mad" posts that followed your "I am not mad just mad" post. LMAO.

? You didn't answer my question and went on a a butthurt lemming tirade. I didn't buy my PS4 Pro expecting PC performance from it and that's why I have a PC. You however bought your PotatoBox expecting some games to play on it and it's been eons since the FlopBone got a AAA game. In fact the only real AAA game on it is Forza Horizon 3 which also happens to be on PC (runs and looks better on PC too).

Horizon comes out in less than a week and is a certified AAA game only available on PS4, and the PS4 Pro version just happens to be the best version of it. NiOh another certified AAAE game on PS4 came out a few weeks ago. These games already more than justify my purchase. Can you say the same for your PotatoBox? It's hilarious when lems like you claim ownage, you can only claim ownage from a position of strength. Not when you have the weaker console and no games to play on it. At least Sheep have some exclusives to justify their weak hardware. ? What do lems like you have?

I don't know the fanboy vernacular you are using. It's hard to understand what you are saying when you go on your fanboy tirade. Does any of what you type improve the PS4 Pro performance of For Honor? Or are you too mad to stay on topic?

@Pedro: LOL, you're still dodging the question. I rest my case. TLHBRekt.

Still terribly angry I see. So you mad that Ubisoft game runs like shit on the Pro and you mad that it makes you feel like you are playing on an Xbox One, and you then go on a tirade but I am butthurt and Rekt when you were the one bitching the whole time. LMAO.

@Pedro: ? Sorry for your loss. Maybe next gen you'll make better purchasing decisions.

Look like you are still mad. LMAO

? Tell us how you really feel. Let it all out.

@KBFloYd Where did they say low/medium settings?

Meanwhile on the WiiU and Switch it has an EXTREMELY stable performance of : 0p, 0fps reaching peaks of, you guessed it,0p and 0fps. On the upside it also doesn't have v-sync or framepacing issues.

What a letdown. Meh Nintendo.

Completely irrelevant.

Look like you are still mad. LMAO

? Tell us how you really feel. Let it all out.

No Feelings on the matter but you shared yours. Lets reflect. :)

Ubishit trash game runs like shit, no surprise.

Where is the Switch version of this shit game?

Things typically run like shit on shit hardware.

Then there was the meltdown madness.

? You didn't answer my question and went on a a butthurt lemming tirade. I didn't buy my PS4 Pro expecting PC performance from it and that's why I have a PC. You however bought your PotatoBox expecting some games to play on it and it's been eons since the FlopBone got a AAA game. In fact the only real AAA game on it is Forza Horizon 3 which also happens to be on PC (runs and looks better on PC too).

Horizon comes out in less than a week and is a certified AAA game only available on PS4, and the PS4 Pro version just happens to be the best version of it. NiOh another certified AAAE game on PS4 came out a few weeks ago. These games already more than justify my purchase. Can you say the same for your PotatoBox? It's hilarious when lems like you claim ownage, you can only claim ownage from a position of strength. Not when you have the weaker console and no games to play on it. At least Sheep have some exclusives to justify their weak hardware. ? What do lems like you have?

Don't be mad. It's just a Ubisoft game that runs like shit and makes you feel like you are playing on an Xbox One.

@Pedro: So the reason for your lemming meltdown is that you want PS4 owners to feel what it's like to be an Xboner this gen getting mediocre running multiplats? ? That explains everything now. Too bad the Xbone version is still the worst performing version lol. I still can't sympathize with you.

? Hold that L lemming

what a letdown....but but meh pro.

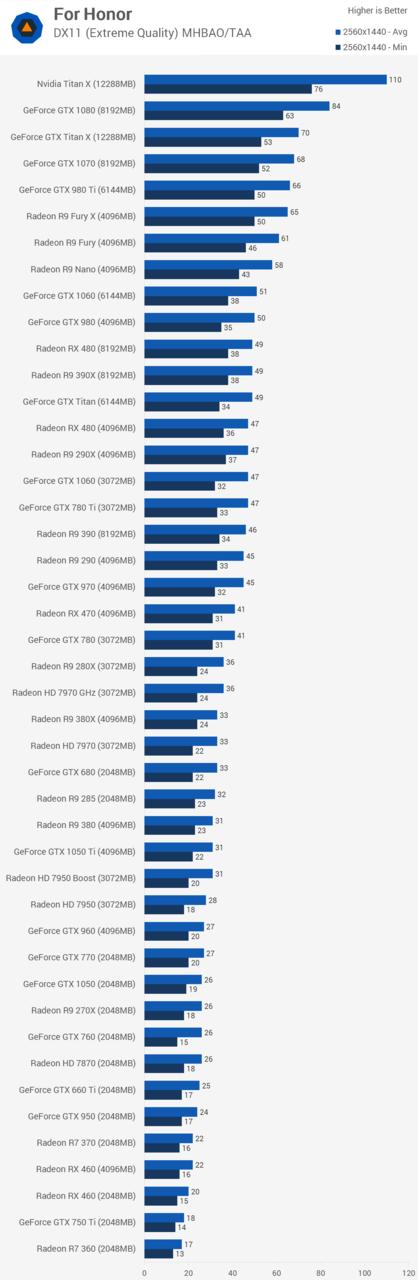

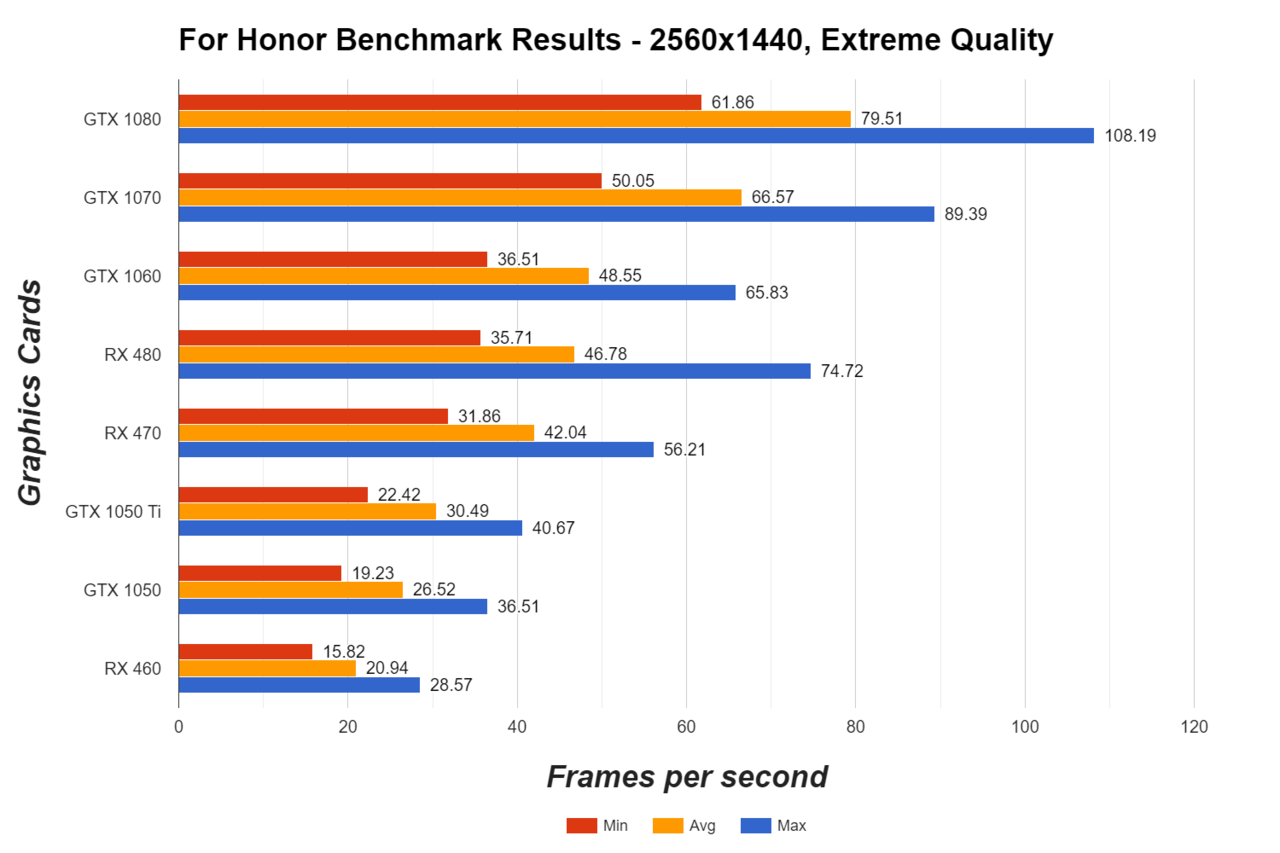

RX-470 delivered 41 fps at 1440p and extreme quality. For Honor is a Nvidia Gameworks title.

http://www.gamespot.com/articles/for-honor-benchmarked-on-all-modern-graphics-cards/1100-6448115/

RX-470 delivered 56 fps at 1440p and extreme quality.

Here’s how those cards performed at high settings:

| Graphics Card and Settings Used | Min FPS | Avg FPS | Max FPS |
|---|---|---|---|
| RX 480 (1440p, High preset) | 54.39 | 66.05 | 75.82 |
| RX 470 (1440p, High preset) | 48.57 | 58.79 | 71.58 |
| GTX 1050 Ti (1440p, High preset) | 34.02 | 42.2 | 50.16 |
| GTX 1050 (1440p, High preset) | 29.64 | 37.22 | 44.66 |
| RX 460 (1440p, High preset) | 24.88 | 30.25 | 34.73 |
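For anyone who wants to check which of those cards actually hold a console-style 30 fps floor at 1440p High, here is a rough sketch. The numbers are copied from the table above; the `BENCHMARKS` list and the `holds_target` helper are just names made up for this example, and using the minimum FPS column as the cutoff is my own assumption about what "holds" should mean.

```python
# Figures copied from the 1440p High preset table above:
# (card, min FPS, avg FPS, max FPS)
BENCHMARKS = [
    ("RX 480", 54.39, 66.05, 75.82),
    ("RX 470", 48.57, 58.79, 71.58),
    ("GTX 1050 Ti", 34.02, 42.2, 50.16),
    ("GTX 1050", 29.64, 37.22, 44.66),
    ("RX 460", 24.88, 30.25, 34.73),
]


def holds_target(rows, target_fps):
    """Return the cards whose minimum FPS never drops below target_fps."""
    return [name for name, min_fps, _avg, _max in rows if min_fps >= target_fps]


if __name__ == "__main__":
    print("Holds 30 fps:", holds_target(BENCHMARKS, 30.0))
    print("Holds 60 fps:", holds_target(BENCHMARKS, 60.0))
```

Going by minimums, only the RX 480, RX 470 and GTX 1050 Ti stay above 30 fps, and none of the cards in the table hold 60 fps at these settings.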

@Pedro: So the reason for your lemming meltdown is that you want PS4 owners to feel what it's like to be an Xboner this gen getting mediocre performing multiplats? ? That explains everything now. Too bad the Xbone version is still the worst performing version lol. I still can't sympathize with you.

LOL. LMAO. You can't say I am having a meltdown when you are the one that has clearly lost their cool. LMAO. I can't believe you are still angry because you owned yourself and you can't figure out how to backpedal your way out. LMAO. Don't be mad, be glad. BTW, none of what you said changes anything about For Honor's performance on the Pro. It doesn't matter how hard you try. LMAO. You still mad bro.

@quadknight said:

Ubishit trash game runs like shit, no surprise.

? Typing all the "LMAO" in the world still can't hide your obvious arsehurt in your replies.

@Shewgenja: Can't take the heat, get out of the kitchen bitch. You're always dogging on MS and Xbox One, but now that you see the PS Pro is absolute shit and a half assed piece of crap, you have meltdowns. Scorpio is going to run circles around your precious PS4, OG or Pro, and you're going to cry and have a meltdown every step of the way.

resolutionwise you need to be another foot closer to the tv.

but the ambient occlusion, shadows and draw distance are clear.

not to mention the 30fps.

Meanwhile you are stuck with 2001 graphics..ahahahahaa

And you're stuck with a DOS computer and NO console.

Ubishit trash game runs like shit, no surprise.

Where is the Switch version of this shit game?

It runs better than DowngradeCharted 4 and also looks more 4K than Sony First Party offerings, LMAO!

Stop peasant..is 4k 60FPS ultra or nothing and your 970 doesn't cut it..hahahahaha

Oh, it was your attempt at being funny. Listen guys, El-Tomato is trying to be funny.

Your consolation prize: Ha Ha Ha

@Shewgenja: Can't take the heat, get out of the kitchen bitch. You're always dogging on MS and Xbox One, but now that you see the PS Pro is absolute shit and a half assed piece of crap, you have meltdowns. Scorpio is going to run circles around your precious PS4, OG or Pro, and you're going to cry and have a meltdown every step of the way.

Oh, nope. It was more of a fair warning. Now, I hope Scorpio is a proper Gen 9 console with a fresh software lineup and renewed interest from MS in making exclusives. That would be for the absolute best in my opinion.

However, if all Scorpio is going to be is a peen waving fest over multiplats, I will rain on the parade in ways that make 2013 look like a legendary dream realm. I don't get mad. I get even.

They got a $400 console version running next to literally a $3000 PC version and you still can't tell the difference lmfao! They even tried to zoom in 1000X on some pebble in the distance to show off dat PC powah! Lol! Whew. Hermits must be butthurt as a MF this gen. All that money down the drain just for indiscernible differences on garbage Ubisoft ports. It's like you paid for a high end hooker and got stuck with a TS. HAHAHAHA! Ahhh so good. Lemmings watch out. It'll be even worse for Xbone 2. Lol!

It's 30fps, not 60fps; a 30fps game requires half the GPU power. It's not native 4K, it's checkerboarding. And low graphics settings are shit.

? Typing all the "LMAO" in the world still can't hide your obvious arsehurt in your replies.

Asshurt? Why would I be asshurt. I am not the one that is mad at For Honor "runs like shit"

@quadknight said:

Ubishit trash game runs like shit, no surprise.

Nor was I so offended that I need to lecture in order to hide my madness like this

@quadknight said:

? You didn't answer my question and went on a a butthurt lemming tirade. I didn't buy my PS4 Pro expecting PC performance from it and that's why I have a PC. You however bought your PotatoBox expecting some games to play on it and it's been eons since the FlopBone got a AAA game. In fact the only real AAA game on it is Forza Horizon 3 which also happens to be on PC (runs and looks better on PC too).

Horizon comes out in less than a week and is a certified AAA game only available on PS4, and the PS4 Pro version just happens to be the best version of it. NiOh another certified AAAE game on PS4 came out a few weeks ago. These games already more than justify my purchase. Can you say the same for your PotatoBox? It's hilarious when lems like you claim ownage, you can only claim ownage from a position of strength. Not when you have the weaker console and no games to play on it. At least Sheep have some exclusives to justify their weak hardware. ? What do lems like you have?

LMAO. <----because it makes you more mad. LMAO.

@Shewgenja: Lol you trying to undermine Scorpio running all multiplats at 1080p 60fps with no compromises is very ignorant. I'm not saying Scorpio will do that, but if it does... There's not much you can say without looking like a butthurt cow.

They got a $400 console version running next to literally a $3000 PC version and you still can't tell the difference lmfao! They even tried to zoom in 1000X on some pebble in the distance to show off dat PC powah! Lol! Whew. Hermits must be butthurt as a MF this gen. All that money down the drain just for indiscernible differences on garbage Ubisoft ports. It's like you paid for a high end hooker and got stuck with a TS. HAHAHAHA! Ahhh so good. Lemmings watch out. It'll be even worse for Xbone 2. Lol!

It's 30fps, not 60fps; a 30fps game requires half the GPU power. It's not native 4K, it's checkerboarding. And low graphics settings are shit.

Why are lems talking about low graphic settings being shit? Lets get real, folks haha

? Typing all the "LMAO" in the world still can't hide your obvious arsehurt in your replies.

Bizzaro world

Oh, it was your attempt at being funny. Listen guys, El-Tomato is trying to be funny.

Your consolation prize: Ha Ha Ha

NO i am dead serious what is the point of whoring PC graphics if you are not on top.? Your PC is another PS4 Pro just below the real kings...

Hahahahaa

Bizzaro world

The one you live with your lemming friends sure is..hahaha

Who said I'm not on top? I can still max out every game and run it at 60 FPS. Not to mention, it's up to me to customize the experience as I desire, plus more exclusives, better games, free online and still better graphics than the latest not-4K-capable Pro. LMAO!

I'll get 4K when the hardware is capable enough for it.

Anyone else tired of retconned Scorpio threads? I swear to god, for each of these you turkeys make, I'm going to make a thread comparing my gaming PC to the Scorpio. Ya'll better pray that thing has actual exclusive games because I will bury you to your balls in cross-plat performance threads if not. Trust.

Anyone else tired of "WHere's Xbox One Exclusive", "PS4 already own 2017 in Exclusives", "Do we even need an Xbox when you have a PC" threads? Because those even occur more, and are always the same, with just dakur, and some alt accounts from banned cows jerking each other off thinking about Xbox.

Also no one expects Scorpio to do better than a PC, that's just silly... It will just outperform PS Pro by a big margin. But funny to see that you've got your panties in a bunch due to the bad performance of the PS Pro. Why would you even buy that console, when you can buy the PS4 slim for less money, practically game the same, and an actual console upgrade is coming out at the end of the year, instead of this half-assed solution.

@Guy_Brohski: ummm higher than 1080p max on og ps4 , you could keep thinking its gonna be pc 60fps or nuttin type customers that are targeted by sony tho.

1080p is still the default

Get over it

An upgrade is an upgrade. You act as if the price didn't continue to be 400 on top of it. Smh, ungrateful

@Shewgenja: Can't take the heat, get out of the kitchen bitch. You're always dogging on MS and Xbox One, but now that you see the PS Pro is absolute shit and a half assed piece of crap, you have meltdowns. Scorpio is going to run circles around your precious PS4, OG or Pro, and you're going to cry and have a meltdown every step of the way.

Oh, nope. It was more of a fair warning. Now, I hope Scorpio is a proper Gen 9 console with a fresh software lineup and renewed interest from MS in making exclusives. That would be for the absolute best in my opinion.

However, if all Scorpio is going to be is a peen waving fest over multiplats, I will rain on the parade in ways that make 2013 look like a legendary dream realm. I don't get mad. I get even.

GPU shader power: Scorpio is 1.03X faster than RX-480

Memory bandwidth: Scorpio is 1.25X faster than RX-480
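For reference, those two ratios can be reproduced with some quick arithmetic. This is only a sketch: the post does not say which spec figures it used, so the inputs below are my assumptions (Microsoft's announced 6 TFLOPS and 320 GB/s for Scorpio, and a reference RX 480 8GB with 2304 shaders, a 1266 MHz boost clock and 256 GB/s).

```python
# Assumed spec figures (not stated in the post above).
SCORPIO_TFLOPS = 6.0            # announced FP32 compute
SCORPIO_BANDWIDTH_GBS = 320.0   # announced memory bandwidth
RX480_SHADERS = 2304            # reference RX 480 stream processors
RX480_BOOST_GHZ = 1.266         # reference boost clock
RX480_BANDWIDTH_GBS = 256.0     # 8GB model, 8 Gbps GDDR5 on a 256-bit bus


def fp32_tflops(shaders, clock_ghz):
    """Peak FP32 throughput: 2 FLOPs (one fused multiply-add) per shader per clock."""
    return shaders * 2 * clock_ghz / 1000.0


rx480_tflops = fp32_tflops(RX480_SHADERS, RX480_BOOST_GHZ)
shader_ratio = SCORPIO_TFLOPS / rx480_tflops
bandwidth_ratio = SCORPIO_BANDWIDTH_GBS / RX480_BANDWIDTH_GBS

if __name__ == "__main__":
    print(f"RX 480 peak: {rx480_tflops:.2f} TFLOPS")
    print(f"shader: {shader_ratio:.2f}X, bandwidth: {bandwidth_ratio:.2f}X")
```

These are peak paper numbers only. On a console the memory bandwidth is shared with the CPU, so the real gap for the GPU may be smaller than the raw ratio suggests.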

Vega's tile/polygon binning cache rendering will be very important for Nvidia Gameworks titles.

On basic parameters in both shader ALU and memory bandwidth, Vega 10 is slightly over 2X the RX-480, i.e.

double RX-480's 46.78 fps to 92 fps

double RX-480's 49 fps to 98 fps.

This is with Fury X drivers with no support for Vega's tile/polygon binning cache rendering. Vega 10 is estimated to be faster than GTX 1080.

http://www.anandtech.com/show/11002/the-amd-vega-gpu-architecture-teaser/2

ROPs & Rasterizers: Binning for the Win(ning)

We’ll suitably round-out our overview of AMD’s Vega teaser with a look at the front and back-ends of the GPU architecture. While AMD has clearly put quite a bit of effort into the shader core, shader engines, and memory, they have not ignored the rasterizers at the front-end or the ROPs at the back-end. In fact this could be one of the most important changes to the architecture from an efficiency standpoint.

Back in August, our pal David Kanter discovered one of the important ingredients of the secret sauce that is NVIDIA’s efficiency optimizations. As it turns out, NVIDIA has been doing tile based rasterization and binning since Maxwell, and that this was likely one of the big reasons Maxwell’s efficiency increased by so much. Though NVIDIA still refuses to comment on the matter, from what we can ascertain, breaking up a scene into tiles has allowed NVIDIA to keep a lot more traffic on-chip, which saves memory bandwidth, but also cuts down on very expensive accesses to VRAM.

For Vega, AMD will be doing something similar. The architecture will add support for what AMD calls the Draw Stream Binning Rasterizer, which true to its name, will give Vega the ability to bin polygons by tile. By doing so, AMD will cut down on the amount of memory accesses by working with smaller tiles that can stay-on chip. This will also allow AMD to do a better job of culling hidden pixels, keeping them from making it to the pixel shaders and consuming resources there.

As we have almost no detail on how AMD or NVIDIA are doing tiling and binning, it’s impossible to say with any degree of certainty just how close their implementations are, so I’ll refrain from any speculation on which might be better. But I’m not going to be too surprised if in the future we find out both implementations are quite similar. The important thing to take away from this right now is that AMD is following a very similar path to where we think NVIDIA captured some of their greatest efficiency gains on Maxwell, and that in turn bodes well for Vega.

Meanwhile, on the ROP side of matters, besides baking in the necessary support for the aforementioned binning technology, AMD is also making one other change to cut down on the amount of data that has to go off-chip to VRAM. AMD has significantly reworked how the ROPs (or as they like to call them, the Render Back-Ends) interact with their L2 cache. Starting with Vega, the ROPs are now clients of the L2 cache rather than the memory controller, allowing them to better and more directly use the relatively spacious L2 cache.

@ronvalencia said:

GPU shader power: Scorpio is 1.03X faster than RX-480

Memory bandwidth: Scorpio is 1.25X faster than RX-480

Link to MS confirming 100% that it is Vega and not a Polaris refresh or custom Polaris like the Pro.

Link to where MS claims that 320GB/s is ONLY and solely for the GPU, because the RX 480's bandwidth isn't shared with any CPU.

Ya'll better pray that thing has actual exclusive games

"We said we're not going to have console-exclusive games for Project Scorpio. It's one ecosystem--whether you have an Xbox One S or Project Scorpio, we don't want anyone to be left behind"

http://www.gamespot.com/articles/xbox-scorpio-wont-have-exclusive-games-except-for-/1100-6442754/

Xbox One S wouldn't be able to run VR games.
