@ronvalencia: yeah my 970 OCs like crap - I did not get a good one

Those who said the X1X GPU is a match for the 1070 come forward and apologize

A 15% gap on average is not closing.... hahahahahahaha.........

And why are you using 4K? The X doesn't run at native 4K, making these results irrelevant...... ahahahahahaha....

The R9-390X involved is not 6 TFLOPS, i.e. it's 5.9 TFLOPS with a 1050 MHz classic GPU hardware clock speed, and its architecture is from the PS4 era.

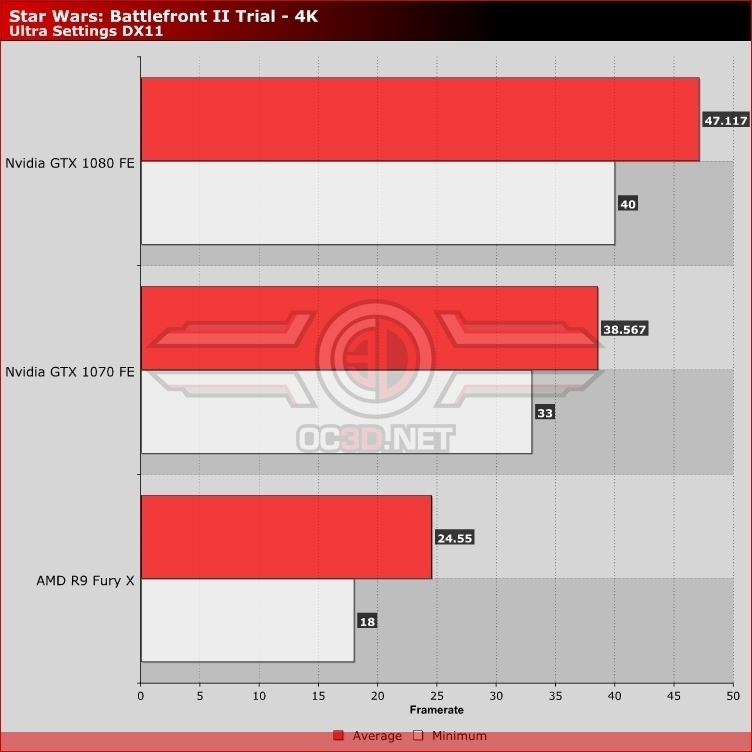

Both the GTX 1070 and the R9-390X failed the 60 fps target at 4K.

Both the GTX 1070 and the R9-390X have a similar fps range, i.e. 41 fps and 35 fps respectively at 4K.

The R9-390X (5.9 TFLOPS) at 35 fps nearly rivals the GTX 980 Ti (~6 TFLOPS) at 38 fps.
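For reference, the 5.9 TFLOPS figure can be reproduced with the usual peak-throughput formula. This is only a sketch: the 2816 shader count is the R9 390X's published spec and is not stated in the thread, and the function name is made up for illustration.

```python
def peak_tflops(shaders: int, clock_mhz: float) -> float:
    """Theoretical FP32 peak: 2 FLOPs (one fused multiply-add) per shader per clock."""
    return 2 * shaders * clock_mhz * 1e6 / 1e12


# Assumption: 2816 stream processors (published R9 390X spec, not from the thread).
r9_390x_tflops = peak_tflops(2816, 1050)
print(f"R9 390X peak: {r9_390x_tflops:.2f} TFLOPS")
```

This is a theoretical ceiling only and says nothing about delivered frame rates.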

The X1X version reaches native 4K, with resolution drops to 1800p (69 percent of 4K's pixels).

41 fps is 68 percent of 60 fps, hence resolution would need to drop proportionally.
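The two percentages in this post can be checked with simple arithmetic. A minimal sketch, assuming "1800p" means 3200x1800 and "4K" means 3840x2160 (the thread does not spell out the exact dimensions):

```python
# Assumed resolutions; the thread only says "1800p" and "4K".
UHD_PIXELS = 3840 * 2160
P1800_PIXELS = 3200 * 1800

pixel_ratio = P1800_PIXELS / UHD_PIXELS   # share of 4K pixels rendered at 1800p
fps_ratio = 41 / 60                       # GTX 1070's 41 fps against a 60 fps target

print(f"1800p renders {pixel_ratio:.1%} of the pixels of 4K")
print(f"41 fps is {fps_ratio:.1%} of 60 fps")
```

The two ratios happen to be close to each other, which is the coincidence the post leans on. Whether frame rate actually scales with pixel count is a separate question.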

35 fps vs 41 fps is a 15% difference between the 390X and the 1070....... let alone that the X1X has to rely on dynamic scaling and lower settings....... Enough of this crap. Even if, and I mean a "big if", the X1X GPU was equal to a GTX 1070, the other bottlenecks like the CPU and the lack of a dedicated 8 GB buffer for the GPU would prevent the X1X from performing comparably to a GTX 1070.

The X1X and all current GPUs on the market are not 4K ready without compromises.
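The "15%" figure depends on which card is taken as the baseline. A quick sketch of both readings, using only the 35 fps and 41 fps numbers quoted in the thread (the helper name is hypothetical):

```python
def percent_gap(a: float, b: float, baseline: float) -> float:
    """Absolute difference between a and b, as a percentage of the chosen baseline."""
    return abs(a - b) / baseline * 100


fps_390x, fps_1070 = 35, 41

slower_by = percent_gap(fps_390x, fps_1070, baseline=fps_1070)  # 390X relative to the 1070
faster_by = percent_gap(fps_390x, fps_1070, baseline=fps_390x)  # 1070 relative to the 390X

print(f"390X is {slower_by:.1f}% slower than the 1070")
print(f"1070 is {faster_by:.1f}% faster than the 390X")
```

So the quoted 15% matches the "390X is slower than the 1070" reading, while the 1070's lead over the 390X is closer to 17%.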

The X1X version reaches native 4K, with resolution drops to 1800p (69 percent of 4K), i.e. 31 percent of the pixels weren't rendered.

41 fps is 68 percent of 60 fps, hence resolution would need to drop proportionally to maintain the 60 fps target, i.e. 32 percent of pixels missed the 16.6 ms target.

The X1X doesn't have the PC's memory-copy bandwidth overhead from system memory to graphics memory, i.e. a PC GPU's VRAM bandwidth loses about 16 GB/s to PCI-E memory copies (one direction, PCI-E 3.0 with 16 lanes). Battlefront 2's 60 fps was budgeted for the PS4's CPU I/O. PCI-E 3.0 with 16 lanes (16 GB/s + 16 GB/s) was able to sustain 160 fps.
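The "about 16 GB/s" one-direction figure follows from the PCI-E 3.0 link parameters (8 GT/s per lane, 128b/130b encoding). The sketch below computes it, plus how much data that link could move per frame at 160 fps. The per-frame number is only an upper bound on transfers and is an illustration, not something measured in the thread.

```python
def pcie3_gb_per_s(lanes: int) -> float:
    """One-direction PCI-E 3.0 bandwidth in GB/s (decimal), before protocol overhead."""
    transfers_per_s = 8e9          # 8 GT/s per lane
    encoding_efficiency = 128 / 130  # 128b/130b line encoding
    bits_per_s = transfers_per_s * encoding_efficiency * lanes
    return bits_per_s / 8 / 1e9


one_way = pcie3_gb_per_s(16)
per_frame_mb_at_160fps = one_way * 1000 / 160  # MB that fit in one frame interval

print(f"PCI-E 3.0 x16, one direction: {one_way:.2f} GB/s")
print(f"Upper bound per frame at 160 fps: {per_frame_mb_at_160fps:.1f} MB")
```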

Oh, is that how you measure it Ron, guesstimates?

You are forgetting something:

In terms of all the additional bells and whistles, it's perhaps predictable that the PC version delivers the most complete package. Virtually all aspects of the post-process pipeline receive upgrades, texture quality across the board is better, LODs are pushed out and ground cover can be ramped up too, producing a more dense scene overall. Shadow quality is on another level entirely, to the point where individual leaves cast shadows, so yes, PC users with powerful hardware can rest easy

The X does 1800p, and not at Ultra settings like the GTX 1070 uses when it gets 41 FPS at 4K. It is lower on:

- Shadow quality

- Post Processing

- Texture Quality

- Better LODs

68%... What gibberish.

@ronvalencia:

I would also like to add:

Want to know just how much better the PC version is?...

Battlefront 2 is a beautiful-looking game overall and 1080p60 maxed is achievable on mainstream-level graphics hardware like the GeForce GTX 1060 and Radeon RX 580, with horsepower to spare. In fact, it looks so good that we'd really like to see the consoles offering an alternative rendering mode to leverage the Frostbite engine's full feature set at 1080p, based on just how impressive the PC's showing is here.

Link: http://www.eurogamer.net/articles/digitalfoundry-2017-star-wars-battlefront-2-xbox-one-x-pc-ps4-pro-analysis

Slow down Ron, I'm not here to attack you. I'm trying to get you to understand something here, but you need to help me to help you. Just simply answer my question: what CPU was used when you were looking at the GTX 1070 bar on that bench chart? Was it an i7-5960X?

Yet you're ignoring the fact that the X1X is using lower-quality shadows, post-processing, texture quality and LODs....... So again, your 68% figure is full of BS.

What the hell are you talking about...... "PCI-E 3.0 16 lanes was able to contain 160 fps"...... you're talking malarkey.....

Again, the X1X was designed as a higher-resolution box and its existence is for higher resolution when compared to Sony's competition. DF has moved the goalposts from "resolution gate" to 1080p max details.

The only one doing that is you.

BS, DF has stated that the X1X's texture assets were a match for PC's max settings. Gibberish indeed.

Shadow quality: slightly lower resolution.

Reflection quality: slightly lower resolution.

LODs, tessellation power: the GTX 1070 has 5 G triangles per second while the X1X has 4.68 G triangles per second. I already posted this difference.

That quote is from DF... http://www.eurogamer.net/articles/digitalfoundry-2017-star-wars-battlefront-2-xbox-one-x-pc-ps4-pro-analysis

@waahahah: Why do you quote me? I never said he got “rekt” in the CPU discussion. Simply that the benchmark he used is not from a reputable source, no matter how much he wants it to be.

Do you have a better benchmark? I fail to see the issue; it's not like Commander did the tests just to show the 8600K's power. And the results are consistent with games that scale with CPU cores.

30-50FPS... On the 60FPS mode.

Same as a PC with a GTX 1070?...

The GTX 1070...

At the end of the day.......OPTIMIZATION, OPTIMIZATION, OPTIMIZATION.

You can try to compare it to a similar PC spec but it will not make a difference when the CPU & GPU are custom (not off the shelf), with the blazing fast 9GB of available GDDR5 memory to top it off. The devs can play and tinker with what they want to get the most out of the X. So what you may think is a "bottleneck", an experienced dev will find a workaround for. There's a reason the system is getting praised all around the net....accept it & move on. Good day SW =]

@ronvalencia: yeah my 970 OCs like crap - I did not get a good one

That's easy to fix...... flash it.

Too bad for you, the GTX 1070 in some instances is lower than 41 fps's 68 percent of 60 fps, i.e. 38.567 fps is 64 percent of 60 fps and 33 fps is 55 percent of 60 fps.

Video timestamp 02:58 has the quote that the core artwork matches PC's highest preset: https://youtu.be/BE72jzVHph8 Your DF link is missing the DF video's core asset remarks.

Have you realized that the STRIX GTX 1070 is overclocked to 2114 MHz, which yields 8.117 TFLOPS, and 2088 MHz, which yields 8.017 TFLOPS? That's already about 23 to 25 percent higher than 6.5 TFLOPS. It's even faster than the 7.8 TFLOPS version.
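The overclocked TFLOPS figures can be reproduced from the clock speeds in the post. A sketch only: the 1920 CUDA core count is the GTX 1070's published spec and does not appear in the thread, and the function name is made up for illustration.

```python
def gtx1070_tflops(clock_mhz: float, cuda_cores: int = 1920) -> float:
    """Theoretical FP32 peak: 2 FLOPs (one FMA) per core per clock.

    Assumption: 1920 CUDA cores (published GTX 1070 spec, not from the thread).
    """
    return 2 * cuda_cores * clock_mhz * 1e6 / 1e12


STOCK_TFLOPS = 6.5  # stock figure quoted in the thread

for clock in (2114, 2088):
    tflops = gtx1070_tflops(clock)
    uplift = (tflops / STOCK_TFLOPS - 1) * 100
    print(f"{clock} MHz: {tflops:.3f} TFLOPS, {uplift:.1f}% above {STOCK_TFLOPS} TFLOPS")
```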

At 2.114 GHz, the GTX 1070's 5 G triangles per second turns into ~6.2 G triangles per second, and the X1X GPU only has 4.68 G triangles per second. Tiled L2 cache rendering at 2088 MHz and 2114 MHz is even faster. The classic GPU hardware and compute TFLOPS are faster at 2.114 GHz and 2.088 GHz clock speeds.

Note why I pointed out that >8 TFLOPS GTX 1070s exist.
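The triangle-rate claim is a linear scaling with clock speed. The sketch below makes that assumption explicit. The 1683 MHz reference clock (the GTX 1070's reference boost clock) is an assumption about where the 5 G figure comes from, since the thread does not say, and real geometry throughput may not scale this cleanly.

```python
def scale_with_clock(base_rate: float, base_clock_mhz: float, new_clock_mhz: float) -> float:
    """Scale a per-second rate linearly with clock speed (a simplifying assumption)."""
    return base_rate * new_clock_mhz / base_clock_mhz


# Assumption: the 5 G triangles/s figure corresponds to a 1683 MHz boost clock.
oc_rate = scale_with_clock(5.0, base_clock_mhz=1683, new_clock_mhz=2114)
x1x_rate = 4.68  # G triangles/s, as quoted in the thread

print(f"GTX 1070 at 2114 MHz: ~{oc_rate:.2f} G triangles/s")
print(f"Ratio to X1X: {oc_rate / x1x_rate:.2f}x")
```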

@ronvalencia: yeah my 970 OCs like crap - I did not get a good one

That's easy to fix...... flash it.

Some higher-grade BIOSes include a higher voltage curve for the GPU core, hence flashing enables higher clock speeds. Different BIOSes may have different video port assignments, so it's better to re-flash within the same brand. I haven't re-flashed my GTX 980 Ti Gaming X into the Lightning variant since it's already OC'd past the Lightning's config.

Yes thank you for explaining and wasting my time...... I already know how it works and what it does.

Secondly, was I even talking to you?

@ronvalencia:

It's there in black and white from DF...

texture quality across the board is better

Unless DF contradicted themselves, which would make the comparison void, wouldn't it?...

As for your ARK claims?...

Let me get this straight you think the X is on par with a stock 1070?...

In the video posted, the X1X is running ARK in its 1080p 60FPS mode, which is on medium settings... As you can see in the video, its framerate hovers between 30-50FPS.

Now you're telling me that:

- A stock 1070 would perform at 1080p medium 30-50FPS

- An overclocked GTX 1070 would be getting 60-70FPS on the High preset

I repeat, you are saying that the difference between a stock 1070 and an overclocked 1070 is a 66% performance boost at a higher image quality?...

How S**** do you think people are?...

At the end of the day.......OPTIMIZATION, OPTIMIZATION, OPTIMIZATION.

You can try to compare it to a similar PC spec but it will not make a difference when the CPU & GPU is custom(not off a shelf) and the blazing fast 9GB of available GDDR5 memory to top it out. The devs can play and tinker with what they want to get the most out of the X. So what you may think is a "bottleneck", an experienced dev will find a work-a-round. There's a reason the system is getting praised all around the net....accept it & move on. Good day SW =]

hahaha so delusional! XD

Reminds me of the past, with Sony fanboys claiming the power of the 8GB of GDDR5 in the PS4 and the other tidbits..... And once again they ignore the fact that the 9GB is shared between game cache and VRAM buffer, then ignore the "semi-custom" statement from AMD. The hardware is still mostly based on the Polaris and Jaguar architectures.

Let's not forget that optimization also includes using lower-quality assets and settings to reach a set target.

yeah of course, we all know the famous optimization is some kind of magic, sure, sure......

I think the first thing to nail down is the exact detail settings the XB1X uses for each game. I honestly doubt it's at Ultra settings like those in the PC benchmarks.

Once that's done, it's up to individual owners to test their PC cards at those settings since most sites choose the max settings possible.

Ultra Settings tend to have a low return, considering the higher requirements. Unfortunately, it penalizes PCs when it comes to platform comparisons. The best method is to find the settings for the least common denominator (usually the consoles) and use those instead.

X1X's version reaches native 4K with 1800p resolution drop (69 percent of 4K) i.e. 31 percent pixels wasn't rendered.

41 fps is 68 percent from 60 fps, hence it would need resolution to drop proportionally to maintain 60 fps target i.e. 32 percent of pixels missed 16.6 ms target.

X1X doesn't have PC's copy memory bandwidth overheads from system memory to graphics memory i.e. PC GPU's VRAM bandwidth will lose about 16 GB/s from PCI-E (one direction PCI-E ver3 16 lanes) memory copy. Battlefront 2's 60 fps was budgeted for PS4 CPU I/O. 16 GB/s+16GB/s PCI-E 3.0 16 lanes was able to contain 160 fps.

Huh, what? That's bullshit. Resolution isn't proportional to frame rate. A 30% bump in resolution will not result in a 30% loss in fps. As if resolution is the only thing cutting into the frame rate. What a liar.
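The objection here can be illustrated with a toy frame-time model in which only part of each frame's cost scales with pixel count. This is a sketch with a made-up parameter: the 30% resolution-independent share is purely hypothetical and is not a measurement of Battlefront 2 or any GPU.

```python
def fps_after_resolution_change(base_fps: float, pixel_ratio: float,
                                fixed_fraction: float) -> float:
    """Toy model: a fixed share of frame time ignores resolution, the rest scales with pixels."""
    base_ms = 1000.0 / base_fps
    fixed_ms = base_ms * fixed_fraction                       # geometry, CPU-bound work, etc.
    scaled_ms = base_ms * (1 - fixed_fraction) * pixel_ratio  # per-pixel shading work
    return 1000.0 / (fixed_ms + scaled_ms)


ratio_1800p = (3200 * 1800) / (3840 * 2160)  # assumed 1800p and 4K dimensions

proportional = fps_after_resolution_change(41, ratio_1800p, fixed_fraction=0.0)
with_fixed_cost = fps_after_resolution_change(41, ratio_1800p, fixed_fraction=0.3)  # hypothetical

print(f"Fully proportional: {proportional:.1f} fps")
print(f"With 30% fixed cost: {with_fixed_cost:.1f} fps")
```

Under the fully proportional assumption, 41 fps at 4K lands just short of 60 fps at 1800p. With any fixed cost in the frame, the gain is smaller, which is the point being argued.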

In the absence of benchmarks at 4k with a high setting (closer to Xbox One X version) I found this on GeForce website

At 2560x1440, meanwhile, the GeForce GTX 1070 is recommended, running at over 66 FPS on average. And at 3840x2160, you’ll want the world's fastest consumer graphics card, the GeForce GTX 1080 Ti, which runs at an average of 55 FPS at this demanding resolution, with a scrumptious level of detail, for an experience you can’t match on any other platform or PC config.

https://www.geforce.com/whats-new/articles/star-wars-battlefront-2-geforce-gtx-recommended-gpus

Notice this is at high details not Ultra

If a 1080 Ti runs at 55 fps at 4K with high settings, a 1070 must be lower.

maybe they mean ultra when they say high

@tdkmillsy: Maybe, who knows. Either way, set numbers are meaningless for framerates... Watching a video is the best way to determine the performance. A 1070 at 1440p Ultra fluctuates between 70-110 FPS. The 1080 Ti at Ultra is 70-80 FPS at 4K.

I would wait for videos to come out of the 1070 at 4K with high settings... Or ask someone who has the card and game to post a video.

The consoles use dynamic resolution which hides the true performance numbers they can achieve... The X doesn't just run at High'ish settings at 4K it drops to 1800p when things get tough.

There is an in-game option for dynamic resolution on PC.
