Crytek boss says PS3 and 360 hold back PC


i bet if crytek programmed a software renderer, a quadcore could run crysis on max settings better than the best integrated graphics chip on the market.

yodogpollywog

Why would anyone want to play games or render video on an integrated graphics chip? Dedicated GPUs are cheap, whereas Intel integrated chips are awfully weak. What you are asking for is like demanding that games be developed with monaural audio because stereo or multi-channel surround is more complex...

Well, be ready to upgrade your system every 2 years once consoles are gone then! :(

Kleeyook

I haven't changed my main desktop rig in 2.5 years. It's a Q6600 with a GTX 275 and 4 GB. It runs any game on the market smoothly at 1680 x 1050, and in fact recent games such as Arrowhead, BC 2 or BO run faster than older games such as S.T.A.L.K.E.R. or Crysis. Black Ops runs at over 80 fps with 16x AA and 8x AF on my rig.

Any GPU on the market with the power of the three-year-old 8800 GT or better is capable of running any PC game at better settings than its console counterpart. Lately I've only been spending money on PC hardware to jump from good peripherals (Logitech ones) to exceptional ones (Razer Mamba mouse, SteelSeries XL pad, etc.). The funny thing is that I built a new HTPC for my living room with an i7 and a 5870 and I find it totally over the top; there's currently too much power in the hardware for the kind of games available: current PC hardware is overpowered.

Well, it's a known fact that PC games can do incredibly well in sales. Check out WoW, StarCraft 2, The Sims, TF2, CSS etc. I think the thing that killed PC gaming was that the games were just not good enough. Most talented teams went down the console route to expand their market share and make more money, and then the PC-dominant games such as Unreal Tournament, the Quake series, Command & Conquer etc. fell away because they were simply not good enough.

Consoles have become better since last gen because of their online support and added features, but so has the PC. The cost of a PC has always been there, and if anything I think it's even cheaper now, yet we still find, at least in my view, no really decent PC exclusives. There are some good ones but they come out buggy as hell, e.g. STALKER.

no im not new to pc gaming i've been doing it for years.. I just haven't looked at outdated technology lol! Quite interesting tech skills for doing that but pretty lame at the same time, if someone wants to play quake they should just get a normal pc lol. Its great for proving a point, maybe one day someone can hack the rsx and run pc games on ps3, now that would be epic! I wonder why sony stopped OS support.. maybe cause of things like this?[QUOTE="SPBoss"][QUOTE="yodogpollywog"]

umm u must be new to gaming

http://www.youtube.com/watch?v=J2En3SCxlJM

quake 2 on linux ps3 running only off the cpu software rendering, u cant access the rsx on linux with ps3.

yodogpollywog

you must be new.

because u couldnt even buy graphics card when quake 1 shipped in 1996.

Hmm, that's odd, because what was driving the Windows 95 interface then? I distinctly recall a lot of hype over Local Bus video cards (like PCI cards which were eclipsing VESA Local Bus as the Pentiums hit their prime). You needed something to connect the monitor.[QUOTE="yodogpollywog"]

[QUOTE="ferret-gamer"] In oher words you have no idea whatsoever what you are talking about so you just will continue to repeat the same stuff that i debunked in the very post you quoted.ferret-gamer

nope i been pc gaming before u were born.

Yet i know more about the topic :D

So lets end this once and for all:

You keep claiming that software rendering is superior because of a comparison with Unreal. Let me explain exactly why that is no longer a usable reason. The very first GPUs used a Fixed Function Pixel Process Pipeline (FFPP), whereas the software rendering of the time was not limited to that. But graphics chips are no longer limited to FFPP, as they invented something called "shaders". Perhaps you have heard of them? Graphics processors now have a superior form of programmability along with FAR superior performance, making them the much better choice for rendering.
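To make the fixed-function vs. shader distinction concrete, here is a rough sketch in Python. It is not modeled on any real graphics API: the blend mode names and both function names are made up for illustration. The first function stands in for a fixed-function stage that only offers a closed menu of operations; the second stands in for a programmable stage where the application supplies its own per-pixel function.

```python
# Illustrative only: the mode names and function names are hypothetical,
# not taken from Direct3D, OpenGL, or any actual hardware.

def fixed_function_pixel(texel, vertex_color, mode):
    """Fixed-function stage: the caller picks from a closed set of operations."""
    if mode == "modulate":
        return tuple(t * c for t, c in zip(texel, vertex_color))
    if mode == "add":
        return tuple(min(1.0, t + c) for t, c in zip(texel, vertex_color))
    if mode == "replace":
        return texel
    raise ValueError(f"unsupported mode: {mode}")


def programmable_pixel(shader, texel, vertex_color):
    """Programmable stage: the caller supplies the per-pixel function itself."""
    return shader(texel, vertex_color)


def grayscale_shader(texel, vertex_color):
    """An effect the fixed menu above cannot express: lit color to luminance."""
    lit = tuple(t * c for t, c in zip(texel, vertex_color))
    luma = 0.299 * lit[0] + 0.587 * lit[1] + 0.114 * lit[2]
    return (luma, luma, luma)
```

Asking the fixed-function stage for anything outside its menu simply fails, while the programmable stage accepts any function with the right signature. That is roughly the flexibility software renderers used to have over early graphics chips.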

And thanks to the more generalized nature of GPUs, we're almost at the point where GPUs can go beyond their normal scanline rendering and shading techniques. Think realtime raytracing. It'll probably come within two generations.

i can't disagree with that on a tech level but i would add that if the console version outsells the pc version then wouldn't it mean consumer demand is what is holding back pc?

Are you serious? Software rendering through the CPU is a dumb idea in 2010. It was fine in the mid 90's or earlier since PCs didn't have dedicated GPUs, just video memory, and the CPU handled it all. Today's GPUs are a heck of a lot faster than any CPU that is out. This is why we're seeing a large shift into parallel processing performed by GPUs, not CPUs. And why do you keep on bringing up old games that used software rendering? Yes, we get it, it can be used today, but you will see a major drop in performance and abilities since, as I stated above, GPUs process data a lot faster than CPUs. So why even bother using it?

[QUOTE="04dcarraher"]

[QUOTE="yodogpollywog"]

you dont seem to be getting it, unreal tournament 1999 supports software rendering....EPICGAMES CREATED THE SOFTWARE RENDERER.

http://i55.tinypic.com/3324f14.jpg

see in the picture of ut1999 menu software rendering? i circled it for you.

if crytek programmed software renderer built in, it would of performed better than swiftshader 2.0 or 2.0 trying to run crysis in software mode.

yodogpollywog

i bet if crytek programmed a software renderer, a quadcore could run crysis on max settings better than the best integrated graphics chip on the market.

If that were true, why do the strongest performance benchmarks from distributed computing projects like Folding@home come from a smaller number of GPU-based clients (which are newer, rawer, and not as well-refined as the CPU-based clients which have been there from the beginning)?[QUOTE="04dcarraher"]

[QUOTE="yodogpollywog"]If that were true, why do the strongest performance benchmarks from distributed computing projects like Folding@home come from a smaller number of GPU-based clients (which are newer, rawer, and not as well-refined as the CPU-based clients which have been there from the beginning)? [QUOTE="yodogpollywog"]

[QUOTE="fireballonfire"]

[QUOTE="yodogpollywog"]

the mod's here think im trolling.

dude my intel 466 mhz celeron could run unreal tournament 1999 software mode low settings 800x600 higher than 2 fps back in 1999.

me only getting 2 fps using swiftshader on athlon 2.1 ghz dualcore 800x600 low settings in crysis is total bs.

the reason why fps so low because it's not built into crysis, and crytek didnt make the software renderer

yodogpollywog

I'm frankly not sure what you are trying to accomplish here. Intel had an idea with the Larrabee. Much like the Cell, it was a hybrid CPU/GPU solution meant to replace the GPU with one chip.

That thing had as many as 24 cores running at 2.4 GHz, if I remember correctly. The project got canceled, and why was that, you ask?

It was a power-hungry behemoth that couldn't even beat a 7800 GTX in graphics-heavy tasks, that's why.

Why do you think Sony bailed out at the last second, replacing one Cell with a 7900 GTX? Because with two Cells the PS3 would have been slapped all over the place by the 360.

of course developers should support high-end video cards since they're better for gaming than a cpu, but integrated graphics processors are always weak, and software rendering, if programmed right, might actually run games better than these igps.

See, now you're starting to make a little sense. Against low-end integrated graphics chips you might have a shot, though even that market has its higher-end performers from the likes of nVidia and ATI. Why does Crysis perform so poorly? Because CryEngine 2 is such a beast. When it was released in 2007, no PC in existence could max it out. Put up against that high a bar, you begin to see just how steep a climb you face. As for Crytek making the software renderer themselves, that depends on their knowledge of CPU optimizations. The company behind SwiftShader may be more familiar with those optimizations than Crytek (who had the DirectX layer to obscure some of the to-the-metal details).

Yeah lets just have developers focus on the PC that will go over SO well for the gaming industry :roll:

Sp4rtan_3

He didn't even imply that.

[QUOTE="yodogpollywog"][QUOTE="SPBoss"] no im not new to pc gaming i've been doing it for years.. I just haven't looked at outdated technology lol! Quite interesting tech skills for doing that but pretty lame at the same time, if someone wants to play quake they should just get a normal pc lol. Its great for proving a point, maybe one day someone can hack the rsx and run pc games on ps3, now that would be epic! I wonder why sony stopped OS support.. maybe cause of things like this?HuusAsking

you must be new.

because u couldnt even buy graphics card when quake 1 shipped in 1996.

Hmm, that's odd, because what was driving the Windows 95 interface then? I distinctly recall a lot of hype over Local Bus video cards (like PCI cards which were eclipsing VESA Local Bus as the Pentiums hit their prime). You needed something to connect the monitor.

2d display adapter built into motherboard.

3d display adapters werent avalible when quake 1 was released.

If 3d display adapter/video cards were avalible how come quake 1 has no support out of the box?

you must patch quake 1 to support open gl lol.

Well, it's all about marketing... after all, consoles have a bigger market for fps games... look at the COD numbers, pc can't even get 300k+ players online at any time.

Besides that, lol, Blizzard always makes games with graphics a generation behind other current pc games, but they always sell 10 times more than the others.

Hmm, that's odd, because what was driving the Windows 95 interface then? I distinctly recall a lot of hype over Local Bus video cards (like PCI cards which were eclipsing VESA Local Bus as the Pentiums hit their prime). You needed something to connect the monitor.[QUOTE="HuusAsking"][QUOTE="yodogpollywog"]

you must be new.

because u couldnt even buy graphics card when quake 1 shipped in 1996.

yodogpollywog

2d display adapter built into motherboard.

3d display adapters werent avalible when quake 1 was released.

So you want to switch game reliance from a certain level of GPU power to being entirely dependent on the mobo and CPU? I don't think I'm even able to be polite about this.

Seriously, you need to go back to arguing which version of Fallout 3 is less ugly.

You have absolutely nothing of value to contribute with.

I only regret that I'm not large enough to ignore you entirely.

Hmm, that's odd, because what was driving the Windows 95 interface then? I distinctly recall a lot of hype over Local Bus video cards (like PCI cards which were eclipsing VESA Local Bus as the Pentiums hit their prime). You needed something to connect the monitor.[QUOTE="HuusAsking"][QUOTE="yodogpollywog"]

you must be new.

because u couldnt even buy graphics card when quake 1 shipped in 1996.

yodogpollywog

2d display adapter built into motherboard.

3d display adapters werent avalible when quake 1 was released.

Onboard graphics were the exception back then because the graphics market was much more competitive, with the likes of Tseng, S3, Trident, and even ATI (nVidia didn't enter the market until the late 90's). Most people wanted discrete graphics cards (I'll grant you, though, these were 2D or video-accelerated models; 3D didn't really take off until the 3dfx Voodoo add-on later that decade).

It was simply to id's credit that their engine was easy enough to adapt to the fledgling 3D standards like OpenGL. Descent was also eventually patched to take advantage of it as well, IIRC. And I think Apogee's Terminal Velocity and at least one other had something to take advantage of S3 ViRGE chips.

If 3d display adapter/video cards were available how come quake 1 has no support out of the box?

you must patch quake 1 to support open gl lol.

yodogpollywog

[QUOTE="yodogpollywog"]It was simply to id's credit that their engine was easy enough to adapt to the fledgling 3D standards like OpenGL. Descent was also eventually patched to take it as well, IIRC. And I think Apogee's Terminal Velocity and at least one other had something to take advantage of S3 ViRGE chips.If 3d display adapter/video cards were avalible how come quake 1 has no support out of the box?

you must patch quake 1 to support open gl lol.

HuusAsking

i remember when some of the video card's were first released dude, like diamond monster 3d 4 mb friend got one, stuff was new tech alittle after quake 1 release.

Rio Diamond Monster 3D (4 MB) PCI Video Card

Graphic Processor 3dfx Voodoo graphics

http://www.dealtime.com/Sonic-Blue-Diamond-Monster-3D/info

I remember seeing my friends box to the video card that's gotta be his card.

I started gaming on dos, windows 95 didnt exist yet, i remember my friend got a new pc and it came with windows 95 lol i was like WTF is windows 95?

Hmm, that's odd, because what was driving the Windows 95 interface then? I distinctly recall a lot of hype over Local Bus video cards (like PCI cards which were eclipsing VESA Local Bus as the Pentiums hit their prime). You needed something to connect the monitor.[QUOTE="HuusAsking"][QUOTE="yodogpollywog"]

you must be new.

because u couldnt even buy graphics card when quake 1 shipped in 1996.

yodogpollywog

2d display adapter built into motherboard.

3d display adapters werent avalible when quake 1 was released.

Our family's IBM PS/2 Model 55SX (386SX 16Mhz based) has 2D display adapter built into motherboard, but

1. My 1996 PC includes S3 Trio 64UV card (PCI slot).

2. Our family's 486DX33 PC includes Cirrus Logic card (VL-BUS slot). PS; IBM PS/2 lost against PC clones.

[QUOTE="yodogpollywog"]

[QUOTE="HuusAsking"]Hmm, that's odd, because what was driving the Windows 95 interface then? I distinctly recall a lot of hype over Local Bus video cards (like PCI cards which were eclipsing VESA Local Bus as the Pentiums hit their prime). You needed something to connect the monitor.ronvalencia

2d display adapter built into motherboard.

3d display adapters werent avalible when quake 1 was released.

Our family's IBM PS/2 Model 55SX (386SX 16Mhz based) has 2D display adapter built into motherboard, but

1. My 1996 PC includes S3 Trio 64UV card (PCI slot).

2. Our family's 486DX33 PC includes Cirrus Logic card (VL-BUS slot). PS; IBM PS/2 lost against PC clones.

2d card's existed, 3d didnt.

no im not new to pc gaming i've been doing it for years.. I just haven't looked at outdated technology lol! Quite interesting tech skills for doing that but pretty lame at the same time, if someone wants to play quake they should just get a normal pc lol. Its great for proving a point, maybe one day someone can hack the rsx and run pc games on ps3, now that would be epic! I wonder why sony stopped OS support.. maybe cause of things like this?SPBoss

you must be new.

because u couldnt even buy graphics card when quake 1 shipped in 1996.

Hmm, that's odd, because what was driving the Windows 95 interface then? I distinctly recall a lot of hype over Local Bus video cards (like PCI cards which were eclipsing VESA Local Bus as the Pentiums hit their prime). You needed something to connect the monitor.

Windows 3.1 had 2D (GDI) graphics accelerator cards, and its gaming API was WinG (a downloadable add-on).

On January 22, 1997, id Software released GLQuake. This was designed to use the OpenGL 3D API to access hardware 3D graphics acceleration cards to rasterize the graphics, rather than having the computer's CPU fill in every pixel.

[QUOTE="ronvalencia"]

[QUOTE="yodogpollywog"]

2d display adapter built into motherboard.

3d display adapters werent avalible when quake 1 was released.

yodogpollywog

Our family's IBM PS/2 Model 55SX (386SX 16Mhz based) has 2D display adapter built into motherboard, but

1. My 1996 PC includes S3 Trio 64UV card (PCI slot).

2. Our family's 486DX33 PC includes Cirrus Logic card (VL-BUS slot). PS; IBM PS/2 lost against PC clones.

2d card's existed, 3d didnt.

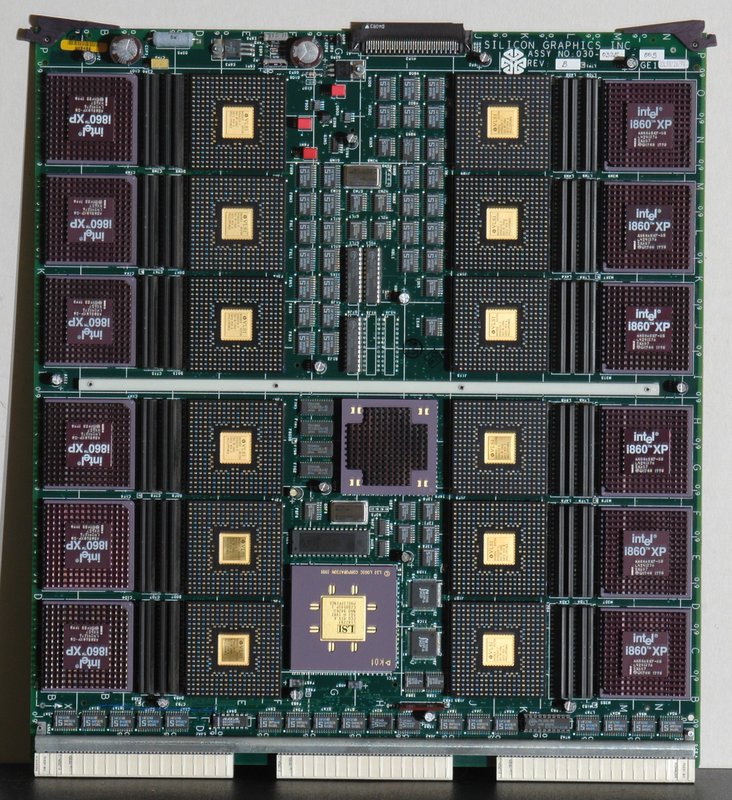

3D cards existed, e.g. high-end Intel i860*/i960-based OpenGL accelerators. (*A VLIW-based CPU with Z-buffer instructions and fixed-function 3D features.) The performance of the x87 FPU also influenced Quake 1's frame rates, e.g. the Pentium's FPU vs. Cyrix's FPU.

Geometry Engine board from the Reality Engine 2 graphics system in the SGI Onyx

PS: AMD's Radeon HDs also sport VLIW-based stream processors. The team that created this board formed a little company called ArtX, i.e. GPUs for the N64, GC, Wii and Radeon 9700 (R300). http://en.wikipedia.org/wiki/ArtX

"ArtX paved the way for the development of ATI's R300 graphics processor (Radeon 9700) released in 2002 which formed the basis of ATI's consumer and professional products for three years afterward."

In terms of concept, the SGI processor array board was redesigned as a custom ASIC chip.

AMD includes engineering teams from DEC Alpha EV6 and SGI (via ArtX).

PS: The Amiga 3D game F-29 Retaliator uses the Amiga's 2D line functions to accelerate line drawing.

intel intergrated chipsets dont support direct x as far i know.

so u need to buy a video card since games dont support software rendering anymore.

yodogpollywog

The Quick Reference Guide to Intel® HD Graphics does indeed show that DirectX and Shader Model support is there. The problem is that there is no dedicated video memory for one and that the IGPs may still access the CPU which slows down graphics processing.

you dont seem to be getting it, unreal tournament 1999 supports software rendering....EPICGAMES CREATED THE SOFTWARE RENDERER.

yodogpollywog

Yes, but it has many limitations that would not be acceptable nowadays. For instance it uses sub-affine texture coordinate interpolation, which makes dependent texture reads (used for things like bump mapping) impossible. It also only supports per-polygon mipmapping, which causes aliasing and blurring. And dynamic geometry uses depth-sorted polygons, which results in clipping issues. The reality of computer graphics is that a minor improvement in quality requires a major increase in computing power. So fixing these limitations would have cost a serious decrease in performance.

For the record, Unreal Tournament 2003/2004 used the Pixomatic software renderer, but at equal quality SwiftShader outperforms it by 15% despite support for advanced shaders and never having been specifically optimized for this game. The point is that there's little correlation between who wrote the software renderer and its performance for a particular game.
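For anyone unsure what "sub-affine" interpolation means, here is a minimal sketch of how I understand the term: the exact perspective-correct texture coordinate is computed only every few pixels along a span, and plain linear (affine) interpolation fills in the pixels between. The function names, the `step` of 16 pixels, and the use of a per-vertex depth `w` are my own assumptions for illustration, not code from the Unreal Tournament renderer.

```python
def perspective_u(x, x0, x1, u0, u1, w0, w1):
    """Perspective-correct u at pixel x: u/w and 1/w are linear in screen space."""
    t = (x - x0) / (x1 - x0)
    u_over_w = (1 - t) * (u0 / w0) + t * (u1 / w1)
    one_over_w = (1 - t) * (1 / w0) + t * (1 / w1)
    return u_over_w / one_over_w  # one divide per evaluated pixel


def affine_u(x, x0, x1, u0, u1):
    """Plain linear interpolation of u in screen space (cheap, but distorts)."""
    t = (x - x0) / (x1 - x0)
    return (1 - t) * u0 + t * u1


def subaffine_u(x, x0, x1, u0, u1, w0, w1, step=16):
    """Exact u at every `step`-th pixel, affine interpolation in between."""
    seg_start = x0 + ((x - x0) // step) * step
    seg_end = min(seg_start + step, x1)
    ua = perspective_u(seg_start, x0, x1, u0, u1, w0, w1)
    if seg_end == seg_start:  # x sits exactly on the last pixel of the span
        return ua
    ub = perspective_u(seg_end, x0, x1, u0, u1, w0, w1)
    return affine_u(x, seg_start, seg_end, ua, ub)
```

On a 64-pixel span that recedes from w = 1 to w = 4, the sub-affine result stays much closer to the exact value than pure affine interpolation does, while paying for a divide only once every 16 pixels. That is the kind of quality-for-speed trade the post is describing.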

if crytek programmed software renderer built in, it would of performed better than swiftshader 2.0 or 2.0 trying to run crysis in software mode.

yodogpollywog

The Crytek developers are clearly very skilled, but I doubt that they would be able to create a faster software renderer, simply because the workload can't be processed any faster. SwiftShader has very advanced optimizations to ensure that no computing power is wasted on unnecessary calculations. It also makes maximum use of vector instruction set extensions and multi-threading. Obviously there's always room for some more optimization, but the law of diminishing returns dictates that you'd spend a disproportionate amount of time on it compared to the insignificant gains. In other words, no game development company would spend another year optimizing a software renderer for a 10% performance increase.

Besides, SwiftShader has been under intense development for the past five years, and it would be a very large investment to catch up with that. EPIC already realized in 2003 that licensing an existing software renderer would cost less time and money and offer close to optimal performance. Another case in point is that Microsoft's WARP renderer is only half as fast as the latest SwiftShader release. So outperforming SwiftShader would be no small feat.

Software rendering could be faster with a custom API (i.e. not using the hardware-oriented Direct3D or OpenGL API), but it's again prohibitively expensive (in both time and money) to write different paths for hardware and software rendering. So the reality is that modern software renderers need to make use of the same APIs and work around the inefficiencies the best they can. Ironically, this is actually true for hardware as well. A custom chip could render Unreal Tournament '99 at a million frames per second if it didn't spend any transistors on features not used by this game. But obviously supporting lots of modern features is more interesting than a million FPS for an outdated game. Genericity comes at a cost but you get a lot in return.

That said, Crysis could run at over 20 FPS on a quad-core CPU using the latest SwiftShader release, if it disabled texture filtering when rendering screen-aligned rectangles (used for post processing and such). Filtering is practically free on hardware but it's quite expensive for a software renderer. Note that this is not a flaw of SwiftShader; it's something that can easily be controlled from the application side. There are probably more optimization opportunities like that, which don't require changes to the software renderer.
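To illustrate why filtering is expensive in software, and why turning it off for screen-aligned rectangles could be harmless, here is a small sketch comparing nearest-texel (point) sampling with bilinear filtering on a single-channel texture stored as a list of rows. The function names and the clamp addressing mode are my own choices for illustration; this is not SwiftShader code.

```python
import math


def point_sample(tex, u, v):
    """Nearest-texel lookup: a single memory read per sample."""
    h, w = len(tex), len(tex[0])
    x = min(w - 1, max(0, int(u * w)))
    y = min(h - 1, max(0, int(v * h)))
    return tex[y][x]


def bilinear_sample(tex, u, v):
    """Bilinear filter: four memory reads plus three interpolations per sample."""
    h, w = len(tex), len(tex[0])
    fx, fy = u * w - 0.5, v * h - 0.5      # texel centers sit at +0.5
    x0, y0 = math.floor(fx), math.floor(fy)
    ax, ay = fx - x0, fy - y0              # fractional blend weights

    def texel(x, y):                       # clamp-to-edge addressing
        return tex[min(h - 1, max(0, y))][min(w - 1, max(0, x))]

    top = (1 - ax) * texel(x0, y0) + ax * texel(x0 + 1, y0)
    bottom = (1 - ax) * texel(x0, y0 + 1) + ax * texel(x0 + 1, y0 + 1)
    return (1 - ay) * top + ay * bottom
```

When a full-screen rectangle maps texels to pixels one to one, every sample lands exactly on a texel center. The blend weights are then zero and the bilinear result equals the point-sampled one, so the extra three reads and the arithmetic buy nothing. That is my reading of why the application could switch filtering off for those passes without changing the image.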


swiftshader makers dont know the cryengine as good as crytek....crytek could easily get better framerates in their engine if they created the software renderer.

cpu's can do bump mapping there's way's around everything lol.

swiftshader seems pretty unoptimized dude, i was only hitting 97% cpu usage, but my framerate was 2?

something aint right.

swiftshader is like unreal engine 3 almost, in the fact that's it works on everything, but it's not specifically designed for one application.

it doesnt take advantage of 360's hardware, swiftshader isnt optimized for cryengine running on cpu.

Are you serious? Software rendering through the cpu is a dumb idea in 2010...

04dcarraher

I beg to differ. There's a greater resemblance between CPU and GPU cores every generation. CPUs get support for wider vector instructions (AVX), the number of cores doubles with every semiconductor process node, and simultaneous multi-threading (Hyper-Threading) hides some of the memory access latency. At the same time GPUs are getting support for generic instructions, they're being equipped with large caches, they can process threads more independently, and (mark my words) they'll soon get speculative execution to lower register pressure and reduce thread latency. This rapid convergence even means that it will soon be a "dumb idea" to have a separate CPU and IGP.

The only thing a CPU really lacks today to catch up with the GPU on graphics is support for gather and scatter instructions. These are the parallel forms of load and store instructions, and are fundamental for fast texture sampling. They would be far more useful than actual dedicated texture sampling hardware because they're useful for a lot more. They would make almost any loop fully parallelisable. They would help accelerate all stages of the graphics pipeline, but are of great value beyond graphics as well. Even GPU designers are considering ditching the dedicated texture samplers and replacing them with generic gather/scatter units to make sampling programmable.
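For readers who haven't met the terms, here is a tiny sketch of what gather and scatter mean, written as plain Python loops. A hardware implementation would perform all the lanes with one vector instruction; the loops only show the semantics and do nothing in parallel. The helper names and the 4x4 example texture are hypothetical.

```python
def gather(memory, indices):
    """Vector load: read memory[i] for every lane's index i."""
    return [memory[i] for i in indices]


def scatter(memory, indices, values):
    """Vector store: write each lane's value to memory at that lane's index."""
    for i, v in zip(indices, values):
        memory[i] = v


def sample_texels(texture, width, xs, ys):
    """Texture fetch for several pixels at once: compute addresses, then gather."""
    addresses = [y * width + x for x, y in zip(xs, ys)]
    return gather(texture, addresses)
```

The texture-sampling case shows why this matters: neighbouring pixels usually need texels at unrelated addresses, so without a gather each lane of a vector has to be loaded one at a time.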

...it was fine in the mid 90's or earlier since Pc's didnt have a dedicated gpu's, just video memory and the cpu handled it all.

04dcarraher

Ironically, now that CPUs are hundreds of times faster than in the mid 90's, software rendering has become a rarity even though it would be totally adequate for the majority of applications. Note that the most popular games are actually not shooters which require a high-end system, but casual games.

Todays gpu's are heck alot faster then any cpu that is out.

04dcarraher

That's true for the high end, but not in general. The most common graphics chip generation is the very modest GMA 9xx (http://unity3d.com/webplayer/hwstats/pages/web-2010Q2-gfxseries.html).

this is why were seeing a large shift into parallel processing performed by gpu's not cpu's.

04dcarraher

The soon to be released Sandy Bridge processors will be capable of roughly 100 DP GFLOPS. And a year later it will probably be closer to 300 DP GFLOPS due to FMA and additional cores. Something like a GeForce GTX 460 can do 150 DP GFLOPS in theory, 75 DP GFLOPS in practice because it is intentionally handicapped. But even though I'm comparing a high-end CPU to a crippled mid-range GPU, the CPU is certainly no weakling and it's also still far easier to program and far more flexible.

The performance of the GPU in GPGPU applications is typically only a fraction of the theoretical performance, due to having to fit the algorithms into the GPU's limited programming model, becoming bandwidth limited due to many cache misses, running out of register space due to branching, and/or the long round trip latency. There is definitely no "large shift" toward GPUs in high performance computing. The CPU still reigns and has lots of trumps left to play. The GPU on the other hand has to trade raw performance for better generic processing support. So things are not likely to turn in the GPU's favor.

It's all but certain that the GPU will eventually go the way of the dodo. It is slowly but surely losing all its unique features. Just like sound processing went from dedicated hardware to a programmable DSP to a full CPU implementation, graphics processing will be done in software. People who want high-end performance will simply buy a 256-core CPU instead of a 32-core CPU.
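As a back-of-the-envelope check on figures of that size, theoretical peak throughput is usually estimated as cores x clock x floating-point operations per cycle per core. The sketch below uses core counts and clock speeds that are my own assumptions (the post does not state them): a 4-core 3.4 GHz part issuing one 4-wide double-precision add and one 4-wide multiply per cycle with AVX, and a hypothetical 6-core 3.0 GHz successor with two 4-wide FMA units.

```python
def peak_gflops(cores, clock_ghz, flops_per_cycle):
    """Theoretical peak in GFLOPS: cores x clock (GHz) x FLOPs per cycle per core."""
    return cores * clock_ghz * flops_per_cycle


# Assumed AVX configuration: 4 doubles per vector, one add + one multiply per cycle.
avx_dp_flops_per_cycle = 4 * 2
# Assumed FMA configuration: 4 doubles per vector, 2 FMA units, 2 FLOPs per FMA.
fma_dp_flops_per_cycle = 4 * 2 * 2

sandy_bridge_like = peak_gflops(4, 3.4, avx_dp_flops_per_cycle)   # about 109
fma_six_core_like = peak_gflops(6, 3.0, fma_dp_flops_per_cycle)   # 288
```

Under those assumptions the results land near the "roughly 100" and "closer to 300" DP GFLOPS mentioned above. These are peak numbers only; sustained throughput on real code is lower on CPUs and GPUs alike.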

guys just ignore him, clearly he is just being lazy and wants to hide it. other devs have been able to improve the PC version a lot, take Dirt for example, that game is miles ahead of what the PS3 or 360 version could look like. EA has said that Battlefield 3 will be different from the console versions. he should have stayed on the PC.

didnt read the article but come on, no one is holding anyone back. Devs just dont want to put in extra work, which is why all PC games should be different from the consoles

I beg to differ. There's a greater resemblance between CPU and GPU cores every generation. CPUs get support for wider vector instructions (AVX), the number of cores doubles with every semiconductor process node, and simultaneous multi-threading (Hyper-Threading) hides some of the memory access latency. At the same time GPUs are getting support for generic instructions, they're being equipped with large caches, they can process threads more independently, and (mark my words) they'll soon get speculative execution to lower register pressure and reduce thread latency. This rapid convergence even means that it will soon be a "dumb idea" to have a separate CPU and IGP. The only thing a CPU really lacks today to catch up with the GPU on graphics, is support for gather and scatter instructions. These are the parallel forms of load and store instructions, and are fundamental for fast texture sampling. They would be far more useful than actual dedicated texture sampling hardware because they're useful for a lot more. They would make almost any loop fully parallelisable. They would help accelerate all stages of the graphics pipeline, but are of great value beyond graphics as well. Even GPU designers are considering to ditch the dedicated texture samplers and replacing them with generic gather/scatter units to make it programmable.

They're considering it, but haven't gotten there yet. Even the Larrabee guys decided that "traditional" texture units were still worth it. :P It probably makes sense for a lot of things or if you're really forward-looking, but the fact is that the average GPU workload is still mostly your basic bilinear/trilinear texture fetching + filtering. I'm not sure how quickly that will change.

Also, in terms of what CPUs are lacking, they're still way behind in raw single-precision FP throughput and memory bandwidth. Obviously more cores solves the first problem, but unless we have good programming models to easily take advantage of the throughput it won't matter very much. I'm not at all a CPU expert, so I'm not sure how well you could map a traditional massively-threaded GPU shader pipeline to lots and lots of CPU cores (or something like Reyes, for that matter).

Ironically now that CPUs are hundreds of times faster than in the mid 90's, software rendering has become a rarity even though it would be totally adequate for the majority of applications. Note that the most popular games are actually not shooters which require a high end system, but casual games.

Yeah but those casual games run on Flash, and (until recently) Flash was all software rendering. And for anything not using Flash that has even trivial 3D graphics, it's many times simpler and easier to hack together a basic D3D/GL renderer than it is to write a performant software renderer. So I don't see this as particularly weird.

It's all but certain that the GPU will eventually go the way of the dodo. It is slowly but surely losing all its unique features. Just like sound processing went from dedicated hardware to a programmable DSP to a full CPU implementation, graphics processing will be done in software. People who want high end performance will simply buy a 256-core CPU instead of a 32-core CPU.

I can understand why you and so many others are so bullish on this front, but when I hear these sorts of things I always think back to how many times people told me I would be writing ray-tracers by this point instead of using a crusty old D3D/GL rasterization pipeline. :P

Agreed. Instead of griping about it maybe they should craft an engine that can take advantage of each console's strengths, rather than point out what most people already know. Consoles aren't upgradeable, so of course PCs would pull ahead on a technical level. There are just so many consoles in people's homes now, and they want to cash in on the userbase, but rather than bite their lip and get the game finished, they'd rather complain to the PC community about how these systems are holding back PC development??! I don't game on my PC, and I couldn't care less about this Crysis game, but it would be nice if they did give it their all, making it run as smooth as it can on both consoles.

He's stating the obvious, whether consolites want to believe it or not. Even with general "dumbing down", a lot of it happens simply because the consoles can't handle the game the way the developers want it, then it's the obvious audience and what sells to that audience (in general I'm not saying all are like this).

Espada12

But they make much less revenue from games once they fall to $19.99 or $9.99. The first year of sales is always the most important, even for PC games.

I think some developers are being shortsighted by only looking at the sales of a game in the first few months.

I personally just purchased STALKER a few days ago, and that's from 2007.

They need to look at PC games as an investment, not a quick cash-in.

topgunmv

But OTOH, CryEngine is designed for a hardware abstraction layer like DirectX. It isn't as if the engine is hardcoded in assembler "to the metal," so to speak. Is Crytek familiar with all the tricks and optimizations of modern x64 processors? Is it aware of the full breadth of the instruction set, its latencies, and so on? As for GPUs moving towards CPU function, I beg to differ. We're discovering two distinct sets of computing that are hard to generalize. On the one hand, you have things that (for reasons of coherency and so on) need to be limited to a few robust threads. On the other hand, you have very simple tasks that are easy to parallelize. Their specialties are just about mutually exclusive. So we're seeing a generalized move towards asymmetric multicore CPUs (the Cell is an early attempt at a mainstream one). In future, we'll see CPUs with at least two different types of cores: the robust general-purpose cores we see in CPUs today and the parallel-friendly cores we see in GPUs. They won't converge because parallel-friendly tasks aren't necessarily robust and there's also the matter of having to allocate chip real estate to the task. We're still trying to figure out the right ratio.

SwiftShader is almost like Unreal Engine 3, in that it works on everything, but it's not specifically designed for one application.

It doesn't take advantage of the 360's hardware, and SwiftShader isn't optimized for CryEngine running on the CPU.

yodogpollywog

They're a little ahead of the curve, by my reckoning. I'd say we're about two (console) generations away. There are raytracing engines being developed now, but they're still "not ready for prime time"--not enough oomph.

I can understand why you and so many others are so bullish on this front, but when I hear these sorts of things I always think back to how many times people told me I would be writing ray-tracers by this point instead of using a crusty old D3D/GL rasterization pipeline. :P

Teufelhuhn

SwiftShader's makers don't know CryEngine as well as Crytek does... Crytek could easily get better framerates in their engine if they created the software renderer.

yodogpollywog

Knowing how the engine works doesn't help drawing a triangle any faster.

They can only take the performance characteristics of a software renderer into account. For instance they can write CPU-friendly shaders, disable redundant operations, and take advantage of extensions. But note that these are application-side changes, which would benefit any software renderer equally. If you know of any non-trivial renderer-side changes that would significantly improve performance, I'd love to hear about it.

And while SwiftShader build 3383 is much faster than version 2.01 at rendering Crysis, all the improvements are unrelated to CryENGINE. So again with the utmost respect I doubt that the Crytek developers would be able to contribute anything engine-specific to the renderer side that would have an even greater effect than generic optimizations.

SwiftShader seems pretty unoptimized, dude. I was only hitting 97% CPU usage, but my framerate was 2?

yodogpollywog

On my Core i7 920 @ 3.2 GHz, I'm getting an average of 17 FPS for the 64-bit Crysis benchmark, using SwiftShader build 3383. It's really quite playable. And that's for a game that's still considered a graphical marvel.

Given that my CPU can do 102.4 SP GFLOPS, and your Athlon 64 X2 2.1 GHz can only do 16.8 GFLOPS and supports fewer vector instructions, a framerate of 2 sounds about right for your system. There's only so much you can do with that computing budget. CPUs are rapidly catching up on throughput performance, but older generations like that had a very low computing density.
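Those peak figures follow from cores × clock × FLOPs per cycle. A quick sketch of the arithmetic, with the caveat that the per-cycle numbers are an assumption (8 single-precision FLOPs per cycle per core for the i7, i.e. a 4-wide SSE add plus a 4-wide SSE multiply, and 4 for the Athlon 64 X2), chosen because they reproduce the 102.4 and 16.8 quoted above:

```python
def peak_sp_gflops(cores, clock_ghz, flops_per_cycle):
    """Theoretical peak single-precision GFLOPS of a CPU."""
    return cores * clock_ghz * flops_per_cycle


# flops_per_cycle values are assumptions, see the note above.
i7_920 = peak_sp_gflops(cores=4, clock_ghz=3.2, flops_per_cycle=8)
athlon_x2 = peak_sp_gflops(cores=2, clock_ghz=2.1, flops_per_cycle=4)

ratio = i7_920 / athlon_x2   # how much more raw throughput the i7 has
scaled_fps = 17 / ratio      # 17 FPS on the i7, scaled down naively

print(f"i7 920: {i7_920:.1f} GFLOPS, Athlon 64 X2: {athlon_x2:.1f} GFLOPS")
print(f"ratio: {ratio:.1f}x, naive estimate: {scaled_fps:.1f} FPS")
```

Scaling 17 FPS by the throughput ratio of roughly 6x gives just under 3 FPS, and that is before accounting for the missing vector instructions, so 2 FPS is in the expected range.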

I can understand why you and so many others are so bullish on this front, but when I hear these sorts of things I always think back to how many times people told me I would be writing ray-tracers by this point instead of using a crusty old D3D/GL rasterization pipeline. :P

Teufelhuhn

And yet quite a few people are very busy writing ray tracers using CUDA 3 or doing other innovative things with their GPUs. So keeping in mind that not all old things are replaced with the latest new things overnight, that timeline isn't off by much.

Also, I soundly recall people saying things like "the GeForce 3 will render anything you can throw at it", and "software renderers will never be able to run something like Oblivion". I'm just saying, things do change very rapidly. In several years computer graphics will again look very different. Whether we'll all be ray tracing or software rendering, or a bit of both, doesn't really change how impressive these advances are. At least to me...

I beg to differ. There's a greater resemblance between CPU and GPU cores every generation. CPUs get support for wider vector instructions (AVX), the number of cores doubles with every semiconductor process node, and simultaneous multi-threading (Hyper-Threading) hides some of the memory access latency. At the same time GPUs are getting support for generic instructions, they're being equipped with large caches, they can process threads more independently, and (mark my words) they'll soon get speculative execution to lower register pressure and reduce thread latency.

c0d1f1ed

AMD Radeon HD 4870 has

- 2.5 megabytes of register storage space.

- 16,000 x 128-bit registers per SM.

- 16,384 threads per chip.

- greater than 1,000 threads per SM.

Each SM has 16 VLIW5 SPs. The 16,000 registers are spread across 16 VLIW5 SPs, i.e. 1,000 x 128-bit registers per VLIW5 SP. Your CELL SPE only has 128 x 128-bit registers. 16 x 256-bit AVX is nowhere near RV770's VLIW5 register data storage.

Radeon HD 4870 has 10 SM blocks. Each VLIW5 SP includes 5 scalar stream processors. Math: 16 x 10 x 5 = 800 stream processors. At a given transistor count, a normal CPU doesn't have this execution unit density.
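The same arithmetic as a quick sketch, using only the figures quoted in this post (the constant names are made up for illustration, and the round 16,000 figure is taken as stated):

```python
SM_BLOCKS = 10             # SM blocks on the Radeon HD 4870
VLIW5_PER_SM = 16          # VLIW5 SPs per SM
SCALARS_PER_VLIW5 = 5      # scalar stream processors per VLIW5 SP
REGS_PER_SM = 16_000       # 128-bit registers per SM, as quoted above
REG_BYTES = 128 // 8       # bytes in one 128-bit register

stream_processors = VLIW5_PER_SM * SM_BLOCKS * SCALARS_PER_VLIW5
regs_per_vliw5 = REGS_PER_SM // VLIW5_PER_SM
rv770_register_bytes = REGS_PER_SM * REG_BYTES * SM_BLOCKS

# Register files being compared against:
cell_spe_register_bytes = 128 * (128 // 8)   # 128 x 128-bit per SPE
avx_register_bytes = 16 * (256 // 8)         # 16 x 256-bit per core

print(stream_processors, "stream processors")
print(regs_per_vliw5, "registers per VLIW5 SP")
print(rv770_register_bytes, "bytes of registers on the whole chip")
print(cell_spe_register_bytes, "bytes per SPE,", avx_register_bytes, "bytes of AVX registers")
```

With the round 16,000 figure the chip total comes to 2,560,000 bytes, in line with the roughly 2.5 megabytes listed, against 2,048 bytes per SPE and 512 bytes of AVX registers per core.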

According to AMD's road map, your "mark my words" means nothing. VMX/SSE/SPE floating point transistor count usage is NOT efficient compared to RV770's VLIW5 unit.

The direct competitor to the desktop Intel Sandy Bridge (CGPU) is AMD Llano (CGPU), which includes about 480 stream processors and 500+ GFLOPS.
