[QUOTE="ronvalencia"]DF has attempted to match the consoles' shader power via a 600 MHz 7870 XT and a 7850. DF has negated the triangle rate difference between the two boxes. The 7770's 1.28 TFLOPS was based on 10 CUs @ 1 GHz, which doesn't match X1's 12 CUs @ 853 MHz anyway.

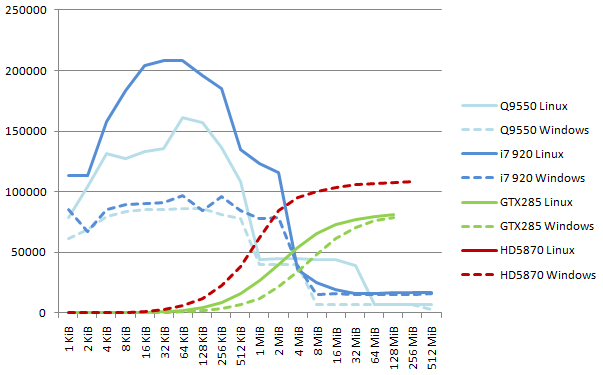

From www.tomshardware.com/reviews/768-shader-pitcairn-review,3196-5.html

The 12 CU @ 860 MHz 7850/7830 prototype is the closest to X1's 12 CU @ 853 MHz.
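For anyone who wants to check the CU/clock comparison, here is a rough sketch of the usual peak-throughput arithmetic. It assumes GCN's 64 shader lanes per CU and one fused multiply-add (2 FLOPs) per lane per clock; the function name `peak_tflops` is just made up for illustration, and these are paper numbers, not measured performance.

```python
# Peak FP32 throughput for a GCN-style GPU (paper figure, not a benchmark).
# Assumption: 64 shader lanes per CU, 2 FLOPs (one FMA) per lane per clock.
LANES_PER_CU = 64
FLOPS_PER_LANE_PER_CLOCK = 2


def peak_tflops(cus: int, clock_mhz: float) -> float:
    """Return theoretical peak TFLOPS for a given CU count and clock."""
    flops_per_second = cus * LANES_PER_CU * FLOPS_PER_LANE_PER_CLOCK * clock_mhz * 1e6
    return flops_per_second / 1e12


# CU counts and clocks as quoted in the post.
configs = {
    "7770 (10 CU @ 1000 MHz)": (10, 1000),
    "X1 (12 CU @ 853 MHz)": (12, 853),
    "7850/7830 prototype (12 CU @ 860 MHz)": (12, 860),
}

for name, (cus, mhz) in configs.items():
    print(f"{name}: {peak_tflops(cus, mhz):.3f} TFLOPS")
```

On paper the 860 MHz prototype lands within about 1% of the X1 figure, which is why it is the closer stand-in.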

My posts are framed with the following dev quotes.

http://www.videogamer.com/news/xbox_one_and_ps4_have_no_advantage_over_the_other_says_redlynx.html

Speaking to VideoGamer.com at E3, Ilvessuo said: "Obviously we have been developing this game for a while and you can see the comparisons. I would say if you know how to use the platform they are both very powerful. I don't see a benefit over the other with any of the consoles."

----

http://www.videogamer.com/xboxone/metal_gear_solid_5_the_phantom_pain/news/ps4_and_xbox_one_power_difference_is_minimal_says_kojima.html

"The difference is small, and I don't really need to worry about it," he said, suggesting versions for Xbox One and PS4 won't be dramatically different.

----

http://gamingbolt.com/ubisoft-explains-the-difference-between-ps4-and-xbox-one-versions-of-watch_dogs

"Of course, the Xbox One isn't to be counted out. We asked Guay how the Xbox One version of Watch_Dogs would be different compared to the PC and PS4 versions of the game, to which he replied that, 'The Xbox One is a powerful platform, as of now we do not foresee a major difference in on screen result between the PS4 and the Xbox One. Obviously since we are still working on pushing the game on these new consoles, we are still doing R&D.'"

----

link

"We're still very much in the R&D period, that's what I call it, because the hardware is still new," Guay answered. "It's obvious to us that it's going to take a little while before we can get to the full power of those machines and harness everything. But, even now we realise that both of them have comparable power, and for us that's good, but every day it changes almost. We're pushing it and we're going to continue doing that until [Watch Dogs] ship date."

http://www.eurogamer.net/articles/df-hardware-spec-analysis-durango-vs-orbis

"Other information has also come to light offering up a further Orbis advantage: the Sony hardware has a surprisingly large 32 ROPs (Render Output units) up against 16 on Durango. ROPs translate pixel and texel values into the final image sent to the display: on a very rough level, the more ROPs you have, the higher the resolution you can address (hardware anti-aliasing capability is also tied into the ROPs). 16 ROPs is sufficient to maintain 1080p, 32 comes across as overkill, but it could be useful for addressing stereoscopic 1080p for instance, or even 4K. However, our sources suggest that Orbis is designed principally for displays with a maximum 1080p resolution."

http://www.polygon.com/2013/8/1/4580380/carmack-on-next-gen-console-hardware-very-close-very-good

Carmack on next-gen console hardware: 'very close,' 'very good'

btk2k2

There is also a pixel fillrate difference to take into account, so if you shave off some performance from the card in that article and add a bit to the 7850 then you are basically there. -5% to the 768 Pitcairn sample and +5% to the 7850 and you get 33 FPS vs 43 FPS in Crysis 2 and you get 44 FPS vs 57 FPS in BF3. A bit less than my predicted difference but close enough considering the amount of known unknowns we have regarding important performance factors on the X1. In the end though it means that games that push the X1 will be prettier and/or smoother on PS4.

Fillrate would be a very minor issue for X1 vs PS4, since 7870 GE's 32 ROPs @ 1 GHz weren't able to match 7970's 32 ROPs @ 925 MHz.
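As a quick sanity check on the adjusted frame rates btk2k2 gives (33 vs 43 FPS and 44 vs 57 FPS), this small sketch works out the relative gap they imply. The helper name `advantage_pct` is hypothetical, and the inputs are only the already-adjusted numbers from the quote, not the original benchmark results.

```python
def advantage_pct(slower_fps: float, faster_fps: float) -> float:
    """Percentage by which faster_fps exceeds slower_fps."""
    return (faster_fps / slower_fps - 1) * 100


# Adjusted FPS pairs quoted by btk2k2: (768-shader Pitcairn sample, 7850).
pairs = {
    "Crysis 2": (33, 43),
    "BF3": (44, 57),
}

for game, (slower, faster) in pairs.items():
    print(f"{game}: {advantage_pct(slower, faster):.1f}% faster")
```

Both titles come out at roughly a 30% gap, so the two adjusted results are at least consistent with each other.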

Fillrate requires memory writes, and ROPs don't operate in isolation. http://en.wikipedia.org/wiki/Fillrate

6970 has 176 GB/s memory bandwidth, which matches PS4's 176 GB/s memory bandwidth, i.e. notice the near-lack of improvement between 5870's results and 7870's results.
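To put rough numbers on the point that ROPs don't operate in isolation, here is a back-of-the-envelope sketch. It treats effective pixel fillrate as the lower of the ROP peak (ROPs x clock) and what memory bandwidth can feed. Assumptions that are mine, not from the posts above: 8 bytes of traffic per pixel (a 32-bit color read plus write, as in alpha blending), and the card bandwidths of 153.6 GB/s for the 7870 GE and 264 GB/s for the 7970, which are taken from the published specs. Real hardware has caches and color compression, so treat this as illustrative only.

```python
def rop_peak_gpix(rops: int, clock_mhz: float) -> float:
    """Theoretical ROP peak in Gpixels/s (one pixel per ROP per clock)."""
    return rops * clock_mhz / 1000


def effective_fill_gpix(rops: int, clock_mhz: float,
                        bandwidth_gbs: float, bytes_per_pixel: float = 8) -> float:
    """Fillrate limited by whichever is lower: ROP peak or memory bandwidth."""
    bandwidth_limit = bandwidth_gbs / bytes_per_pixel
    return min(rop_peak_gpix(rops, clock_mhz), bandwidth_limit)


# ROP counts and clocks are from the post; bandwidths are assumed (see lead-in).
cards = {
    "7870 GE": (32, 1000, 153.6),
    "7970": (32, 925, 264.0),
}

for name, (rops, mhz, bw) in cards.items():
    peak = rop_peak_gpix(rops, mhz)
    eff = effective_fill_gpix(rops, mhz, bw)
    print(f"{name}: ROP peak {peak:.1f} Gpix/s, bandwidth-limited {eff:.1f} Gpix/s")
```

Under these assumptions the 7870 GE's higher ROP peak is capped by its memory bandwidth, while the 7970 can run at its ROP peak, which is the pattern described above.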
