rsx is not a g70

This topic is locked from further discussion.

[QUOTE="smackyomomma"]:lol:lol it's not a g70 danjammer69

It is based highly on the G70 architecture. What's so funny?

umm? i was being sarcastic

it's a 90 nm G70, aka G71, with a 500 MHz core clock, 24 pixel pipelines and 8 vertex pipelines

Wow, a 399.99 dollar console can surpass my videocards output 480i :(

I knew the ps3 could only do HD. Wok7...

The RSX can't do DirectX 10 routines; PS3 games run in OpenGL, not DirectX. It's a proven fact that the RSX is built around the G70 architecture, according to NVIDIA, who actually make the GPU. The Xenos in the 360 DOES run DirectX 10 routines, so even if the PS3 could run DX10, that would not make it anything special, because the 360 can do it as well, and so can all PCs running Vista with a DX10 chip. The PS3 may not be maxed out yet, but how is that an advantage when PCs can never be maxed out? The RSX doesn't even have a unified shader architecture, which is standard in the Xbox 360 and pretty much all graphics cards now.

He didn't say that the PS3 could run DX10, he said compared to DX10. OpenGL can do the same as DX. It just does it a little differently. And if KZ2 uses 128-bit HDR, which is supported by DX10, then that makes the RSX DX10 equivalent.

But I agree that the OP doesn't do a good job explaining :P

trasherhead

Being a G70 derivative, the RSX can render to 128-bit surfaces (AKA 32-bit floating point). However, you can pretty much guarantee that no games use this for their main backbuffer because...

A.) It takes up 4x the memory of a regular 32-bit surface, which also means 4x the bandwidth. This would absolutely kill performance, considering bandwidth for the RSX is limited to the level of low to mid-range cards from 2 years ago. The ROPs also work at 1/4 speed for fp32.

B.) It's completely overkill for HDR. For games, fp16 (64-bit surfaces) provides more than enough dynamic range and precision. However, most games still don't use that for HDR, since it's still 2x the memory and bandwidth requirement. It's also incompatible with MSAA. For these reasons, many games use 32-bit surfaces with an alternate color space (like LogLuv, used in Heavenly Sword and Uncharted).
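To put rough numbers on points A and B, here is a minimal Python sketch of how big a single render target gets at each precision. The 1280x720 resolution and the format labels are my own assumptions for illustration; real engines add depth buffers, MSAA samples and alignment padding on top of this.

```python
def surface_bytes(width: int, height: int, bits_per_pixel: int) -> int:
    """Size in bytes of one uncompressed render target."""
    return width * height * bits_per_pixel // 8


# Illustrative format labels (assumed names, not from any SDK).
FORMATS = {
    "RGBA8 (32-bit)": 32,
    "FP16 (64-bit)": 64,
    "FP32 (128-bit)": 128,
}


def footprint_table(width: int = 1280, height: int = 720) -> dict[str, float]:
    """Map each format to its size in MiB at the given resolution."""
    return {
        name: surface_bytes(width, height, bpp) / 2**20
        for name, bpp in FORMATS.items()
    }


if __name__ == "__main__":
    for name, mib in footprint_table().items():
        print(f"{name}: {mib:.2f} MiB")
```

At 720p that works out to about 3.5 MiB for a regular 32-bit surface, 7 MiB for fp16 and 14 MiB for fp32, and every read or write of the buffer scales the same way.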

Being capable of rendering to a 128-bit surface doesn't make a GPU DX10-capable, there are many other requirements it would have to fulfill. Besides, the Nvidia 6 and 7-series GPU's as well as the ATI X1000 series could all do it.

Also...KZ2 doesn't really use HDR at all. It's all very well explained in the paper they released on their engine: they use a standard 32-bit buffer, with an extra value in the alpha channel that lets them have some overbright for bloom effects.

http://talkback.zdnet.com/5208-12558-0.html?forumID=1&threadID=48909&messageID=915943&start=-9978

#1. PS3 RSX alone = 1.8 TFLOPS

Cell and RSX = equal over 2TFLOPS

GTX 280 = 933 GFLOPS

ATI 4800 = 1.2 teraFLOPS

#2. I will explain:

RSX = "Multi-way Programmable Parallel Floating Point Shader Pipelines". Now no other device uses this description, but if you were a student of Graphics Cards, you would realize that this was basically describing multiple pathways through a grid or array of Shader processors.

#3. So it's not a "Fixed Function Pipeline" of G70 with 24-Vertex Shaders and 8-Pixel Shaders. This describes a Unified Shader Architecture!

#4. Supports full 128-bit HDR lighting, same as the G80s. The G70 only supports 64-bit HDR, like all last-gen GPUs. Dead giveaway!

#5. From as early as Feb 2005 at GDC Sony has announced that PS3 (RSX) would use OpenGL ES 2.0. At the time 2.0 wasn't out, so they wrote feature supports of RSX into OGL ES 1.1 including Fully Programmable Shader Pipeline and call it PSGL. Khronos Group took anything that could be done in a Shader out of the Fixed Function Pipeline (including Transform and Lighting) streamlining it for future Embedded Hardware. What does that mean?

#6. At least for OpenGL ES 2.0 devices (not PC version) it becomes a unified shader model and remember RSX is fully compliant. OpenGL ES 2.0 will only run on advanced hardware just like DX10.

faultline187

1. GFLOPS quoted for Radeon and GeForce are just for the shaders/stream processors, not including their specialised hardware functions. If RSX were a G80-level GPU it should easily run UT3 beyond 720p and 30 FPS; instead RSX runs like any other 8-ROP DX10 GPU. In UT3 at 1280x720, a GeForce 8600M GT GDDR3 runs beyond 30 FPS, i.e. 40 to 55 FPS (depending on the driver), which is higher than the PS3 version.

2. Modern GPUs employ a "many-core" concept. DX10 GPUs employ thousands of threads.

3. Try again

4. http://www.entrepreneur.com/tradejournals/article/136289787.html http://www.gamesfirst.com/index.php?id=422 Geforce 7800 GTX hardware, "128-bit precision for HDR". DX9 API is another matter.

http://www.hardwaresecrets.com/article/156/3 "GeForce 7800 GTX, in order to increase video quality, uses a 128-bit register for HDR, using 32-bit for each video component."

NVIDIA even claims 128 bit HDR for Geforce 7800.

5. and 6. Refer to http://www.khronos.org/opengles/ "OpenGL ES 2.0 is defined relative to the OpenGL 2.0 specification".
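On point 1, the PC figures quoted in the thread can be reproduced from shader count and clock alone, which is why they only describe the programmable units. A rough Python sketch follows; the unit counts, clocks and FLOPs-per-clock values are my assumptions from the vendors' published specs for the GTX 280 and Radeon HD 4870, not numbers given in this thread.

```python
def peak_gflops(shader_units: int, flops_per_unit_per_clock: int,
                shader_clock_mhz: float) -> float:
    """Theoretical peak shader throughput in GFLOPS (programmable ALUs only)."""
    return shader_units * flops_per_unit_per_clock * shader_clock_mhz / 1000.0


# Assumed specs: 240 stream processors at 1296 MHz, counted as 3 FLOPs per clock.
GTX_280 = peak_gflops(240, 3, 1296)

# Assumed specs: 800 stream processors at 750 MHz, counted as 2 FLOPs per clock.
HD_4870 = peak_gflops(800, 2, 750)

if __name__ == "__main__":
    print(f"GTX 280: {GTX_280:.0f} GFLOPS")
    print(f"HD 4870: {HD_4870:.0f} GFLOPS")
```

Under those assumptions the results line up with the 933 GFLOPS and 1.2 TFLOPS quoted above. A figure that also counts fixed-function hardware, as console marketing numbers tend to, is not comparable to these.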

Oh, my bad man. Seems as though I need to go to sarcasm detection training.

[QUOTE="AnnoyedDragon"]What is it with console users and completely overestimating their hardware? They expect gaming supercomputers that outperform modern hardware for a couple of hundred quid.

faultline187

I never expected it, it's just the way it is.

Basically, the main point I'm trying to get across is that RSX hasn't been used to its full potential, and neither has Cell.

RSX is 128-bit HDR! That is a fact!

Because that's what you are seeing in Killzone 2 Game Play Video right now! Along with Deferred Rendering with 4xMSAA w/Quincunx. If that's not 4xMSAA, I don't know what is. There is no aliasing! Plus as promised they are pumping an incredible number of lighting effects out. They have Monte Carlo Ray Tracing (yes rays are cast by cell), Real Time Photon Mapping, Ray Casting, Occlusion Mapping, Practical Spherical harmonics based PRT methods, plus Radiosity done on Cell. Particle Effects like what's in this game haven't been seen in a game on any other platform.

But for the 128-bit HDR they are showing!!!...... that can only be done on a DX10 compatible GPU! .....look it up, or do I have to babysit you and find your links for you? Do you know 128-bit HDR is a Shader Model 4.0 feature?

With the new game Play Video, they just wiped Crysis off the DX10 feature map with Deferred Rendering and 4xMSAA! "No duh.......RSX is just a stock 7800GPU", you say!

[QUOTE="karasill"]It's based off the G70 architecture; nVidia even states this. I highly doubt a 2-year-old GPU is on par with, let alone more powerful than, the current offerings of nVidia and ATI in the PC market.

Don't forget Uncharted. Why do you think the animation and movement are so responsive? Remember, RSX can use XDR memory (extremely fast) at 3.2 GHz.

Now you tell me what PC has ram that runs at that speed? anyone?

It might have a fast clock speed, but it has a very narrow bus, so it only gets 25 GB/s, while a 7800 GT has 32 GB/s, an 8800 GT has 50 GB/s and 4870s have something over 100 GB/s. The RSX is based off the 7800 but had its memory bandwidth cut in half and some vertex shaders removed to save costs. If the RSX is so good, why can't it run CoD4, BioShock, GRID, F.E.A.R. or just about every multiplat game anywhere near as well as a 7800 GT? PS3's flagship game MGS4 had to be run in sub-600p, while if you run Crysis at that resolution a 7800 GT can manage high settings. The RSX might be based off the 7800 GTX, but it has more in common with the 7600 GT and 7800 GS, both of which are cut-down 7800 GTXs.

I'd like to see that. It's been a year and still no console game can touch Crysis, and Warhead is the most technically advanced game of 2008.

Its the truth..

During 2009 you will see things that even your latest pc will not be able to produce until DX15 is out.

Remember i said it..:-)

faultline187

I'm still waiting for a game to surpass Crysis... and that was released last year.

Crysis? Well, wait no longer my friend, Killzone 2 comes out in Feb! First game on PS3 to use the beta version of Mt Evans, comparable to DX10!

After next year all games on ps3 will be like that :_)

If you think Killzone 2 will look as good as Crysis, have levels on the same scale that are fully destructible, and run in at least 1680 x 1050, you are dreaming.

Ok then, you show me a PC with a 7800 or whatever running a game like Killzone hahahaha (please stop it).. It's KZ2's use of Deferred Rendering with 4x MSAA (Multi-Sampled Anti-Aliasing) that makes KZ2's tech so intriguing...

Guerrilla-Games specifically state they are using full hardware supported 4x MSAA. Deferred Rendering in combination with hardware supported 4x MSAA can only be done on DX10 spec GPU's using simplified single shaders. For those of you who keep harping on some ******ed myth that RSX is a 7800, just give it up already. It's getting old!

My link above explains all.. This year will be the year that everyone finds out the truth.

DDR3 can only run at 2.5 GHz for now. XDR can do up to 4.0 GHz, while the PS3 uses XDR @ 3.2 GHz. XDR is known for its low latency, so I don't know where you got those numbers from. But I do think the PS3 would have been better off sharing one 512 MB pool of XDR, instead of having the DDR3 on the GPU.
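For anyone wondering where the bandwidth numbers traded in this exchange come from (about 25 GB/s for the PS3's XDR versus 32 GB/s for a 7800 GT), peak bandwidth is just bus width times per-pin data rate. A small Python sketch, assuming a 64-bit XDR interface at 3.2 Gbit/s per pin and a 256-bit GDDR3 bus at an effective 1.0 Gbit/s per pin on the 7800 GT; those bus widths and rates are my assumptions from published specs, not figures stated in the thread.

```python
def bandwidth_gb_s(bus_width_bits: int, data_rate_gbps_per_pin: float) -> float:
    """Peak memory bandwidth in GB/s: pins times per-pin rate, bits to bytes."""
    return bus_width_bits * data_rate_gbps_per_pin / 8


# Assumed: PS3 XDR main memory, 64-bit interface at 3.2 Gbit/s per pin.
PS3_XDR = bandwidth_gb_s(64, 3.2)

# Assumed: GeForce 7800 GT, 256-bit GDDR3 at 1.0 Gbit/s per pin (effective).
GF_7800_GT = bandwidth_gb_s(256, 1.0)

if __name__ == "__main__":
    print(f"PS3 XDR: {PS3_XDR:.1f} GB/s")
    print(f"7800 GT: {GF_7800_GT:.1f} GB/s")
```

So a high clock on a narrow bus can still land below a slower clock on a wide one, which is the point being argued above.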

[QUOTE="faultline187"][QUOTE="Dynafrom"] THERE is ALIASING in KZ2, and the graphics look like crap compared to crysis. I own/demod both, DO YOU? PSNID NOW.Bebi_vegeta

Never said there wasn't aliasing! I was being sarcastic. Crysis, pfft lol, you need your eyes checked.

WARdevil

heavy rain

gow3

uncharted 2

These games are just the beginning.

Not to mention Sony's secret games, all procedurally generated 4D.

None of these games are out... and the secret 4D was supposed to be Heavy Rain... it's no secret, it has no 4D...

lol the only 4D I've seen to date is Uncharted: Drake's Fortune.. he gets wet, he dries off!

Umm, any other games you know do that?

Heavy Rain was never meant to be 4D... But don't worry, the answers are coming :-)

This thread is still going? You would think this long after the console launch hype people would get a clue.

The PS3 may claim to be a 2TFlop machine on paper but it has never demonstrated that sort of real world performance. You were hyped, get over it.

AnnoyedDragon

It is 2 tflops...

The link below shows the same board, the Cell/RSX combo, in this Sony ZEGO.. they use it to render complex and HIGH resolution special FX graphics!! PS3 was made for in-game CGI..

HERE

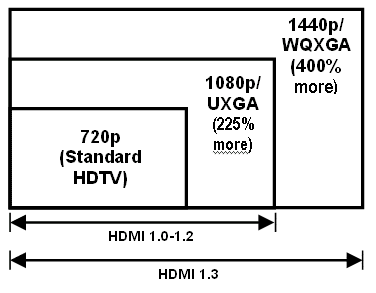

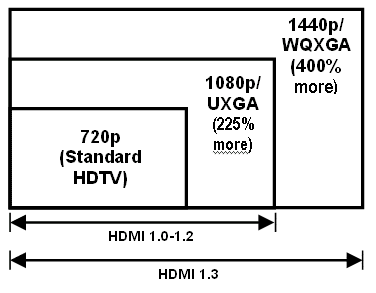

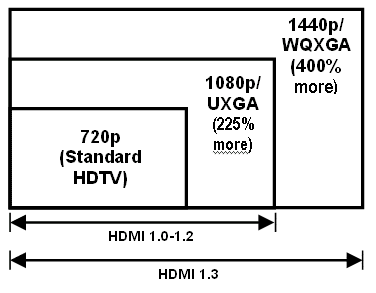

HDMI 1.3.... why would they choose to use that instead of earlier versions of HDMI? TVs that support a higher definition than 1080p AREN'T EVEN OUT YET (unless you've got a cinema screen at your place)! Oh well... BUT why would Sony put the expensive version inside its console? Sony are so stupid sometimes! ;)

Now the link below explains the first link I posted above.... that board, the same Cell/RSX combo, supports 4K resolutions! READ! At the bottom of the page it states: "RSX is a graphics processor jointly developed by NVIDIA Corporation and Sony Computer Entertainment Inc. 4K: image resolution of 4096 x 2160 pixels (H x V) - more than four times the resolution of full HD (1920 x 1080)"

Here
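The "more than four times" claim in that quote is easy to check with a couple of lines of Python. This is only pixel-count arithmetic on the two resolutions given in the quote and says nothing about what the hardware can actually render in real time.

```python
def pixel_count(width: int, height: int) -> int:
    """Total number of pixels in a frame of the given size."""
    return width * height


FOUR_K = pixel_count(4096, 2160)   # 4K as defined in the quoted press text
FULL_HD = pixel_count(1920, 1080)  # full HD
RATIO = FOUR_K / FULL_HD

if __name__ == "__main__":
    print(f"4K: {FOUR_K} pixels, full HD: {FULL_HD} pixels, ratio {RATIO:.2f}x")
```

That gives 8,847,360 versus 2,073,600 pixels, a ratio of roughly 4.27.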

Remember when they originally had 2 HDMI "1.3" ports planned for the PS3? They took them out to lower the cost of the machine... Doesn't mean the power isn't there to do what it's meant to do... Produce cinema quality visuals in realtime.

Thank you

Yawn, you may be easily convinced but I have this nagging thing called reality telling me something isn't right here.

You are so lost in the figures, hardware and hype you don't see what is right in front of you, a console that is definitely not outputting 2TFlop quality games.

Now you may still be lost in the 2005 hype machine but most people have come to their senses and moved on, you should as well.

In fact, it readily gets outperformed by a factor of at least 2.5 to 4 by a 300 GFLOP card, the GeForce 8800.

But I heard that it does full retrospectrus, with forcefield precision dynamation reflexes on a level of tribillion shadow clocks per millisecond.

I could be wrong though.

It says prototype board, so I doubt it's in the PS3...

HERE!!

The ZEGO platform is based on Sony's Cell Broadband Engine first seen in its PlayStation 3 gaming console along with RSX graphics technologies, and was developed to cut the processing times of creating and rendering increasingly complex high-resolution visual effects and computer graphics!

The second link is older than the first one I provided.. but at the bottom it does say 4K image resolution!

I bet you my life that 75% of the people reading this on System Wars do not understand a thing you just said. I understand it because I work for AMD/ATI, but I doubt you do. And that link, wth is with that?

The fact that people talk about things blindly by throwing down numbers and preach that they're right shows how ignorant they really are. It scares me, because they are going to use their ignorance to convince other people. And now my question is: what is this post trying to prove or disprove? I mean, what is the purpose of this thread exactly, so I can throw down some insight?

Just because it's based on it doesn't mean that the Cell is equivalent to it :?

Well at least 25% are listening then...:D

The purpose of this thread is to help people stop comparing the G70 GPU to the PlayStation's sophisticated graphics card! RSX was made to work with Cell; it's a new breed of graphics card altogether... The technology that is in it will take years to describe.

Did you also know that the PS3 graphics card just so happens to be the most expensive part to manufacture, above Cell and Blu-ray?

Seems kinda weird for them to give Nvidia so much money just for a crappy off-the-shelf card, don't it?

Does all this stuff seriously go through your head when you're having fun playing video games???

death919

Lol no not at all... i love playing video games....

Also as a quick correction to something you posted before, Killzone 2 uses Quincunx AA, not 4xMSAA. Quincunx uses only 2 sub-samples, which gives it the same performance characteristics as 2xMSAA. However it also adds a slight blur to the final image.
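To picture why Quincunx costs about the same as 2xMSAA yet blurs, here is a minimal Python sketch of the resolve step. It assumes the commonly described layout: one stored sample at the pixel centre and one at a pixel corner shared with the neighbouring pixels, blended with weights 1/2 for the centre and 1/8 for each of the four surrounding corners. The `center` and `corner` grid names are made up for illustration; this is not Guerrilla's or NVIDIA's actual code.

```python
def quincunx_resolve(center: list[list[float]],
                     corner: list[list[float]]) -> list[list[float]]:
    """Blend each pixel's centre sample with its four corner samples.

    center is an h x w grid; corner is (h + 1) x (w + 1), because every
    corner sample is shared between the pixels that touch it.
    """
    height, width = len(center), len(center[0])
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            corners = (corner[y][x] + corner[y][x + 1]
                       + corner[y + 1][x] + corner[y + 1][x + 1])
            out[y][x] = 0.5 * center[y][x] + 0.125 * corners
    return out


if __name__ == "__main__":
    # One bright corner sample leaks into all four pixels that share it.
    dark = [[0.0, 0.0], [0.0, 0.0]]
    one_corner = [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]
    print(quincunx_resolve(dark, one_corner))
```

Only two samples are stored per pixel, but each corner sample feeds four output pixels, which is where the slight blur comes from.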

Back on-topic...it's 100% confirmed RSX is a G70-derivative. The only difference is that Nvidia modified it to use FlexIO instead of PCI-e. Anandtech spelled it out quite clearly here. Or if you want, search for "RSX g70" on the beyond3d forums and have a look at some of the links that pop up. The topic was discussed to death back when PS3 was released. There are absolutely no surprises on that front: it performs exactly like a G70 with half the bandwidth.

Thank you. You are so on my Christmas card list next year.

Please run geometry shader programs on RSX.

[QUOTE="faultline187"][QUOTE="Theverydeepend"] I bet you my life that 75% of the people reading this on System Wars do not understand a thing you just said. I understand it before I work for AMD/ATI, but I doubt you do. And that link, wth is with that? The fact that people talk about things blindly by throwing down numbers and preach that they're right shows how ignorant they really are. It scares me because they are going to use their ignorance to convince other people. And now my question is: what is this post trying to prove or disprove? I mean, what is the purpose of this thread exactly, so I can throw down some insight?

ronvalencia


Please run geometry shader programs on RSX.

Well yes sir, you are totally correct! The NVIDIA GeForce 8800 GPUs were the first to provide hardware support for Geometry Shaders, and as you know this feature is only supported by DirectX 10 and Shader Model 4.0.

Also, another feature of DX10 is 128-bit High Dynamic Range lighting! So if RSX supports 128-bit HDR, that makes it Shader Model 4.0 compliant. Correct?
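For anyone following the 64-bit vs 128-bit HDR argument, here is a rough sketch of what those labels mean in memory terms. It assumes "64-bit" means four 16-bit float channels and "128-bit" means four 32-bit float channels per pixel, and it uses a 1280x720 target purely as an illustration; the function names are made up for this example and nothing here is a measurement of RSX itself.

```python
# Per-pixel storage for a four-channel (RGBA) render target.
# Assumption: "64-bit HDR" = 4 x 16-bit floats, "128-bit HDR" = 4 x 32-bit floats.
# The 1280x720 target size is illustrative only.
CHANNELS = 4


def bytes_per_pixel(bits_per_channel):
    """Bytes needed for one RGBA pixel at the given channel depth."""
    return CHANNELS * bits_per_channel // 8


def surface_bytes(width, height, bits_per_channel):
    """Total bytes for a single surface of the given size and depth."""
    return width * height * bytes_per_pixel(bits_per_channel)


formats = [("rgba8", 8), ("fp16", 16), ("fp32", 32)]
sizes = {name: surface_bytes(1280, 720, bits) for name, bits in formats}

for name, size in sizes.items():
    print(f"{name}: {size / 2**20:.2f} MiB")
```

The point is only that an fp32 surface costs four times the memory of a regular 32-bit one, so "can render to it" and "games use it for the main buffer" are two different claims.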

Back on-topic...it's 100% confirmed RSX is a G70-derivative. The only difference is that Nvidia modified it to use FlexIO instead of PCI-e. Anandtech spelled it out quite clearly here. Or if you want, search for "RSX g70" on the beyond3d forums and have a look at some of the links that pop up. The topic was discussed to death back when PS3 was released. There are absolutely no surprises on that front: it performs exactly like a G70 with half the bandwidth.

Also, as a quick correction to something you posted before, Killzone 2 uses Quincunx AA, not 4xMSAA. Quincunx uses only 2 sub-samples, which gives it the same performance characteristics as 2xMSAA. However, it also adds a slight blur to the final image.

Teufelhuhn
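To show where the blur in Quincunx comes from, here is a minimal sketch of a quincunx-style resolve. It assumes the commonly cited filter weights (1/2 for the pixel-center sample, 1/8 for each of the four corner samples) and a hypothetical layout where corner samples are shared between neighbouring pixels; it is not taken from any Killzone 2 or RSX code.

```python
def quincunx_resolve(center, corner):
    """Resolve a quincunx-style sample layout into final pixels.

    center: H x W grid of samples taken at pixel centers.
    corner: (H+1) x (W+1) grid of samples taken at pixel corners,
            each shared by up to four neighbouring pixels.
    Weights are an assumption: 1/2 center, 1/8 per corner.
    """
    height, width = len(center), len(center[0])
    out = []
    for y in range(height):
        row = []
        for x in range(width):
            corners = (corner[y][x] + corner[y][x + 1]
                       + corner[y + 1][x] + corner[y + 1][x + 1])
            row.append(0.5 * center[y][x] + 0.125 * corners)
        out.append(row)
    return out


# A hard vertical edge: left column dark, right column bright.
center = [[0.0, 1.0],
          [0.0, 1.0]]
corner = [[0.0, 0.0, 1.0],
          [0.0, 0.0, 1.0],
          [0.0, 0.0, 1.0]]
resolved = quincunx_resolve(center, corner)
print(resolved)
```

Only about two samples per pixel are stored (one center, one shared corner), which is why the cost is close to 2xMSAA, but every output pixel pulls in samples that also belong to its neighbours, so edges get softened.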

Oh, I had to scroll back! I forgot about you!

Let's see, the link that you gave me was an article that goes back to ummmm JUNE 24th 2005

While the following article below, is May 25th 2005!

RSX WAS STILL IN DEVELOPMENT

So how the hell do they know so much about this supposedly weak GPU that's in the PS3? You do also know that a lot was changed with the GPU in that time! And no additional information has been available since E3 2005...

AND WHY would NVIDIA use GT QUAD SLI 7 SERIES cards to compare to the PS3?

So obviously they know something we don't! Because RSX is still UNDER NDA! Why so secret?

It's at the last minute of the clip... He states "This quad SLI behind me will give gamers an idea of what the PlayStation will be like"

Then he had to backtrack, saying no no no, it won't have four cards in it! LOLOL

HERe

If you have any other useful information, other than "it's a G70, it's a G70" (which in fact is not true), that would be much appreciated.

Oh, and here is the link where I got the 4x anti-aliasing (second to last paragraph, I think) 8)

4X

thank you

It's based off the G70 architecture, nVidia even states this. I highly doubt a 2 year old GPU is more powerful, let alone on par with the current offerings of nVidia and ATI in the PC market.

faultline187

Based! Exactly, not meaning it is..

Killzone 2 has 128-bit HDR lighting.. that is only possible on DX10 HARDWARE!

The article clearly states DX10 VISUALS and beyond on PS3. There's no ifs, ands or buts about it!

Like it or not, PS3 is still moving forward and nowhere close to being MAXED!

What gibberish. Sony could make a pot of poo and the fanbois would buy it. Do you realize that DirectX 10 is a MICROSOFT Application Programming Interface? PS3 uses old graphics hardware, get over it. There is nothing special about the PS3, there is no hidden power.

2006: Wait for teh hidden powah of teh CELL!

2007: Wait some more, THIS will be the year of the PS3!

2008: ....eh just wait.....? Oh, and this will be the year of the PS3...

2009: Waiting for FFX......

2010: Microsoft announces new consoles... FFX finally released :P

Actually the GPU in the PS3 can do 1.8 T-flops, so it's pretty fast. The latest ATI cards have reached 1.5 T-flops.

Martin_G_N

Which is very strange don't you think?

If you went purely by the released figures RSX should be stepping all over the latest GPUs, yet it is quite the opposite.


Sony calculated the performance of both RSX and Cell in a manner which would make their numbers extremely high. ATI does it differently and calculates theirs based off real-world gaming performance, not based off floating at home performance.

Sony calculated the performance of both RSX and Cell in a manner which would make their numbers extremely high. ATI does it differently and calculates theirs based off real-world gaming performance, not based off floating at home performance.

Riki101

Oh I know Sony's 2 TFlop figure is bull, it's just grinding that into the heads of the fanboys.


I think that the PS3's GPU could be that fast, but it seems it's built totally differently from all the other GPUs out there, so it requires different thinking, just like the Cell. I think we'll see some pretty amazing graphics engines developed only for the PS3 later on. Most games out there use the engine from the X360, and that doesn't work. And we're seeing this already with KZ2. The biggest mistake of the PS3 and X360 would be the amount of RAM available, though.

it seems it's built totally different from all the other GPU's out there, so it requires different thinking, just like the cell.

Martin_G_N

No it's not, it's based around the old G70 specification, practically every source states that. You can even go to the wiki and compare them if you want, this is not some experimental architecture like Cell.

This is why I dislike PS3 gamers: you never did get over that supercomputer hype, so you rationalize around reality to maintain the fantasy. If RSX had the performance everyone thinks it does, it would be running all the PS3 games at 1080p right now, like Sony promised back in 2005.

The OP thinks RSX is better than an ATI 4800 and Nvidia 280 because of a number. Well, why isn't PS3 performing like this then? Why is Call of Duty 4 performing below 720p when according to those benchmarks it should be doing 1080p easily? Why isn't Assassin's Creed running at 1080p? Why isn't Quake Wars running at 1080p?

The OP is deluded and so is everyone who agrees with him, another example of PS3 hype gone wrong.
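For a sense of scale on the 720p vs 1080p point, here is a quick back-of-the-envelope sketch. It only counts pixels per frame using the standard 1280x720 and 1920x1080 frame sizes; the names are made up for this example, and it says nothing about shader cost, bandwidth, or any specific game.

```python
# Standard frame sizes for the two HD modes being argued about.
RESOLUTIONS = {
    "720p": (1280, 720),
    "1080p": (1920, 1080),
}


def pixel_count(name):
    """Number of pixels in one frame at the named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


ratio = pixel_count("1080p") / pixel_count("720p")
print(f"720p:  {pixel_count('720p')} pixels")
print(f"1080p: {pixel_count('1080p')} pixels")
print(f"1080p has {ratio}x the pixels of 720p")
```

So going from 720p to 1080p means shading more than twice as many pixels every frame, which is why a game struggling to hold 720p is a long way from 1080p.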

[QUOTE="AnnoyedDragon"][QUOTE="Martin_G_N"]Actually the GPU in the PS3 can do 1.8 T-flops, so it's pretty fast. The latest ATI cards have reached 1.5 T-flops.

Riki101

Which is very strange don't you think?

If you went purely by the released figures RSX should be stepping all over the latest GPUs, yet it is quite the opposite.

Sony calculated the performance of both RSX and Cell in a manner which would make their numbers extremely high. ATI does it differently and calculates theirs based off real-world gaming performance, not based off floating at home performance.

Umm, no, that is totally incorrect.. Look, I'm sorry, it just is..

Let me explain:

HD, 4K, and beyond

In fact it's the exact same board, the Cell/RSX combo that's in the PS3.. and they are claiming 4K!! That's 4x the resolution of HD!

Do you think Sony is stupid enough to have a G70 at less than 100 GFLOPS as the RSX, with Cell at well over 200 GFLOPS? Do you people believe Sony is stupid enough to put a supposedly weak G70 floating point chip in a computer board!! LOL, I don't think it would even display a picture

I don't know about you, but I don't think I'd pay $5000 for a weak G70 and Cell... would you?

2 TFLOPS is what it has....

Read the link I posted at the start of this thread carefully... MS has kept some of DX10's APIs behind closed doors for too long, almost like it is proprietary. So much of the industry moved towards a new OpenGL years ago, and the new VECTOR GRAPHICS BASED standard is almost upon us. Games can now look as good from $10,000 PCs down to PDAs, since the graphics are vector based and scalable.

"Because MT Evans completely replaces DX10 features and adds features the Manufacturers have already built (RSX) into their cards and are also contributing members of OpenGL. Microsoft locked those features out forcing their own standards!"

And it has come back to bite them.

This is no lie, it's the truth! And if you don't believe me.... then I guess you're going to have to see with your own eyes!
