Why does the leaked X1 SDK mention this:

"The Multi-GPU DMA (XDMA) block is busy."

XDMA

Why would that instruction be in the Xbox One Dec SDK?

Serious question... I am just interested in what you guys think.

Again, my point was about the PC's shared memory features. The 9800 picture was the quickest one I could grab to make my shared memory point, since I was posting from my 8-inch tablet and don't have my desktop or my gaming laptop with me.

The PC's shared graphics memory has hardly changed from DX10 to DX11.2.

Yeah, keep changing the topic. I'm afraid it's too late, and you look completely ignorant.

Gameplay > Graphics, but keep wishing it was the other way around with your beautiful empty worlds.

gameplay + graphics > gameplay

You smell of desperation my dear lem.

Good gameplay> Mediocre gameplay=Good graphics.

What's the point? We can do this all day, but Fast robby was actually right: gameplay should ALWAYS take precedence over graphics, Killzone Shadow Fall being a good case of what happens when it doesn't.

Or Ryse. Fact is both companies can do gameplay, but the PS4 will always be able to pull off better graphics.

@b4x: Could have something to do with the rumors floating around that the XB1 GPU is based on a newer GCN.

On a side note, how funny is it to see @tormentos' ignorance bring out the wolves.

I just don't understand why it says that in the SDK.

Trust me dude on this, tormentos will never back down. His mind won't allow it. He will dredge up info from 2000 to try to give his arguments context and strength. Stating second hand knowledge as gospel. CBOAT.....

I don't remember Microsoft or AMD ever giving information about the GPU in the X1. Have you?

I'm not saying anything about the hardware in the X1. Hell I don't know enough to make those uneducated claims. I damn sure won't spread it as gospel. I just want to know why the instruction is in the leaked SDK.

Again, my point was about the PC's shared memory features, not about DX10. You're misusing the context of my post.

From http://www.bit-tech.net/news/gaming/2013/06/28/directx-11-2/

DX11.2's Tiled Resource,

Designed to allow a game to use both system RAM and graphics RAM to store textures, Leblond claimed that tiled resources will enable DirectX 11.2 games to vastly improve the resolution of textures displayed in-game. By way of proof, Leblond showed off a demonstration that used a claimed 9GB of texture data - the majority of which was held in system RAM, rather than graphics RAM.

@b4x: Well, M$ has confirmed most of the main things we know, but they have been very vague in their explanations. The only information I've seen from M$ about the X1 GPU was in the Eurogamer interview, but they haven't gone into depth about it like they did with the 360 or the way Cerny has explained the PS4. I figured it was just because it was known that the PS4 had a bigger GPU, so there was no sense trying to fight a losing battle. AMD has yet to comment on either GPU found in both systems, and in the Eurogamer interview Goossen and Whitten pretty much just talk about the system as a whole instead of any one component. I just assumed that they were trying to emphasize that they built a more customized and balanced machine compared to the PS4.

As far as XDMA goes, I'm skeptical as to what it would do for the Xbox. From my understanding, XDMA is specifically for CrossFire systems with more than one GPU and is only found in newer AMD cards like the 290. The XB1 has a single GPU, so I don't know what it would be for if the Xbox had it.

Damn it Tormentos, why must you ruin every single promising tech thread with your nonsense?

If you can't fathom why "PRT are textures that have only portions of the texture stored in GPU VIDEO MEMORY." implies two memory pools, you have no business in this thread.

WTF? PRT uses VRAM for partial storage; the parts of the textures that are not loaded rest on the HDD, not in system memory. What the fu**? Quote AMD saying PRT needs 2 memory pools or works better with them. In fact, do a search for PRT working better with 2 memory pools and see what you get..lol.

Stop singling me out. If you are wrong, you are wrong, period.

Oh, by the way, Trials Fusion is a PRT game which uses the feature on both the Xbox One and PS4, and it is superior on PS4, 1080p vs 900p. Nothing more to say.

Read before you post, dude. I posted a slide from AMD itself on the matter.

'No is not dude and the PS4 also has DMA'

He never said he didn't, he just questioned the logic of them being there

'The PS4 is stronger and that is how it is going to be all gen long,no secret sauce,DX12 or cloud will change that..'

Nowhere in his post did he say it wasn't or that it was going to change

You must be reading a different post because he never said any of the shit you are trying to debunk.

You cows are obviously extremely paranoid. Someone posts an article about something the Xbone can do and says nothing about it making the Xbone more powerful than the PS4, yet you guys seem to think that by posting the article they are somehow implying that, when they are not.

You accuse him of not knowing shit, yet clearly you don't even know how to read properly. Your reading comprehension is worse than my 8-year-old kid's, and the school keeps telling me he's behind for his age.

You basically just reamed a guy out for saying stuff he didn't actually say, the kind of thing B4X would do. Well done.

No dude, read the whole conversation. He thinks DMA and DME are different; they aren't. As stated by MS's own engineers, they are the same, just like Partially Resident Textures and Tiled Resources, as MS calls them on Xbox One. And we know why MS did it: to make it seem like something unique when in fact it wasn't. For how many months did lemmings here claim that Tiled Resources were not the same as PRT? Even Ronvalencia did it, until Granite, a middleware developer, let the cat out of the bag. Not that Sony fans hadn't been telling MS fans for months.

Read the whole conversation and not just what serves you best, my lemming friend.

Yeah, Killzone alone; let's not mention Ryse, which is even worse. A game doesn't need to suck graphically to be good; there were plenty of good-looking games that were good.

That is not what AMD says, and on Xbox One both ESRAM and DDR3 are VIDEO memory. It may be 2 different pools, but both can be used for the same thing. When DDR3 is used for PRT it is the same shit as on PS4, but with a huge slow pile of RAM.

Leblond showed off a demonstration that used a claimed 9GB of texture data - the majority of which was held in system RAM, rather than graphics RAM.

It's a clever trick, and one that could help boost the quality of future PC games

That's the part you left out, like always, selective reader: that is for PC, not Xbox One. It wasn't demoed on Xbox One, just like the so-called double performance of DX12 wasn't demoed on XBO and they were already piggybacking it onto the Xbox One, when in reality the DX12 gains are already on Xbox One.

Again...

http://www.eurogamer.net/articles/digitalfoundry-2014-trials-fusion-face-off

You have theories; this is practice: the PS4 version is superior on a tiled resources game...

"I'm not a detective... but I play one in SystemWars."- Tormentos

Oh, I am not the only one who tagged you as blackace, and even questioned your gif attacks of certain screens which only you and blackace post, aside from your meltdowns and arguments about being a real gamer..lol

That's such a ridiculous statement from you. Blackace has been here since 2002, and now suddenly he is going to start posting with two accounts, and at the same time let one account go through proxy servers every time he does this? Don't make me laugh, as if anyone would put that amount of time into an online forum. b4x already posted more than 5000 times this year alone; who would have the time to also post so much with the other account... It just doesn't add up, so you might want to drop it.

@tormentos

1. Programmers code against the Tiled Resources API, not against AMD PRT hardware. My DirectX 11.2 Tiled Resources example link includes main memory and video memory usage, with the majority of the texture data set stored in main memory. There's nothing in AMD's PRT slides that stops system memory usage. AMD PRT was initially a PC desktop solution for OpenGL workstation use cases, e.g. texture data that exceeds the GPU's VRAM. Note that workstation PCs can have 16 to 64 GB of system memory with GPU cards of up to 16 GB VRAM.

Xbox One's 32MB ESRAM *is* video memory which is not much different to the old ATI's Hyper Memory i.e. cheap PC video cards with very small fast VRAM and shared system memory model.

X1's TMUs can read and write on either ESRAM or DDR3.

X1's ROPS can read and write on either ESRAM or DDR3.

AMD hasn't removed ATI's HyperMemory features in high-end Radeon HD cards.

X1's APIs allow direct control over this fast/small memory space, while the PC's HyperMemory version is governed by the driver (with CPU usage).

PC DirectX 11.2's tiled resources give the programmer better control over texture allocation. This is for the PC's cheap discrete video cards with 1GB to 2GB of GDDR5 VRAM, i.e. the entire 1920x1080 frame buffers can fit in 1GB or 2GB of VRAM, with tiled textures streaming from main memory. The PC doesn't worry about render targets being tiled.

X1's version is trickier since the 32MB ESRAM is smaller than the PC's cheap 1GB/2GB GDDR5 VRAM cards.

From http://www.tinysg.de/techGuides/tg4_prt.html

The Southern Islands graphic chips of Radeon 7000 series boards and FirePro W-series Workstation cards support a new OpenGL extension called AMD_sparse_texture that allows to download only parts of a texture image (tiles), rather than providing the entire image all at once. The driver will commit memory only for the downloaded tiles, and the application may decide to unload tiles again if they are no longer needed. This way, the graphics memory can serve as a texture tile cache, indexing parts of vast textures in main memory or even on disk.

2. I have shown you the pitfalls for straight HDD to VRAM i.e. texture pop ins. PC solves this problem with fast SSD (slow storage memory relative to DDR3, but faster than HDD).
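To make the "graphics memory as a texture tile cache" idea from the quote above concrete, here is a rough Python sketch. It is only a toy model, not how any driver or the Tiled Resources API actually works: the `TileCache` class, the least-recently-used eviction policy and the dictionary standing in for main memory or disk are all assumptions made for illustration.

```python
from collections import OrderedDict


class TileCache:
    """Toy model: a small pool of 'VRAM' holding only the tiles in use.

    Hypothetical illustration; eviction policy (LRU) is an assumption.
    """

    def __init__(self, capacity_tiles, backing_store):
        self.capacity = capacity_tiles   # how many tiles fit in "VRAM"
        self.backing = backing_store     # stands in for main memory or disk
        self.resident = OrderedDict()    # tile id -> data, oldest first
        self.misses = 0                  # fetches that had to stream a tile in

    def fetch(self, tile_id):
        # Hit: the tile is already resident, just mark it as recently used.
        if tile_id in self.resident:
            self.resident.move_to_end(tile_id)
            return self.resident[tile_id]
        # Miss: evict the least recently used tile if the pool is full,
        # then stream the requested tile in from the slower backing store.
        self.misses += 1
        if len(self.resident) >= self.capacity:
            self.resident.popitem(last=False)
        data = self.backing[tile_id]
        self.resident[tile_id] = data
        return data


if __name__ == "__main__":
    backing = {i: f"tile-{i}" for i in range(4)}
    cache = TileCache(capacity_tiles=2, backing_store=backing)
    for tile in (0, 1, 0, 2):
        cache.fetch(tile)
    print(cache.misses, list(cache.resident))
```

The slower the backing store, the more each miss hurts, which is the texture pop-in problem from point 2.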

PC GPUs, especially GCN, have nothing like ESRAM inside them. ESRAM is basically a cache or frame buffer which tries to emulate the effect a big pool of fast RAM has on a PC GPU.

And while PCs have 2 memory setups, they don't work like the Xbox One, with 1 pool of system+video RAM and 32MB of fast memory. At least that is not how it works on almost all desktop PCs.

I already quoted the part you left out. Like always, you just post what serves you best. That example was for PC, much like the DX12 benchmarks claiming double performance were, and we all know how that ended: the gains would not transfer to the Xbox One..

The driver will commit memory only for the downloaded tiles, and the application may decide to unload tiles again if they are no longer needed. This way, the graphics memory can serve as a texture tile cache, indexing parts of vast textures in main memory or even on disk.

2. I have shown how your point is irrelevant, because the PS4 also takes an SSD and the Xbox One doesn't, and that was a streaming problem. In the end PRT was the same on both platforms, and the end result, which is what matters, was that the PS4 was superior. So much for TR working better with 2 memory pools. Now quote AMD on it, because you have failed to give me a quote from AMD saying PRT works better with 2 memory pools.

Digital Foundry: When you look at the specs of the GPU, it looks very much like Microsoft chose the AMD Bonaire design and Sony chose Pitcairn - and obviously one has got many more compute units than the other. Let's talk a little bit about the GPU - what AMD family is it based on: Southern Islands, Sea Islands, Volcanic Islands?

Andrew Goossen: Just like our friends we're based on the Sea Islands family.

But as I have learned already, you would still claim it is something different even if Phil Spencer came to slap you in the face and tell you that you are a raging lunatic..

The fun part is that later you deny riding the secret sauce and pretend you don't ride that shit..lol

This doesn't lie...

1 core on each GPU, it was already confirmed, Blackace. There is nothing secret. Do you see the PS4 picture? It has a 20 CU GPU, 3 memory controllers and 2 Jaguar clusters. Do you see the Xbox One SoC?

1 core, ESRAM, ESRAM, ESRAM, 2 memory controllers and 2 Jaguar clusters. Yeah, MS wasted its die space on ESRAM. Why did it do so?

1-Because ESRAM can produce power and it is better to have it than 6 extra CUs.

2-Because DDR3 memory on Xbox One is too slow for GPU+CPU.

3-Because after 14 CUs GCN GPUs get diminishing returns.

Pic one..

What you are quoting there is for Crossfire without a bridge, my god...

Digital Foundry: When you look at the specs of the GPU, it looks very much like Microsoft chose the AMD Bonaire design and Sony chose Pitcairn - and obviously one has got many more compute units than the other. Let's talk a little bit about the GPU - what AMD family is it based on: Southern Islands, Sea Islands, Volcanic Islands?

Andrew Goossen: Just like our friends we're based on the Sea Islands family.

Digital Foundry XBO Architect interview.

The GPU is Sea Islands GCN 1.1 and the SoC was exposed already. There is no secret sauce; look at the pictures up there..

That is because MS doesn't talk about inferior hardware in great detail. If they were ahead, oh, they would even make silly-ass charts like in the 360 days, but when they know they are losing it is more about balance than actually talking about how weak the console is and why they had to give it a last-minute upclock..

Oh AMD did comment on it..

He said, "Everything that Sony has shared in that single chip is AMD [intellectual property], but we have not built an APU quite like that for anyone else in the market. It is by far the most powerful APU we have built to date."

http://www.theinquirer.net/inquirer/news/2250802/amd-to-sell-a-cut-down-version-of-sonys-playstation-4-apu

Fanboys like you refuse to listen. Since February 2013 it was known that the PS4 was more powerful; AMD itself stated so. Again, there is no need to talk in detail about how much weaker your hardware is. MS was already making an ass of themselves with the whole balance crap that only Xbox fans eat up.

How the fu** is using slower RAM, with a small patch of fast RAM and a weaker GPU, better balanced than having a stronger GPU, a straightforward design and 1 memory structure which is unified and fast, without curves or needing extra work to make it work?

The Xbox One is so balanced that developers must break their backs fighting with ESRAM to fit all their targets. It is so balanced that the majority of its games are superior on the other platform, and in 2014 it is still plagued by sub-1080p games and even 720p games.

The Xbox One is far from balanced. On the contrary, it is unbalanced and weak, and only fanboys believe that shit.
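For anyone wondering what "fighting with ESRAM to fit all their targets" means in numbers, here is a quick back-of-the-envelope sketch in Python. The 32MB ESRAM size comes from this thread; the 4 bytes per pixel and the number of render targets are assumptions for illustration, not figures from any actual game.

```python
ESRAM_BYTES = 32 * 1024 * 1024  # 32MB of ESRAM, as discussed in the thread


def render_target_bytes(width, height, bytes_per_pixel):
    """Size of one uncompressed render target in bytes."""
    return width * height * bytes_per_pixel


def fits_in_esram(targets):
    """Return (total bytes, whether the whole set fits in ESRAM).

    `targets` is a list of (width, height, bytes_per_pixel) tuples;
    the set of targets a real engine uses is an assumption here.
    """
    total = sum(render_target_bytes(w, h, bpp) for w, h, bpp in targets)
    return total, total <= ESRAM_BYTES


if __name__ == "__main__":
    # Hypothetical setups: N 32-bit targets at 1080p and at 900p.
    for name, (w, h) in {"1080p": (1920, 1080), "900p": (1600, 900)}.items():
        for count in (4, 5):
            total, ok = fits_in_esram([(w, h, 4)] * count)
            print(name, count, "targets:", total, "bytes, fits:", ok)
```

Under these assumptions four 32-bit 1080p targets just squeeze in and a fifth does not, while five 900p targets still fit, which is one plausible reason resolution is the first thing to go.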

Maybe you should read the part where blackace admits having more than 1 account..lol

Do you even think mods check your IP?

Damn man, you really are dumber than you look. I can hop right now into my eltormo account and post from a different IP without using a proxy..lol

But I don't think he changes connection. B4X's arguments, posting style, meltdowns and even the whole 'I am a manticore' crap are the same as blackace's, 100%. We are not talking about a few similarities here.

Oh, the fun part is how B4X acts as if he was here for 10 years..lol...

But I am sure that if it was the other way around, slowroby, you would be saying how that cow was that other cow..lol

@tormentos:

Why do you always type things I know?

I asked why the instruction was in the SDK..... I didn't ask to copy and paste the internet.... of an article I read this summer 10 times. X-rays that I saw the day they were released.

I'm not saying secret sauce anything. I'm asking why the command is in the SDK.

Nothing more, nothing less. If you know.. Tell me.

Why would it even be there? That's my question. I'm aware of all the things you typed.

If you can answer my question that would be cool. Why is it there in the first place?

Because you just uncovered the command line for the second Xbox One GPU, Black4ceX. Now run and make a new thread about it; I am sure your other you would be happy, since he also claims there's a secret GPU inside the Xbox One..

Just wait until E3 2015... just wait...

I'm not blackace for the last time man... You can't be serious with this horseshit.

There are people on here that actually know who I am.. I'm "P_Personal / B4X.

Ask direweasel. I lurked these boards in 2001.. before posting.

Buy / rent an X1 and I'll have me and blackace join the chat. If he agrees. We can do it on the PS4 too.

My XBL account is older... Xbox Beta. I'm sure his is old too. Anyone can have several PSN accounts though.. due to not having a subscription last gen. Xbox cost money since day one. Can't fake that.

Just say when.

EDIT: What's your Xbox 360 gamertag? I'll send you a friend request right now. We can talk.

I don't talk about secret sauce or just wait BS....

If you can't answer why the command is in the SDK, I'll ask on GAF or try to search for an answer.

I want to know why it is in the SDK. That's all.

Well, it's been confirmed that Microsoft silently unlocked a CPU core on the X1 for developers to use in games. And apparently it was used for Assassin's Creed Unity, which explains a lot about the higher framerate averages vs the PS4. MS, you sneaky snake.......

@tormentos: And while PCs have 2 memory setups, they don't work like the Xbox One, with 1 pool of system+video RAM and 32MB of fast memory. At least that is not how it works on almost all desktop PCs.

WTF. The XB1 architecture is almost exactly like a PC. The only difference is that the PC has more VRAM. But just like a PC, the Xbox One has system RAM shared with the GPU and fast-bandwidth VRAM. PC = GPU + VRAM + system RAM. XB1 = GPU + system RAM + ESRAM (= VRAM).

- Can Saturate PCie 3.0 x16 bus bandwidth (16 GB/sec bidirectional) - http://community.us.playstation.com/t5/PlayStation-General/This-Is-Why-the-PS4-is-More-Powerful-Than-Xbox-One/td-p/43871526

PS4 DMA = 16GB/s, PC DMA = 32GB/s, XB1 DME = 35GB/s and 68GB/s

So there is one difference between PS4 DMA and Xb1 DME. Now can you provide me a link to where it says PS4 DMA have extra features compared to normal DMA engines? You have already posted a link to how XB1 DME's are different compared to normal DMA engines. At this point the only difference I see between PS4 DMA's and standard DMA's is the fact that Ps4 has half the bandwidth of normal DMA's while XB1 has up to twice the bandwidth and extra functionality.
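As a sanity check on the PCIe figures above, here is a small Python sketch of where "16 GB/s" for a PCIe 3.0 x16 bus comes from. It assumes the standard PCIe 3.0 parameters (8 GT/s per lane, 128b/130b encoding); the function name is made up for this post, and none of this says anything about what the console DMA engines actually sustain.

```python
def pcie3_bandwidth_gb_per_s(lanes):
    """Theoretical PCIe 3.0 bandwidth in GB/s, per direction."""
    transfers_per_s = 8e9      # PCIe 3.0 signalling rate: 8 GT/s per lane
    encoding = 128 / 130       # 128b/130b line encoding overhead
    bits_per_s = transfers_per_s * encoding * lanes
    return bits_per_s / 8 / 1e9


if __name__ == "__main__":
    one_way = pcie3_bandwidth_gb_per_s(16)
    print(f"x16, one direction:   {one_way:.2f} GB/s")
    print(f"x16, both directions: {2 * one_way:.2f} GB/s")
```

So the 16GB/s and 32GB/s numbers look like the same x16 link counted in one direction and in both directions, rounded up.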

So even though M$ added extra functionality to the DMAs, they are still the same as the PS4's? Going by that logic, since both GPUs are based on Sea Islands, we can say the XB1 GPU is the same as the PS4 GPU regardless of the fact that Sony added extra CUs.

How can you say the XB1 is unbalanced? DDR3 for the CPU and VRAM for the GPU is more balanced than GDDR5 for both CPU and GPU. The PS4 CPU will never be able to keep up with the GPU, which means unbalanced; this has already been discussed. It's a known fact that the XB1 has a latency advantage over the PS4. It's already documented that the PS4's bandwidth can drop below 140GB/s when the CPU is working. The XB1 GPU will never have to sacrifice bandwidth: the CPU can handle the 68GB/s from the DDR3, and the GPU can handle the 68GB/s plus the 200GB/s from the ESRAM. There is no fighting for bandwidth between the CPU and the GPU on the XB1.

Maybe you should ask PC gamers since their system uses slower ram for CPU, and faster ram for GPU. PC gamers average at least 8GB system ram and 2GBs for Vram, but yet PC's stomp all over the consoles.

Bro, just let it go. You're making yourself look ridiculous, so much so that other people can't even ignore it and have stepped in to call you out on your BS and lack of knowledge. Nobody is saying the XB1 is stronger than the PS4; it just has a few extra things in place to try and make up for its shortcomings.

Uumm.. my alt starts with an "X" and there is no 2nd GPU in the XB1. That was debunked a long time ago by Microsoft. El Tormo is still having meltdowns over all of this, I see. The big stuff hasn't even happened yet. lol!! Dude.. keep a defibrillator by your computer. You're going to need it.

To B4X:

I think I have the answer. The Xbox One GPU is internally separated into 2 blocks of SCs (Shader Cores), each with 6 SCs. That is why there are 2 Command Processors for the GPU and why there are multiple display planes. Also, there is a difference between a normal CU and the SCs here, but we won't know the difference until MS explains their hardware better.

@tormentos:

You are talking about the physical form.

X1's ESRAM *is* graphics memory since both TMU and ROPS can read/write on it.

Again,

X1's TMU can read and write to either ESRAM or DDR3

X1's ROPS can read and write to either ESRAM or DDR3

Since X1's GPU also acts like PC IGP, the main memory is also shared as graphics memory.

Closest PC relative is 8 CU GCN enabled AMD Kaveri APU or Intel Iris Pro APU which includes on-chip 128 MB EDRAM.

AMD Kaveri APU doesn't have any Hyper-memory to boost performance.

Intel Iris Pro APU can access both 128 MB EDRAM and DDR3 (IGP mode). AMD and Intel have patent swap agreements.

PS4 is a super scale PC IGP with main memory being 256bit GDDR5-5500.
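The bandwidth numbers thrown around in this thread follow from bus width times transfer rate. A minimal Python sketch, assuming peak theoretical figures only: the 256-bit GDDR5-5500 configuration is from the line above, while the 256-bit DDR3-2133 configuration for X1 is an assumption that happens to match the 68GB/s quoted earlier in the thread.

```python
def peak_bandwidth_gb_per_s(bus_width_bits, mega_transfers_per_s):
    """Peak theoretical memory bandwidth in GB/s.

    bytes per transfer (bus width / 8) multiplied by transfers per second.
    """
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * mega_transfers_per_s * 1e6 / 1e9


if __name__ == "__main__":
    # 256-bit GDDR5 at 5500 MT/s (PS4 main memory, per the post above)
    print(f"GDDR5-5500, 256-bit: {peak_bandwidth_gb_per_s(256, 5500):.1f} GB/s")
    # 256-bit DDR3 at 2133 MT/s (assumed X1 main memory configuration)
    print(f"DDR3-2133, 256-bit:  {peak_bandwidth_gb_per_s(256, 2133):.1f} GB/s")
```

These are ceilings, not what either console sustains once the CPU and GPU contend for the same bus.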

In terms of physical form, there are other products with embedded graphics memory on AMD GPU chip packages.

PC's embedded Radeon HD GCN has on chip package GDDR5.

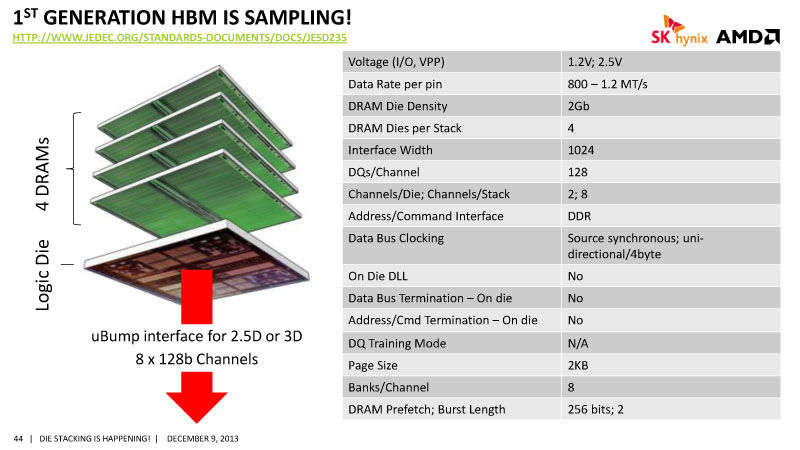

The future AMD GCN has embedded stack memory on top of the GPU die.

Also, HBM would give AMD PC APUs a massive boost in performance, i.e. a GloFo 20 nm APU with 16 CU GCN + 1 to 2GB HBM.

PS4 with SSD upgrade

...

Nearly pointless, i.e. gimped by AMD's tablet-class storage controller, e.g. Battlefield 4, Need for Speed.

Sony didn't select a desktop/workstation/server-class AMD chipset storage controller.

On a side note,

from http://www.rebellion.co.uk/blog/2014/10/2/mantle-comes-to-sniper-elite-3

AMD Mantle enables concurrent ACE (Asynchronous Compute queue) and GCU (graphics command unit) operations

There’s still a fair amount of scope for increasing performance with Mantle, particularly as we’re not yet taking advantage of the Asynchronous Compute queue. This would allow us to take some of our expensive compute shaders – like our Obscurance Fields technique – and schedule them to run in parallel with the rendering of shadow maps, which are particularly light on ALU work

Similar principle for PS4.

AMD Mantle's Multi-GPU mode with single GPU.

No, but when the shit hits the fan, I hope you're still around.

I'll be around when nothing but air blows like the other 2 'milestones' that a certain area of the internet have been hoping for since 2013. Looking forward to your disappointment.

Well, it's been confirmed that Microsoft silently unlocked a CPU core on the X1 for developers to use in games. And apparently it was used for Assassin's Creed Unity, which explains a lot about the higher frame rate averages vs the PS4. MS, you sneaky snake.......

That's bad news then, pretty sure patches have sorted the PS4 frame rate, so not sure what that says about Ubisoft or the unlocking of the core on the xbone :L.

Maybe you should read the part where blackace admits to having more than 1 account.. lol

Do you even think mods check your IP?

Damn man, you really are dumber than you look. I can hop into my eltormo account right now and post from a different IP without using a proxy.. lol

But I don't think he changes connection. B4X's arguments, posting style, meltdowns and even the whole "I am a manticore" crap are 100% the same as blackace's; we are not talking about a few similarities here.

Oh, the fun part is how B4X acts as if he had been here for 10 years.. lol...

But I am sure that if it was the other way around, slowroby, you would be saying how that cow was that other cow.. lol

Yes, I would think mods check IP, on other forums they do this, so...

So will it be back to da powwa of da clawd AGAIN when this falls through AGAIN?

Half a core. Ubisoft lied about the CPU thing with ACU as well, the frame rate wasn't improved at all when they reduced the number of NPCs during tests.

Here comes Ronvalencia again with his useless charts and irrelevant crap that has nothing to do with the real argument.

I can't believe you even posted a chart comparing HDD vs SSD and claimed it is nearly pointless. Are you fu**ing blind, buffoon?

Oh, in BF4 and NFS it is useless... How about the other games, you selective reader?

Seagate Momentus vs stock HDD.

Cold boot: 23 stock - 17.5 SSD

Standby boot: 31.2 stock - 22.5 SSD

Resogun: 19 seconds stock - 11 seconds SSD

Contrast: 14 stock - 5 SSD
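To put those numbers on a common scale, here is a rough sketch that turns each stock vs SSD pair quoted above into a percentage reduction. The figures are copied from the post; treating them all as seconds is an assumption (only the Resogun line states a unit), and the names `load_times` and `percent_reduction` are made up for illustration.

```python
# Load times quoted in the post (stock drive, SSD).
# Assumption: all values are in seconds; only the Resogun line says so.
load_times = {
    "Cold boot": (23.0, 17.5),
    "Standby boot": (31.2, 22.5),
    "Resogun": (19.0, 11.0),
    "Contrast": (14.0, 5.0),
}


def percent_reduction(stock, ssd):
    """Return how much shorter the SSD time is, as a percentage of the stock time."""
    return (stock - ssd) / stock * 100.0


# Hypothetical helper table: test name -> percentage saved by the SSD.
reductions = {
    name: percent_reduction(stock, ssd)
    for name, (stock, ssd) in load_times.items()
}

for name, pct in reductions.items():
    print(f"{name}: {pct:.1f}% shorter on SSD")
```

By this count the savings range from roughly a quarter (cold boot) to roughly two thirds (Contrast), so the benefit depends heavily on the title.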

What the fu**, man, how the fu** can you be this selective? Do you think people are blind and will not look at what you're posting?

Hell, even the damn Scorpio 7200 RPM will boost speed considerably; you don't even need an SSD.

So trying to imply that an SSD doesn't do anything because 2 damn games on a chart with 22 examples didn't benefit is a joke. Hell, look at the Scorpio: it reduced load times in BF4 from 44 seconds to 20 and it isn't even an SSD...

Some may work better, others won't, but to claim it is nearly pointless is a joke, dude, and shows how selective and blind you are, as if I would not see the part where other games do benefit.

But my argument wasn't about load times, it was about faster texture streaming..

That Rebellion quote means nothing; it is for PC, not the Xbox One, which also has an Asynchronous Compute queue, as MS claims.

In the end the advantage is with the PS4 because it is stronger, and there is nothing the Xbox One can do about that: no 2 memory pools, secret compressors and decompressors or any other crap. It is gimped vs the PS4 and its architecture is not very friendly to heavy deferred games with tons of stuff at once, as MGS5 and PES 2014 confirmed.

It was the lack of optimization, which also affected the PC side. ACU will go down in history as one of the worst screw-ups a company has made this gen, and the fact that they admitted to holding back the PS4 version resolution-wise shows how Ubi sold themselves for a few million.

I don't think they implemented it that fast... This was part of the November SDK, even Halo MCC didn't use these resources, so I think it's highly unlikely that AC:U used them. So unless you have a link where Ubisoft is stating this, you guys are talking shit again

"Digital Foundry theorizes that the extra CPU power “may partly explain why a small amount of multi-platform titles released during Q4 2014 may have possessed performance advantages over their PS4 counterparts in certain scenarios.”"

But at the same time they are saying this:

The 2015 Multiplayer design and APIs are made available in preview form back in September 2014, but it's not yet clear when we will begin to see titles utilising this design. There has been discussion as to whether Halo: The Master Chief Collection uses the 2015 design, but with the new technology introduced (in preview form no less) so close to the game's launch, this seems rather unlikely.

Also, that's their theory. Since when should we believe that DF is right when it just THINKS so, with no proof whatsoever? Xbox One versions ran better because of a faster CPU.

Let's say November SDK was actually released the first of November. AC:U was released the 11th of November. Even if Unity went gold one week before release (which is practically impossible), they would still only have 5 days to implement it. To compare, SSOD released 31st of October, and it went gold the 9th of October. 3 weeks before release.
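The timeline argument above can be checked with a few lines of date arithmetic. This is only a sketch using the dates as given in the post; the 2014 year is taken from the thread's context, the 1 November SDK date and the "gold one week before release" date are the post's own hypotheticals, and the variable names are invented here.

```python
from datetime import date, timedelta

# Dates as stated in the post; the year 2014 is assumed from context.
sdk_release = date(2014, 11, 1)    # hypothetical: "first of November"
acu_release = date(2014, 11, 11)   # AC:U release date per the post
acu_gold = acu_release - timedelta(weeks=1)  # hypothetical: gold one week before release

# Days available between the SDK drop and the gold master.
acu_window = (acu_gold - sdk_release).days

# Comparison case from the post: SSOD went gold 9 October, released 31 October.
ssod_gold = date(2014, 10, 9)
ssod_release = date(2014, 10, 31)
ssod_gap = (ssod_release - ssod_gold).days

print(f"AC:U: {acu_window} days between SDK release and gold")
print(f"SSOD: {ssod_gap} days between gold and release")
```

Taking those dates at face value, the window comes out at 3 days rather than 5, which only makes the point stronger, and the SSOD gap of 22 days matches the "3 weeks" quoted.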

Don't overlook the possibility that they modified CPU usage with one of the many patches released since launch day. There were two patches before DF looked at and tested Unity.

Though granted, chances are that the 7th core may not have been the reason, but rather the fact that MS's tools are easier and more efficient to use.

Doesn't matter what it can do, because at the end of the day it still gets curb stomped by the ps4 spec-wise.

What? So it doesn't matter what it does in actual practical examples, but look at the theoretical powaaaaa, much more important...

@tormentos:

Nothing can be done, so Nintendo and Microsoft should just give up and not bother doing anything. Typical idiot statement.

They all have their strengths but you wouldn't see that.

My sample size is larger.

http://www.pcper.com/reviews/General-Tech/PlayStation-4-PS4-HDD-SSHD-and-SSD-Performance-Testing

Note the difference 7200 rpm vs SSD.

My AMD Mantle comment is for PC, hence it's a side note. AMD Mantle can simulate AFR CrossFire on a single GPU which is n+1 GPU context.

I don't think they implemented it that fast... This was part of the November SDK, even Halo MCC didn't use these resources, so I think it's highly unlikely that AC:U used them. So unless you have a link where Ubisoft is stating this, you guys are talking shit again

Here's two.

It isn't the volume of NPCs that are leading to Assassin's Creed Unity's performance issues, but an 'overloaded instruction queue', Ubisoft has said.

"Though crowd size was something we looked at extensively pre-launch, it is something we continue to keep a close eye on," Ubisoft added. "We have just finished a new round of tests on crowd size but have found it is not linked to this problem and does not improve frame rate, so we will be leaving crowds as they are."

Poorly optimised and rushed.

I meant Ubisoft stating they used November SDK for the Xbox One.

The new XDK with DX12 sounds juicy as hell. Looks like Phil trolled everyone about DX12.

You don't even know what most of it is about, Justin.

It'll be meagre just like a lemming's reading abilities. :)

Oh please, dude, what the hell? You just posted another one which shows SSD is faster. You are basically trying to find one that proves your point and failing miserably.

SSD does benefit the PS4, and since that was the problem with Trials Fusion, yeah, the PS4 is superior no matter what.

Anything GCN can do on PC it can do on BOTH consoles, feature-wise.

What? So it doesn't matter what it does in actual practical examples, but look at the theoretical powaaaaa, much more important...

Theoretical.? Hahahaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaaa

Basically all games out there are superior on PS4 except ACU, which was gimped on purpose by a company that had a deal with MS on a holiday bundle.

The PS4's power is not theoretical, it is very well documented. That is like saying the 7850 OC is only theoretically more powerful than the 7770, yeah right..

Power-wise there is nothing they can do, just like Sony could not do anything to beat the Xbox graphically with the PS2. The difference in hardware is too big, period, even more so for Nintendo, which you bring up now..

None has strengths vs the PS4; they are in fact weaker all around.

Wow, such a heated thread. Well, I think the newer SDK is going to help developers make more efficient games. Hope MS releases a game using Tiled Resources as well. I think the future of gaming will be great, especially when games like FH2 are already so high resolution, with MSAA and an open world, using the old SDK. Woot.

There was already a game using Tiled Resources; it is called Trials Fusion and it is superior on PS4.

FH2 looks like garbage compared with DC, and the SDK used for FH2 uses the Xbox One's 10% GPU reserve, which is what really helped the Xbox One gain some frames or a few lines of resolution. No other SDK coming will improve graphics like the 10% SDK did. You people should stop, man; MS will not increase power 30% with every SDK, and many SDKs are actually there to fix bugs or make things easier.

The Xbox One GPU has basically given all it had to give.

The new XDK with DX12 sounds juicy as hell. Looks like Phil trolled everyone about DX12.

The only people Phil trolled were lemmings who still believe in that crap.

MS magic drivers that transform a gimped version of a 7790 into a dual R9 290X... lol

Sure, ok kiddo, here have some fun with this

@tormentos: None has strengths vs the PS4; they are in fact weaker all around.

So let me get this right: according to you, no other console has any strengths vs the PS4, and they are weaker in every area.

Go on, say yes, reconfirm you're an idiot fanboy who cannot see beyond your precious PS4 and cannot appreciate anything that's not on your console.

Name 1 in which the Xbox One or Wii U is stronger, just 1...

Answer the question first.

@tormentos:

Answering a question with a question isn't answering the question.

It's a yes or no question, which you are avoiding answering.

So I ask again, yes or no:

So let me get this right: according to you, no other console has any strengths vs the PS4, and they are weaker in every area.

No, you prove what strengths either of the 2 has over the PS4...

As I already said, name 1. My question pretty much answers yours: name 1 thing in which the Xbox One or Wii U is stronger than the PS4.

Kinect > PS Camera. Xbox One CPU > PS4 CPU because Microsoft overclocked it, which Sony can't do because they can't handle the heat, so the CPU performance of the Xbox One is better than Sony's. This is of course thinking EXACTLY as you do, in terms of theoretical power, so Xbox One CPU > PS4 CPU. Xbox One also supports the 802.11a WiFi band and 5 GHz channels, which PS4 doesn't.

Those are all HW things that Xbox One does better than PS4, off the top of my head. I'm not mentioning the HDMI-In port and Project Morpheus because one has nothing to do with gaming, and the other hasn't been released yet.

Grr wanted him to answer the question first :)
