@gpuking:

I agree with this guy, 10TF is wishful thinking.

Here are the facts.

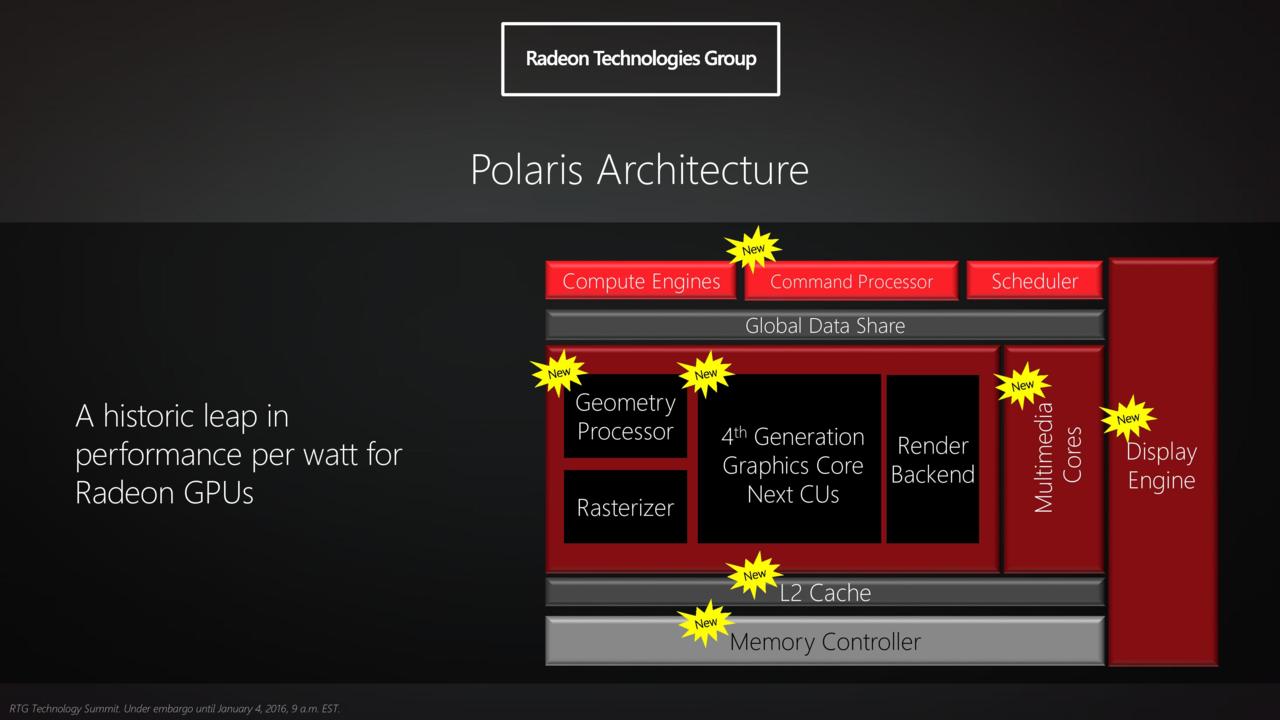

1. No single core 10tf gpu exists on the market right now, much less with the optimal TDP to fit inside a console. No, not even 14nm Polaris.

2. 5-6x Neo is 20-25tf, not quite 10tf.

3. Suppose such a 10tf console is real: it won't sell at anywhere near $400-$500; it would be upwards of $1200.

4. MS won't alienate the established 20m users with a new architecture

5. The raw power of 10tf won't be remotely close to fully utilized since 3rd parties need to make games for Xbone too; you'd only get AA, resolution and other incremental improvements at best.

6. Use your god damn brain lems before you post

15m user base, not 20m.

LOL I weep for them. 15m it is then.

@gpuking:

The problem for you is that Vega has 4X perf/watt versus Polaris's 2.5X perf/watt. Navi has 5X perf/watt.

Within less than a year, AMD goes from 2.5X to 4X perf/watt.

At a similar TDP to PS4, NEO's GPU is 2.3X faster than PS4's GPU, which follows Polaris's 2.5X perf/watt improvement.

I'll fucking LOL if this is true, so many cows here would look so bad.

If anyone can get their hands on next gen GPUs and is willing to sell at a loss, it would be Microsoft.

@gpuking:

The problem for you is that Vega has 4X perf/watt versus Polaris's 2.5X perf/watt. Navi has 5X perf/watt.

I know that, but like all future roadmap GPUs they are nowhere near mature enough to be mass produced yet due to bad yields. The rumor puts the release at next spring, doesn't it?

I'll fucking LOL if this is true, so many cows here would look so bad.

If anyone can get their hands on next gen GPUs and is willing to sell at a loss, it would be Microsoft.

I'm already LOLing at your sheer ignorance, lol. It's alright breh, you had it tough this gen, we heard ya.

@gpuking:

I know that, but like all future roadmap GPUs they are nowhere near mature enough to be mass produced yet due to bad yields. The rumor puts the release at next spring, doesn't it?

Say hello to the iPhone business model, i.e. a walled-garden software ecosystem with hardware upgrades every year. The end user doesn't have to worry about IT support issues.

Polaris = Q2/Q3 2016 for Polaris 10 and 11. 2.5X perf/watt.

Vega = Q4 2016/2017 for Vega 10. Unknown for Vega 11. 4X perf/watt.

Navi = 2018, 5X perf/watt. The perf/watt improvements are slowing down, so it's time to switch to another process tech, e.g. 7 nm.

That's a hardware upgrade almost every year.

This is why Sony has stated that a PS5 may not exist: constant evolution of the PS4 may reach PS5 level anyway, i.e. FreeBSD OS on X86-64 plus a Radeon HD driver shouldn't have any PS2/PS3-style generation limits. It's the exclusive titles that sell the platform; the hardware is just an empty canvas and a paint brush. There are larger canvases and better quality paint brushes.

Sony didn't resist the FinFET Polaris upgrade and stick with what they had, i.e. similar $$$, similar TDP, 2.5X better perf/watt, BC capable (NEO can run unmodified PS4 titles without enhancements). Sony pulled the trigger on a hardware refresh.

If Xbox NEXT has 10 TFLOPS then PS4 NEXT would have it also. Both MS and Sony are just like PC OEMs with semi-custom needs, and both want the lowest price and the best "bang per buck". Semi-custom = non-PC form factors and cost-reduced assembly (it's a single large SoC).

The PCB and surface-mount chips (including the large SoC) for XBO and PS4 are assembled by automated robots. With a desktop gaming PC, you need human labor to assemble the different PC parts.

@shawty_beatz:

First of all, I wasn't being bitchy. Not even close.

Why "first of all" when that's all you're going to say?

@gpuking:

I agree with this guy, 10TF is wishful thinking.

AMD is already offering 5X perf/watt to its console corporate customers. The road map's main purpose is to entice its existing and new corporate customers to commit to AMD's solution. The same goes for Intel's and NVIDIA's road maps.

Using Navi's 5X perf/watt:

(5X / 2.3X) x 4.2 TFLOPS = 9.1 TFLOPS.

Using Vega's 4X perf/watt:

(4X / 2.3X) x 4.2 TFLOPS = 7.3 TFLOPS.
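For anyone who wants to check the arithmetic, here is a rough Python sketch of that scaling. It assumes the power budget stays fixed, so throughput scales with the ratio of the two perf/watt multipliers; the function name `scaled_tflops` is made up for illustration. Computed this way, the Navi case lands near 9.1 TFLOPS and the Vega case near 7.3 TFLOPS.

```python
def scaled_tflops(base_tflops, base_gain, target_gain):
    """Scale a known TFLOPS figure by the ratio of two perf/watt
    multipliers, assuming the same power budget (an assumption)."""
    return base_tflops * target_gain / base_gain


# Figures quoted in the thread: NEO at 4.2 TFLOPS with a 2.3X gain over PS4.
NEO_TFLOPS = 4.2
NEO_GAIN = 2.3

for name, gain in (("Vega", 4.0), ("Navi", 5.0)):
    estimate = scaled_tflops(NEO_TFLOPS, NEO_GAIN, gain)
    print(f"{name}: {estimate:.1f} TFLOPS")
```

This is only a back-of-envelope estimate; real chips also differ in clocks, CU count and die size.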

Apple's shift from IBM's PowerPC to Intel's X86 was mostly due to Intel's superior road map (Apple's statement). Corporations buy into road maps.

The other question is cost, which is why AMD has a road map for that as well.

This video explains why large-chip PC GPUs have a high cost and the solution for it, i.e. MCM GPUs. DX12 is a stepping stone for AMD's next change.

A large single chip like NVIDIA's 600 mm^2 GP100 is impossible at a console price (without incurring massive losses), but there are other solutions to this problem.

MCM = Multi Chip Module.

We know that 10 tflops is impossible and even if it was how much would it cost??

Consolites don't even want to spend money on headsets or external hard drives for example. When gaming on live many gamers lack these items frequently. Look at many of the consolites crying over the ps4 neo at only 400 for 3.5 tflops basically a gtx 970.

Did you watch AMD's Master Plan Part 2 video?

NEO's GPU has 4.19 TFLOPS (36 CU at 911 MHz) and is not bound by the 970's 3.5 GB.
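As a sanity check on that figure, here is a small Python sketch of the usual peak-FLOPS formula for GCN parts. It assumes 64 shader lanes per CU and 2 FLOPs per lane per clock (one fused multiply-add); the function name `gcn_peak_tflops` and the PS4 configuration of 18 CU at 800 MHz are my own inputs, not something stated in this post.

```python
def gcn_peak_tflops(compute_units, clock_mhz,
                    lanes_per_cu=64, flops_per_lane_per_clock=2):
    """Theoretical peak single-precision TFLOPS for a GCN-style GPU.

    Assumes 64 lanes per CU and one FMA (2 FLOPs) per lane per clock.
    """
    flops = (compute_units * lanes_per_cu
             * flops_per_lane_per_clock * clock_mhz * 1e6)
    return flops / 1e12


neo = gcn_peak_tflops(36, 911)   # NEO: 36 CU at 911 MHz
ps4 = gcn_peak_tflops(18, 800)   # PS4: 18 CU at 800 MHz (assumed config)
print(f"NEO: {neo:.2f} TFLOPS, PS4: {ps4:.2f} TFLOPS, ratio: {neo / ps4:.2f}X")
```

The ratio comes out around 2.3X, which matches the NEO vs PS4 figure used elsewhere in the thread.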

If you apply AMD Vega's 4X perf/watt to NEO's GPU, it's ~7.3 TFLOPS.

If you apply AMD Navi's 5X perf/watt to NEO's GPU, it's ~9.1 TFLOPS.

Both Sony and MS should be well into their 2018 game console development.

Xbox Next's 10 TFLOPS roughly follows Navi's 5X perf/watt.

I debunked your assertion that the Wii U has 160 stream processors. It's you who started to get personal.

You are completely insane if you think the Xbone 1.5 is at 10 TF. You just discredited yourself by posting this bullcrap.

The Xbone 1.5 will be weaker than the base PS4 model because Microsoft is not as smart as Cerny. Get it through your thick skull.

Microsoft already stated they don't want to do a half-gen step, and if there's any upgrade, they want a substantial one. Remember, Microsoft has a larger chip than PS4's 348 mm^2, and high capability GDDR5 is a known factor at this time. Get it through your thick skull.

Microsoft's testing of different Xbox configurations would be pointless for yet another box at Xbox One's 1.x TFLOPS power level.

AMD knows that large chips don't deliver the same "bang per buck" as smaller chips and that a solution must be found for any future consoles and mainstream PC SKUs, i.e. watch AMD's Master Plan Part 2 video. Consoles will NOT have PC's Vega 10, but Vega has a higher perf/watt than Polaris's 2.5X.

Btw, From http://arstechnica.com/gadgets/2016/03/amd-gpu-vega-navi-revealed/

There's another Vega in the form of "Vega 11".

AMD also confirmed that there will be at least two GPUs released under Vega: Vega 10 and Vega 11.

Polaris has its high-low ASICs. 1st gen FinFET design.

Vega has its high-low ASICs. 2nd gen FinFET design.

If there's a new process tech, there's a new wave of PC GPUs.

AMD usually mixes different PC GCN revisions within one model series, e.g. GCN 1.0, 1.1 and 1.2 within the same Rx-3x0 marketing series.

Unlike XBO/PS4's GPU designs, Xbox 360's GPU (SIMD based) wasn't attached to PC's Radeon HD's VLIW5/VLIW4 based designs.

What MS says and what they actually do are 2 different things.

Ex: MS says that they have great exclusives, yet their exclusives are trash.

MS will need to include the eSRAM in their Xbone 1.5 in order to enable backwards compatibility with the Xbone, so their SoC size is irrelevant, since 50+% of it will be consumed by the eSRAM.

MS will be lucky to even match the base PS4. PS4 is an engineering marvel. MS still hasn't fully reverse-engineered it yet, meaning they can't copy 100% of it in time for the Xbone 1.5 launch. It's game over. SONY wins again.

If you recall Oxide's BradW statement on eSRAM, the programmer accesses eSRAM via Xbox One's specific eSRAM APIs. Microsoft updated the eSRAM API to make it easier to use.

Xbox One's eSRAM is treated like local video memory with a 32 MB size.

Do you remember the Tiled Resources API running on an NVIDIA GPU and consuming 16 MB of VRAM?

While the DX11 layer was thrown in the bin, programmers don't have real access to the hardware on current generation consoles, i.e. the programmer has to go through the GPU driver, e.g. in the case of PS4's low level API.

With driver level programming, the programmer can create any multi-threading model, just like AMD's driver programmers did, e.g. they bolted the Mantle API onto AMD's WDDM 1.3 drivers and bypassed the DX11 layer. Xbox One programmers don't have AMD's driver access level, hence they are stuck with the DX11.X multi-threading model. It took MS's DX12 update for XBO to change the multi-threading model.

Real metal programming is the access level needed to create a GPU driver with any multi-threading model.

Furthermore, modern X86 CPUs translate CISC into RISC, and programmers don't have access to X86's internal RISC instructions, i.e. the secret sauce remains with Intel and AMD.

AMD's K5 provided programmer access to its internal RISC core instruction set, i.e. the ability to bypass the X86 decoders to gain additional performance. On PS4's Jaguar CPU, you don't get that access.

BradW has described PS4's low level API as a last-gen API with updates.

You should be a politician.

My point is that eSRAM API calls can be remapped to specific/reserved memory locations in 256-bit GDDR5-7000.

Cows have this vision that PS4 or XBO is like PS2 in terms of metal programming.

Before Don Mattrick almost destroyed the Xbox brand, Microsoft really listened to developers when considering their console's specs. I wouldn't doubt that the NextBox will be a powerhouse compared to Neo/NX, because MS can afford to take a loss on hardware, as long as it has a strong software line-up to recoup costs. I'd expect "NextBox" to have backwards compatibility with Xbox One out of the gate, and a late 2017 release at the earliest. A slim Xbox One with a price drop could help move a few more million units of their current gen box in the meantime. MS needs a strategy like this to have any chance of coming out on top early in "Gen 9", because it seems lately that the more powerful console sells better, hence PS4 > Xbox One > Wii U in both sales and specs. That's just my opinion though, E3 could be very interesting this year.

@gpuking:

I agree with this guy, 10TF is wishful thinking.

Two years ago, having 4.2 TFLOPS GCN within PS4 size box was wishful thinking and here we are with PS4 NEO which has that raw GPU power. PS4 NEO is almost half way to 10 TFLOPS.

MisterXmedia, is that you? Or one of his followers. So, we also have MrX followers here on Gamespot. God bless them.

Damn nonsense. Even if they could make a 10TF console within the next year, why limit it to having to play the same games as Xbone?

You didn't debunk anything lol. You just linked to one forum post to say that an entire thread was wrong. But hey, what more can I expect from someone who fell for an April Fool's joke a month later and then tried desperately to throw it under the rug instead of just admitting to the mistake?

You countered my assertion of 320 stream processors for the Wii U with a 160 stream processor assertion, and you dropped in an unreferenced/poorly referenced "Beyond3d" post as a source.

The Gaf tech junkies that claim it's a 160sp part think they can just look at a screenshot of a highly customized die and figure out what's on it. A gpu weaker than 360 is running deferred rendered games at 60fps, with higher res textures, more advanced lighting and on top of that nigh on all Wii U games have v-sync? Sure. Nobody but the manufacturer knows for a fact exactly what it is, but a 320sp chip just makes sense with the above being true.

The same people claimed Ps4 and Xbox 720 just couldn't have more than 2-4gb of ram, because why would you need more? Oh and NX can't have more than 8gb.

I guess when I build my Zen and polaris PC soon I won't need more than 8gb, why would anyone need more lol.

@Chozofication:

Another GAF poster compared Brazos's CU fabbed at 40 nm against Wii U's CU fabbed at 40 nm, hence debunking the 160 stream processor count.

40 SP x8 = 320

This thread delivers !! :D

Damn, Ron's got'em all crying, stomping their hooves and gnashing their teeth, good stuff mah man.

To heck with calling it Xbox Next, this bad boy needs to be called Xbox Won.... for real.lol :P

My point is that eSRAM API calls can be remapped to specific/reserved memory locations in 256-bit GDDR5-7000.

Cows have this vision that PS4 or XBO is like PS2 in terms of metal programming.

If you assume that the extra latency won't cause issues running older titles, sure.

The same people claimed Ps4 and Xbox 720 just couldn't have more than 2-4gb of ram, because why would you need more? Oh and NX can't have more than 8gb.

I guess when I build my Zen and polaris PC soon I won't need more than 8gb, why would anyone need more lol.

To be fair, PS4 originally did have 4GB but had to up it to 8GB because MS did, but MS mainly did it to make XB1 essentially a fully functional HTPC.

If you assume that the extra latency won't cause issues running older titles, sure.

Quantum Break PC's memory bandwidth bound issue is one example.

MisterXmedia, is that you? Or one of his followers. So, we also have MrX followers here on Gamespot. God bless them.

I'm consistent in applying AMD's perf/watt improvements to both Sony and MS.

Navi's 5X perf/watt applied to PS4's 1.84 TFLOPS yields 9.2 TFLOPS, which is close to 10 TFLOPS.

Polaris's 2.5X perf/watt applied to PS4's 1.84 TFLOPS yields 4.6 TFLOPS, which is close to NEO's 4.2 TFLOPS.

In general, I don't support MisterXmedia's view on XBO beating PS4.

Just mooaar poooof... it's happening, XBOX WON Incoming :P

http://www.gamespot.com/articles/gamestop-expecting-new-playstation-and-xbox-consol/1100-6439518/

Xbox Next an upgradable Xbox capable of up to 10TF. Sounds a lot like a PC to me.

Microsoft had a choice, step out or step up.

Looks like they're stepping up to me. You can probably get 10TF if you're willing to pay (and maybe wait a little longer), but I'm sure the standard Xbox Next will be a touch better than the new PS4K.

Besides 2018's Navi or 2016's Polaris, "Vega 11" could be the other choice.

@ronvalencia: An example of what? How to not make a PC game? Because anyone can agree there. If you're trying to say that it tries to treat system RAM as eSRAM, you'll need to prove that... but even then it's not relevant because I was talking about GDDR5 latency.

If MS decided to make Xbox Next, and it was a next gen console with the latest hardware, I'd be sooooo surprised. It wouldn't be cost effective, it would kill off the Xbone completely, it would be too expensive to make sales... there's just so many reasons why this won't happen.

@ronvalencia: An example of what? How to not make a PC game? Because anyone can agree there. If you're trying to say that it tries to treat system RAM as eSRAM, you'll need to prove that... but even then it's not relevant because I was talking about GDDR5 latency.

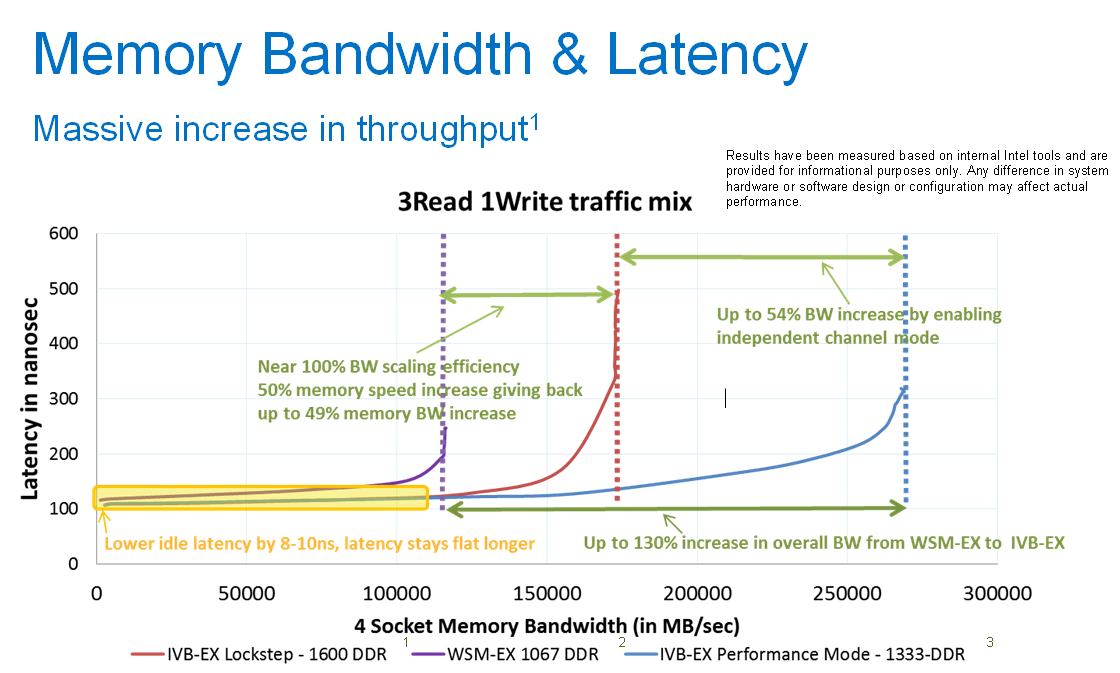

Read http://electronicdesign.com/analog/memory-wall-ending-multicore-scaling

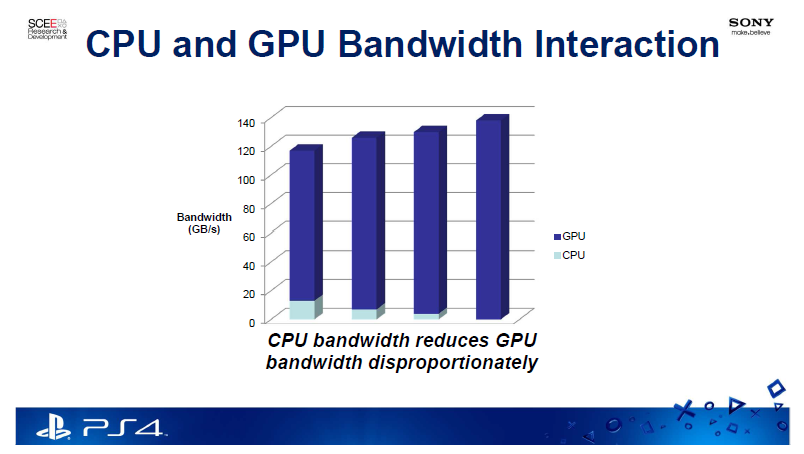

XBO's eSRAM has 156 GB/s and DDR3 has 52 GB/s effective bandwidth.

140 / 204 = 69 percent efficient (ESRAM)

52 / 68 = 76 percent efficient (DDR3)

http://www.eurogamer.net/articles/digitalfoundry-vs-the-xbox-one-architects

in real testing you get 140-150GB/s rather than the peak 204GB/s

http://www.eurogamer.net/articles/digitalfoundry-microsoft-to-unlock-more-gpu-power-for-xbox-one-developers

At our peak fill-rate of 13.65GPixels/s that adds up to 164GB/s of real bandwidth that is needed which pretty much saturates our ESRAM bandwidth

PS4's 135 GB/s (GDDR5)

135 / 176 = 77 percent efficient

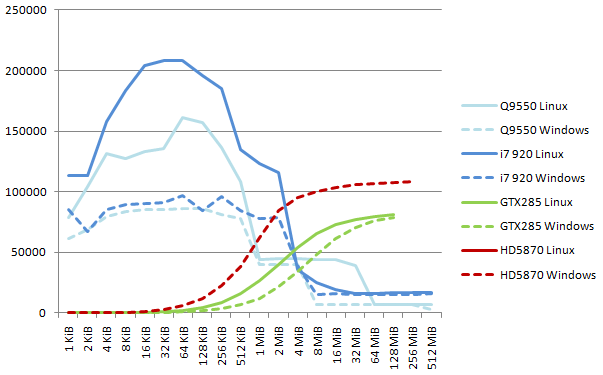

AMD Radeon HD 5870 has a theoretical 153 GB/s with a practical 108 GB/s, i.e. 70.6 percent efficient.
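The efficiency numbers here are just practical bandwidth divided by theoretical peak. A quick Python sketch, using only the GB/s pairs quoted in this post (the dictionary layout and the name `efficiency_percent` are illustrative):

```python
# (practical GB/s, theoretical peak GB/s) as quoted in this post
MEASUREMENTS = {
    "XBO eSRAM": (140, 204),
    "XBO DDR3": (52, 68),
    "PS4 GDDR5": (135, 176),
    "Radeon HD 5870": (108, 153),
}


def efficiency_percent(practical, peak):
    """Share of theoretical peak bandwidth that is actually achieved."""
    return 100.0 * practical / peak


for name, (practical, peak) in MEASUREMENTS.items():
    print(f"{name}: {efficiency_percent(practical, peak):.1f} percent")
```

Run this way, all four land in roughly the 69 to 77 percent range, which is the "pretty consistent" pattern being argued.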

AMD's memory controller efficiencies are pretty consistent, i.e. AMD uses brute force to overcome the "glass jaw" issues with their memory controllers.

Polaris has a new memory controller and still supports GDDR5, i.e. it's about time AMD addressed this "low hanging fruit".

Intel's memory controller is superior in low access latency.

-------------------------------------------------------------------------------------------------

To test the memory bound problem

Can XBO's TMU saturate memory bandwidth?

Answer: Yes.

Fury X's HBM's 512GB/s x 77 percent efficient = 394 GB/s

394 / 140= 2.8X

Fury X's 1920x1080 = 2,073,600 pixels per frame x 40 fps = 82,944,000 pixels per second

XBO's 1280x720 = 921,600 pixels per frame x 30 fps = 27,648,000 pixels per second

82,944,000 / 27,648,000 = 3.0X

Notice 2.8X is close to 3.0X, hence it's a memory wall problem.
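Here is the same comparison as a short Python sketch, so the two ratios can be recomputed. It assumes Fury X renders at 1920x1080 and 40 fps, XBO at 1280x720 and 30 fps, and that the 77 percent efficiency figure applies to HBM; the helper name `pixel_rate` is made up for illustration.

```python
def pixel_rate(width, height, fps):
    """Pixels drawn per second at a given resolution and frame rate."""
    return width * height * fps


# Effective bandwidth ratio: Fury X HBM (512 GB/s peak at an assumed
# 77 percent efficiency) vs. XBO eSRAM at 140 GB/s practical.
bandwidth_ratio = (512 * 0.77) / 140

# Pixel throughput ratio between the two versions of the game.
pixel_ratio = pixel_rate(1920, 1080, 40) / pixel_rate(1280, 720, 30)

print(f"bandwidth ratio: {bandwidth_ratio:.1f}X")
print(f"pixel ratio:     {pixel_ratio:.1f}X")
```

Two ratios being close is suggestive, not proof; it is one data point consistent with a bandwidth-bound workload.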

GDDR5 is nothing without a memory controller. AMD's memory controller is a f**king joke. As with the Bulldozer CPU, AMD did not fix their "glass jaw" issues for a long time!!!! Hopefully, they fix this problem with Polaris !!

Quantum Break's shader programs were kept simple enough to exploit XBO's memory bandwidth strength. The PC version attempted to replicate XBO's memory handling and negated the PC GPU's higher FLOPS power.

Shader programs such as SweetFX are heavy shader programs, hence they play to the PC GPU's strength.

@ronvalencia: Why can't you ever just say what you mean instead of making long-winded posts with your semi-broken English and a bunch of images? I'm not wasting time trying to decode that post to figure out which parts of it are actually relevant to the conversation and which are just you trying to prove that you're savvy. Just get to the damn point.

A computer science major is a prerequisite when dealing with computer science issues. My day job is C++ programmer for the Microsoft Windows platform.

In basic terms, Quantum Break for PC is a direct XBO port with XBO-style memory handling behavior, i.e. QB exploited XBO's memory bandwidth advantage and the PC port was forced to deal with it. DX12 will not shield you from different hardware behaviors.

The PC GPU's higher TFLOPS was gimped by the memory wall.

AMD provided fat PC GPUs with higher memory bandwidth, hence better results than NVIDIA's counterparts.

It's not that I don't understand what you're saying as much as it is that I don't understand why you're saying it. I'll ask you one question, and you'll seemingly try to answer 10 questions that I'm not asking. It's one thing to be thorough, but you just ramble and I always end up with more questions than answers when talking with you. And the worst part is that you think that you're answering what I'm asking when you don't even seem to know what I'm asking. Based on your response, you think that I was asking a general question about GDDR5 in consoles. What I was actually asking was how GDDR5 could be used in place of the XB1's eSRAM considering the fact that GDDR5 has higher latency than standard DDR3. Stick to answering that question. If I need a more in-depth explanation, I'll ask for one.

Quantum Break's direct XBO port to PC is the best example for GDDR5-equipped PC GPUs, i.e. fast enough GDDR5 can handle optimized XBO ports.

OP is even more clueless than the hermits. wow. just wow. even ignoring the sheer ludicrousness of a mid gen xbone refresh being 10 tflop, 3.8 multiplied by 5-6 = 10 right? we really need better education in this world. kids today are in such a sorry state.

If this console can't make a vanilla latte then it has lost a customer.

High expectations for this processing power, it should be able to form espresso items out of thin air.

I'm aware of the article's issue, i.e. it's a direct copy and paste.

Replace the OP's article with:

http://www.tweaktown.com/news/51972/new-xbox-5-times-more-powerful-ps4-neo-rocks-10-tflops/index.html

The "Microsoft insider" is just repeating AMD's Navi 5X perf/watt estimate, applied to a GPU of the PS4's size.

Vega's 4X perf/watt improvement could be another candidate.

http://arstechnica.com/gadgets/2016/03/amd-gpu-vega-navi-revealed/

We know Vega 10 is a large chip GPU, so what's "Vega 11"?

Another link for "Vega 10" and "Vega 11" from http://www.anandtech.com/show/10145/amd-unveils-gpu-architecture-roadmap-after-polaris-comes-vega

From https://forum.beyond3d.com/threads/amd-vega-speculation-rumors-and-discussion.57662/

Vega's (2nd gen FinFET) perf/watt improvements range from 1.5X to 2X over Polaris (1st gen FinFET).
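As a rough cross-check, here is a small sketch that divides the roadmap perf/watt multipliers quoted in this thread (Polaris 2.5X, Vega 4X, Navi 5X) by each other. It assumes all three multipliers share the same baseline, which the roadmap slides do not spell out, and the names in the code are made up for illustration.

```python
# Roadmap perf/watt multipliers as quoted in this thread.
# Assumption: all three are measured against the same baseline.
ROADMAP_PERF_PER_WATT = {"Polaris": 2.5, "Vega": 4.0, "Navi": 5.0}

def relative_gain(newer, older, table=ROADMAP_PERF_PER_WATT):
    """Perf/watt of `newer` expressed as a multiple of `older`."""
    return table[newer] / table[older]

vega_over_polaris = relative_gain("Vega", "Polaris")
navi_over_polaris = relative_gain("Navi", "Polaris")

print(f"Vega over Polaris: {vega_over_polaris:.2f}X")
print(f"Navi over Polaris: {navi_over_polaris:.2f}X")
```

Under that assumption, Vega over Polaris comes out at 1.6X, which sits inside the 1.5X to 2X range, and Navi over Polaris lands at the top of it.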

Yeah, you don't understand my question. Also, using the worst PC port of all time as the "best example" of anything is a joke.

If MS decided to make Xbox Next, and it was a next gen console with the latest hardware, I'd be sooooo surprised. It wouldn't be cost effective, it would kill off the Xbone completely, it would be too expensive to make sales... there's just so many reasons why this won't happen.

If MS chose to do nothing or, even worse, released an Xbox Next that's less powerful than the next PS4, it would have worse consequences. Their only option is to go big or go home, and if they get a PC under your TV that's upgradable in the form of Xbox Next, it would add to their already growing Windows 10 platform, and that's the true goal.

If there's anyone that can take a hit using the latest technology, it's Microsoft. They have pockets much deeper than Sony's.

I'll fucking LOL if this is true, so many cows here would look so bad.

If anyone can get their hands on next gen GPUs, and willing to sell at a loss, It would be Microsoft.

I'm already LOLing at your sheer ignorance, lol. It's alright breh, you had it tough this gen, we heard ya.

Everyone had a rough gen bruh, it was a failure.

Next generation begins holiday 2016

@techhog89: He's using Quantum Break as proof that GDDR5 can be used for eSRAM functions in rushed XB1 to PC ports, but it comes with a substantial performance hit due to the latency discrepancy that you pointed out.

Latency has an impact on effective memory bandwidth.

Low latency should have yielded higher effective memory bandwidth for XBO's eSRAM, but I don't see that here, i.e. 140 GB/s to 150 GB/s out of a 204 GB/s peak? The effective memory bandwidth of XBO's eSRAM is nowhere near the memory bandwidth efficiency of L1 cache (SRAM) or register file storage (SRAM). It shows that either the NEC-designed eSRAM is a POS or AMD's interface with the eSRAM is a POS.
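To put a number on that gap, here is a minimal sketch that uses only the figures quoted above (204 GB/s peak, 140 to 150 GB/s effective). The function name is just for illustration.

```python
# Effective vs. peak bandwidth for XBO's eSRAM, using the figures quoted above.
PEAK_GBS = 204.0                # quoted peak bandwidth
EFFECTIVE_GBS = (140.0, 150.0)  # quoted effective range

def bandwidth_efficiency(effective, peak):
    """Fraction of the peak bandwidth that is actually achieved."""
    return effective / peak

efficiencies = [bandwidth_efficiency(e, PEAK_GBS) for e in EFFECTIVE_GBS]

for gbs, eff in zip(EFFECTIVE_GBS, efficiencies):
    print(f"{gbs:.0f} GB/s of {PEAK_GBS:.0f} GB/s peak = {eff:.1%}")
```

That works out to roughly 69% to 74% of peak.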

I'd rather see that 32 MB eSRAM turned into another 14 CU block, so the total CU count would be 28 instead of 14. XBO has the larger SoC compared to PS4, but that doesn't translate into compute power.

Btw, Polaris has memory compression beyond ROPS delta compression.

In terms of effective memory bandwidth and results, Fury X's HBM delivers about 2.8X over XBO's eSRAM solution, hence 256 bit high clocked GDDR5 would be enough for XBO's Quantum Break results.
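A back-of-the-envelope sketch of that comparison: peak GDDR5 bandwidth is bus width in bytes times the per-pin data rate. The 7 and 8 Gbps data rates below are my assumptions for "high clocked" GDDR5, not figures from the posts above, and these are peak numbers, not effective ones.

```python
# Assumed per-pin data rates for "high clocked" GDDR5 (not from the thread).
ASSUMED_GDDR5_RATES_GBPS = (7.0, 8.0)
ESRAM_EFFECTIVE_GBS = (140.0, 150.0)  # quoted effective eSRAM range
FURY_X_FACTOR = 2.8                   # quoted Fury X HBM advantage

def peak_bandwidth_gbs(bus_width_bits, data_rate_gbps_per_pin):
    """Peak bandwidth in GB/s: bytes per transfer times per-pin data rate."""
    return bus_width_bits / 8 * data_rate_gbps_per_pin

gddr5_peaks = [peak_bandwidth_gbs(256, r) for r in ASSUMED_GDDR5_RATES_GBPS]
fury_x_effective = [FURY_X_FACTOR * e for e in ESRAM_EFFECTIVE_GBS]

for rate, peak in zip(ASSUMED_GDDR5_RATES_GBPS, gddr5_peaks):
    print(f"256-bit GDDR5 @ {rate:.0f} Gbps: {peak:.0f} GB/s peak")
print(f"Fury X at 2.8X eSRAM: {fury_x_effective[0]:.0f} to {fury_x_effective[1]:.0f} GB/s")
```

With those assumed rates, a 256-bit bus peaks at 224 to 256 GB/s, above the 140 to 150 GB/s effective eSRAM figure, while the 2.8X Fury X figure works out to about 392 to 420 GB/s.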

Quantum Break was designed for XBO, and the PC hardware has to follow XBO's strengths.

With around 10 TFLOPS of GCN, I don't think MS will be using Polaris and its GDDR5 solution, i.e. HBMv2 or GDDR5X must be used.
