@ronvalencia said:

NVIDIA Kepler is not AMD GCN 1.0/1.1, hence it has different ROP and memory controller behaviour, i.e. it's not an apples-to-apples comparison.

The 7950 (28 CU) was already rivalled, or only slightly outperformed, by the FirePro W8000 (28 CU) with its 256-bit bus, and both use AMD's Tahiti Pro chip. The 7950's 384-bit memory bus is useful for overclocked editions, e.g. my 7950 was a 900 MHz factory-overclocked edition, which is close to a stock 7970.
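For reference, peak memory bandwidth is just bus width (in bytes) times the per-pin data rate, so at the same memory clock a 384-bit bus has 1.5x the bandwidth of a 256-bit one. A minimal Python sketch; the 5.0 Gbps data rate is a hypothetical placeholder, not the actual memory speed of either card.

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s."""
    # Each transfer moves bus_width_bits / 8 bytes; data_rate_gbps is the per-pin rate in Gbit/s.
    return bus_width_bits / 8 * data_rate_gbps

# Hypothetical 5.0 Gbps memory on both buses, to isolate the effect of bus width.
wide = peak_bandwidth_gbs(384, 5.0)
narrow = peak_bandwidth_gbs(256, 5.0)
print(f"384-bit: {wide} GB/s, 256-bit: {narrow} GB/s, ratio {wide / narrow}")
```

This prints 240.0 GB/s vs 160.0 GB/s, a ratio of 1.5. With a 128-bit bus at an assumed 6.0 Gbps, the same formula gives the 96 GB/s figure quoted for the 7790 later in the thread.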

The FirePro W8000's consumer counterpart is the 7870 XT, which uses the Tahiti LE chip (24 CU) at higher clock speeds, i.e. a 925 MHz base clock, which rivals the non-BE (non-Boost Edition) 7950.

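GCN peak throughput = clock x CUs x 64 stream processors per CU x 2 FLOPs per clock (fused multiply-add):

7870 XT = 925 MHz x 24 CU = 2.84 TFLOPS

7950 = 800 MHz x 28 CU = 2.87 TFLOPS

7950 BE = 850 MHz (or 925 MHz boost) x 28 CU = 3.05 TFLOPS to 3.32 TFLOPS

7950 900 MHz OC edition = 900 MHz x 28 CU = 3.23 TFLOPS

7970 = 925 MHz x 32 CU = 3.79 TFLOPS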
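A quick Python check of the TFLOPS figures above. It assumes the standard GCN layout of 64 stream processors per CU, each doing one fused multiply-add (2 FLOPs) per clock; the 7970 entry assumes its reference 925 MHz / 32 CU configuration.

```python
SP_PER_CU = 64          # stream processors per GCN compute unit
FLOPS_PER_SP_CLOCK = 2  # one fused multiply-add per clock counts as 2 FLOPs

def gcn_tflops(clock_mhz: float, compute_units: int) -> float:
    """Peak single-precision TFLOPS for a GCN GPU."""
    flops_per_second = clock_mhz * 1e6 * compute_units * SP_PER_CU * FLOPS_PER_SP_CLOCK
    return flops_per_second / 1e12

# (clock in MHz, compute units) for each card in the list above
cards = {
    "7870 XT": (925, 24),
    "7950": (800, 28),
    "7950 BE (base)": (850, 28),
    "7950 BE (boost)": (925, 28),
    "7950 900 MHz OC": (900, 28),
    "7970": (925, 32),
}

for name, (mhz, cus) in cards.items():
    print(f"{name}: {gcn_tflops(mhz, cus):.2f} TFLOPS")
```

It prints 2.84, 2.87, 3.05, 3.32, 3.23 and 3.79 TFLOPS, matching the hand calculations (the 7950 BE boost figure is 3.3152 before rounding).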

The Radeon R9 285 (28 CU) with a 256-bit memory bus beats the R9 280 with its 384-bit bus and comes close to the R9 280X, because the R9 285 has improved frame buffer compression.

The Xbox One's effective ESRAM bandwidth is lower than 200 GB/s, hence that line is pointless. Microsoft has already shown its effective ROP and ESRAM memory bandwidth to be around 150 GB/s. If frame buffer tiling is used, the Xbox One's remaining issue would be its 1.3 TFLOPS of shader power, which wouldn't be a big issue for properly made first-party games, i.e. they would budget their shader workload against the hardware.

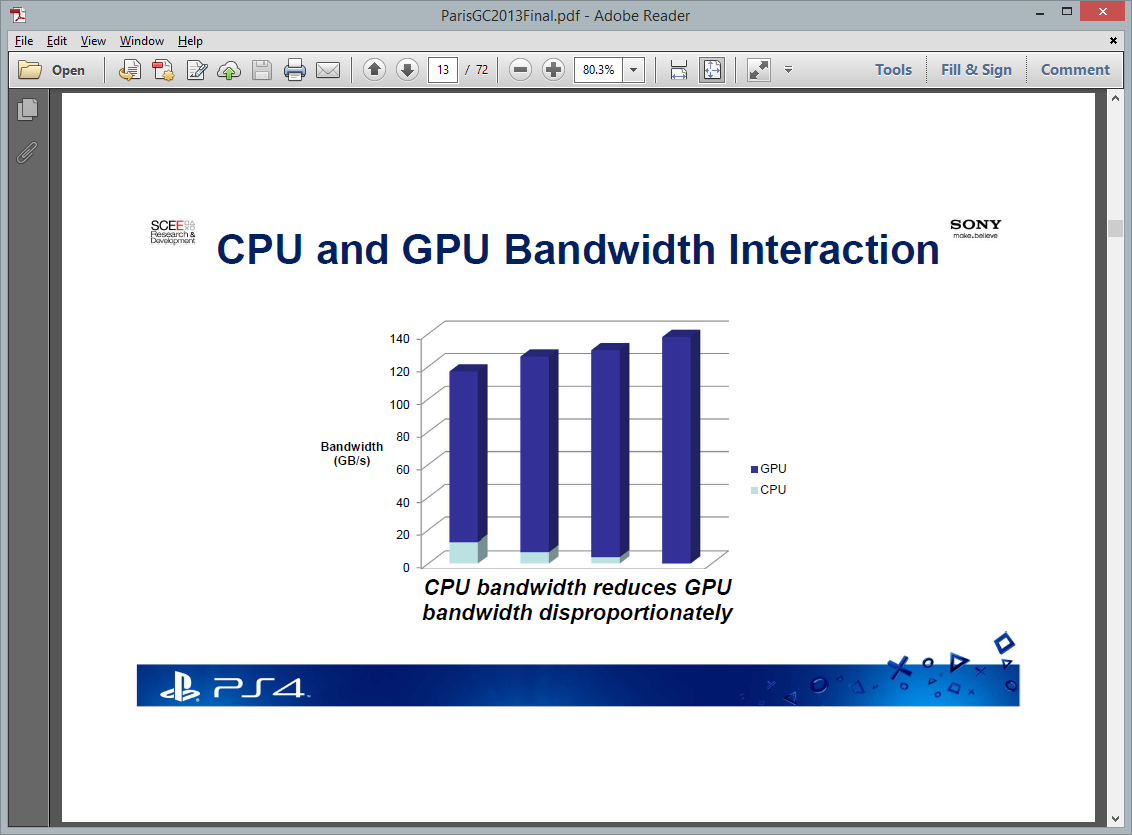

Sony hasn't removed the PS4 CPU's access to GDDR5 memory, i.e. AI and physics calculations still generate GDDR5 memory traffic before the GPU command buffer is created.

Ever since the Commodore Amiga 500/1200's integrated graphics and unified memory architecture, I have disliked unified memory architectures.

The PS3's implementation is wrong, i.e. you don't split GPU work across two memory pools with two separate, asymmetric graphics/DSP processors.

The gaming PC setup with a fat dGPU + VRAM is better, i.e. the CPU can do its AI + physics calculations in DDR3 memory without slowing down the GPU's VRAM. A transfer to the GPU side is only required once the CPU side has created the command buffer. The current game consoles haven't replicated the gaming PC setup.

The ideal games console would have:

1. A CPU with its own memory pool.

2. A GPU with its own memory pool, large enough for modern frame buffer workloads and in-flight texture storage.

3. High-speed links between the CPU and GPU. These are used for workloads that need unified memory access, i.e. the CPU can write to the GPU's memory pool or the GPU can write to the CPU's memory pool.

The above setup has the best of both worlds.
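The argument for separate pools can be expressed as a toy accounting model: count how many bytes per frame the memory behind the GPU has to serve. This is a sketch under simplifying assumptions (no caches, no contention or latency effects, and the command buffer is the only thing that crosses the link), and the per-frame sizes are made-up placeholders, not measurements from any console.

```python
def gpu_pool_traffic(cpu_bytes: int, gpu_bytes: int, cmd_buffer_bytes: int, split_pools: bool) -> int:
    """Bytes per frame that the memory pool feeding the GPU has to serve (toy model)."""
    if split_pools:
        # CPU AI/physics traffic stays in the CPU's own pool; only the finished
        # command buffer crosses the CPU-GPU link into the GPU's pool.
        return gpu_bytes + cmd_buffer_bytes
    # Unified pool: CPU AI/physics traffic competes with the GPU for the same memory.
    return cpu_bytes + gpu_bytes + cmd_buffer_bytes

# Hypothetical per-frame figures in MB, chosen only for illustration.
cpu_mb, gpu_mb, cmd_mb = 500, 2000, 10
print("split:  ", gpu_pool_traffic(cpu_mb, gpu_mb, cmd_mb, split_pools=True), "MB")
print("unified:", gpu_pool_traffic(cpu_mb, gpu_mb, cmd_mb, split_pools=False), "MB")
```

With these placeholder numbers the GPU's pool serves 2010 MB per frame in the split design versus 2510 MB in the unified one; the difference is exactly the CPU-side traffic.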

From http://wccftech.com/evidence-amd-apus-featuring-highbandwidth-stacked-memory-surfaces/

AMD's next-gen APU has both an external main memory pool and an on-chip HBM (VRAM) pool, i.e. the Xbox One done right.

It doesn't matter; bandwidth is bandwidth, and GCN cards on PC with stronger hardware than the Xbox One, like the 7790, have 96 GB/s, which is enough to outperform the Xbox One and sometimes even the PS4, as I have seen in some games.

So having more bandwidth is irrelevant; the Xbox One has underpowered hardware. It's like having a 5-lane race track, but your car is a damn Hyundai Accent running against a stronger Elantra.

All that crap about the FirePro is totally irrelevant to the argument. The fact is that having more bandwidth on the Xbox One means nothing, and that has been proven without doubt; in fact, it only went from 140 GB/s to 150 GB/s, which is not even higher than the PS4's.

Physics doesn't even need to run on the CPU on PS4; GPU compute will handle it better and faster and save CPU time.

Yeah, the PS3 was so wrong that it beat the Xbox 360 graphics-wise. I guess they knew something you didn't, but again, that is totally irrelevant.

@tymeservesfate said:

You don't have to believe anything they say... despite multiple people inside and outside of MS saying there will be an effect, some even giving detailed walkthroughs on how and why DX12 will make a difference... It's entirely your right not to believe them. Ignore this thread and say it's bullshit nonsense and self-serving PR. Go ahead... do that.

I just hope you're not a cow who blindly believed Sony was going to bring greatness, then waited a year and got almost nothing from them, and is now still blindly keeping faith in Sony to deliver "teh greatness" while saying MS is feeding people bullshit lol. That would be funny.

Despite MS's own Phil Spencer saying it will not drastically change graphics... hahahahaa

That is funny, because the PS4 has like 70+ more games than the Xbox One, so even less greatness came to the Xbox One, and it has far fewer games to play. It's so bad that your biggest release in the next 8 months is an indie named Ori, because Evolve is also on PS4.

@delta3074 said:

'If you don't know how to run it, that is your thing. The same shit was said about the Galaxy S1 and the Tegra 2 games, which people said would not work on the S1, and all you needed to run them was Chainfire tools, which is basically a software driver.'

I know what Chainfire tools is, Tormentos, and I also have a Galaxy S1 with a custom CyanogenMod Android 4.3 OS running on it (official Android for the S1 only goes up to 2.3.6).

You have to have a rooted phone or tablet to use Chainfire 3D, because it's a software layer that sits between the game and the GPU and changes the instructions sent from the game to the GPU.
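The idea of a layer that sits between the game and the GPU can be sketched as a call-interception shim that rewrites selected calls before forwarding them to the real driver. This is a generic illustration of the pattern only: the `InterceptLayer` class, the `upload_texture` call and the `FORMAT_A`/`FORMAT_B` names are hypothetical and do not describe Chainfire 3D's actual internals.

```python
from typing import Callable, Dict, List, Tuple

Call = Tuple[str, dict]

class InterceptLayer:
    """Hypothetical shim between a game and a GPU driver (illustration only)."""

    def __init__(self, driver: Callable[[str, dict], None]) -> None:
        self.driver = driver
        self.rewrites: Dict[str, Callable[[dict], Call]] = {}

    def add_rewrite(self, call_name: str, rewrite: Callable[[dict], Call]) -> None:
        # Register a function that replaces one kind of call before it reaches the driver.
        self.rewrites[call_name] = rewrite

    def submit(self, call_name: str, args: dict) -> None:
        # The game calls this instead of the driver; matching calls are rewritten first.
        if call_name in self.rewrites:
            call_name, args = self.rewrites[call_name](args)
        self.driver(call_name, args)

def swap_texture_format(args: dict) -> Call:
    # Pretend the target GPU only understands FORMAT_B textures.
    if args.get("format") == "FORMAT_A":
        return "upload_texture", {**args, "format": "FORMAT_B"}
    return "upload_texture", args

driver_log: List[Call] = []
layer = InterceptLayer(lambda name, args: driver_log.append((name, args)))
layer.add_rewrite("upload_texture", swap_texture_format)
layer.submit("upload_texture", {"format": "FORMAT_A", "id": 1})
layer.submit("draw", {"count": 3})
print(driver_log)
```

The "driver" here just records what it receives: the texture upload arrives rewritten to `FORMAT_B`, while the `draw` call passes through untouched. This is also why such a layer needs to know which GPU a game targets: the rewrite rules only make sense for a specific mismatch.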

'Piracy is rife on iOS too, where the fu** have you been? It has been for years; I have several friends who don't pay a single cent for apps on iOS.'

You need to jailbreak an iPhone or iPad to play pirated games, and certain games are deliberately gimped if your phone is jailbroken.

You don't need to root an Android phone to play pirated games; that's the difference.

Only people with jailbroken Apple products can play pirated games, while anyone with an Android tablet or phone can play pirated Android games.

Piracy is a way bigger issue on Android.

No offence, dude, but you should leave this alone. I have a ton of experience messing around with iPhones and Android phones and tablets; it's been my hobby since I got my S1 four years ago.

I am a tablet gamer these days, so I know my shit when it comes to Android and iOS. When you have actually rooted one and put a custom OS on it, then come and talk to me.

Seriously, Tormentos, this is something I know more about from personal experience; all you can do is post articles you find on the internet. Give it up, because your bare-bones description of Chainfire 3D was pathetic and showed that you don't understand how Chainfire actually works.

You need to know which GPU the game was made for before you can even think about using it. And rooting Android phones and tablets is not child's play, dude: one wrong move and you can brick the bootloader and destroy your phone or tablet. You also need a specific custom OS for each model of phone or tablet; choose the wrong one and you have a dead phone/tablet.

I would like to point out now that I do not play pirated games. I do not use Chainfire tools, because there are very few games that don't run on the Tegra 3, which my Google/Asus Nexus 7 (2012) has, and the CyanogenMod custom OS is endorsed by Google and supported by Microsoft.

http://www.stuff.tv/android/microsoft-investing-aftermarket-android-operating-system-cyanogenmod/news

Also, if you overclock the Nexus 7 to 2.0 GHz it won't last that long. I know, I have overclocked Android devices, and it's the same deal as with PCs.

Also, overclocking the CPU won't benefit you that much, as very few games need a processor faster than 1.4 GHz, which the Nexus 7 can easily handle.

It already flies; I don't need to overclock it. I WILL NOT ROOT MY NEXUS 7 to overclock it, because it is far too risky to root a Nexus 7.

Word to the wise: if you have to mess around with the bootloader to root your device, DON'T DO IT unless you are completely sure what you are doing, because once you change the bootloader you CANNOT reverse it.

Basically, rooting your phone is easy; here they even do it for you. Hell, most places that sell used phones here sell them unlocked and rooted.

Oh please, dude, millions upon millions of iPhone users have jailbroken their iPhones; my friend has a 6 and it's already jailbroken. What is this notion that only a few people do this? iOS makes more money because apps there tend to charge for things, while Android tends to rely more on freemium.

Just like Angry Birds used to cost $1 on iOS and was free on Android.

WTF....hahahahaaa

Dude, I used to have Chainfire on my SG1. I had it rooted and overclocked, just like my S2 from Sprint, the Epic 4G Touch, which was the fastest of all the S2s. On my S2 I used to run mijjz, but I ran several other ROMs too, just like on the S1.

Now I own an LG G3, but I haven't rooted it because I don't game on my phone anymore, other than strategy games like Clash of Clans and other very simple touch games; touch controls are always a mess.

I have been a member of XDA since 2010 and have rooted phones for friends as well, so yeah, I know.

Oh please, you need to be royally stupid or mess up badly to actually brick your phone, and even then there are ways to save it. Not in all cases, but there are: my older stepson bricked a Galaxy S and I revived it with Odin; it got stuck in a boot loop.

And basically, what the link you posted says is that MS, as always, is trying to fu** the competition by investing in Cyanogen and trying to split Android, which will ultimately fail. Regardless of Cyanogen running a good custom OS, it is small and irrelevant next to Google. Most phones come with Android, which is free for makers; Cyanogen is a ROM inside that Android bubble, and making an OS of its own will yield no results. This is MS, they don't help you for nothing, so the real reason here is to try to fragment Android and see if the pathetic Windows phones get a breath of air... lol

Hell, that is another market where Sony is beating MS: in the quarter ending September 30, MS sold 9.3 million phones and Sony sold 9.9 million, and the Xperia Z is one of the lesser-selling phones... lol

No, dude, you don't need to go that high; I was just showing how it can fly. My Sprint S2 is still working and is overclocked; my daughter has it.

It is far too risky for a newbie like you...

Rooting may sound like a tricky procedure, but it's really not. Thanks to an awesome root-kit made specifically for all Nexus devices (including both the 2012 and 2013 Nexus 7), the process for rooting is virtually painless.

Update: There's a new root method available, and it's the easiest one yet. No need for USB cables, computers, drivers, or toolkits. Simply download an app, run it, and you're rooted 30 seconds later. Check out our guide here; it's worked flawlessly with 2013 Nexus 7s, but currently doesn't work for 2012 editions as well. Worst comes to worst, you can always come back to this guide, as that process does not erase any data.

http://nexus7.wonderhowto.com/how-to/root-your-nexus-7-tablet-running-android-4-4-kitkat-windows-guide-0150849/

Newbie...

I don't play on tablets; I don't like touch controls. Although I could use a controller, I don't really feel like doing that.
