I like the Face-Shot in the 8th picture.

I don't really care for the Wii U, and I don't really care how it matches up to The Witcher and such. Seems irrelevant.

[QUOTE="04dcarraher"] Yep, at best expect a downclocked 7850. It's also been confirmed that the next Xbox isn't even using AMD's next chip line, Sea Islands, but Southern Islands. So you won't see a 7870 or better in the console at all, because of power and cooling requirements.

loosingENDS

Wild, silly rumors are "confirmation" now?

So a GTX 690 is going to be ancient tech, being only a year and a half old by the time the next Xbox comes out... The GTX 690 is basically two downclocked GTX 680s in SLI, with a 300W TDP, still DirectX 11 based, with 2GB of memory per GPU = ancient :lol: . You're hilarious. The next Xbox has been finalized and has been in production for a while now, i.e. the reports of low yield rates. So if you had common sense, you would know the GPU being used in the next Xbox is beyond ancient compared to any top-tier GPU from last year.

04dcarraher

Do you realize that video cards that were 1.5 years old at the Xbox 360's release date were pure crap compared to Xenos?

Even 2005 cards like 7800 GTX SLI are crap compared to Xenos, which means the Xbox 720 can have a GPU vastly better than the top cards of 2013.

I would not even put the ancient GTX 690 in the discussion, TBH. It would be like talking about 2004 video cards when Xenos was so much better than anything in 2005.

7800 GTX would destroy Xenos, and while technically the GTX 690 would be an 'old' card, it will be at least 3-4x faster than the GPU that will be in the next Xbox.

mrfrosty151986

I had a 7800GTX and couldn't play Mirror's Edge above 800x600...

Denial, eh? Multiple sources report different production "rumors", from this summer through this fall's reports of low yield rates, which means it's in production. You're goofy. MS spent a bucket load on a new standard that was on the horizon for GPUs. While they did spend the cash for the unified architecture, the performance and memory restrictions did not allow the 360 to be top tier. Now this time around there is no new architecture coming out, plus there's the fact that GPU power and cooling requirements for top-tier GPUs have tripled since the release of the 360. Also, for the early dev kits, the reason they were behind on the GPU front is that it took AMD more than a year to release a PC GPU based on a unified architecture, while Nvidia released a unified-architecture GPU one year after the 360 that was 3x faster. Also, you don't need a dev kit with the same GPU power as the console to code the game; having a GPU that's equal to or faster than the console GPU allows emulation, and the bullshots that you love to see.

[QUOTE="04dcarraher"][QUOTE="loosingENDS"]

Rumors are not always true, you know. The dev kits are in production.

It is rather different, since the 360 had two far weaker dev kits produced before the final hardware.

These dev kits (the R800 one, I think) were not even close to Xenos.

Inconsistancy

7800GTX: total system draw 266W (VGA only: 120W)

GTX 680: total system draw 271W (VGA only: 168W avg, 188W peak)

Yeah, 3x increase.

A 7800GTX (120W) has about the same power draw as a 7870 (120W) (hey look, they're nearly the same number!)

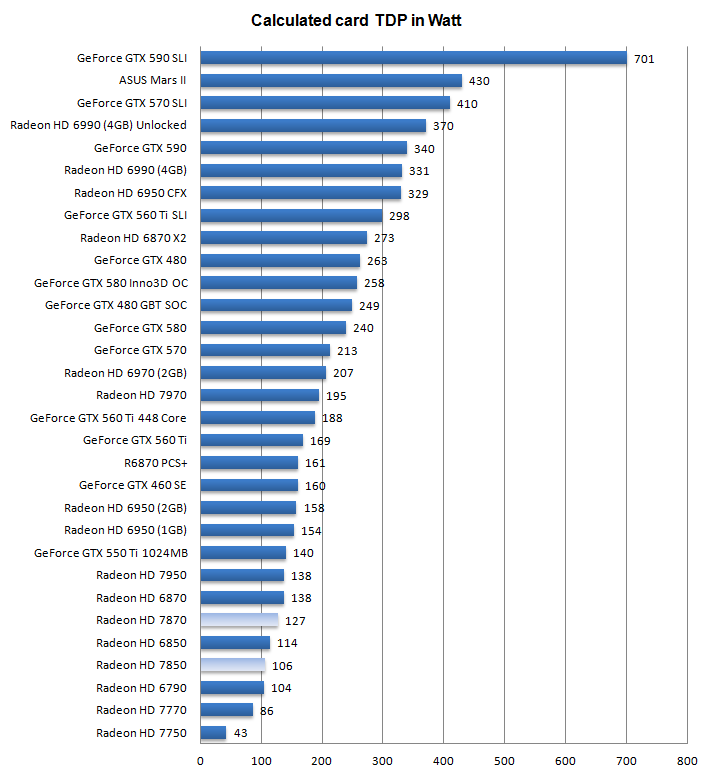

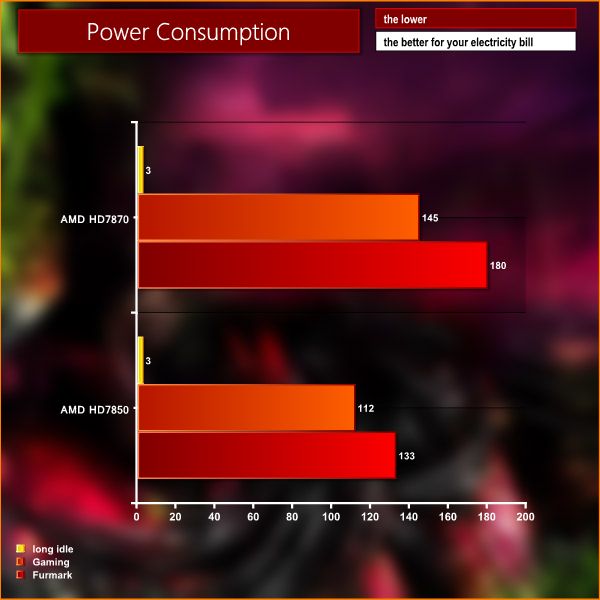

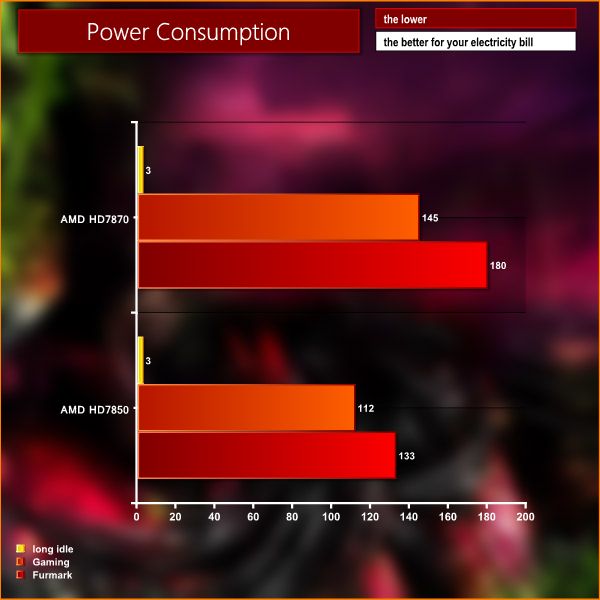
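For anyone checking the math, here is a minimal Python sketch that just divides the figures quoted above. It uses only the numbers in this post; the constant and function names are made up for illustration.

```python
# Measured draw figures quoted above (watts).
GTX7800_CARD = 120       # 7800GTX, VGA only
GTX680_CARD_AVG = 168    # GTX 680, VGA only, average
GTX680_CARD_PEAK = 188   # GTX 680, VGA only, peak
GTX7800_SYSTEM = 266     # total system draw with the 7800GTX
GTX680_SYSTEM = 271      # total system draw with the GTX 680


def ratio(new: float, old: float) -> float:
    """How many times larger `new` is than `old`, rounded to 2 decimals."""
    return round(new / old, 2)


card_avg = ratio(GTX680_CARD_AVG, GTX7800_CARD)
card_peak = ratio(GTX680_CARD_PEAK, GTX7800_CARD)
system = ratio(GTX680_SYSTEM, GTX7800_SYSTEM)

print(f"card avg: {card_avg}x, card peak: {card_peak}x, system: {system}x")
```

On these figures the card-only jump is roughly 1.4x (average) to 1.6x (peak), and total system draw barely changes.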

Max TDP of 7870 is 175w, while 7850 has a TDP of 130w and GTX 680 has a TDP of 195w. The 360's Xenos gpu had a TDP of around 90w. then the RSX had about 85w TDP both at 90nm. And since a 7870 at full load can use 175w its basically has 2x the tdp of the Xenos gpu, and the 7950 has a TDP of 200w and 7970 has a TDP of 250w. So yes top tier gpu's need nearly 3x the power and cooling when fully used compared to the console gpu's. And if are talking about gpu's like 6990, or GTX 690 they are over 3x the requirements.2005 gpu's cooling

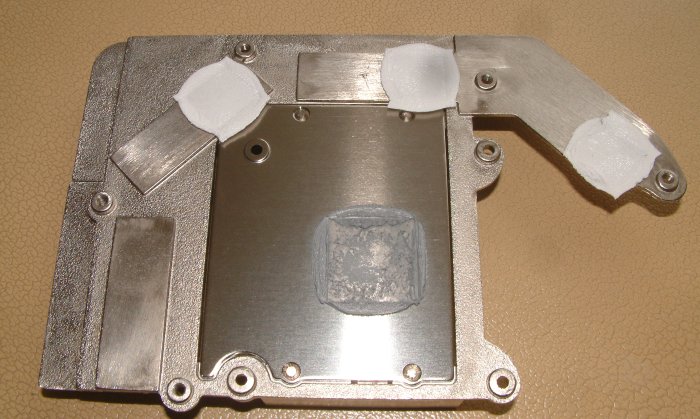

to 2012's gpu cooling
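A rough Python sketch of the multiples being argued about, dividing each card's quoted TDP by the ~90W Xenos estimate from the post above. The GTX 690's 300W is the figure given earlier in the thread; the dictionary and names are illustrative only, and TDP here is the rated figure, not measured draw.

```python
# Rated TDPs quoted in the thread (watts). Xenos ~90W is the post's estimate.
XENOS_TDP = 90

tdp = {
    "HD 7850": 130,
    "HD 7870": 175,
    "GTX 680": 195,
    "HD 7950": 200,
    "HD 7970": 250,
    "GTX 690 (dual GPU)": 300,
}

# Multiple of the Xenos budget for each card, rounded to 2 decimals.
multiples = {name: round(watts / XENOS_TDP, 2) for name, watts in tdp.items()}

for name, m in multiples.items():
    print(f"{name:>20}: {m}x Xenos")
```

On these numbers the 7870 lands just under 2x, the 7970 near 2.8x, and only the dual-GPU GTX 690 goes past 3x.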

I had a 7800GTX and couldn't play Mirror's Edge above 800x600...

Stop comparing the way a console utilises GPUs to the way PCs do... you just make yourself look stupid.

[QUOTE="mrfrosty151986"]7800 GTX would destroy Xenos, and while technically the GTX 690 would be an 'old' card, it will be at least 3-4x faster than the GPU that will be in the next Xbox.

Inconsistancy

Man, I get it for the new guys; they don't know Loosey. But you guys who have been around a while should feel ashamed. He's making a fool out of every single one of you.

sandbox3d

The funny thing is I'm new, and I already said that he did a good job of rustling people's jimmies. :lol:

Still, this was a fun thread for me.

[QUOTE="loosingENDS"][QUOTE="04dcarraher"] Nope, it's already in production, so it's 2011/2012 mid-range tech.

04dcarraher

Rumors are not always true, you know. The dev kits are in production.

It is rather different, since the 360 had two far weaker dev kits produced before the final hardware.

These dev kits (the R800 one, I think) were not even close to Xenos.

Denial, eh? Multiple sources report different production "rumors", from this summer through this fall's reports of low yield rates, which means it's in production. You're goofy. MS spent a bucket load on a new standard that was on the horizon for GPUs. While they did spend the cash for the unified architecture, the performance and memory restrictions did not allow the 360 to be top tier. Now this time around there is no new architecture coming out, plus there's the fact that GPU power and cooling requirements for top-tier GPUs have tripled since the release of the 360. Also, for the early dev kits, the reason they were behind on the GPU front is that it took AMD more than a year to release a PC GPU based on a unified architecture, while Nvidia released a unified-architecture GPU one year after the 360 that was 3x faster. Also, you don't need a dev kit with the same GPU power as the console to code the game; having a GPU that's equal to or faster than the console GPU allows emulation, and the bullshots that you love to see.

AMD didn't build a 1 scalar + SIMD4 based GPU for the PC; instead, AMD designed a VLIW5-based GPU.

AMD returned to a scalar + SIMD based GPU with GCN (Graphics Core Next).

[QUOTE="Inconsistancy"]I had a 7800GTX and couldn't play Mirror's Edge above 800x600...

Stop comparing the way a console utilises GPUs to the way PCs do... you just make yourself look stupid.

[QUOTE="mrfrosty151986"]7800 GTX would destroy Xenos, and while technically the GTX 690 would be an 'old' card, it will be at least 3-4x faster than the GPU that will be in the next Xbox.

mrfrosty151986

He forgets consoles have specially tweaked settings.

Also, the 7800GTX is technically a lot faster spec-wise, but it lacks unified shaders, and Mirror's Edge is built on Unreal Engine 3, which makes it a shader-intensive game.

He might also have had a weak CPU, since he never mentioned what it was.

If you compared the 7800GTX and the consoles in non-shader-intensive games, the 7800GTX would roundhouse kick the consoles.

The 8600 GT is basically a 7600 GT with unified shaders, and it performs equal to or better than the consoles.

This next generation doesn't have anything special like unified shaders.

[QUOTE="Inconsistancy"]I had a 7800GTX and couldn't play Mirror's Edge above 800x600...

Stop comparing the way a console utilises GPUs to the way PCs do... you just make yourself look stupid.

Pot calling the kettle black? "7800 GTX would destroy Xenos"

[QUOTE="mrfrosty151986"]7800 GTX would destroy Xenos, and while technically the GTX 690 would be an 'old' card, it will be at least 3-4x faster than the GPU that will be in the next Xbox.

mrfrosty151986

[QUOTE="Inconsistancy"]

[QUOTE="04dcarraher"] Denial, eh? Multiple sources report different production "rumors", from this summer through this fall's reports of low yield rates, which means it's in production. You're goofy. MS spent a bucket load on a new standard that was on the horizon for GPUs. While they did spend the cash for the unified architecture, the performance and memory restrictions did not allow the 360 to be top tier. Now this time around there is no new architecture coming out, plus there's the fact that GPU power and cooling requirements for top-tier GPUs have tripled since the release of the 360. Also, for the early dev kits, the reason they were behind on the GPU front is that it took AMD more than a year to release a PC GPU based on a unified architecture, while Nvidia released a unified-architecture GPU one year after the 360 that was 3x faster. Also, you don't need a dev kit with the same GPU power as the console to code the game; having a GPU that's equal to or faster than the console GPU allows emulation, and the bullshots that you love to see.

04dcarraher

7800GTX: total system draw 266W (VGA only: 120W)

GTX 680: total system draw 271W (VGA only: 168W avg, 188W peak)

Yeah, 3x increase.

A 7800GTX (120W) has about the same power draw as a 7870 (120W) (hey look, they're nearly the same number!)

The max TDP of the 7870 is 175W, while the 7850 has a TDP of 130W and the GTX 680 has a TDP of 195W. The 360's Xenos GPU had a TDP of around 90W, and the RSX about 85W, both at 90nm. Since a 7870 at full load can use 175W, it has basically 2x the TDP of the Xenos GPU, and the 7950 has a TDP of 200W and the 7970 a TDP of 250W. So yes, top-tier GPUs need nearly 3x the power and cooling when fully used compared to the console GPUs. And if we are talking about GPUs like the 6990 or GTX 690, they are over 3x the requirements.

Max TDP != real world.

Real world (i.e., a video game) puts the 7870 at 108W avg / 118W peak, not its max of 175W.

http://www.tomshardware.com/reviews/geforce-gtx-670-test-review,3217-15.html

Real world (in this case, a 3D benchmark) put the X1800 XT at a peak of 112.2W, and the 7800 GTX 512 at 94.7-107.8W (couldn't find the average; max 128W).

http://www.xbitlabs.com/articles/graphics/display/geforce7800gtx512_5.html

"and the fact that GPU power and cooling requirements for top-tier GPUs have tripled since the release of the 360"

You said top-tier GPUs in your post, not the consoles' GPUs; then you go on in your response to compare them directly to the consoles as if they were the "top tier" (they were underclocked). And again, max and real-world consumption are different.

And as for this last bit, no. The 7990/690s are TWO top-tier GPUs, not one.
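The two points above can be put into numbers with a short Python sketch: measured in-game draw as a share of the 7870's rated 175W, and the GTX 690's board TDP split across its two GPUs. All inputs are figures quoted in the thread; the even per-GPU split is a simplifying assumption (board power also covers memory and VRMs), and the names are illustrative.

```python
# Figures quoted in the thread (watts).
HD7870_RATED = 175       # rated/typical board power
HD7870_GAME_AVG = 108    # measured in a game (Tom's Hardware link)
HD7870_GAME_PEAK = 118

GTX690_BOARD_TDP = 300   # from earlier in the thread
GTX690_GPU_COUNT = 2     # "TWO top tier gpu's, not 1"


def share_of_rating(measured: float, rated: float) -> float:
    """Measured draw as a percentage of the rated figure, 1 decimal place."""
    return round(100 * measured / rated, 1)


avg_pct = share_of_rating(HD7870_GAME_AVG, HD7870_RATED)
peak_pct = share_of_rating(HD7870_GAME_PEAK, HD7870_RATED)
# Assumes the board budget splits evenly between the two GPUs.
per_gpu = GTX690_BOARD_TDP / GTX690_GPU_COUNT

print(f"7870 in-game: {avg_pct}% avg, {peak_pct}% peak of rating")
print(f"GTX 690 per GPU: {per_gpu}W")
```

So the game measurements sit at roughly 62-67% of the rated figure, and each GPU on a 300W dual card works out to about 150W under the even-split assumption.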

How about some Wii U BO2 comparisons? I think the Wii U version looks the best out of all of them.

Don't pick a visually dull-looking Wii U game, compare it to a visually stunning 360/PS3 game, and say "wow, look at those graphics".

If you were going to do that, why not pick a game that was first released on the current-gen consoles and then compare it to ZombiU's graphics?

The max TDP of the 7870 is 175W, while the 7850 has a TDP of 130W and the GTX 680 has a TDP of 195W. The 360's Xenos GPU had a TDP of around 90W, and the RSX about 85W, both at 90nm. Since a 7870 at full load can use 175W, it has basically 2x the TDP of the Xenos GPU, and the 7950 has a TDP of 200W and the 7970 a TDP of 250W. So yes, top-tier GPUs need nearly 3x the power and cooling when fully used compared to the console GPUs. And if we are talking about GPUs like the 6990 or GTX 690, they are over 3x the requirements.

[QUOTE="04dcarraher"]

[QUOTE="Inconsistancy"]

7800GTX: total system draw 266W (VGA only: 120W)

GTX 680: total system draw 271W (VGA only: 168W avg, 188W peak)

Yeah, 3x increase.

A 7800GTX (120W) has about the same power draw as a 7870 (120W) (hey look, they're nearly the same number!)

Inconsistancy

Max TDP != real world.

Real world (i.e., a video game) puts the 7870 at 108W avg / 118W peak, not its max of 175W.

http://www.tomshardware.com/reviews/geforce-gtx-670-test-review,3217-15.html

Real world (in this case, a 3D benchmark) put the X1800 XT at a peak of 112.2W, and the 7800 GTX 512 at 94.7-107.8W (couldn't find the average; max 128W).

http://www.xbitlabs.com/articles/graphics/display/geforce7800gtx512_5.html

"and the fact that GPU power and cooling requirements for top-tier GPUs have tripled since the release of the 360"

You said top-tier GPUs in your post, not the consoles' GPUs; then you go on in your response to compare them directly to the consoles as if they were the "top tier" (they were underclocked). And again, max and real-world consumption are different.

And as for this last bit, no. The 7990/690s are TWO top-tier GPUs, not one.

The 95-107W figure is for the 512MB model and OC models. The 256MB models, which is what the consoles have, are at 80W. Besides, both the 360 and PS3 use cut-down versions of those, so I highly doubt they use more than 75-80W, and there is still a substantial difference between them and the 7870. Also, look at the size of the card and cooling system on a 7870 versus a 7800 GTX, and imagine a 7870 in a box as small as a 360 with only one small exhaust fan (a 7800 GTX with lower power consumption, plus the RROD: remind you of anything?). Not sure MS will go that way again.

The max TDP of the 7870 is 175W, while the 7850 has a TDP of 130W and the GTX 680 has a TDP of 195W. The 360's Xenos GPU had a TDP of around 90W, and the RSX about 85W, both at 90nm. Since a 7870 at full load can use 175W, it has basically 2x the TDP of the Xenos GPU, and the 7950 has a TDP of 200W and the 7970 a TDP of 250W. So yes, top-tier GPUs need nearly 3x the power and cooling when fully used compared to the console GPUs. And if we are talking about GPUs like the 6990 or GTX 690, they are over 3x the requirements.

[QUOTE="04dcarraher"]

[QUOTE="Inconsistancy"]

7800GTX: total system draw 266W (VGA only: 120W)

GTX 680: total system draw 271W (VGA only: 168W avg, 188W peak)

Yeah, 3x increase.

A 7800GTX (120W) has about the same power draw as a 7870 (120W) (hey look, they're nearly the same number!)

Inconsistancy

Max TDP != real world.

Real world (i.e., a video game) puts the 7870 at 108W avg / 118W peak, not its max of 175W.

http://www.tomshardware.com/reviews/geforce-gtx-670-test-review,3217-15.html

Real world (in this case, a 3D benchmark) put the X1800 XT at a peak of 112.2W, and the 7800 GTX 512 at 94.7-107.8W (couldn't find the average; max 128W).

http://www.xbitlabs.com/articles/graphics/display/geforce7800gtx512_5.html

"and the fact that GPU power and cooling requirements for top-tier GPUs have tripled since the release of the 360"

You said top-tier GPUs in your post, not the consoles' GPUs; then you go on in your response to compare them directly to the consoles as if they were the "top tier" (they were underclocked). And again, max and real-world consumption are different.

And as for this last bit, no. The 7990/690s are TWO top-tier GPUs, not one.

Lol, you really are living up to your name, aren't you... You cannot buy a normal "old" 7870 any more; they are all GHz Editions, which do have an average TDP of 175W. AMD clocks them at 1,000MHz, hence the GHz Edition suffix, and pairs the GPU with 2GB of GDDR5 memory set to operate at 1200MHz (for an effective peak transfer rate of 4800 MT/s). The card has a 190W power envelope, but AMD says "typical" power consumption is 175W. Then you're comparing PC GPUs vs. stripped-down console GPUs... not even close. The Xenos only had around a 90W TDP, while the RSX had ~85W.

You're dense, assuming that I don't know the GTX 690, 6990, GTX 295, etc. are dual-GPU single cards :roll:

Also, you need to understand that max TDP means a full 100% load all the time. A "normal" 7870 has a max TDP of 175W. If the card always stays below the 175W threshold, or even a 150W threshold, then why does it have two 6-pin PCI-e connectors, for a max of 225W available together with the PCI-e slot? Meanwhile, a 7850 has a 130W TDP and only one PCI-e connector, so it can only get 150W total, including the slot. Does that mean they will always reach that load? No, but since console GPUs tend to be fully used, you're not going to see fluctuating usage like on PC, with typical loads that stay well under the TDP limit.
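The connector argument above is straightforward arithmetic. A minimal Python sketch, assuming the usual PCI-e limits (75W from an x16 slot, 75W per 6-pin, 150W per 8-pin connector), reproduces the 225W and 150W figures in the post; the same sketch checks the 4800 MT/s figure, assuming GDDR5 moves four transfers per quoted 1200MHz memory clock. Function names are illustrative.

```python
# Power available to a PCI-e graphics card, assuming the usual per-source
# limits: 75W from an x16 slot, 75W per 6-pin and 150W per 8-pin connector.
SLOT_W = 75
CONNECTOR_W = {"6-pin": 75, "8-pin": 150}


def board_power_budget(connectors: list[str]) -> int:
    """Slot power plus the rated power of each auxiliary connector."""
    return SLOT_W + sum(CONNECTOR_W[c] for c in connectors)


def gddr5_effective_mts(memory_clock_mhz: int) -> int:
    """Effective rate assuming 4 transfers per quoted GDDR5 memory clock."""
    return memory_clock_mhz * 4


hd7870_budget = board_power_budget(["6-pin", "6-pin"])
hd7850_budget = board_power_budget(["6-pin"])
hd7870_mem = gddr5_effective_mts(1200)

print(f"7870: {hd7870_budget}W available, 7850: {hd7850_budget}W available")
print(f"7870 GHz memory: {hd7870_mem} MT/s")
```

These are ceilings on what the board is allowed to draw, not measurements of what it actually draws.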

I had a 7800GTX and couldn't play Mirror's Edge above 800x600...

[QUOTE="mrfrosty151986"]7800 GTX would destroy Xenos, and while technically the GTX 690 would be an 'old' card, it will be at least 3-4x faster than the GPU that will be in the next Xbox.

Inconsistancy

The 2006/2007 PC doesn't have CELL to fix GeForce 7's aging design.

From forum.beyond3d.com/showthread.php?t=57736&page=5

------------------------

"I could go on for pages listing the types of things the spu's are used for to make up for the machines aging gpu, which may be 7 series NVidia but that's basically a tweaked 6 series NVidia for the most part. But I'll just type a few off the top of my head:"

1) Two ppu/vmx units: There are three ppu/vmx units on the 360, and just one on the PS3. So any load on the 360's remaining two ppu/vmx units must be moved to spu.

2) Vertex culling: You can look back a few years at my first post talking about this, but it's common knowledge now that you need to move as much vertex load as possible to spu otherwise it won't keep pace with the 360.

3) Vertex texture sampling: You can texture sample in vertex shaders on 360 just fine, but it's unusably slow on PS3. Most multi platform games simply won't use this feature on 360 to make keeping parity easier, but if a dev does make use of it then you will have no choice but to move all such functionality to spu.

4) Shader patching: Changing variables in shader programs is cake on the 360. Not so on the PS3 because they are embedded into the shader programs. So you have to use spu's to patch your shader programs.

5) Branching: You never want a lot of branching in general, but when you do really need it the 360 handles it fine, PS3 does not. If you are stuck needing branching in shaders then you will want to move all such functionality to spu.

6) Shader inputs: You can pass plenty of inputs to shaders on 360, but do it on PS3 and your game will grind to a halt. You will want to move all such functionality to spu to minimize the amount of inputs needed on the shader programs.

7) MSAA alternatives: MSAA runs full speed on 360 gpu needing just cpu tiling calculations. MSAA on PS3 gpu is very slow. You will want to move MSAA to spu as soon as you can.

8) Post processing: 360 is unified architecture meaning post process steps can often be slotted into gpu idle time. This is not as easily doable on PS3, so you will want to move as much post process to spu as possible.

9) Load balancing: 360 gpu load balances itself just fine since it's unified. If the load on a given frame shifts to heavy vertex or heavy pixel load then you don't care. Not so on PS3 where such load shifts will cause frame drops. You will want to shift as much load as possible to spu to minimize your peak load on the gpu.

10) Half floats: You can use full floats just fine on the 360 gpu. On the PS3 gpu they cause performance slowdowns. If you really need/have to use shaders with many full floats then you will want to move such functionality over to the spu's.

11) Shader array indexing: You can index into arrays in shaders on the 360 gpu no problem. You can't do that on PS3. If you absolutely need this functionality then you will have to either rework your shaders or move it all to spu.

Etc, etc, etc...

Note that the Radeon X1900 aged better than GeForce 7.
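Point 9 (load balancing) is the easiest one to put numbers on. Below is a toy Python model, not a simulation of RSX or Xenos: the unit counts and workloads are made up, work is assumed to divide perfectly across units, and everything outside the shader stages is ignored. It compares a fixed 8 vertex + 24 pixel split against 32 unified units on a vertex-heavy frame.

```python
# Toy model: fixed vertex/pixel split vs. a unified shader pool.
# All unit counts and workloads are illustrative, not real GPU specs.


def fixed_frame_time(vertex_work: float, pixel_work: float,
                     vertex_units: int, pixel_units: int) -> float:
    """Dedicated units: the slower of the two stages sets the frame time."""
    return max(vertex_work / vertex_units, pixel_work / pixel_units)


def unified_frame_time(vertex_work: float, pixel_work: float,
                       units: int) -> float:
    """Unified units: any unit can take either kind of work."""
    return (vertex_work + pixel_work) / units


def utilization(total_work: float, units: int, frame_time: float) -> float:
    """Fraction of available unit-time actually spent doing work."""
    return total_work / (units * frame_time)


# Same 32 units either way: 8 vertex + 24 pixel versus 32 unified.
V, P = 16.0, 24.0   # a vertex-heavy frame, in hypothetical work units
fixed_t = fixed_frame_time(V, P, 8, 24)
unified_t = unified_frame_time(V, P, 32)

fixed_util = utilization(V + P, 32, fixed_t)
unified_util = utilization(V + P, 32, unified_t)

print(f"fixed: time {fixed_t}, utilization {fixed_util:.1%}")
print(f"unified: time {unified_t}, utilization {unified_util:.1%}")
```

In this made-up case the fixed split leaves its pixel units idle half the frame (62.5% overall utilization), while the unified pool finishes in 1.25 time units instead of 2. A frame whose load happens to match the 8:24 ratio would show no difference, which is why the gap depends so much on the workload.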

[QUOTE="Inconsistancy"]

[QUOTE="04dcarraher"] The max TDP of the 7870 is 175W, while the 7850 has a TDP of 130W and the GTX 680 has a TDP of 195W. The 360's Xenos GPU had a TDP of around 90W, and the RSX about 85W, both at 90nm. Since a 7870 at full load can use 175W, it has basically 2x the TDP of the Xenos GPU, and the 7950 has a TDP of 200W and the 7970 a TDP of 250W. So yes, top-tier GPUs need nearly 3x the power and cooling when fully used compared to the console GPUs. And if we are talking about GPUs like the 6990 or GTX 690, they are over 3x the requirements.

04dcarraher

Max TDP != real world.

Real world (i.e., a video game) puts the 7870 at 108W avg / 118W peak, not its max of 175W.

http://www.tomshardware.com/reviews/geforce-gtx-670-test-review,3217-15.html

Real world (in this case, a 3D benchmark) put the X1800 XT at a peak of 112.2W, and the 7800 GTX 512 at 94.7-107.8W (couldn't find the average; max 128W).

http://www.xbitlabs.com/articles/graphics/display/geforce7800gtx512_5.html

"and the fact that GPU power and cooling requirements for top-tier GPUs have tripled since the release of the 360"

You said top-tier GPUs in your post, not the consoles' GPUs; then you go on in your response to compare them directly to the consoles as if they were the "top tier" (they were underclocked). And again, max and real-world consumption are different.

And as for this last bit, no. The 7990/690s are TWO top-tier GPUs, not one.

Lol, you really are living up to your name, aren't you... You cannot buy a normal "old" 7870 any more; they are all GHz Editions, which do have an average TDP of 175W. AMD clocks them at 1,000MHz, hence the GHz Edition suffix, and pairs the GPU with 2GB of GDDR5 memory set to operate at 1200MHz (for an effective peak transfer rate of 4800 MT/s). The card has a 190W power envelope, but AMD says "typical" power consumption is 175W. Then you're comparing PC GPUs vs. stripped-down console GPUs... not even close. The Xenos only had around a 90W TDP, while the RSX had ~85W.

You're dense, assuming that I don't know the GTX 690, 6990, GTX 295, etc. are dual-GPU single cards :roll:

Also, you need to understand that max TDP means a full 100% load all the time. A "normal" 7870 has a max TDP of 175W, while a 7850 has 130W. Does that mean they will always reach that load? No, but since console GPUs tend to be fully used, you're not going to see fluctuating usage like on PC, with typical loads that stay well under the TDP limit.

7870 GHz Edition's TDP: http://www.guru3d.com/articles_pages/amd_radeon_hd_7850_and_7870_review,7.html

AMD designed extra TDP headroom for overclocking.

[QUOTE="mrfrosty151986"][QUOTE="Inconsistancy"] I had a 7800GTX and couldn't play Mirror's Edge above 800x600...

Inconsistancy

Stop comparing the way a console utilises GPUs to the way PCs do... you just make yourself look stupid.

Pot calling the kettle black? "7800 GTX would destroy Xenos"

Depends on the workload, e.g. Xenos would destroy the 7800 GTX on 32-bit compute and shader branching.

7800 GTX would destroy Xenos, and while technically the GTX 690 would be an 'old' card, it will be at least 3-4x faster than the GPU that will be in the next Xbox.

mrfrosty151986

Outside of NVIDIA TWIMTBP DX9c titles, Xenos would destroy the NVIDIA 7800 GTX.

The NVIDIA 7800 GTX doesn't fully accelerate Direct3D 9c, since it has issues with 32-bit compute.

Lol, you really are living up to your name, aren't you... You cannot buy a normal "old" 7870 any more; they are all GHz Editions, which do have an average TDP of 175W. AMD clocks them at 1,000MHz, hence the GHz Edition suffix, and pairs the GPU with 2GB of GDDR5 memory set to operate at 1200MHz (for an effective peak transfer rate of 4800 MT/s). The card has a 190W power envelope, but AMD says "typical" power consumption is 175W. Then you're comparing PC GPUs vs. stripped-down console GPUs... not even close. The Xenos only had around a 90W TDP, while the RSX had ~85W.

[QUOTE="04dcarraher"]

[QUOTE="Inconsistancy"]

Max TDP != real world.

Real world (i.e., a video game) puts the 7870 at 108W avg / 118W peak, not its max of 175W.

http://www.tomshardware.com/reviews/geforce-gtx-670-test-review,3217-15.html

Real world (in this case, a 3D benchmark) put the X1800 XT at a peak of 112.2W, and the 7800 GTX 512 at 94.7-107.8W (couldn't find the average; max 128W).

http://www.xbitlabs.com/articles/graphics/display/geforce7800gtx512_5.html

"and the fact that GPU power and cooling requirements for top-tier GPUs have tripled since the release of the 360"

You said top-tier GPUs in your post, not the consoles' GPUs; then you go on in your response to compare them directly to the consoles as if they were the "top tier" (they were underclocked). And again, max and real-world consumption are different.

And as for this last bit, no. The 7990/690s are TWO top-tier GPUs, not one.

ronvalencia

You're dense, assuming that I don't know the GTX 690, 6990, GTX 295, etc. are dual-GPU single cards :roll:

Also, you need to understand that max TDP means a full 100% load all the time. A "normal" 7870 has a max TDP of 175W, while a 7850 has 130W. Does that mean they will always reach that load? No, but since console GPUs tend to be fully used, you're not going to see fluctuating usage like on PC, with typical loads that stay well under the TDP limit.

7870 GHz Edition's TDP: http://www.guru3d.com/articles_pages/amd_radeon_hd_7850_and_7870_review,7.html

AMD designed extra TDP headroom for overclocking.

AKA not 100% load, only typical usage: according to AMD, "the horse's mouth", "typical" full power consumption is 175W. Again, you don't need two PCI-e power connectors for overclocking when even the GHz Edition "only" uses 127 watts. You can get away with only one PCI-e connector and still have headroom.

The above shows an example of what peak loads look like when a card is fully used. You don't see 100% usage in PC games, so you can't use them as proof when comparing with console GPUs, where developers do push to the limit to get all they can out of them.

[QUOTE="ronvalencia"]

[QUOTE="04dcarraher"] Lol, you really are living up to your name, aren't you... You cannot buy a normal "old" 7870 any more; they are all GHz Editions, which do have an average TDP of 175W. AMD clocks them at 1,000MHz, hence the GHz Edition suffix, and pairs the GPU with 2GB of GDDR5 memory set to operate at 1200MHz (for an effective peak transfer rate of 4800 MT/s). The card has a 190W power envelope, but AMD says "typical" power consumption is 175W. Then you're comparing PC GPUs vs. stripped-down console GPUs... not even close. The Xenos only had around a 90W TDP, while the RSX had ~85W.

You're dense, assuming that I don't know the GTX 690, 6990, GTX 295, etc. are dual-GPU single cards :roll:

Also, you need to understand that max TDP means a full 100% load all the time. A "normal" 7870 has a max TDP of 175W, while a 7850 has 130W. Does that mean they will always reach that load? No, but since console GPUs tend to be fully used, you're not going to see fluctuating usage like on PC, with typical loads that stay well under the TDP limit.

04dcarraher

7870 GHz Edition's TDP: http://www.guru3d.com/articles_pages/amd_radeon_hd_7850_and_7870_review,7.html

AMD designed extra TDP headroom for overclocking.

AKA not 100% load, only typical usage: according to AMD, "the horse's mouth", "typical" full power consumption is 175W. Again, you don't need two PCI-e power connectors for overclocking when even the GHz Edition "only" uses 127 watts. You can get away with only one PCI-e connector and still have headroom.

The above shows an example of what peak loads look like when a card is fully used. You don't see 100% usage in PC games, so you can't use them as proof when comparing with console GPUs, where developers do push to the limit to get all they can out of them.

Note that Guru3D's benchmarks also used FurMark.

[QUOTE="04dcarraher"][QUOTE="ronvalencia"]

7870 GHz Edition's TDP: http://www.guru3d.com/articles_pages/amd_radeon_hd_7850_and_7870_review,7.html

AMD designed extra TDP headroom for overclocking.

ronvalencia

AKA not 100% load, only typical usage: according to AMD, "the horse's mouth", "typical" full power consumption is 175W. Again, you don't need two PCI-e power connectors for overclocking when even the GHz Edition "only" uses 127 watts. You can get away with only one PCI-e connector and still have headroom.

The above shows an example of what peak loads look like when a card is fully used. You don't see 100% usage in PC games, so you can't use them as proof when comparing with console GPUs, where developers do push to the limit to get all they can out of them.

Note that Guru3D's benchmarks also used FurMark.

Nope, not anymore: "We decided to move away from Furmark in early 2011 and are now using a game like application" "We however are not disclosing what application that is as we do not want AMD/NVIDIA to "optimize & monitor" our stress test whatsoever, for our objective reasons of course." So they're unreliable when it comes to full peak loads.

[QUOTE="ronvalencia"][QUOTE="04dcarraher"]Note that Guru3D's benchmarks also used FurMark.

Nope, not anymore: "We decided to move away from Furmark in early 2011 and are now using a game like application" "We however are not disclosing what application that is as we do not want AMD/NVIDIA to "optimize & monitor" our stress test whatsoever, for our objective reasons of course." So they're unreliable when it comes to full peak loads.

AKA not 100% load, only typical usage: according to AMD, "the horse's mouth", "typical" full power consumption is 175W. Again, you don't need two PCI-e power connectors for overclocking when even the GHz Edition "only" uses 127 watts. You can get away with only one PCI-e connector and still have headroom.

The above shows an example of what peak loads look like when a card is fully used. You don't see 100% usage in PC games, so you can't use them as proof when comparing with console GPUs, where developers do push to the limit to get all they can out of them.

04dcarraher

Oops, sorry, my mistake.

They could lower the PowerTune limit if Sony or MS targets a lower TDP.

From http://www.geeks3d.com/20120305/amd-radeon-hd-7870-and-hd-7850-launched/

The 7850 would be a better candidate, i.e. reallocate the TDP of CELL's five SPUs towards the GPU side. The PS3 has a TDP budget for CELL and RSX.

[QUOTE="mrfrosty151986"]

7800 GTX would destroy Xenos, and while technically the GTX 690 would be an 'old' card, it will be at least 3-4x faster than the GPU that will be in the next Xbox.

ronvalencia

Outside of NVIDIA TWIMTBP DX9c titles, Xenos would destroy the NVIDIA 7800 GTX.

The NVIDIA 7800 GTX doesn't fully accelerate Direct3D 9c, since it has issues with 32-bit compute.

I think the 7800GTX has fixed shaders, which means 50-60% utilization in most cases; that throws the whole pipeline out of balance and limits the game graphics a huge amount.

Xenos, on the other hand, has a unified, fully used pipeline with a huge utilization percentage.

Raw power is one thing; actually using it and removing bottlenecks is another.

In short, in real-life apps, the 7800GTX can't touch Xenos at all.

Even if the Xbox 720 hardware does not match the GTX 690 in raw power, I am sure it will have far better performance in real applications, due to the fact that consoles can remove most PC bottlenecks and offer custom designs integrated with software.

Nope, not anymore: "We decided to move away from Furmark in early 2011 and are now using a game like application" "We however are not disclosing what application that is as we do not want AMD/NVIDIA to "optimize & monitor" our stress test whatsoever, for our objective reasons of course." So they're unreliable when it comes to full peak loads.

[QUOTE="04dcarraher"][QUOTE="ronvalencia"] Note that Guru3D's benchmarks also used FurMark.

ronvalencia

Oops, sorry, my mistake.

They could lower the PowerTune limit if Sony or MS targets a lower TDP.

From http://www.geeks3d.com/20120305/amd-radeon-hd-7870-and-hd-7850-launched/

The 7850 would be a better candidate, i.e. reallocate the TDP of CELL's five SPUs towards the GPU side.

The 7850 is a much more logical choice for them to keep power and heat down without having to downclock or "downgrade" too much to keep it at ~100W TDP.

[QUOTE="ronvalencia"]

[QUOTE="mrfrosty151986"]

7800 GTX would destroy Xenos, and while technically the GTX 690 would be an 'old' card, it will be at least 3-4x faster than the GPU that will be in the next Xbox.

loosingENDS

Outside of NVIDIA TWIMTBP DX9c, Xenos would destroy NVIDIA 7800 GTX.

NVIDIA 7800 GTX doesn't fully accelerate Direct3D 9c since it has issues with 32bit compute.

I think the 7800GTX has fixed (non-unified) shaders, which means 50-60% utilization in most cases; that throws the whole pipeline out of balance and limits game graphics a great deal.

Xenos, on the other hand, has a unified, fully used pipeline with a very high utilization percentage.

Raw power is one thing; actually using it and removing bottlenecks is another.

In short, in real-life apps, the 7800GTX can't touch Xenos at all.

Even if the Xbox 720 hardware does not match the GTX690 in raw power, I am sure it will have far better performance in real applications, because consoles can remove most PC bottlenecks and offer custom designs integrated with software.

Geforce 7's pixel shaders stall when they perform a texture fetch operation, i.e. they don't have SMT tech to swap out the stalled thread.

AMD fixed this problem with Ultra-Threading tech. Xenos has 64 SMT threads over 48 pipelines.

NVIDIA fixed this problem in G80 with GigaThread.
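The stall-versus-thread-swap point can be sketched with a toy single-ALU model. The numbers are hypothetical and it does not model G70's, Xenos's or G80's actual schedulers. Each thread does `alu_cycles` of math, then issues a texture fetch and cannot run again until `fetch_latency` cycles later. With one thread the ALU idles during every fetch; with enough threads in flight, a round-robin scheduler always finds a ready thread, which is the idea behind Ultra-Threading and GigaThread.

```python
def alu_utilization(num_threads, alu_cycles, fetch_latency, total_cycles=10_000):
    """Fraction of cycles a single ALU is busy in a toy round-robin model.

    Each thread runs alu_cycles of math, then issues a texture fetch and
    cannot issue again until fetch_latency cycles later.
    """
    ready_at = [0] * num_threads            # cycle at which each thread may issue again
    work_left = [alu_cycles] * num_threads  # ALU cycles left before its next fetch
    current = 0                             # where the round-robin search starts
    busy = 0
    for cycle in range(total_cycles):
        for offset in range(num_threads):
            t = (current + offset) % num_threads
            if ready_at[t] <= cycle:
                break
        else:
            continue  # every thread is waiting on a fetch, so the ALU idles
        busy += 1
        work_left[t] -= 1
        if work_left[t] == 0:
            # Issue the texture fetch; the thread sleeps until the data returns
            # and the scheduler moves on to the next thread.
            ready_at[t] = cycle + 1 + fetch_latency
            work_left[t] = alu_cycles
            current = (t + 1) % num_threads
        else:
            current = t
    return busy / total_cycles


if __name__ == "__main__":
    for threads in (1, 8, 32):
        print(f"{threads:2d} thread(s): {alu_utilization(threads, 4, 100):.0%} ALU busy")
```

With 4 ALU cycles per 100-cycle fetch, one thread keeps the ALU about 4% busy, 8 threads about 31%, and 32 threads fully busy, since (alu_cycles + fetch_latency) / alu_cycles = 26 threads are already enough to cover the latency in this model.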

[QUOTE="loosingENDS"][QUOTE="ronvalencia"]

Outside of NVIDIA's TWIMTBP DX9c titles, Xenos would destroy the NVIDIA 7800 GTX.

The NVIDIA 7800 GTX doesn't fully accelerate Direct3D 9c, since it has issues with 32-bit compute.

ronvalencia

I think the 7800GTX has fixed (non-unified) shaders, which means 50-60% utilization in most cases; that throws the whole pipeline out of balance and limits game graphics a great deal.

Xenos, on the other hand, has a unified, fully used pipeline with a very high utilization percentage.

Raw power is one thing; actually using it and removing bottlenecks is another.

In short, in real-life apps, the 7800GTX can't touch Xenos at all.

Even if the Xbox 720 hardware does not match the GTX690 in raw power, I am sure it will have far better performance in real applications, because consoles can remove most PC bottlenecks and offer custom designs integrated with software.

Geforce 7's pixel shaders stall when they perform a texture fetch operation, i.e. they don't have SMT tech to swap out the stalled thread.

I wonder what optimizations the Xbox 720 could have over today's top cards.

One obvious guess is eDRAM to remove the AA/RAM bottleneck; with the right amount, it could make a world of difference.

Another would be unified RAM with enough speed to actually benefit the design.

I have stopped following hardware, though, so I can't think of anything else.
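On the eDRAM guess, the amount really is the crux. A rough sketch, assuming 4 bytes of color plus 4 bytes of depth/stencil per sample, no framebuffer compression, and Xenos's 10 MB of eDRAM taken as 10 MiB: if the render target does not fit, the frame has to be split into tiles and rendered in several passes.

```python
# Assumed figures: Xenos's 10 MB of eDRAM taken as 10 MiB, 32-bit color
# and 32-bit depth/stencil per sample, no compression.
EDRAM_BYTES = 10 * 1024 * 1024
COLOR_BYTES = 4
DEPTH_BYTES = 4


def framebuffer_bytes(width, height, msaa_samples):
    """Bytes needed to hold color + depth for every sample of a render target."""
    return width * height * msaa_samples * (COLOR_BYTES + DEPTH_BYTES)


def tiles_needed(width, height, msaa_samples, edram_bytes=EDRAM_BYTES):
    """Number of screen tiles (render passes) needed if each tile must fit in eDRAM."""
    size = framebuffer_bytes(width, height, msaa_samples)
    return (size + edram_bytes - 1) // edram_bytes  # integer ceiling division


if __name__ == "__main__":
    for samples in (1, 2, 4):
        mib = framebuffer_bytes(1280, 720, samples) / (1024 * 1024)
        print(f"720p {samples}x MSAA: {mib:.1f} MiB -> {tiles_needed(1280, 720, samples)} tile(s)")
```

At 720p this gives one tile without MSAA, two with 2x and three with 4x. The same arithmetic puts a 1080p target with 4x MSAA at about 63 MiB, i.e. seven 10 MiB tiles, which is why the amount of eDRAM matters as much as having it.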

[QUOTE="ronvalencia"]

[QUOTE="mrfrosty151986"]

The 7800 GTX would destroy Xenos, and while technically the GTX 690 would be an 'old' card, it will be at least 3-4x faster than the GPU that will be in the next Xbox.

loosingENDS

Outside of NVIDIA's TWIMTBP DX9c titles, Xenos would destroy the NVIDIA 7800 GTX.

The NVIDIA 7800 GTX doesn't fully accelerate Direct3D 9c, since it has issues with 32-bit compute.

I think the 7800GTX has fixed (non-unified) shaders, which means 50-60% utilization in most cases; that throws the whole pipeline out of balance and limits game graphics a great deal.

Xenos, on the other hand, has a unified, fully used pipeline with a very high utilization percentage.

Raw power is one thing; actually using it and removing bottlenecks is another.

In short, in real-life apps, the 7800GTX can't touch Xenos at all.

Even if the Xbox 720 hardware does not match the GTX690 in raw power, I am sure it will have far better performance in real applications, because consoles can remove most PC bottlenecks and offer custom designs integrated with software.

When it comes to shader workloads, yes, Xenos beats the 7800GTX to a bloody pulp. However, in non-shader-heavy workloads the 7800GTX kills Xenos because of its higher memory bandwidth and larger memory. Shader-heavy games didn't start coming out until 2007/2008, by which time the 7800GTX was already 2-3 years old and was based on the older fixed pipelines for vertex and pixel shader workloads. Here's the problem with your thinking: all GPUs are now unified-shader based, which means that for the 720 to perform even remotely close to a GTX 690 :roll: it would need a GPU with 3500+ stream processors, which means the power and cooling requirements, along with performance, would be nearly the same as a GTX 690. There are no magic designs or software to bypass the laws of thermodynamics.

Geforce 7's pixel shaders stall when they perform a texture fetch operation, i.e. they don't have SMT tech to swap out the stalled thread.

[QUOTE="ronvalencia"][QUOTE="loosingENDS"]

I think the 7800GTX has fixed (non-unified) shaders, which means 50-60% utilization in most cases; that throws the whole pipeline out of balance and limits game graphics a great deal.

Xenos, on the other hand, has a unified, fully used pipeline with a very high utilization percentage.

Raw power is one thing; actually using it and removing bottlenecks is another.

In short, in real-life apps, the 7800GTX can't touch Xenos at all.

Even if the Xbox 720 hardware does not match the GTX690 in raw power, I am sure it will have far better performance in real applications, because consoles can remove most PC bottlenecks and offer custom designs integrated with software.

loosingENDS

I wonder what optimizations the Xbox 720 could have over today's top cards.

One obvious guess is eDRAM to remove the AA/RAM bottleneck; with the right amount, it could make a world of difference.

Another would be unified RAM with enough speed to actually benefit the design.

I have stopped following hardware, though, so I can't think of anything else.

AMD could:

1. Improve out-of-order processing on GCN, i.e. further improve GPGPU workloads.

2. Add independent scalar units, i.e. further improve GPGPU workloads and shield the vector units from scalar workloads.

3. Improve tessellation.

4. Improve MSAA with deferred rendering.

[QUOTE="loosingENDS"]

[QUOTE="Masenkoe"]

Well first of all

LOLOL LOOSEY THREAD

Second of all, I like how you only posted 1 ZombiU screen yet a million other screens.

Also, who cares about Wii U graphics? We all knew Nintendo didn't care.

And last, if you think next gen Sony and Microsoft consoles will be anywhere near the levels of those tech demo screens you're dead wrong.

XVision84

Fixed. I posted many more ZombiU pics; actually, the more I find, the worse it looks.

I don't know man, this:

looks pretty impressive to me, especially the lighting effects and the lack of jaggies. Those 360 screenshots, on the other hand...

Small corridor environments aren't really impressive anymore, though. Look at Silent Hill 2. That game is about 10 years old, but it's easy to hide how limited the hardware is when you are working with claustrophobic environments where the player's interactions affect them very little. It's nothing compared to a game with huge environments, shifting day and night cycles, destructible terrain and architecture, tons of NPCs with complex AI routines, etc. Those are the kinds of things that require a lot more processing power, and unfortunately that means ZombiU is not a very good game to show off the Wii U's hardware credentials with, regardless of the quality of the game as an experience.

That's Silent Hill 2. It's a PS2-era game, but it still looks good if you are judging it by the small, indoor environments where not a lot happens other than the occasional puzzles or monster encounters.

[QUOTE="XVision84"]

[QUOTE="loosingENDS"]

Fixed. I posted many more ZombiU pics; actually, the more I find, the worse it looks.

Timstuff

I don't know man, this:

looks pretty impressive to me, especially the lighting effects and the lack of jaggies. Those 360 screenshots, on the other hand...

Small corridor environments aren't really impressive anymore, though. Look at Silent Hill 2. That game is about 10 years old, but it's easy to hide how limited the hardware is when you are working with claustrophobic environments where the player's interactions affect them very little. It's nothing compared to a game with huge environments, shifting day and night cycles, destructible terrain and architecture, tons of NPCs with complex AI routines, etc. Those are the kinds of things that require a lot more processing power, and unfortunately that means ZombiU is not a very good game to show off the Wii U's hardware credentials with, regardless of the quality of the game as an experience.

That's Silent Hill 2. It's a PS2-era game, but it still looks good if you are judging it by the small, indoor environments where not a lot happens other than the occasional puzzles or monster encounters.

This is artwork from ZombiU; the game looks nothing like that.

[QUOTE="loosingENDS"]

This is artwork from ZombiU; the game looks nothing like that.

Timstuff

Ah. You'd think I'd have learned after Red Steel, LOL! :P

At least this one shows something that could have been gameplay

The ZombiU image is clear artwork, without any chance of mistaking it for gameplay

[QUOTE="mrfrosty151986"]

The 7800 GTX would destroy Xenos, and while technically the GTX 690 would be an 'old' card, it will be at least 3-4x faster than the GPU that will be in the next Xbox.

ronvalencia

Outside of NVIDIA's TWIMTBP DX9c titles, Xenos would destroy the NVIDIA 7800 GTX.

The NVIDIA 7800 GTX doesn't fully accelerate Direct3D 9c, since it has issues with 32-bit compute.

A 7800GTX has twice the memory, twice the bandwidth, more fillrate, more texel rate...... it would kill off Xenos...

[QUOTE="ronvalencia"]

[QUOTE="mrfrosty151986"]

The 7800 GTX would destroy Xenos, and while technically the GTX 690 would be an 'old' card, it will be at least 3-4x faster than the GPU that will be in the next Xbox.

mrfrosty151986

Outside of NVIDIA's TWIMTBP DX9c titles, Xenos would destroy the NVIDIA 7800 GTX.

The NVIDIA 7800 GTX doesn't fully accelerate Direct3D 9c, since it has issues with 32-bit compute.

A 7800GTX has twice the memory, twice the bandwidth, more fillrate, more texel rate...... it would kill off Xenos...

And yet it can't run anything even remotely close to what Xenos runs, and it's garbage in comparison.

More resources that can't actually be fully used, due to the last-gen fixed shader design and PC bottlenecks, are not really that helpful.

A 7800GTX has twice the memory, twice the bandwidth, more fillrate, more texel rate...... it would kill off Xenos...

mrfrosty151986

Note that pixel shader stalls scale with the number of texture fetch operations.

Refer to http://forum.beyond3d.com/showthread.php?p=552774

Read Jawed's post

For example texture fetches in RSX will always be painfully slow in comparison - but how slow depends on the format of the textures.

Also, control flow operations in RSX will be out of bounds because they are impractically slow - whereas in Xenos they'll be the bread and butter of good code because there'll be no performance penalty.

Dependent texture fetches in Xenos (I presume that's what the third point means), will work without interrupting shader code - again RSX simply can't do this, dependent texturing blocks one ALU per pipe

[QUOTE="mrfrosty151986"]

A 7800GTX has twice the memory, twice the bandwidth, more fillrate, more texel rate...... it would kill off Xenos...

ronvalencia

Note that pixel shader stalls scale with the number of texture fetch operations.

Refer to http://forum.beyond3d.com/showthread.php?p=552774

Read Jawed's post

For example texture fetches in RSX will always be painfully slow in comparison - but how slow depends on the format of the textures.

Also, control flow operations in RSX will be out of bounds because they are impractically slow - whereas in Xenos they'll be the bread and butter of good code because there'll be no performance penalty.

Dependent texture fetches in Xenos (I presume that's what the third point means), will work without interrupting shader code - again RSX simply can't do this, dependent texturing blocks one ALU per pipe

Means absolutely nothing...... Closed-box systems always go for the most efficient results, so simply saying that RSX is bad at this is pointless... And we're talking about a 7800GTX, not RSX...jackass :|

[QUOTE="mrfrosty151986"]

[QUOTE="ronvalencia"]

Outside of NVIDIA's TWIMTBP DX9c titles, Xenos would destroy the NVIDIA 7800 GTX.

The NVIDIA 7800 GTX doesn't fully accelerate Direct3D 9c, since it has issues with 32-bit compute.

loosingENDS

A 7800GTX has twice the memory, twice the bandwidth, more fillrate, more texel rate...... it would kill off Xenos...

And yet it can't run anything even remotely close to what Xenos runs, and it's garbage in comparison.

More resources that can't actually be fully used, due to the last-gen fixed shader design and PC bottlenecks, are not really that helpful.

A 7800GTX runs Quake 4, Doom 3 and Half-Life 2 much, much better than Xenos ever could.

[QUOTE="ronvalencia"][QUOTE="mrfrosty151986"]

A 7800GTX has twice the memory, twice the bandwidth, more fillrate, more texel rate...... it would kill off Xenos...

mrfrosty151986

Note that pixel shader stalls scale with the number of texture fetch operations.

Refer to http://forum.beyond3d.com/showthread.php?p=552774

Read Jawed's post

For example texture fetches in RSX will always be painfully slow in comparison - but how slow depends on the format of the textures.

Also, control flow operations in RSX will be out of bounds because they are impractically slow - whereas in Xenos they'll be the bread and butter of good code because there'll be no performance penalty.

Dependent texture fetches in Xenos (I presume that's what the third point means), will work without interrupting shader code - again RSX simply can't do this, dependent texturing blocks one ALU per pipe

Means absolutely nothing...... Closed-box systems always go for the most efficient results, so simply saying that RSX is bad at this is pointless... And we're talking about a 7800GTX, not RSX...jackass :|

You fool, the PS3's NVIDIA RSX is part of the Geforce 7 family. Geforce 7 and NVIDIA RSX don't have G80's GigaThread technology.

You can't even read NVIDIA's whitepaper on G80 vs G70.

A closed-box system doesn't change the fact that RSX's ALUs stall during texture fetch operations. A CPU's load/store operation = a GPU's texture fetch operation. Before opening your mouth, learn some computer science 101.

[QUOTE="loosingENDS"][QUOTE="mrfrosty151986"]

A 7800GTX has twice the memory, twice the bandwidth, more fillrate, more texel rate...... it would kill off Xenos...

mrfrosty151986

And yet it can't run anything even remotely close to what Xenos runs, and it's garbage in comparison.

More resources that can't actually be fully used, due to the last-gen fixed shader design and PC bottlenecks, are not really that helpful.

A 7800GTX runs Quake 4, Doom 3 and Half-Life 2 much, much better than Xenos ever could.

"Stop comparing the way a console utilises GPU's to the way PC's do...you just make yourself look stupid."

I wonder who said that.... Hmmm.

[QUOTE="loosingENDS"][QUOTE="mrfrosty151986"]

A 7800GTX has twice the memory, twice the bandwidth, more fillrate, more texel rate...... it would kill off Xenos...

mrfrosty151986

And yet it can't run anything even remotely close to what Xenos runs, and it's garbage in comparison.

More resources that can't actually be fully used, due to the last-gen fixed shader design and PC bottlenecks, are not really that helpful.

A 7800GTX runs Quake 4, Doom 3 and Half-Life 2 much, much better than Xenos ever could.

Those are old game engines, and they are not shader intensive. Geforce 7 and RSX can't even do FP HDR + MSAA.

On the Geforce 7 family, a texture fetch operation causes cascading ALU stalls, i.e. if you stall "FP32 Shader Unit 1", you stall the rest of the ALU pipeline.

[QUOTE="ronvalencia"]

[QUOTE="mrfrosty151986"]

The 7800 GTX would destroy Xenos, and while technically the GTX 690 would be an 'old' card, it will be at least 3-4x faster than the GPU that will be in the next Xbox.

mrfrosty151986

Outside of NVIDIA's TWIMTBP DX9c titles, Xenos would destroy the NVIDIA 7800 GTX.

The NVIDIA 7800 GTX doesn't fully accelerate Direct3D 9c, since it has issues with 32-bit compute.

A 7800GTX has twice the memory, twice the bandwidth, more fillrate, more texel rate...... it would kill off Xenos...

Crysis 2 on Geforce 7800GTX = crap frame rates http://www.youtube.com/watch?v=klgVCk178OI

Btw, Sony PS3 has CELL to fix NVIDIA RSX's issues.

Crysis 2 on Radeon X1950 Pro runs like Xbox 360 version http://www.youtube.com/watch?v=jHWPGmf_A_0

ronvalencia

I think you are missing the whole point of the argument in which the troll LoosingENDs thinks the next gen console will be much more powerful than any PC out today.

He thinks this because of the Xbox 360.

He doesn't seem to understand that the Xbox 360's GPU was basically a 7600 GT with unified shaders in terms of performance.

If there were no unified shader tech, then it would be much weaker than it is.

There is nothing like unified shader tech coming next gen, so it won't have that advantage.

Luckily for me, my 2004 PC was smashing the consoles early in the gen, and by the time shader-intensive games were coming along you could get an 8800 GT for under 200 USD, and it was around 3 times the power of the consoles.

[QUOTE="mrfrosty151986"][QUOTE="loosingENDS"]

A 7800GTX runs Quake 4, Doom 3 and Half-Life 2 much, much better than Xenos ever could.

And yet it can't run anything even remotely close to what Xenos runs, and it's garbage in comparison.

More resources that can't actually be fully used, due to the last-gen fixed shader design and PC bottlenecks, are not really that helpful.

Inconsistency

"Stop comparing the way a console utilises GPU's to the way PC's do...you just make yourself look stupid."

I wonder who said that.... Hmmm.

I wonder who can't read.... Hmmm

Means absolutely nothing...... Closed-box systems always go for the most efficient results, so simply saying that RSX is bad at this is pointless... And we're talking about a 7800GTX, not RSX...jackass :|

[QUOTE="mrfrosty151986"][QUOTE="ronvalencia"]

Note that pixel shader stalls scale with the number of texture fetch operations.

Refer to http://forum.beyond3d.com/showthread.php?p=552774

Read Jawed's post

For example texture fetches in RSX will always be painfully slow in comparison - but how slow depends on the format of the textures.

Also, control flow operations in RSX will be out of bounds because they are impractically slow - whereas in Xenos they'll be the bread and butter of good code because there'll be no performance penalty.

Dependent texture fetches in Xenos (I presume that's what the third point means), will work without interrupting shader code - again RSX simply can't do this, dependent texturing blocks one ALU per pipe

ronvalencia

You fool, the PS3's NVIDIA RSX is part of the Geforce 7 family. Geforce 7 and NVIDIA RSX don't have G80's GigaThread technology.

You can't even read NVIDIA's whitepaper on G80 vs G70.

A closed-box system doesn't change the fact that RSX's ALUs stall during texture fetch operations. A CPU's load/store operation = a GPU's texture fetch operation. Before opening your mouth, learn some computer science 101.

You have no idea of how things work if you think that...

A 7800GTX runs Quake 4, Doom 3 and Half-Life 2 much, much better than Xenos ever could.

[QUOTE="mrfrosty151986"][QUOTE="loosingENDS"]

And yet it can't run anything even remotely close to what Xenos runs, and it's garbage in comparison.

More resources that can't actually be fully used, due to the last-gen fixed shader design and PC bottlenecks, are not really that helpful.

ronvalencia

Those are old game engines, and they are not shader intensive. Geforce 7 and RSX can't even do FP HDR + MSAA.

On the Geforce 7 family, a texture fetch operation causes cascading ALU stalls, i.e. if you stall "FP32 Shader Unit 1", you stall the rest of the ALU pipeline.

Again, your point being? HDR + MSAA has been done on PS3

[QUOTE="ronvalencia"]

[QUOTE="mrfrosty151986"]

A 7800GTX runs Quake 4, Doom 3 and Half-Life 2 much, much better than Xenos ever could.

mrfrosty151986

Those are old game engines, and they are not shader intensive. Geforce 7 and RSX can't even do FP HDR + MSAA.

On the Geforce 7 family, a texture fetch operation causes cascading ALU stalls, i.e. if you stall "FP32 Shader Unit 1", you stall the rest of the ALU pipeline.

Again, your point being? HDR + MSAA has been done on PS3

Again, you missed the FP part: I said FP HDR, not integer-based HDR. The PS3 has two major options, i.e. LogLuv-encoded integer-based HDR + MSAA, or MLAA + FP HDR.

LogLuv-encoded integer-based HDR consumes several pixel shader cycles, i.e. it is not a single-pass process.
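For readers unfamiliar with LogLuv, here is a rough CPU-side Python sketch of the general idea: store log2 luminance in 16 bits and CIE u'v' chromaticity in 8 bits each, so an HDR color fits an ordinary 8-bit-per-channel (RGBA8) target. It is not the exact encoding any PS3 game used; the sRGB/Rec.709 matrix, the 2^-16 to 2^16 luminance range and the u'v' scale of 410 are assumptions. The per-pixel log2/exp2, matrix multiplies and divisions show why the encode and decode cost several shader instructions rather than coming for free.

```python
import math

# Assumed linear sRGB / Rec.709 (D65) to CIE XYZ matrix.
RGB_TO_XYZ = (
    (0.4124, 0.3576, 0.1805),
    (0.2126, 0.7152, 0.0722),
    (0.0193, 0.1192, 0.9505),
)

LOG2_MIN = -16.0           # assumed luminance range: 2^-16 .. 2^16
LE_STEPS_PER_STOP = 2048   # 16-bit code spread over 32 stops
UV_SCALE = 410.0           # assumed scale so u', v' fit in 8 bits


def invert3(m):
    """Invert a 3x3 matrix via its adjugate."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    return (
        ((e * i - f * h) / det, (c * h - b * i) / det, (b * f - c * e) / det),
        ((f * g - d * i) / det, (a * i - c * g) / det, (c * d - a * f) / det),
        ((d * h - e * g) / det, (b * g - a * h) / det, (a * e - b * d) / det),
    )


XYZ_TO_RGB = invert3(RGB_TO_XYZ)


def mul(m, v):
    """3x3 matrix times 3-vector."""
    return tuple(sum(row[k] * v[k] for k in range(3)) for row in m)


def logluv_encode(rgb):
    """Pack a linear HDR RGB color into four 8-bit values."""
    x, y, z = mul(RGB_TO_XYZ, rgb)
    denom = x + 15.0 * y + 3.0 * z
    if y <= 0.0 or denom <= 0.0:
        return (0, 0, 0, 0)                      # treated as black
    u = 4.0 * x / denom                          # CIE u'
    v = 9.0 * y / denom                          # CIE v'
    le = round((math.log2(y) - LOG2_MIN) * LE_STEPS_PER_STOP)
    le = max(1, min(65535, le))                  # code 0 is reserved for black
    ue = max(0, min(255, round(u * UV_SCALE)))
    ve = max(1, min(255, round(v * UV_SCALE)))   # keep v' > 0 for the decode
    return (le >> 8, le & 0xFF, ue, ve)


def logluv_decode(rgba8):
    """Unpack four 8-bit values back into linear RGB."""
    hi, lo, ue, ve = rgba8
    le = (hi << 8) | lo
    if le == 0:
        return (0.0, 0.0, 0.0)
    y = 2.0 ** (le / LE_STEPS_PER_STOP + LOG2_MIN)
    u = ue / UV_SCALE
    v = ve / UV_SCALE
    x = y * 9.0 * u / (4.0 * v)
    z = y * (12.0 - 3.0 * u - 20.0 * v) / (4.0 * v)
    return mul(XYZ_TO_RGB, (x, y, z))
```

Round-tripping a color such as (1.0, 0.5, 0.25) through this sketch recovers luminance almost exactly, but the 8-bit chromaticity introduces errors of a few percent per channel, which is the usual trade-off of this kind of encoding.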
