We're already seeing inferior graphics LMAO:

[spoiler]

vs... This. LOL.

[/spoiler]

Lems are DONE.

This topic is locked from further discussion.

I saw this coming from a mile away.

Lems: ":cry: bu....bu..but teh dual GPUs and 12GB GDDR5 RAM. M$ can affordz it!1"

I_can_haz

>using memes in the wrong context

While Xbox One has a limited number of transistors in your house, it will have an unlimited number of transistors in the cloud.

Be a part of One. LOL... Come together as One.

While Xbox One has a limited number of transistors in your house, it will have an unlimited number of transistors in the cloud.

slipknot0129

While PS4 has backwards compatibility on the cloud, Xbox One is actually using it to make the console more powerful.

PS4: one step forward, two steps back. Xbox One is playing its cards right, using the servers and stuff to future-proof the console. It's gonna be like the old days when PlayStation had the inferior network. This time it will affect how good games look.

[QUOTE="MK-Professor"]16 CUs at 900MHz = 18 CUs at 800MHz

:lol: If it were that way, don't you think Sony would have used a straight 7850 at 900MHz? Sure, you can argue it would produce more heat at a higher clock, but I could also argue that two fewer CUs would impact heat in a positive way. The RSX is weaker than the 7800 GTX even though it has a higher clock as well; there are other things on the GPU that differ from a straight-up GCN. Not sure why you continue to pretend otherwise.

tormentos

You are forgetting that the PS3 has CELL's SPUs to patch up NVIDIA's RSX.

Note that AMD Radeon X19x0 aged better than NVIDIA GeForce 7xx0.

With Xbox One and PS4, the GCN IP is similar to the PC's GCN.

The FLOPS gap between the PS4's GCN GPU (1840 GFLOPS) and the 7950 Boost Edition (3315 GFLOPS) is similar to the gap between Xbox 360's 240 GFLOPS and the GeForce 8800 GTX's 518 GFLOPS.
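A rough way to check the "similar gap" claim is to put the quoted peak numbers side by side. This is only a sketch using the GFLOPS figures above; peak FLOPS ignore memory bandwidth, ROPs and drivers, so treat the ratios as theoretical.

```python
# Peak single-precision GFLOPS as quoted in the post (theoretical figures).
PS4_GCN = 1840.0
HD7950_BOOST = 3315.0
XBOX360_XENOS = 240.0
GEFORCE_8800_GTX = 518.0


def gap(pc_gflops: float, console_gflops: float) -> float:
    """How many times more peak FLOPS the PC GPU has than the console GPU."""
    return pc_gflops / console_gflops


if __name__ == "__main__":
    print(f"7950 Boost vs PS4:    {gap(HD7950_BOOST, PS4_GCN):.2f}x")
    print(f"8800 GTX vs Xbox 360: {gap(GEFORCE_8800_GTX, XBOX360_XENOS):.2f}x")
```

Run as-is, this shows roughly 1.8x for 7950 Boost vs PS4 and roughly 2.2x for 8800 GTX vs Xbox 360, so both gaps land around 2x, with the current one a bit narrower.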

[QUOTE="MK-Professor"]16 CUs at 900MHz = 18 CUs at 800MHz

:lol: If it were that way, don't you think Sony would have used a straight 7850 at 900MHz? Sure, you can argue it would produce more heat at a higher clock, but I could also argue that two fewer CUs would impact heat in a positive way. The RSX is weaker than the 7800 GTX even though it has a higher clock as well; there are other things on the GPU that differ from a straight-up GCN. Not sure why you continue to pretend otherwise.

tormentos

:lol::lol::lol::lol::lol::lol::lol::lol::lol::lol::lol::lol::lol::lol::lol::lol::lol::lol::lol::lol::lol::lol::lol::lol:

tormentos never fails to entertain.

16 CUs at 900MHz = 1.84 TFLOPS

18 CUs at 800MHz = 1.84 TFLOPS

FACT
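For anyone who wants to verify the arithmetic: GCN peak throughput is usually computed as CUs x 64 stream processors x 2 FLOPs per clock (one fused multiply-add) x clock. The 64-per-CU and 2-FLOP figures are the standard GCN assumptions, not something stated in this thread; the sketch below just applies them to both configurations.

```python
# Peak single-precision throughput of an AMD GCN GPU.
# Assumption: 64 stream processors per CU, each retiring one fused
# multiply-add (counted as 2 FLOPs) per clock cycle.
SHADERS_PER_CU = 64
FLOPS_PER_SHADER_PER_CLOCK = 2


def gcn_gflops(compute_units: int, clock_mhz: float) -> float:
    """Return peak GFLOPS for a GCN GPU with the given CU count and clock."""
    shaders = compute_units * SHADERS_PER_CU
    return shaders * FLOPS_PER_SHADER_PER_CLOCK * clock_mhz / 1000.0


if __name__ == "__main__":
    print(gcn_gflops(16, 900))  # 16 CUs at 900 MHz
    print(gcn_gflops(18, 800))  # 18 CUs at 800 MHz
```

Both calls come out at 1843.2 GFLOPS (about 1.84 TFLOPS), because 16 x 900 and 18 x 800 are both 14,400 CU-MHz.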

Also, look at a real-world scenario:

An HD 7950 with 28 CUs at 1200MHz destroys an HD 7970 with 32 CUs at 925MHz.

You see now? The HD 7950 has four fewer CUs than the HD 7970 and still manages to perform better, simply because of its higher clock speed.
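Same formula applied to the two Tahiti cards, plus the clock at which a 28-CU part catches a 32-CU part running at 925 MHz. Again a sketch assuming 64 shaders per CU and 2 FLOPs per shader per clock; it covers peak shader math only.

```python
SHADERS_PER_CU = 64
FLOPS_PER_SHADER_PER_CLOCK = 2


def gcn_gflops(compute_units: int, clock_mhz: float) -> float:
    """Peak GFLOPS for a GCN GPU (assumed 64 shaders/CU, FMA = 2 FLOPs)."""
    return compute_units * SHADERS_PER_CU * FLOPS_PER_SHADER_PER_CLOCK * clock_mhz / 1000.0


def breakeven_clock_mhz(cus: int, other_cus: int, other_clock_mhz: float) -> float:
    """Clock at which `cus` CUs match `other_cus` CUs running at other_clock_mhz."""
    return other_clock_mhz * other_cus / cus


if __name__ == "__main__":
    hd7950_oc = gcn_gflops(28, 1200)
    hd7970 = gcn_gflops(32, 925)
    print(f"HD 7950 @ 1200 MHz: {hd7950_oc:.1f} GFLOPS")
    print(f"HD 7970 @  925 MHz: {hd7970:.1f} GFLOPS")
    print(f"7950 ties the 7970 at {breakeven_clock_mhz(28, 32, 925):.0f} MHz")
```

That works out to 4300.8 vs 3788.8 GFLOPS (roughly 13.5% in the 7950's favor), and the 7950 already ties a stock 7970 at about 1057 MHz.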

[QUOTE="MK-Professor"]16 CUs at 900MHz = 18 CUs at 800MHz

:lol: If it were that way, don't you think Sony would have used a straight 7850 at 900MHz? Sure, you can argue it would produce more heat at a higher clock, but I could also argue that two fewer CUs would impact heat in a positive way. The RSX is weaker than the 7800 GTX even though it has a higher clock as well; there are other things on the GPU that differ from a straight-up GCN. Not sure why you continue to pretend otherwise.

tormentos

That is because the PS4's GPU is based on the 7970M but is a gimped version with 2 CUs removed.

A slightly overclocked 7850 is more powerful than that.

A heavily overclocked 7850 would easily beat it.
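To put a number on "slightly overclocked": the sketch below solves for the clock a 16-CU 7850 needs to match 18 CUs at 800 MHz. The 860 MHz reference clock for the desktop HD 7850 is an assumption on my part, not from the post, and the usual GCN 64-shader/2-FLOP figures are assumed too.

```python
SHADERS_PER_CU = 64
FLOPS_PER_SHADER_PER_CLOCK = 2
HD7850_REFERENCE_MHZ = 860  # assumption: stock desktop HD 7850 clock


def gcn_gflops(compute_units: int, clock_mhz: float) -> float:
    """Peak GFLOPS for a GCN GPU (assumed 64 shaders/CU, FMA = 2 FLOPs)."""
    return compute_units * SHADERS_PER_CU * FLOPS_PER_SHADER_PER_CLOCK * clock_mhz / 1000.0


def clock_to_match(compute_units: int, target_gflops: float) -> float:
    """Clock in MHz that `compute_units` CUs need to reach target_gflops."""
    return target_gflops * 1000.0 / (compute_units * SHADERS_PER_CU * FLOPS_PER_SHADER_PER_CLOCK)


if __name__ == "__main__":
    needed = clock_to_match(16, gcn_gflops(18, 800))
    overclock = (needed / HD7850_REFERENCE_MHZ - 1) * 100
    print(f"16-CU 7850 needs {needed:.0f} MHz ({overclock:.1f}% over reference)")
```

It comes out at 900 MHz, about 4.7% above that assumed 860 MHz reference, which fits the "slightly overclocked" description.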

[QUOTE="ronvalencia"]

[QUOTE="killzowned24"] What are you showing here? Anyway, I just read that it does have an external one.

But what is the hard drive sitting on, lol?

Cyberdot

there was confirmation of an outside brick

http://www.neogaf.com/forum/showthread.php?t=560781

http://www.polygon.com/2013/5/21/4352870/xbox-one-design

So it still got an external power supply?

LOL!

How is that a problem?

So Xbox One games are going to look like shit compared to PS4. Figures. This is what happens when you make a TV remote control and call it a video game console.

Yes, a ton of products use an external power supply: there's less heat in the case, and if it breaks you replace the power supply, not the whole console. The controller battery design is also better: with two rechargeable batteries you always have a wireless controller, unlike the ones with the battery inside, which you have to plug in to recharge, losing the wireless function.

[QUOTE="rrjim1"]

[QUOTE="Cyberdot"]

So it still got an external power supply?

LOL!

Cyberdot

What the hell am I reading?

Somebody writing common sense, which you clearly lack.

[QUOTE="tormentos"][QUOTE="MK-Professor"]16 CUs at 900MHz = 18 CUs at 800MHz

:lol: If it were that way, don't you think Sony would have used a straight 7850 at 900MHz? Sure, you can argue it would produce more heat at a higher clock, but I could also argue that two fewer CUs would impact heat in a positive way. The RSX is weaker than the 7800 GTX even though it has a higher clock as well; there are other things on the GPU that differ from a straight-up GCN. Not sure why you continue to pretend otherwise.

MK-Professor

:lol::lol::lol::lol::lol::lol::lol::lol::lol::lol::lol::lol::lol::lol::lol::lol::lol::lol::lol::lol::lol::lol::lol::lol:

tormentos never fails to entertain.

16 CUs at 900MHz = 1.84 TFLOPS

18 CUs at 800MHz = 1.84 TFLOPS

FACT

Also, look at a real-world scenario:

An HD 7950 with 28 CUs at 1200MHz destroys an HD 7970 with 32 CUs at 925MHz.

You see now? The HD 7950 has four fewer CUs than the HD 7970 and still manages to perform better, simply because of its higher clock speed.

The 7950 @ 1200MHz also has faster fixed-function GPU units (e.g. ROPs, TMUs, tessellation, ACEs, etc.).
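The fixed-function point can be shown the same way: peak pixel and texel rates are just unit count x clock. This is a sketch; the 32 ROPs on both Tahiti cards and 4 TMUs per GCN CU are assumptions I'm adding, not figures from the post.

```python
from __future__ import annotations

# Assumptions (not from the post): both Tahiti cards have 32 ROPs, and each
# GCN compute unit has 4 texture units (112 TMUs on a 7950, 128 on a 7970).
ROPS = 32
TMUS_PER_CU = 4


def fill_rates(compute_units: int, clock_mhz: float) -> tuple[float, float]:
    """Return peak (Gpixel/s, Gtexel/s): unit count x clock in GHz."""
    ghz = clock_mhz / 1000.0
    return ROPS * ghz, compute_units * TMUS_PER_CU * ghz


if __name__ == "__main__":
    for name, cus, mhz in [("HD 7950 @ 1200 MHz", 28, 1200), ("HD 7970 @ 925 MHz", 32, 925)]:
        pixels, texels = fill_rates(cus, mhz)
        print(f"{name}: {pixels:.1f} Gpixel/s, {texels:.1f} Gtexel/s")
```

With those assumptions the 7950 @ 1200 MHz gets about 38.4 Gpixel/s and 134.4 Gtexel/s versus about 29.6 and 118.4 for the stock 7970, so the clock bump lifts every fixed-function rate, not just shader FLOPS.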

Higher MHz also benefits narrower compute workloads, i.e. this is why I like the GeForce 8800's relationship between its GHz+ shader clock speed and its compute width.

It depends on the artwork, and MS (e.g. Turn 10) has more experience with AMD GPUs.

So Xbox One games are going to look like shit compared to PS4. Figures. This is what happens when you make a TV remote control and call it a video game console.

argetlam00

Lems must be devastated knowing that the PS4 has much better graphics.

This gen they showed everybody how obsessed they were with comparing multiplatform games, claiming victory when the differences were only minor.

Davekeeh

Lems were overly ridiculous about minuscule differences in multiplats on 360 vs PS3.

With PS4 vs X1, the graphical power difference is bigger than it was with PS3 vs 360. PS4 has more of a graphical advantage over X1 than 360 had over PS3.

The PS4's graphical power is about 150% that of the X1.
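A quick check of the 150% figure, assuming both GPUs run at 800 MHz with 18 CUs in the PS4 and 12 CUs in the Xbox One. The Xbox One configuration is the widely reported figure, not something stated in this thread, and this is peak shader FLOPS only.

```python
SHADERS_PER_CU = 64
FLOPS_PER_SHADER_PER_CLOCK = 2


def gcn_gflops(compute_units: int, clock_mhz: float) -> float:
    """Peak GFLOPS for a GCN GPU (assumed 64 shaders/CU, FMA = 2 FLOPs)."""
    return compute_units * SHADERS_PER_CU * FLOPS_PER_SHADER_PER_CLOCK * clock_mhz / 1000.0


PS4_GFLOPS = gcn_gflops(18, 800)
XBOX_ONE_GFLOPS = gcn_gflops(12, 800)  # assumption: 12 CUs at 800 MHz
PS4_VS_XBOX_ONE = PS4_GFLOPS / XBOX_ONE_GFLOPS

if __name__ == "__main__":
    print(f"PS4: {PS4_GFLOPS:.1f} GFLOPS, Xbox One: {XBOX_ONE_GFLOPS:.1f} GFLOPS")
    print(f"PS4 / Xbox One = {PS4_VS_XBOX_ONE:.0%}")
```

At equal clocks the ratio reduces to 18 / 12 = 1.5, i.e. 1843.2 vs 1228.8 GFLOPS. It says nothing about ESRAM, ROP counts or bandwidth.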

[QUOTE="Cyberdot"][QUOTE="ronvalencia"]

there was confirmation of an outside brick

http://www.neogaf.com/forum/showthread.php?t=560781

http://www.polygon.com/2013/5/21/4352870/xbox-one-design

delta3074

So it still got an external power supply?

LOL!

How is that a problem? I think an external power brick is the least of Xbox Done's concerns right now...

Once MS discovered that 8 gigs of GDDR5 was indeed possible, they should have scrapped their original design and started over (not having it is affecting more than just Xbox One's bandwidth; it's affecting its GPU design as well). If that necessitated a year's delay, then so be it. Sony delayed their hardware a year last time, and it cost them early, but they eventually caught up. MS could have caught up too. And Kinect 2.0 shouldn't be included in the box; it should be sold the same way Kinect 1.0 is. And all that stuff about requiring an internet connection and blocking used games should be scrapped.

Wickerman777

DDR3 would be cheaper than GDDR5, i.e. OEMs still sell the Radeon HD 7730M with DDR3 memory.

You don't win a total war with the German Tiger tank, e.g. the USA's M4 Sherman and the USSR's T-34 followed the cheaper-plus-quantity numbers game. It's the Zergling rush approach.

Another thing I don't like about how MS went about all this is that there were two cases of betting the farm going on here, one each from Sony and MS, and both related to the memory architecture. Sony bet everything on 8 gigs of GDDR5 eventually being possible, and at the last minute it worked for them. MS, however, bet everything on 8 gigs of GDDR5 not becoming possible soon enough and did everything they could to make DDR3 work instead. OK, with all of Sony's financial problems I can understand why they would commit to just one plan. But MS is freaking loaded! They should have had a backup; they should have gone with two designs in parallel, one like what Xbox One turned out to be and the other like what PS4 turned out to be, in case 8 gigs of GDDR5 became a possibility, which it did. Instead, when it comes to the hardware anyway, they painted themselves into a DDR3-and-weak-GPU corner that there was no way out of short of a long delay.

Let's see if MS's Zergling rush approach works in the next console war.

Wickerman777

[QUOTE="Wickerman777"]

Once MS discovered that 8 gigs of GDDR5 was indeed possible, they should have scrapped their original design and started over (not having it is affecting more than just Xbox One's bandwidth; it's affecting its GPU design as well). If that necessitated a year's delay, then so be it. Sony delayed their hardware a year last time, and it cost them early, but they eventually caught up. MS could have caught up too. And Kinect 2.0 shouldn't be included in the box; it should be sold the same way Kinect 1.0 is. And all that stuff about requiring an internet connection and blocking used games should be scrapped.

ronvalencia

DDR3 would be cheaper than GDDR5, i.e. OEMs still sell the Radeon HD 7730M with DDR3 memory.

You don't win a total war with the German Tiger tank, e.g. the USA's M4 Sherman and the USSR's T-34 followed the cheaper-plus-quantity numbers game. It's the Zergling rush approach.

I'm going by an article I read at Eurogamer:

http://www.eurogamer.net/articles/digitalfoundry-spec-analysis-xbox-one

According to them, despite MS's GPU being weaker, it costs around the same as Sony's because it's got all that extra crap on it like ESRAM and data move engines, etc., all of which is there to make DDR3 work. That money could have been spent on more CUs and ROPs instead, which is what Sony did.

And yet the games will look the same as before, considering once again it's easier to develop for the Xbox. It's going to be the same thing as the PS3 vs 360 crap.

JetB1ackNewYear

You have a link that it's easier to develop for the XBO than the PS4?

[QUOTE="ronvalencia"]

[QUOTE="Wickerman777"]

Once MS discovered that 8 gigs of GDDR5 was indeed possible, they should have scrapped their original design and started over (not having it is affecting more than just Xbox One's bandwidth; it's affecting its GPU design as well). If that necessitated a year's delay, then so be it. Sony delayed their hardware a year last time, and it cost them early, but they eventually caught up. MS could have caught up too. And Kinect 2.0 shouldn't be included in the box; it should be sold the same way Kinect 1.0 is. And all that stuff about requiring an internet connection and blocking used games should be scrapped.

Wickerman777

DDR3 would be cheaper than GDDR5, i.e. OEMs still sell the Radeon HD 7730M with DDR3 memory.

You don't win a total war with the German Tiger tank, e.g. the USA's M4 Sherman and the USSR's T-34 followed the cheaper-plus-quantity numbers game. It's the Zergling rush approach.

I'm going by an article I read at Eurogamer:

http://www.eurogamer.net/articles/digitalfoundry-spec-analysis-xbox-one

According to them, despite MS's GPU being weaker, it costs around the same as Sony's because it's got all that extra crap on it like ESRAM and data move engines, etc., all of which is there to make DDR3 work. That money could have been spent on more CUs and ROPs instead, which is what Sony did.

Eurogamer didn't properly factor in wholesale GDDR5 prices.

[QUOTE="Wickerman777"]

[QUOTE="ronvalencia"]

DDR3 would be cheaper than GDDR5, i.e. OEMs still sell the Radeon HD 7730M with DDR3 memory.

You don't win a total war with the German Tiger tank, e.g. the USA's M4 Sherman and the USSR's T-34 followed the cheaper-plus-quantity numbers game. It's the Zergling rush approach.

ronvalencia

I'm going by an article I read at Eurogamer:

http://www.eurogamer.net/articles/digitalfoundry-spec-analysis-xbox-one

According to them, despite MS's GPU being weaker, it costs around the same as Sony's because it's got all that extra crap on it like ESRAM and data move engines, etc., all of which is there to make DDR3 work. That money could have been spent on more CUs and ROPs instead, which is what Sony did.

Eurogamer didn't properly factor in wholesale GDDR5 prices.

Yes, they did. Perhaps I didn't say enough in my last comment. They said that the APUs are basically the same price despite Xbox's being clearly inferior to PS4's. PS4's memory does indeed cost more than Xbox's, but so what? If MS could go back and do everything over again with the knowledge they have now, I'm sure they'd pick an APU more like PS4's and GDDR5 instead. They just weren't willing to bet that 8 gigs of GDDR5 would be doable in 2013. Sony did, and the gamble paid off for them.

[QUOTE="ronvalencia"]

[QUOTE="Wickerman777"]

I'm going by an article I read at Eurogamer:

http://www.eurogamer.net/articles/digitalfoundry-spec-analysis-xbox-one

According to them, despite MS's GPU being weaker, it costs around the same as Sony's because it's got all that extra crap on it like ESRAM and data move engines, etc., all of which is there to make DDR3 work. That money could have been spent on more CUs and ROPs instead, which is what Sony did.

Wickerman777

Eurogamer didn't properly factor in wholesale GDDR5 prices.

Yes, they did. Perhaps I didn't say enough in my last comment. They said that the APUs are basically the same price despite Xbox's being clearly inferior to PS4's. PS4's memory does indeed cost more than Xbox's, but so what? If MS could go back and do everything over again with the knowledge they have now, I'm sure they'd pick an APU more like PS4's and GDDR5 instead. They just weren't willing to bet that 8 gigs of GDDR5 would be doable in 2013. Sony did, and the gamble paid off for them.

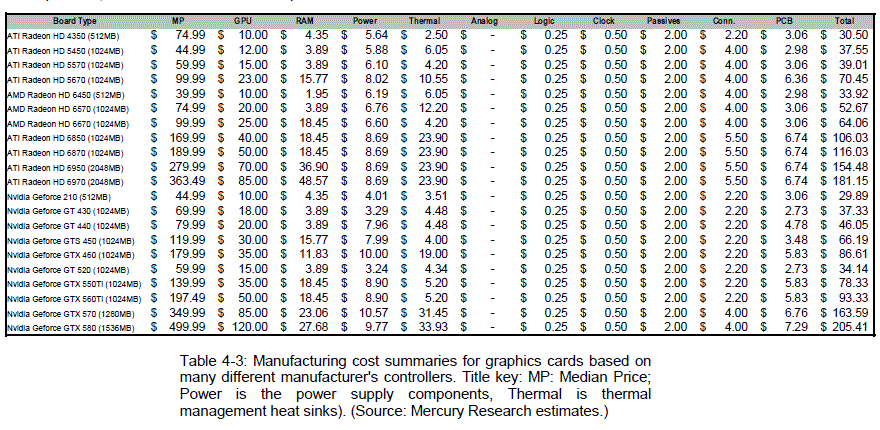

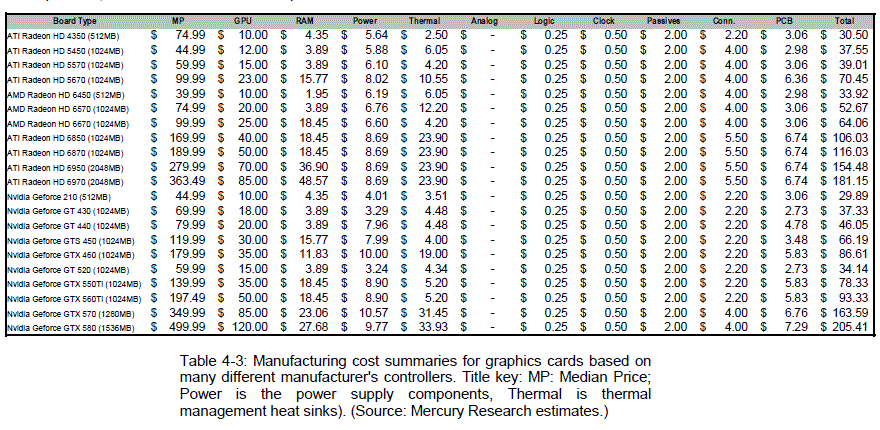

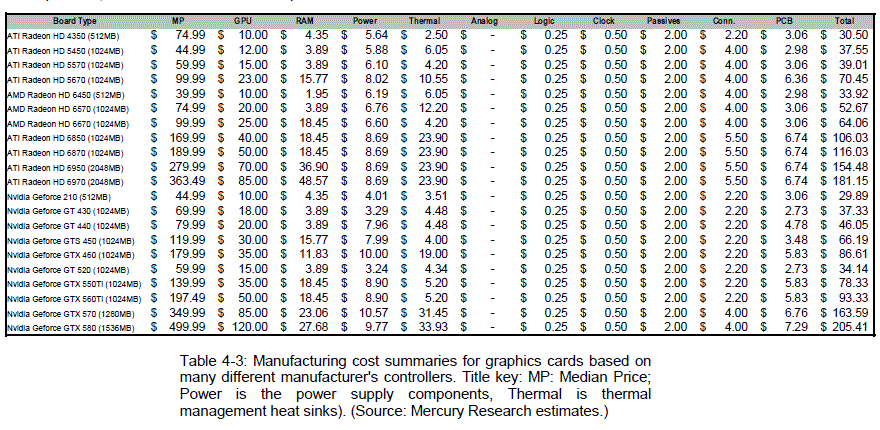

The Radeon HD 69x0 has a chip size of 389 mm^2; with 28nm process tech, you could easily fit the Radeon HD 7970 (AMD Tahiti XT)'s 4.3 billion transistors into a 365 mm^2 chip, i.e. AMD Tahiti's BOM is cheaper than AMD Cayman's.

Note that AMD is selling AMD Tahiti LE (a 365 mm^2 chip) at 7870 prices, i.e. the Radeon HD 7870 XT SKU (with the 7870 GE's cheaper memory setup and PCB). My point: AMD can easily cut the cost of the GPU card when they can reduce GDDR5 memory cost.
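The die-size argument is easier to see as density. A sketch comparing the two chips: the die sizes and Tahiti's 4.3 billion transistors are from the post, while Cayman's 40nm node and ~2.64 billion transistors are assumptions added here. Density alone doesn't settle BOM, since wafer cost and yield per node also matter.

```python
# Die sizes and Tahiti's transistor count come from the post; Cayman's
# ~2.64 billion transistors (on 40nm) is an assumption added for illustration.
CHIPS = {
    "Cayman (40nm)": {"transistors_bn": 2.64, "area_mm2": 389.0},
    "Tahiti (28nm)": {"transistors_bn": 4.3, "area_mm2": 365.0},
}


def density_m_per_mm2(name: str) -> float:
    """Millions of transistors per square millimetre of die."""
    chip = CHIPS[name]
    return chip["transistors_bn"] * 1000.0 / chip["area_mm2"]


if __name__ == "__main__":
    for name in CHIPS:
        print(f"{name}: {density_m_per_mm2(name):.1f} M transistors/mm^2")
    gain = density_m_per_mm2("Tahiti (28nm)") / density_m_per_mm2("Cayman (40nm)")
    print(f"Tahiti is about {gain:.1f}x denser")
```

That gives roughly 6.8 vs 11.8 million transistors per mm^2, about 1.7x, which is how Tahiti fits more logic into a smaller die.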

[QUOTE="Wickerman777"][QUOTE="ronvalencia"]

Eurogamer didn't properly factor in wholesale GDDR5 prices.

ronvalencia

Yes, they did. Perhaps I didn't say enough in my last comment. They said that the APUs are basically the same price despite Xbox's being clearly inferior to PS4's. PS4's memory does indeed cost more than Xbox's, but so what? If MS could go back and do everything over again with the knowledge they have now, I'm sure they'd pick an APU more like PS4's and GDDR5 instead. They just weren't willing to bet that 8 gigs of GDDR5 would be doable in 2013. Sony did, and the gamble paid off for them.

The Radeon HD 69x0 has a chip size of 389 mm^2; with 28nm process tech, you could easily fit the Radeon HD 7970 (AMD Tahiti XT)'s 4.3 billion transistors into a 365 mm^2 chip, i.e. AMD Tahiti's BOM is cheaper than AMD Cayman's. Note that AMD is selling AMD Tahiti LE (a 365 mm^2 chip) at 7870 prices, i.e. the Radeon HD 7870 XT SKU.

I don't even know what your point is anymore. Are you in some way trying to say that MS's APU doesn't suck when compared to PS4's? Well, it does.

The Radeon HD 69x0 has a chip size of 389 mm^2; with 28nm process tech, you could easily fit the Radeon HD 7970 (AMD Tahiti XT)'s 4.3 billion transistors into a 365 mm^2 chip, i.e. AMD Tahiti's BOM is cheaper than AMD Cayman's. Note that AMD is selling AMD Tahiti LE (a 365 mm^2 chip) at 7870 prices, i.e. the Radeon HD 7870 XT SKU.

[QUOTE="ronvalencia"][QUOTE="Wickerman777"]

Yes, they did. Perhaps I didn't say enough in my last comment. They said that the APUs are basically the same price despite Xbox's being clearly inferior to PS4's. PS4's memory does indeed cost more than Xbox's, but so what? If MS could go back and do everything over again with the knowledge they have now, I'm sure they'd pick an APU more like PS4's and GDDR5 instead. They just weren't willing to bet that 8 gigs of GDDR5 would be doable in 2013. Sony did, and the gamble paid off for them.

Wickerman777

I don't even know what your point is anymore. Are you in some way trying to say that MS's APU doesn't suck when compared to PS4's? Well, it does.

They didn't factor it in, since cutting-edge high-density GDDR5 would be expensive, i.e. it can exceed the APU's price.

[QUOTE="Wickerman777"][QUOTE="ronvalencia"] The Radeon HD 69x0 has a chip size of 389 mm^2; with 28nm process tech, you could easily fit the Radeon HD 7970 (AMD Tahiti XT)'s 4.3 billion transistors into a 365 mm^2 chip, i.e. AMD Tahiti's BOM is cheaper than AMD Cayman's. Note that AMD is selling AMD Tahiti LE (a 365 mm^2 chip) at 7870 prices, i.e. the Radeon HD 7870 XT SKU.

ronvalencia

I don't even know what your point is anymore. Are you in some way trying to say that MS's APU doesn't suck when compared to PS4's? Well, it does.

They didn't factor it in, since cutting-edge high-density GDDR5 would be expensive, i.e. it can exceed the APU's price.

YES, THEY DID! I already said that before! Yes, PS4's memory is more expensive than Xbox's (and it may very well be the more expensive console to produce, but I'm not sure how much Kinect affects things), but the APUs in the two boxes cost roughly the same thing. That latter point is the only one I had been trying to make.

They didn't factor it in, since cutting-edge high-density GDDR5 would be expensive, i.e. it can exceed the APU's price.

[QUOTE="ronvalencia"][QUOTE="Wickerman777"]

I don't even know what your point is anymore. Are you in some way trying to say that MS's APU doesn't suck when compared to PS4's? Well, it does.

Wickerman777

YES, THEY DID! I already said that before! Yes, PS4's memory is more expensive than Xbox's, but the APUs in the two boxes cost roughly the same thing. That latter point is the only one I had been trying to make.

They didn't quantify the numbers.

If the two APU prices are similar, the two boxes' prices wouldn't be the same, i.e. there's a price gap between high-density GDDR5 and cheaper DDR3-2133.

[QUOTE="Wickerman777"]

[QUOTE="ronvalencia"] They didn't factor it in, since cutting-edge high-density GDDR5 would be expensive, i.e. it can exceed the APU's price.

ronvalencia

YES, THEY DID! I already said that before! Yes, PS4's memory is more expensive than Xbox's, but the APUs in the two boxes cost roughly the same thing. That latter point is the only one I had been trying to make.

They didn't quantify the numbers. The two boxes wouldn't be the same.

For the love of God, when did I say they said that?! The only thing I was talking about was the APUs. You're the one talking about the complete boxes, not me and not them. Once again: the only thing I've been saying is that the APUs have a similar cost despite one being crap compared to the other. I'm not saying, nor was I saying before, anything about the price of the entire console, just the APUs.

They didn't quantify the numbers. The two boxes wouldn't be the same.

[QUOTE="ronvalencia"]

[QUOTE="Wickerman777"]

YES, THEY DID! I already said that before! Yes, PS4's memory is more expensive than Xbox's, but the APUs in the two boxes cost roughly the same thing. That latter point is the only one I had been trying to make.

Wickerman777

For the love of God, when did I say they said that?! The only thing I was talking about was the APUs. You're the one talking about the complete boxes, not me and not them. Once again: the only thing I've been saying is that the APUs have a similar cost despite one being crap compared to the other. I'm not saying, nor was I saying before, anything about the price of the entire console, just the APUs.

Let me repost your trigger post:

Once MS discovered that 8 gigs of GDDR5 was indeed possible, they should have scrapped their original design and started over (not having it is affecting more than just Xbox One's bandwidth; it's affecting its GPU design as well). If that necessitated a year's delay, then so be it. Sony delayed their hardware a year last time, and it cost them early, but they eventually caught up. MS could have caught up too. And Kinect 2.0 shouldn't be included in the box; it should be sold the same way Kinect 1.0 is. And all that stuff about requiring an internet connection and blocking used games should be scrapped.

1. You talk about GDDR5 memory, not just the APU.

2. I talk about DDR3 price vs GDDR5 price.

[QUOTE="ronvalencia"]

[QUOTE="Wickerman777"]

Once MS discovered that 8 gigs of GDDR5 was indeed possible, they should have scrapped their original design and started over (not having it is affecting more than just Xbox One's bandwidth; it's affecting its GPU design as well). If that necessitated a year's delay, then so be it. Sony delayed their hardware a year last time, and it cost them early, but they eventually caught up. MS could have caught up too. And Kinect 2.0 shouldn't be included in the box; it should be sold the same way Kinect 1.0 is. And all that stuff about requiring an internet connection and blocking used games should be scrapped.

Wickerman777

DDR3 would be cheaper than GDDR5, i.e. OEMs still sell the Radeon HD 7730M with DDR3 memory.

You don't win a total war with the German Tiger tank, e.g. the USA's M4 Sherman and the USSR's T-34 followed the cheaper-plus-quantity numbers game. It's the Zergling rush approach.

I'm going by an article I read at Eurogamer:

http://www.eurogamer.net/articles/digitalfoundry-spec-analysis-xbox-one

According to them, despite MS's GPU being weaker, it costs around the same as Sony's because it's got all that extra crap on it like ESRAM and data move engines, etc., all of which is there to make DDR3 work. That money could have been spent on more CUs and ROPs instead, which is what Sony did.

Example of Eurogamer being stupid.

In reference to eurogamer.net/articles/digitalfoundry-saboteur-aa-blog-entry, and I quote:

"In the meantime, what we have is something that's new and genuinely exciting from a technical standpoint. We're seeing PS3 attacking a visual problem using a method that not even the most high-end GPUs are using."

Eurogamer didn't factor in AMD's developer.amd.com/gpu_assets/AA-HPG09.pdf

It was later corrected by Christer Ericson, director of tools and technology at Sony Santa Monica, and I quote:

"The screenshots may not be showing MLAA, and it's almost certainly not a technique as experimental as we thought it was, but it's certainly the case that this is the most impressive form of this type of anti-aliasing we've seen to date in a console game. Certainly, as we alluded to originally, the concept of using an edge-filter/blur combination isn't new, and continues to be refined.This document by Isshiki and Kunieda published in 1999 suggested a similar technique, and, more recently, AMD's Iourcha, Yang and Pomianowski suggested a more advanced version of the same basic idea".

AMD's Iourcha, Yang and Pomianowski's paper refers to developer.amd.com/gpu_assets/AA-HPG09.pdf

To quote AMD's paper:

"This filter is the basis for the Edge-Detect Custom Filter AA driver feature on ATI Radeon HD GPUs".

Eurogamer's "not even the most high-end GPUs are using" assertion IS wrong.

From top to bottom, current ATI GPUs support Direct3D 10.1 and the methods mentioned in AMD's AA paper.

Sorry, I rely on my own information sources, not on Eurogamer. They don't even know about AMD GPUs.

[QUOTE="Wickerman777"]

[QUOTE="ronvalencia"] They didn't quantify the numbers. The two boxes wouldn't be the same.

ronvalencia

For the love of God, when did I say they said that?! The only thing I was talking about was the APUs. You're the one talking about the complete boxes, not me and not them. Once again: the only thing I've been saying is that the APUs have a similar cost despite one being crap compared to the other. I'm not saying, nor was I saying before, anything about the price of the entire console, just the APUs.

Let me repost your trigger post:

Once MS discovered that 8 gigs of GDDR5 was indeed possible, they should have scrapped their original design and started over (not having it is affecting more than just Xbox One's bandwidth; it's affecting its GPU design as well). If that necessitated a year's delay, then so be it. Sony delayed their hardware a year last time, and it cost them early, but they eventually caught up. MS could have caught up too. And Kinect 2.0 shouldn't be included in the box; it should be sold the same way Kinect 1.0 is. And all that stuff about requiring an internet connection and blocking used games should be scrapped.

1. You talk about GDDR5 memory, not just the APU.

2. I talk about DDR3 price vs GDDR5 price.

Now you're talking about an OPINION OF MINE I made earlier. I mentioned the Eurogamer article in a later reply, and that's what we've been arguing about. The Eurogamer article talks about the APUs having a similar cost associated with them despite one being more powerful than the other. And yes, it later mentions that GDDR5 costs more than DDR3. All I've been talking about is the APUs; you're the one going on and on about complete box costs. I don't know if maybe your point is that if MS had this to do over again they'd still go with the same design cuz DDR3 is cheaper. "If" you think that, I think you're nuts, lol. I don't believe for a minute that they gimped their APU to accommodate DDR3-aiding crap because DDR3 is cheaper. Eurogamer's opinion on why they did it makes a lot more sense to me: that it was because they didn't think 8 gigs of GDDR5 would be possible and they were hell-bent on 8 gigs of RAM.

[QUOTE="ronvalencia"]

[QUOTE="Wickerman777"]

For the love of God, when did I say they said that?! The only thing I was talking about was the APUs. You're the one talking about the complete boxes, not me and not them. Once again: the only thing I've been saying is that the APUs have a similar cost despite one being crap compared to the other. I'm not saying, nor was I saying before, anything about the price of the entire console, just the APUs.

Wickerman777

Let me repost your trigger post:

Once MS discovered that 8 gigs of GDDR5 was indeed possible, they should have scrapped their original design and started over (not having it is affecting more than just Xbox One's bandwidth; it's affecting its GPU design as well). If that necessitated a year's delay, then so be it. Sony delayed their hardware a year last time, and it cost them early, but they eventually caught up. MS could have caught up too. And Kinect 2.0 shouldn't be included in the box; it should be sold the same way Kinect 1.0 is. And all that stuff about requiring an internet connection and blocking used games should be scrapped.

1. You talk about GDDR5 memory, not just the APU.

2. I talk about DDR3 price vs GDDR5 price.

Now you're talking about an OPINION OF MINE I made earlier. I mentioned the Eurogamer article in a later reply, and that's what we've been arguing about. The Eurogamer article talks about the APUs having a similar cost associated with them despite one being more powerful than the other. And yes, it later mentions that GDDR5 costs more than DDR3. All I've been talking about is the APUs; you're the one going on and on about complete box costs. I don't know if maybe your point is that if MS had this to do over again they'd still go with the same design cuz DDR3 is cheaper. "If" you think that, I think you're nuts, lol. I don't believe for a minute that they gimped their APU to accommodate DDR3-aiding crap because DDR3 is cheaper. Eurogamer's opinion on why they did it makes a lot more sense to me: that it was because they didn't think 8 gigs of GDDR5 would be possible and they were hell-bent on 8 gigs of RAM.

It's the same reason OEMs gimp the 7750M with DDR3, thus creating the 7730M SKU, i.e. it's cheaper. In that regard, MS is no different from Dell or HP.

Another example: my Sony Vaio VGN-FW45 laptop has GDDR3 for its Radeon HD 4650M (i.e. the best memory type for the 4650M in 2008), while other 4650M-based laptops have the cheaper GDDR2.

MS's ESRAM direction is influenced by the Xbox 360's design pattern. I expected MS to do something similar, i.e. MS didn't spend extra on 72-bit XDR memory for the Xbox 360.

Sony's GDDR5 for the PS4 follows a similar design pattern to the PS3's choice of pricier XDR memory.

The mindset for MS and Sony remains the same as with Xbox 360 and PS3, but the big difference is with Sony, i.e. they switched to AMD IP (i.e. CPU, GPU, MCH, NB, SB, GDDR5**).

**AMD (ATI's memory team) chairs (at JEDEC) and designs the GDDRx standard. http://vr-zone.com/articles/gddr6-memory-coming-in-2014/16359.html

You might not know this, but AMD i.e. Advanced Micro Devices is actually the company behind the creation of GDDR memory standard. The company did a lot of great work with GDDR3 and now with GDDR5, while GDDR4 was simply too short on the market to drive the demand for the solution. In the words of our sources, "GDDR3 was too good for GDDR4 to compete with, while everyone knew what was coming with GDDR5."

GDDR5 as a memory standard was designed as Dr. Jekyll and Mr. Hyde. The Single-Ended GDDR5 i.e. Dr. Jekyll was created to power the contemporary processors, while the Differential GDDR5 i.e. Mr. Hyde was designed to "murder Rambus and XDR". Ultimately, the conventional S.E. GDDR5 took off better than expected and clocked higher than anyone hoped for. While the estimates for the top standard were set at 1.5GHz QDR, i.e. 6 "effective GHz" with overclocking, we got both AMD and NVIDIA actually shipping retail parts at 1.5GHz, with overclocks as high as 1.85GHz (7.4 "GHz"). This brought us to more than 250GB/s achievable bandwidth, meaning that the purpose of Differential GDDR5 was lost.
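The quoted "effective GHz" and bandwidth figures follow from two small formulas: GDDR5 transfers 4 bits per pin per clock (the article's "QDR"), and bandwidth is data rate x bus width / 8. The 256-bit and 384-bit bus widths below are illustrative assumptions (384-bit being what Tahiti cards use), not numbers from the article.

```python
def gddr5_data_rate_gbps(clock_ghz: float) -> float:
    """Per-pin data rate: GDDR5 moves 4 bits per pin per clock ("QDR")."""
    return clock_ghz * 4


def bandwidth_gb_s(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Peak bandwidth in GB/s: per-pin rate x number of pins / 8 bits per byte."""
    return data_rate_gbps * bus_width_bits / 8


if __name__ == "__main__":
    for clock in (1.5, 1.85):  # the article's 1.5 GHz target and 1.85 GHz overclocks
        rate = gddr5_data_rate_gbps(clock)
        for bus in (256, 384):  # illustrative bus widths (assumption)
            print(f"{clock} GHz -> {rate:.1f} Gbps, {bus}-bit bus: "
                  f"{bandwidth_gb_s(rate, bus):.1f} GB/s")
```

At 1.5 GHz (6 Gbps) a 384-bit bus gives 288 GB/s, and at 1.85 GHz (7.4 Gbps) about 355 GB/s, which is consistent with the article's "more than 250GB/s".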

------------

Sony selected the Rambus-killer GDDR5 (PS: the Single-Ended version displaced the Differential version) in place of Rambus's XDR. AMD's memory team is in competition with Rambus.

On the memory side, Sony's memory selection for the PS4 was expected, i.e. I expected a Rambus-killer memory selection from Sony, and they selected it, i.e. GDDR5.

--------

On the graphics processing side:

PS3 has ~363 GFLOPS (from 6 SPUs + RSX)

Xbox 360 has about 240 GFLOPS (from Xenos)

Pattern: PS3 has higher theoretical FLOPS but has issues extracting effective performance.

The performance relationship for PS4 vs Xbox One is similar to PS3 vs Xbox 360, but extracting effective performance is easier with AMD GCN.
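A sketch of the ratio comparison behind that claim. The PS3, Xbox 360 and PS4 figures are from the thread; the Xbox One figure (12 CUs x 64 x 2 x 800 MHz = 1228.8 GFLOPS) is an assumption. Like the posts, this compares theoretical peaks only and says nothing about how much each architecture can actually extract.

```python
# Theoretical GFLOPS. PS3, Xbox 360 and PS4 values are from the thread;
# the Xbox One value (12 CUs x 64 x 2 x 800 MHz) is an assumption.
GFLOPS = {
    "PS3": 363.0,
    "Xbox 360": 240.0,
    "PS4": 1840.0,
    "Xbox One": 1228.8,
}


def ratio(a: str, b: str) -> float:
    """Peak FLOPS of console `a` divided by console `b`."""
    return GFLOPS[a] / GFLOPS[b]


LAST_GEN = ratio("PS3", "Xbox 360")
NEXT_GEN = ratio("PS4", "Xbox One")

if __name__ == "__main__":
    print(f"PS3 / Xbox 360: {LAST_GEN:.2f}x")
    print(f"PS4 / Xbox One: {NEXT_GEN:.2f}x")
```

Both come out at roughly 1.5x, which is the "similar relationship" on paper; the difference the post points to is in extraction, not in the ratio.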

Both MS's and Sony's mindsets remained similar for the next-gen consoles.

ok xboxiphoneps3 guy ..... the link says "POSSIBLE"

http://kotaku.com/the-five-possible-states-of-xbox-one-games-are-strangel-509597078

Please try harder next time you try to misinform... Sorry, I'm not going with Possible as Fact like you are in the OP. If you don't update, you're just a misinformer mongrel.

ok xboxiphoneps3 guy ..... the link says "POSSIBLE"

http://kotaku.com/the-five-possible-states-of-xbox-one-games-are-strangel-509597078

Please try harder next time you try to misinform... Sorry, I'm not going with Possible as Fact like you are in the OP. If you don't update, you're just a misinformer mongrel.

XBOunity

Caveat: this Xbox One development info was circulated by Microsoft to its partners at the beginning of this year. It may have changed, but based on what we saw this week, probably not in any major way.

stay butthurt :lol::lol::lol::lol::lol::lol::lol::lol::lol:

[QUOTE="princeofshapeir"]Well, its main purpose is to watch TV. Can't say I'm surprised.

Ribnarak

lollll

lmfao :lol: the Cablebox One has no power for games! Microsoft has to use it for its primary purpose: streaming HD channels.

Do we have any info about the CPU/GPU reserves of the PS4?

The KZ SF postmortem only mentions 6 cores being used, but all rumors indicate that the OS reserve is half a core (not literally!), which isn't hard to believe considering it's only one OS.

Free memory is either 7 or 7.5 GB for games (since the memory was doubled when the OS had already been created, they might have doubled the reserve for future-proofing).

The only thing there's no info on is whether there is any GPU reserve for the OS, and whether the secondary processor for OS support is enough on its own.

Does anyone have any updated info/leaks on this?

Gee, what happened, lems?

You said that M$ is rich enough to sell quad Titan SLI machines for $500. Why are they selling a 1.1 TFLOP machine, then? :(

TLHBO

and dat 1.1 TFLOPS equals what kind of GPU in our day and age? Something like the 6870 or higher? Anyways, a weak system is an insta-gtfo for me.

silversix_

To be fair, I hate the X1 at the moment, and it's not because I hate Microsoft (I've got one of their 360s); it's because the console sucks. But in fairness, most developers say you often get around 2x the performance from a console than from a PC using the same hardware, because of optimisation. Hermits often forget that console hardware lasts longer, instead of thinking about the bigger picture.
