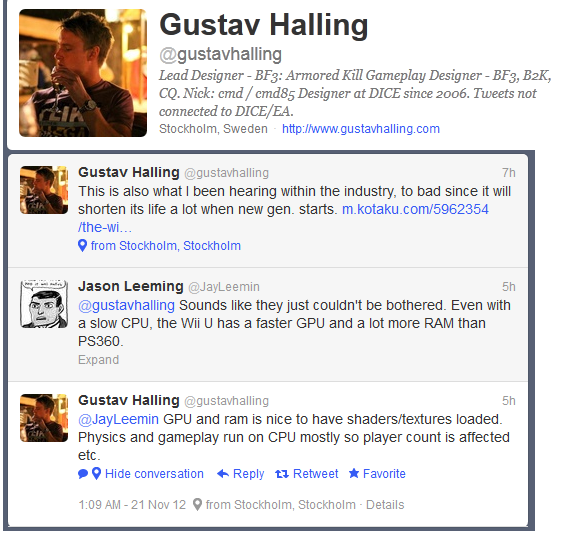

DICE technical director: why the Frostbite engine will never come to the Wii U.

This topic is locked from further discussion.

It's three Wii U CPUs taped together, with much more L2 cache but only 1.24 GHz, and still stuck in the past with a 64-bit FPU. Xenon and the Cell are capable of 128-bit VMX on all their cores and they run at 3.2 GHz max. Pathetic Wii U.

Doesn't the Wii U have a fuggin GameCube CPU?

Pray_to_me

[QUOTE="Pray_to_me"]It's three Wii U CPUs taped together, with much more L2 cache but only 1.24 GHz, and still stuck in the past with a 64-bit FPU. Xenon and the Cell are capable of 128-bit VMX on all their cores and they run at 3.2 GHz max. Pathetic Wii U.

:lol: Wow, the CPU is a piece of shit. Maybe that's what is preventing DICE from porting FB2 over.

Doesn't the Wii U have a fuggin GameCube CPU?

PC_Otter

Remember when 4A's (Metro 2033) chief technical director called the Wii U's CPU slow and horrible? The writing's been on the wall for some time now.


[QUOTE="Kaze_no_Mirai"]They say the strangest thing. It's pretty obvious that the Wii U is stronger than the HD twins. Whether it be by a little or a lot. Yet they couldn't get the results they wanted from the engine testing for Frostbite 2. Yet Frostbite 2 and 3 games will be on the HD twins.

Slashkice

It's not stronger in every area. The CPU is slower compared to the HD twins, and it's likely the cause of issues for DICE. It ultimately comes back to money though, since getting it working to a satisfactory level on the Wii U would cost money, money EA clearly doesn't feel is worth spending.

Theoretically, DICE could scale back Frostbite 2/3, but after COD selling like crap and EA's very own NFS:MW (they spent resources taking advantage of Wii U hardware) selling 10k units last month, what's the point? The people who own a Wii U only want and only buy Nintendo games. Sheep have the nerve to complain on forums when third parties stop supporting their console. lol Put your money where your mouth is and buy some damn third party games.

[QUOTE="PC_Otter"][QUOTE="Pray_to_me"]It's three Wii U CPUs taped together, with much more L2 cache but only 1.24 GHz, and still stuck in the past with a 64-bit FPU. Xenon and the Cell are capable of 128-bit VMX on all their cores and they run at 3.2 GHz max. Pathetic Wii U.

:lol: Wow, the CPU is a piece of shit. Maybe that's what is preventing DICE from porting FB2 over.

It'll run technically I'm sure, but it would mean cutting out so much, making it an unrealistic prospect. CryEngine 3 for Shadow of the Eternals isn't that impressive. Crysis using CryEngine 2 could be run on Pentium 4s, so I'm sure that scalability is still there. Of course using a Pentium 4 was damning to the potential product since settings had to be reduced, but it was still workable. Frostbite games like Battlefield 3 are the kind that need a high-end dual core at minimum, like a second-gen i3, to get good, stable FPS, since it gives each core 256-bit-wide SIMD per clock capability, which means a lot of FLOPs per core per clock. The Wii U lacks this aspect.

Doesn't the Wii U have a fuggin GameCube CPU?

I_can_haz

[QUOTE="I_can_haz"][QUOTE="PC_Otter"] It's three Wii U CPUs taped together, with much more L2 cache but only 1.24 GHz, and still stuck in the past with a 64-bit FPU. Xenon and the Cell are capable of 128-bit VMX on all their cores and they run at 3.2 GHz max. Pathetic Wii U.

PC_Otter

:lol: Wow, the CPU is a piece of shit. Maybe that's what is preventing DICE from porting FB2 over.

It'll run technically I'm sure, but it would mean cutting out so much, making it an unrealistic prospect. CryEngine 3 for Shadow of the Eternals isn't that impressive. Crysis using CryEngine 2 could be run on Pentium 4s, so I'm sure that scalability is still there. Of course using a Pentium 4 was damning to the potential product since settings had to be reduced, but it was still workable. Frostbite games like Battlefield 3 are the kind that need a high-end dual core at minimum, like a second-gen i3, to get good, stable FPS, since it gives each core 256-bit-wide SIMD per clock capability, which means a lot of FLOPs per core per clock. The Wii U lacks this aspect.

1st gen Intel Core i3/i5/i7 doesn't have Intel's 256bit wide AVX i.e. it only has 128bit SSE hardware.

Using an external GPU** for my laptop, Battlefield 3 runs fine on Intel Core i7-740QM.

**My old desktop Radeon HD 6950 replaced my old 5770.

It'll run technically I'm sure, but it would mean cutting out so much, making it an unrealistic prospect. CryEngine 3 for Shadow of the Eternals isn't that impressive. Crysis using CryEngine 2 could be run on Pentium 4s, so I'm sure that scalability is still there. Of course using a Pentium 4 was damning to the potential product since settings had to be reduced, but it was still workable. Frostbite games like Battlefield 3 are the kind that need a high-end dual core at minimum, like a second-gen i3, to get good, stable FPS, since it gives each core 256-bit-wide SIMD per clock capability, which means a lot of FLOPs per core per clock. The Wii U lacks this aspect.

[QUOTE="PC_Otter"][QUOTE="I_can_haz"]:lol: Wow, the CPU is a piece of shit. Maybe that's what is preventing DICE from porting FB2 over.

ronvalencia

1st gen Intel Core i3/i5/i7 doesn't have Intel's 256bit wide AVX i.e. it only has 128bit SSE hardware.

I said 2nd gen i3. Didn't you read my post? I didn't refer to desktop, but that's what I meant. LOL

[QUOTE="ronvalencia"]

[QUOTE="PC_Otter"] It'll run technically I'm sure, but it would mean cutting out so much, making it an unrealistic prospect. CryEngine 3 for Shadow of the Eternals isn't that impressive. Crysis using CryEngine 2 could be run on Pentium 4s, so I'm sure that scalability is still there. Of course using a Pentium 4 was damning to the potential product since settings had to be reduced, but it was still workable. Frostbite games like Battlefield 3 are the kind that need a high-end dual core at minimum, like a second-gen i3, to get good, stable FPS, since it gives each core 256-bit-wide SIMD per clock capability, which means a lot of FLOPs per core per clock. The Wii U lacks this aspect.

PC_Otter

1st gen Intel Core i3/i5/i7 doesn't have Intel's 256bit wide AVX i.e. it only has 128bit SSE hardware.

I said 2nd gen i3. Didn't you read my post? I didn't refer to desktop, but that's what I meant. LOL

My point was Battlefield 3 runs fine on 1st gen Intel Core i7 quad cores.

With external GPU(it's now at Radeon HD 6950 2GB), my laptop can easily play any current PC games. I'll upgrade my laptop when it's required for new games.

[QUOTE="ChubbyGuy40"]

[QUOTE="locopatho"] I didn't call you stupid. I'm not insulting you. I'm trying to help you. Stop wishing for stuff that'll never happen. 3rd party ain't happening. Next gen engines and games and devs ain't happening. This isn't news to anyone except like 5 hopeful sheep.

timbers_WSU

Unity 4 Pro is a next-gen, third party engine.

I think you are the very last member of the Wii U Defense Force. At what point are you just gonna say, "**** it. Nintendo messed up and who can blame gamers for laughing and looking the other direction." Point is it doesn't matter what engine is on the Wii U. Sitting side by side with another console is gonna show what the Wii showed this gen. The game just won't be the same on the Wii U.

No one knows how weak or strong the console is yet. NeoGAF is finding new things about the console's power every week. The console is a memory-intensive design compared to the other consoles.

Remember when 4A's(metro 2033) chief technical director called wii-u's cpu slow and horrible?

writings been on the wall for some time now.

MFDOOM1983

The Wii's PowerPC G3-type CPU issues 1 branch + 2 instructions per cycle, with 64-bit SIMD instruction issue slots. It only has 64-bit SIMD hardware.

An AMD Bobcat-type CPU issues 2 instructions per cycle, with 128-bit SSE/SIMD instruction issue slots, but only has 64-bit SSE/SIMD hardware. The Wii's CPU would hit instruction dispatch bottlenecks before AMD Bobcat.

I think you are the very last member of the Wii U Defense Force. At what point are you just gonna say- (**** it. Nintendo messed up and who can blame gamers for laughing and looking the other direction." Point is it doesn't matter what engine is on the Wii U. Sitting side by side of another console is gonna show what the Wii showed this gen. The game just won't be the same on the Wii U.[QUOTE="timbers_WSU"]

[QUOTE="ChubbyGuy40"]

Unity 4 Pro is a next-gen, third party engine.

super600

No one knows how weak or strong the console is yet. NeoGAF is finding new things about the console's power every week. The console is a memory-intensive design compared to the other consoles.

DICE's post indicates the CPU is the main problem, not the GPU. Nintendo should have gone for an AMD Jaguar quad core with one IBM PowerPC G3-type CPU for BC.

Remember when 4A's (Metro 2033) chief technical director called the Wii U's CPU slow and horrible?

writings been on the wall for some time now.

MFDOOM1983

And people told me I was being moronic, a fanboy and delusional when I said the Wii U belonged in the same gen as the PS3/360. Even developers don't consider it a next gen console...

[QUOTE="super600"][QUOTE="timbers_WSU"] I think you are the very last member of the Wii U Defense Force. At what point are you just gonna say- (**** it. Nintendo messed up and who can blame gamers for laughing and looking the other direction." Point is it doesn't matter what engine is on the Wii U. Sitting side by side of another console is gonna show what the Wii showed this gen. The game just won't be the same on the Wii U.

ronvalencia

No one knows how weak or strong the console is yet. NeoGAF is finding new things about the console's power every week. The console is a memory-intensive design compared to the other consoles.

DICE's post indicates the CPU is the main problem, not the GPU. Nintendo should have gone for an AMD Jaguar quad core with one IBM PowerPC G3-type CPU for BC.

Does anyone even know when DICE tested the console? They may have tested the console before it got a spec bump.

DICE's post indicates the CPU is the main problem, not the GPU. Nintendo should have gone for an AMD Jaguar quad core with one IBM PowerPC G3-type CPU for BC.

[QUOTE="ronvalencia"][QUOTE="super600"]

No one knows how weak or strong the console is yet. NeoGAF is finding new things about the console's power every week. The console is a memory-intensive design compared to the other consoles.

super600

Does anyone even know when DICE tested the console? They may have tested the console before it got a spec bump.

I don't think it will solve the CPU issue.

[QUOTE="super600"][QUOTE="ronvalencia"] DICE's post indicates the CPU is the main problem, not the GPU. Nintendo should have gone for an AMD Jaguar quad core with one IBM PowerPC G3-type CPU for BC.

ronvalencia

Does anyone even know when DICE tested the console? They may have tested the console before it got a spec bump.

I don't think it will solve the CPU issue.

I still think the CPU is a mystery, based on comments from multiple other devs that played around with the Wii U's hardware. I think it may be slightly stronger than the 360's CPU.

I don't think it will solve the CPU issue.[QUOTE="ronvalencia"][QUOTE="super600"]

Does anyone even know when DICE tested the console? They may have tested the console before it got a spec bump.

super600

I still think the CPU is a mystery, based on comments from multiple other devs that played around with the Wii U's hardware. I think it may be slightly stronger than the 360's CPU.

On data processing, PPE's 128-bit SIMD would be stronger than the Wii's 64-bit SIMD. Recall AMD's 3DNow! 64-bit SIMD (for both instructions and hardware).

I don't think it will solve the CPU issue.[QUOTE="ronvalencia"][QUOTE="super600"]

Does anyone even know when DICE tested the console? They may have tested the console before it got a spec bump.

super600

I think it may be slightly stronger than the 360 CPU's.

Each "Espresso" core can do 5 instructions of out of order executions (out of order - superior) vs. the Xenon's 2 instructions of in order executions (in order - inferior).

Add to that that an entire Xenon core had to be used for sound, and that Wii U has a dedicated chip for that, as well as the Espresso having way more cache, and up-to-date cache at that. Never mind that the CPU and GPU are on an MCM, which ramps up communication speed as well. This and the separate ARM chip don't really make the CPU stronger, but they take loads off it.

...In other words the CPU in Wii U can easily run circles around Xenon. It's not vastly more capable or anything, but it certainly is head and shoulders above. Nintendo has been working with this kind of CPU for 3 generations now, so certainly they know all kinds of crazy tricks for getting the most out of it.

I found something on neogaf from someone that knows a bit more about the console's hardware than most people

The main "problem" with the CPU, as far as I can tell, is the toolchain - especially if DICE did their tests several months ago (which is probably the case). At least until some time in the second half of 2012, the IDE and toolchain was an outdated version of GHS Multi. There are a couple of problems with that: First of all, pretty much nobody in the games industry has any experience whatsoever using that particular IDE, compiler and profiler. An even bigger problem might have been that developers have (or had) to do most advanced stuff manually I believe. That old version didn't support autovectorization for paired singles as far as I know, in which case devs had to use assembly to get decent floating point performance. The multicore implementation doesn't appear to be standard either, so who knows how much developers had to do by hand to get decent multithreading performance out of this thing? Criterion confirmed in an interview that the pre-launch tools and SDK were pretty much terrible.

It's pretty much the toolchain/terrible dev kits that caused the CPU problems for the Wii U until late 2012. No one knows yet how powerful the Wii U's CPU is when it is under stress.
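To make the paired-singles point concrete, here is a rough sketch of what "doing it by hand" looks like structurally. It is plain Python used purely as an illustration: the function names (`scale_add_scalar`, `scale_add_paired`) are made up, and real Wii U code would use paired-single instructions through assembly or compiler intrinsics, not anything like this. The only idea shown is that, without autovectorization, the programmer has to process two floats per step and deal with the leftover element.

```python
def scale_add_scalar(xs, ys, k):
    """Baseline: one element per step, what a non-vectorizing compiler emits."""
    if len(xs) != len(ys):
        raise ValueError("inputs must have the same length")
    return [k * x + y for x, y in zip(xs, ys)]


def scale_add_paired(xs, ys, k):
    """Hand-'vectorized' version: two elements per step, then a scalar tail.

    Each loop iteration stands in for ONE paired-single operation that
    computes both lanes at once (hypothetical stand-in, not real hardware).
    """
    if len(xs) != len(ys):
        raise ValueError("inputs must have the same length")
    n = len(xs)
    out = [0.0] * n
    i = 0
    while i + 1 < n:
        # Lane 0 and lane 1 of the pair are computed together.
        out[i], out[i + 1] = k * xs[i] + ys[i], k * xs[i + 1] + ys[i + 1]
        i += 2
    if i < n:
        # Odd element count: the last value has no partner.
        out[i] = k * xs[i] + ys[i]
    return out
```

Both functions return the same values; the paired one just halves the number of loop steps, which is the work the quoted post says developers had to do manually on the old toolchain.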

I found something on neogaf from someone that knows a bit more about the console's hardware than most people

The main "problem" with the CPU, as far as I can tell, is the toolchain - especially if DICE did their tests several months ago (which is probably the case). At least until some time in the second half of 2012, the IDE and toolchain was an outdated version of GHS Multi. There are a couple of problems with that: First of all, pretty much nobody in the games industry has any experience whatsoever using that particular IDE, compiler and profiler. An even bigger problem might have been that developers have (or had) to do most advanced stuff manually I believe. That old version didn't support autovectorization for paired singles as far as I know, in which case devs had to use assembly to get decent floating point performance. The multicore implementation doesn't appear to be standard either, so who knows how much developers had to do by hand to get decent multithreading performance out of this thing? Criterion confirmed in an interview that the pre-launch tools and SDK were pretty much terrible.

It's pretty much the toolchain/terrible dev kits that caused the CPU problems for the WiiU until late 2012.No one still knows how powerful the WiiU's CPU is when it is under stress.

super600

That would explain launch ports. I wonder why those saying the CPU is crap didn't just say it was the development kits instead, seems fishy.

Anyways, the CPU is pretty beefy considering the size of the thing and that it's also at its core decade old technology. It's still much better than Xenon. The real star of Wii U is the GPU and RAM amount though.

[QUOTE="super600"]

I found something on neogaf from someone that knows a bit more about the console's hardware than most people

The main "problem" with the CPU, as far as I can tell, is the toolchain - especially if DICE did their tests several months ago (which is probably the case). At least until some time in the second half of 2012, the IDE and toolchain was an outdated version of GHS Multi. There are a couple of problems with that: First of all, pretty much nobody in the games industry has any experience whatsoever using that particular IDE, compiler and profiler. An even bigger problem might have been that developers have (or had) to do most advanced stuff manually I believe. That old version didn't support autovectorization for paired singles as far as I know, in which case devs had to use assembly to get decent floating point performance. The multicore implementation doesn't appear to be standard either, so who knows how much developers had to do by hand to get decent multithreading performance out of this thing? Criterion confirmed in an interview that the pre-launch tools and SDK were pretty much terrible.

It's pretty much the toolchain/terrible dev kits that caused the CPU problems for the WiiU until late 2012.No one still knows how powerful the WiiU's CPU is when it is under stress.

Chozofication

That would explain launch ports. I wonder why those saying the CPU is crap didn't just say it was the development kits instead, seems fishy.

Anyways, the CPU is pretty beefy considering the size of the thing and that it's also at its core decade old technology. It's still much better than Xenon. The real star of Wii U is the GPU and RAM amount though.

Now we just need to wait for Ninty or someone else to master the console's hardware.

[QUOTE="Chozofication"]

[QUOTE="super600"]

I found something on neogaf from someone that knows a bit more about the console's hardware than most people

The main "problem" with the CPU, as far as I can tell, is the toolchain - especially if DICE did their tests several months ago (which is probably the case). At least until some time in the second half of 2012, the IDE and toolchain was an outdated version of GHS Multi. There are a couple of problems with that: First of all, pretty much nobody in the games industry has any experience whatsoever using that particular IDE, compiler and profiler. An even bigger problem might have been that developers have (or had) to do most advanced stuff manually I believe. That old version didn't support autovectorization for paired singles as far as I know, in which case devs had to use assembly to get decent floating point performance. The multicore implementation doesn't appear to be standard either, so who knows how much developers had to do by hand to get decent multithreading performance out of this thing? Criterion confirmed in an interview that the pre-launch tools and SDK were pretty much terrible.

It's pretty much the toolchain/terrible dev kits that caused the CPU problems for the WiiU until late 2012.No one still knows how powerful the WiiU's CPU is when it is under stress.

super600

That would explain launch ports. I wonder why those saying the CPU is crap didn't just say it was the development kits instead, seems fishy.

Anyways, the CPU is pretty beefy considering the size of the thing and that it's also at its core decade old technology. It's still much better than Xenon. The real star of Wii U is the GPU and RAM amount though.

Now we just need to wait for ninty or some else to master the console's hardware.

What do you hope to see this year?

I think it's pretty safe to say we'll see a 3D Mario, and Galaxy pushed the Wii far. Retro's probably going to show something too, who knows what else. Pikmin is impressive but probably won't push the console at all.

[QUOTE="super600"]

[QUOTE="Chozofication"]

That would explain launch ports. I wonder why those saying the CPU is crap didn't just say it was the development kits instead, seems fishy.

Anyways, the CPU is pretty beefy considering the size of the thing and that it's also at its core decade old technology. It's still much better than Xenon. The real star of Wii U is the GPU and RAM amount though.

Chozofication

Now we just need to wait for ninty or some else to master the console's hardware.

What do you hope to see this year?

I think it's pretty safe to say we'll see a 3D Mario, and Galaxy on Wii pushed it far. Retro's probably going to show something too, who know's what else.

Some new IP maybe from Ninty, X, and a bunch of the games that were already mentioned by Ninty.

I think you are the very last member of the Wii U Defense Force. At what point are you just gonna say- (**** it. Nintendo messed up and who can blame gamers for laughing and looking the other direction." Point is it doesn't matter what engine is on the Wii U. Sitting side by side of another console is gonna show what the Wii showed this gen. The game just won't be the same on the Wii U.

timbers_WSU

It's going to show, what exactly? That the graphics are weaker? So it won't have Crysis 3 maxed out at 1080p, big deal. I have no interest in blindly jumping on the hate wagon because it's the popular thing to do on the internet right now.

What do you hope to see this year?

I think it's pretty safe to say we'll see a 3D Mario, and Galaxy pushed Wii far. Retro's probably going to show something too, who know's what else.

Chozofication

3D Mario, Smash Bros, Pikmin 3, TW101, Bayonetta 2, Mario Kart, Retro's project(s). Off the top of my head, those are all confirmed. I definitely want to see more of Monolith Soft's X. It'll be interesting to see how TW101 and Pikmin 3 perform since the CPU is apparently slow and weak. :roll:

[QUOTE="Chozofication"]

What do you hope to see this year?

I think it's pretty safe to say we'll see a 3D Mario, and Galaxy pushed Wii far. Retro's probably going to show something too, who know's what else.

ChubbyGuy40

3D Mario, Smash Bros, Pikmin 3, TW101, Bayonetta 2, Mario Kart, Retro's project(s). Off the top of my head, those are all confirmed. I definitely want to see more of Monolith Soft's X. It'll be interesting to see how TW101 and Pikmin 3 perform since the CPU is apparently slow and weak. :roll:

Pikmin will be incredible looking, but I can definitely see its Wii roots so far. I believe it keeps getting pushed back for graphics, and we haven't seen new footage in a good long while so let's hope it's come a ways.

Even the Wii's Broadway wasn't weak for its time. Take what Super Mario Galaxy did with gravity for example - Broadway could do around 60% of what one Xenon core could. Ever play Boom Blox?

There's a reason Nintendo's stuck with the same core technology for their CPUs for so long other than BC: it's really, really good. Gekko beat out the Xbox's CPU even though it was clocked about a third lower (486 MHz vs. 733 MHz). Judging things by clock speeds is just wrong.

[QUOTE="MFDOOM1983"]

Remember when 4A's(metro 2033) chief technical director called wii-u's cpu slow and horrible?

writings been on the wall for some time now.

GD1551

And people told me I was being moronic, a fanboy and delusional when I said the Wii U belonged in the same gen as the PS3/360. Even developers don't consider it a next gen console...

You were trying to prove a point to a sheep; try to prove a point to a wall and see if it's possible. No matter how you look at the Wii U, weaksauce next-gen system or overpriced last-gen system released in 2012, it still fails to run games that are running on '05 hardware :lol:

[QUOTE="GD1551"]

[QUOTE="MFDOOM1983"]

Remember when 4A's(metro 2033) chief technical director called wii-u's cpu slow and horrible?

writings been on the wall for some time now.

silversix_

And people told me I was being moronic, a fanboy and delusional when I said the Wii U belonged in the same gen as the PS3/360. Even developers don't consider it a next gen console...

You were trying to prove a point to a sheep, try to prove a point to a wall and see if its possible. No matter how you look at wiiu, weaksauce next gen system or overpriced last gen system released in 2012, it still fails to run games that are running on 05 hardware :lol:No one knows what the CPU can do when it is stressed out.

You were trying to prove a point to a sheep, try to prove a point to a wall and see if its possible. No matter how you look at wiiu, weaksauce next gen system or overpriced last gen system released in 2012, it still fails to run games that are running on 05 hardware :lol:[QUOTE="silversix_"]

[QUOTE="GD1551"]

And people told me I was being moronic, a fanboy and delusional when I said the Wii U belonged in the same gen as the PS3/360. Even developers don't consider it a next gen console...

super600

No one knows what the CPU can do when it is stressed out.

No one but devs and devs been telling you it sucks, so yeaaaaaaah.[QUOTE="super600"]

[QUOTE="silversix_"]You were trying to prove a point to a sheep, try to prove a point to a wall and see if its possible. No matter how you look at wiiu, weaksauce next gen system or overpriced last gen system released in 2012, it still fails to run games that are running on 05 hardware :lol:

silversix_

No one knows what the CPU can do when it is stressed out.

No one but devs, and devs have been telling you it sucks, so yeaaaaaaah.

Ninty and multiple other devs have talked about how terrible the dev kits/toolchain were before the Wii U launched. No one knows what the CPU can handle. I'd rather wait for someone to master the console's hardware.

No one but devs and devs been telling you it sucks, so yeaaaaaaah.[QUOTE="silversix_"]

[QUOTE="super600"]

No one knows what the CPU can do when it is stressed out.

super600

Ninty and multiple other devs have talked about how terrible the dev kits/toolchain were before the WiiU launched.No one knows what the CPU can handle.I rather wait for someone to master the console's hardware.

What you seem not to understand AT ALL is that there's nothing, NOTHING to master on this system. This is not what PS4 and probably 720 will be, where it'll take a couple of years for devs to max them out... The sooner you understand this the better you'll sleep at night.

[QUOTE="super600"]

[QUOTE="silversix_"]No one but devs and devs been telling you it sucks, so yeaaaaaaah.

silversix_

Ninty and multiple other devs have talked about how terrible the dev kits/toolchain were before the WiiU launched.No one knows what the CPU can handle.I rather wait for someone to master the console's hardware.

What you seem not to understand AT ALL is that there's nothing, NOTHING to master on this system. This is not what PS4 and probably 720 will be, where it'll take a couple of years for devs to max them out... The sooner you understand this the better you'll sleep at night.

The PS4 and 720 will most likely take a year or two to max out, since they are using easy-to-use PC hardware. The Wii U at minimum produces high-end 360/PS3 graphics. No one has seen what it can produce when it is pushed to the max.

What you seems to not understand AT ALL is that there's nothing, NOTHING to master on this system. This is not what ps4 and probably 720 will be where it'll take a couple of years for devs to max them out... The sooner you understand this the better you'll sleep at night.

silversix_

If there's nothing to master, then why did Criterion and Iwata both say developers have only just started to understand the system? Going by developer statements, it's easy to learn but hard to master. Since when were launch titles indicative of the full power of a system? The Wii U doesn't have a standard, run-of-the-mill CPU like the PS4 and 720 will have, and giving secondary teams the job of doing half-assed ports rarely works out well, if at all.

DICE is to EA like Retro Studios is to Nintendo.

You're confusing DICE with EA.

EA doesn't want to support the Wii U.

Clock-w0rk

[QUOTE="Clock-w0rk"]DICE is to EA like Retro Studios is to Nintendo.You're confusing DICE with EA.

EA doesn't want to support the Wii U.

nintendoboy16

That's not true. DICE actually gets to put out games.

DICE is to EA like Retro Studios is to Nintendo.[QUOTE="nintendoboy16"][QUOTE="Clock-w0rk"]

You're confusing DICE with EA.

EA doesn't want to support the Wii U.

ChubbyGuy40

That's not true. DICE actually gets to put out games.

Not the point. I'm saying that while DICE is a differently named company, they are still owned by EA and pretty much follow their ideals, much like Retro under Nintendo.[QUOTE="ChubbyGuy40"]

[QUOTE="nintendoboy16"] DICE is to EA like Retro Studios is to Nintendo.nintendoboy16

That's not true. DICE actually gets to put out games.

Not the point. I'm saying that while DICE is a differently named company, they are still owned by EA and pretty much follow their ideals, much like Retro under Nintendo. Correct[QUOTE="super600"]

[QUOTE="ronvalencia"] I don't think it will solve the CPU issue. Chozofication

I think it may be slightly stronger than the 360 CPU's.

Each "Espresso" core can do 5 instructions of out of order executions (out of order - superior) vs. the Xenon's 2 instructions of in order executions (in order - inferior).

Add to that that an entire Xenon core had to be used for sound, and that Wii U has a dedicated chip for that, as well as the Espresso having way more cache, and up-to-date cache at that. Never mind that the CPU and GPU are on an MCM, which ramps up communication speed as well. This and the separate ARM chip don't really make the CPU stronger, but they take loads off it.

...In other words the CPU in Wii U can easily run circles around Xenon. It's not vastly more capable or anything, but it certainly is head and shoulders above. Nintendo has been working with this kind of CPU for 3 generations now, so certainly they know all kinds of crazy tricks for getting the most out of it.

You didn't factor in the instruction's data payload width.

If http://en.wikipedia.org/wiki/Espresso_(microprocessor) is true, the problem with IBM Espresso is its 64-bit-wide SIMD unit and low 1.24 GHz clock. For 128-bit data processing, Espresso is effectively like a 620 MHz 128-bit SIMD unit.

In basic terms, one PPE 128-bit SIMD instruction is equivalent to two Espresso 64-bit SIMD instructions. IBM Espresso wouldn't win the data processing category.
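The 620 MHz figure is just width times clock, and it can be checked with a few lines. This is a back-of-the-envelope sketch only: the helper names (`peak_lanes_per_second`, `equivalent_clock_hz`) are made up for illustration, the clocks are the ones quoted in this thread, and it assumes one SIMD operation per cycle per core. It ignores issue width, out-of-order execution, cache, and everything else that decides real performance.

```python
LANE_BITS = 32  # one single-precision float per lane

ESPRESSO_HZ = 1_240_000_000  # 1.24 GHz, as quoted in the thread
PPE_HZ = 3_200_000_000       # 3.2 GHz, as quoted in the thread


def peak_lanes_per_second(simd_bits, clock_hz):
    """Peak 32-bit lanes per second, ASSUMING one SIMD op issued every cycle."""
    return (simd_bits // LANE_BITS) * clock_hz


def equivalent_clock_hz(simd_bits, clock_hz, target_bits):
    """Clock a target_bits-wide unit would need to match the same peak rate."""
    return clock_hz * simd_bits // target_bits


espresso_peak = peak_lanes_per_second(64, ESPRESSO_HZ)
ppe_peak = peak_lanes_per_second(128, PPE_HZ)
espresso_as_128 = equivalent_clock_hz(64, ESPRESSO_HZ, 128)

print(espresso_as_128)           # 620000000, i.e. the 620 MHz quoted above
print(ppe_peak / espresso_peak)  # per-core peak ratio under these assumptions
```

Under these assumptions a 64-bit unit at 1.24 GHz and a 128-bit unit at 620 MHz move the same number of floats per second, which is all the "effectively 620 MHz" claim says.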

From LinuxPPC app benchmarks, PPE (2 instruction issue design) @ 3.2 GHz is equivalent to PowerPC G5 @ 1.6 GHz (1 branch + 4 instruction issue design), i.e. Wii U's Espresso would not win. PowerPC G5 is equipped with 128-bit SIMD hardware.

----

From X86 camp....

A single Intel 256-bit-wide AVX instruction issue (including its 256-bit-wide data payload) can cover Espresso's four instruction issue rate (up to 64 bits wide for data payload).

AMD Jaguar has support for Intel's AVX instruction width and has 128-bit SIMD hardware, i.e. it "double pumps" its 128-bit SIMD hardware for AVX support.
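For anyone unsure what "double pumping" means, here is a toy model. It is a sketch under stated assumptions, not a description of Jaguar's actual pipeline: `double_pumped_add` and `hw_lanes` are hypothetical names, and each loop pass stands in for one trip through the narrower hardware unit. A 256-bit operation (eight 32-bit lanes) on 128-bit hardware (four lanes) takes two passes, while native 256-bit hardware would take one.

```python
def add_lanes(a, b):
    """One pass through the SIMD unit: element-wise add of equal-length chunks."""
    return [x + y for x, y in zip(a, b)]


def double_pumped_add(a, b, hw_lanes=4):
    """Run a wide add on narrower hardware, hw_lanes lanes per pass.

    Returns (result, passes). With 8 input lanes and hw_lanes=4 this models
    a 256-bit instruction executed as two 128-bit halves.
    """
    if len(a) != len(b):
        raise ValueError("operands must have the same number of lanes")
    result = []
    passes = 0
    for start in range(0, len(a), hw_lanes):
        chunk_a = a[start:start + hw_lanes]
        chunk_b = b[start:start + hw_lanes]
        result.extend(add_lanes(chunk_a, chunk_b))
        passes += 1
    return result, passes


wide_a = [0, 1, 2, 3, 4, 5, 6, 7]  # eight 32-bit lanes = one 256-bit register
wide_b = [1, 1, 1, 1, 1, 1, 1, 1]
print(double_pumped_add(wide_a, wide_b, hw_lanes=4))  # two passes
print(double_pumped_add(wide_a, wide_b, hw_lanes=8))  # one pass
```

The program sees a single 256-bit instruction either way; only the number of hardware passes changes.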

It doesn't need to be EA. IBM Espresso is not an Intel Core 2-class CPU, i.e. out-of-order processing with 128-bit SIMD units.

1. EA

2. Frostbite 3 instead of Frostbite 2.

That's really all we need to say.

WilliamRLBaker
