[QUOTE="UnknownSniper65"]Will make the money back from XBL, software sales, and contract options. Can easily have the card by making the console bigger and fyi, the 7990 for 720 will be custom, take less heat, smaller etc...

You are really that dumb? It took MS all the way into 2012 to actually make pure profit on the 360, and that includes Xbox Live and all other sources of income from console gaming. Also, you cannot shrink the 7990 enough to keep it a 7990 and to lower heat :lol:. They would need to use at the very minimum 16nm manufacturing to cut the power consumption by at least 40%, which isn't going to happen until 2015.

Lol there is no way that rumor is true. That Xbox would have to have a pretty big PSU to support that AMD 7990. Not to mention they'd be taking a big loss on the GPU alone if they sold it for 300.

reach3

PC to be destroyed next year. Xbox 720 specs= AMD 7990. 16 Core CPU, 4GB Ram


[QUOTE="ronvalencia"][QUOTE="MK-Professor"]

no

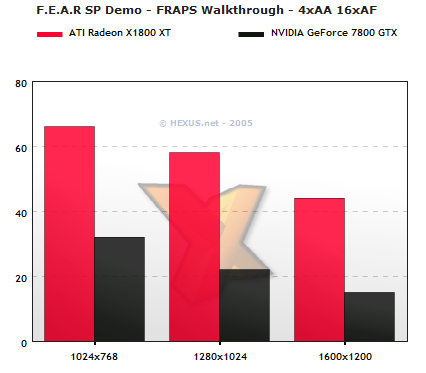

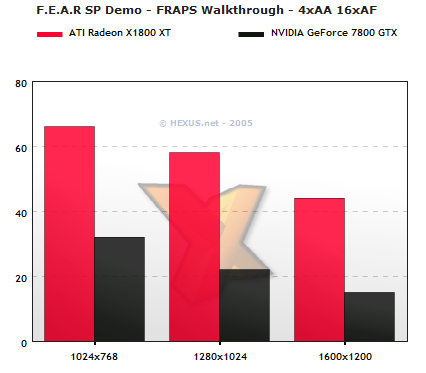

http://www.tomshardware.co.uk/charts/desktop-vga-charts-2006/3DMark06-v1.0.2,584.html even in 3DMark06 the 7800 GTX is still slightly faster.

Back to my initial argument: a prehistoric ATI X1950 Pro can run games like Crysis 2 ( link ) with slightly better graphics and performance than the console version. (Therefore the 7800 GTX shouldn't have a problem playing this game with similar settings.)

reach3

No,

Crysis 2 + Geforce 7800 GTX = fail http://www.youtube.com/watch?v=klgVCk178OI

And Crysis 2 + Xenos = win. It's actually playable on the superior 360 GPU.

Oh yes, let's play an inferior sequel that was designed around console limits. Let's move on to the 1st Crysis, which was recreated for consoles and looks worse than it did back in 2007 even with a 7800.

[QUOTE="MK-Professor"]

no

http://www.tomshardware.co.uk/charts/desktop-vga-charts-2006/3DMark06-v1.0.2,584.html even in 3DMark06 the 7800 GTX is still slightly faster.

Back to my initial argument: a prehistoric ATI X1950 Pro can run games like Crysis 2 ( link ) with slightly better graphics and performance than the console version. (Therefore the 7800 GTX shouldn't have a problem playing this game with similar settings.)

ronvalencia

No,

Crysis 2 + Geforce 7800 GTX = fail http://www.youtube.com/watch?v=klgVCk178OI

In every other benchmark the GeForce 7800 GTX was ahead of the X1800 XT or X1950 Pro.

[QUOTE="ronvalencia"][QUOTE="MK-Professor"]

no

http://www.tomshardware.co.uk/charts/desktop-vga-charts-2006/3DMark06-v1.0.2,584.html even in 3DMark06 the 7800 GTX is still slightly faster.

Back to my initial argument: a prehistoric ATI X1950 Pro can run games like Crysis 2 ( link ) with slightly better graphics and performance than the console version. (Therefore the 7800 GTX shouldn't have a problem playing this game with similar settings.)

reach3

No,

Crysis 2 + Geforce 7800 GTX = fail http://www.youtube.com/watch?v=klgVCk178OI

And Crysis 2 + Xenos = win http://www.youtube.com/watch?v=WFjGCA53sHQ It's actually playable on the superior 360 GPU.

And yet a prehistoric ATI X1950 Pro can run Crysis 2 ( link ) with slightly better graphics and performance than the console version.

No, it's not quite plausible with the specs the TC is so delusional about. The cost, power, and heat will cause the console price to skyrocket. Now, a 16 core CPU is a mixed bag. Are they going to use a standard triple or quad core CPU along with 12-13 ARM processors for multimedia and Kinect? A quad core CPU with 4 threads per core, or an 8 core with two threads per core, would be more plausible. The 7990 claim is plain ludicrous: that type of GPU, even with 28nm manufacturing, is a 300W TDP GPU. That would require a hefty cooler and power supply, and a direct line of sight for air cooling in a console sized case. What will most likely happen is that they will use an APU based console with a quad core CPU and a low TDP GPU portion.

Seems plausible. The Xbox 360 launched with a tri-core processor when the majority of PCs were just moving to dual core processors.

Peredith

[QUOTE="ronvalencia"]

[QUOTE="MK-Professor"]

no

http://www.tomshardware.co.uk/charts/desktop-vga-charts-2006/3DMark06-v1.0.2,584.html even in 3DMark06 the 7800 GTX is still slightly faster.

Back to my initial argument: a prehistoric ATI X1950 Pro can run games like Crysis 2 ( link ) with slightly better graphics and performance than the console version. (Therefore the 7800 GTX shouldn't have a problem playing this game with similar settings.)

MK-Professor

No,

Crysis 2 + Geforce 7800 GTX = fail http://www.youtube.com/watch?v=klgVCk178OI

In every other benchmark the GeForce 7800 GTX was ahead of the X1800 XT or X1950 Pro.

You didn't read my post from Beyond3D and modern shader programs in games.

Geforce 7800 GTX didn't win

1. Oblivion PC (one of many GameBryo engine games) since it's unable to match MSAA+HDR FP. Limitation carried over to PS3. Next-gen game engine for Xbox 360 era.

2. Mass Effect. i.e. a game that uses Unreal Engine 3 (deferred shading for lights). Next-gen game engine for Xbox 360 era.

3. Assassin's Creed. Next-gen game engine for Xbox 360 era.

4. It looks like it wouldn't win Crysis 2 (deferred renderer for lights).

Notice the games mentioned have shader bias.

There are plenty of games that use Unreal Engine 3, e.g. Mirror's Edge, Gears Of War, Mass Effect 2, The Last Remnant, Unreal Tournament 3, Tom Clancy's Rainbow Six: Vegas, etc. http://en.wikipedia.org/wiki/List_of_Unreal_Engine_games#Unreal_Engine_3

The move towards the Xbox 360 era shows ATI's PC DX9 GPU strengths, i.e. it reduces the influence of NVIDIA's "The Way It's Meant To Be Played" DX9 workarounds, which are not sustainable in the long run.

With Nvidia's Geforce 8800 (Nov 2006), NV can abandon their "The Way It's Meant To Be Played" DX9 workarounds. PC doesn't have CELL to "patch" a Geforce 7 type GPU.

One can't say Geforce 7800/7900 is a better GPU compared to the competition since clearly it doesn't show the sustained compute performance. Geforce 7's 32bit FP issues reminds me of Geforce FX i.e. 16bit FP compute is "good enough" mentality.

Mr 15 percent GPU vendor (NVIDIA) would not have clout for "The Way It's Meant To Be Played" in the next gen Wii U/Xbox 720/PS4 game consoles and Mr 25 percent GPU vendor (AMD).

PS: In mobile phone GPUs, Qualcomm's Adreno 320 uses AMD's Xenos technology. Qualcomm's Snapdragon S4 is murdering NV Tegra 3 in unit sales.

If M$ release a console with specs like that the price will be higher AND.... get ready for a loud jet engine console with high fail rate.

KillzoneSnake

RROD only lasted a year, big deal, they learned their lesson. The 360 slim is now more reliable than the PS3 slim. If anything will have a high fail rate, it will be the PS4.

[QUOTE="KillzoneSnake"]If M$ release a console with specs like that the price will be higher AND.... get ready for a loud jet engine console with high fail rate.

reach3

RROD only lasted a year, big deal, they learned their lesson. The 360 slim is now more reliable than the PS3 slim. If anything will have a high fail rate, it will be the PS4.

The 360 slim came out 5 years after the 360. Still the RROD haunts the slim 360 to this day. PS3 slims are much more durable... and guess what... this year Sony is launching the SUPER SLIM PS3 for $199

[QUOTE="reach3"][QUOTE="KillzoneSnake"]If M$ release a console with specs like that the price will be higher AND.... get ready for a loud jet engine console with high fail rate.

KillzoneSnake

RROD only lasted a year, big deal, they learned their lesson. The 360 slim is now more reliable than the PS3 slim. If anything will have a high fail rate, it will be the PS4.

The 360 slim came out 5 years after the 360. Still the RROD haunts the slim 360 to this day. PS3 slims are much more durable... and guess what... this year Sony is launching the SUPER SLIM PS3 for $199

Sorry, I can't understand you, I don't speak BS.

[QUOTE="jokeisgames2012"]fastest cars in the world are american period

nunovlopes

They're also the ugliest.

You really think so?

[QUOTE="KillzoneSnake"]If M$ release a console with specs like that the price will be higher AND.... get ready for a loud jet engine console with high fail rate.

reach3

RROD only lasted a year, big deal, they learned their lesson. The 360 slim is now more reliable than the PS3 slim. If anything will have a high fail rate, it will be the PS4.

People have sent it in more than once for RROD in the 3 years MS extended the 360's warranty. They wouldn't have had to extend it to 3 years, costing them billions, if it only lasted a year. We sent the slim in twice within the one-year warranty with very little use, so I still don't consider them stable. I don't know about Sony's home consoles after the PS1, but with the PSP and now the Vita I don't have to worry about that. The most I will have to do is replace the analogs and battery after a few years if I don't get the remodel when it comes out. I think the 360 slim is still louder. The PS3 having a more enclosed case probably helps though.

I think a better point is that history will just repeat itself and the nextbox will suffer from lack of support just like the first 2.

Yup.

my personal favourite Jet

sts106mat

Got a closeup of that one too back in 2008. Too bad I took the photo with an old Coolpix 995 (7-yr old digital camera). It's my wallpaper too.

LOL rumors, but if they did that................RROD all over again. LOL that would produce so much heat.

So, will have 16 core CPU, 4GB RAM, and AMD 7990 plus Kinect V2. All for $300. With specs like these and throwing the beauty of console optimization on top, the graphics are going to make PC look like Wii U in comparison. No point in building a PC when the 720 costs $300 and has better hardware than even a $2000 PC will have. I guess AMD stating the 720 will have Avatar graphics is true after all.

plus 16 core cpu

http://www.tomshardware.com/news/Xbox-720-16-Core-CPU-xbox-next-microsoft,15285.html

reach3

[QUOTE="nunovlopes"][QUOTE="jokeisgames2012"]fastest cars in the world are american period

Heirren

They're also the ugliest.

You really think so? That body is British, the AC Ace.

That body is British, the AC Ace. ^

Microsoft aren't stupid, they know how to use the hardware effectively. They aren't going casual and settling like Wii U/PS4 and abandoning core. Again, these optimized specs will leave PC and POS4 in the dust. AMD themselves said it will have Avatar-like graphics, so it will be close to them. Can you imagine Halo 5, Gears 4, Witcher 3, Forza etc... with graphics like this? I cannot wait.

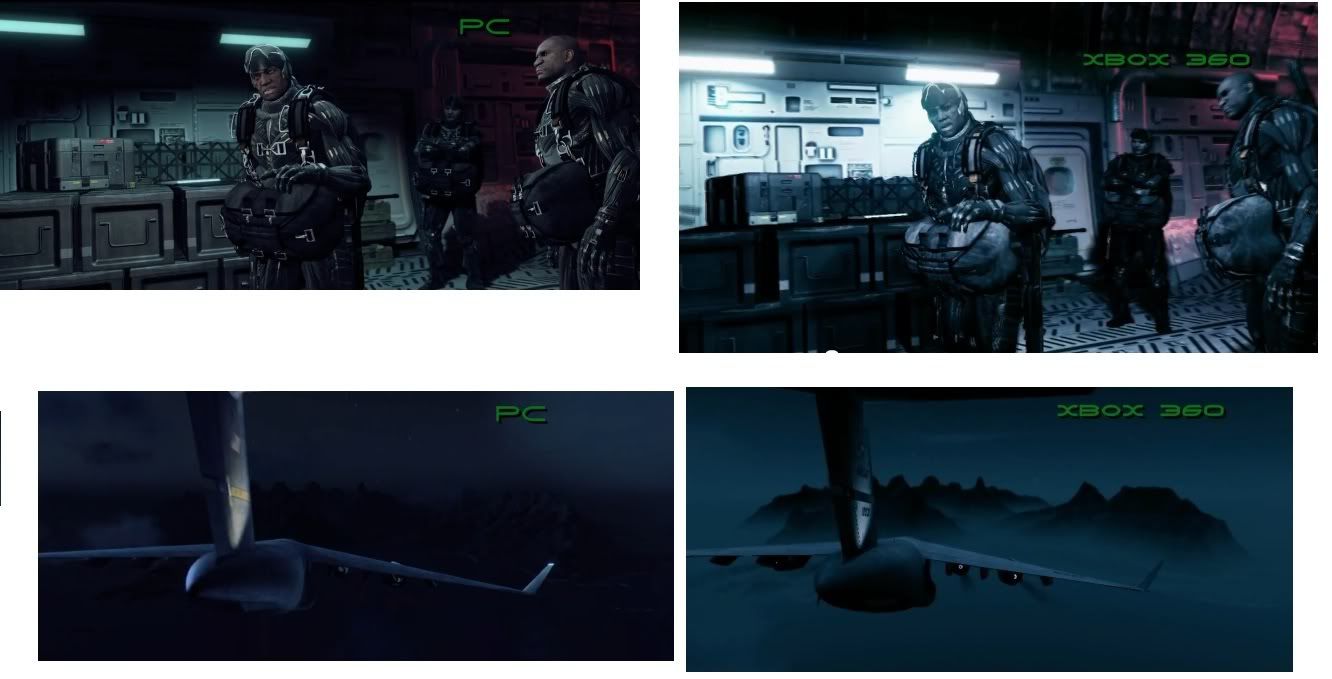


Oh yes, let's play an inferior sequel that was designed around console limits. Let's move on to the 1st Crysis, which was recreated for consoles and looks worse than it did back in 2007 even with a 7800.

There is a pic showing a Korean lifted with maximum strength on PC and PS3. On PS3 the Korean's suit is blurry as hell, his face looks like crap, and the radio on his shoulder is missing. Consoles are laughable.

04dcarraher

Sorry, I can't understand you, I don't speak BS.

reach3

But you do.

Microsoft aren't stupid, they know how to use the hardware effectively. They aren't going casual and settling like Wii U/PS4 and abandoning core. Again, these optimized specs will leave PC and POS4 in the dust. AMD themselves said it will have Avatar-like graphics, so it will be close to them. Can you imagine Halo 5, Gears 4, Witcher 3, Forza etc... with graphics like this? I cannot wait.

reach3

This entire thread proves it. First of all, Microsoft is stupid. Second, no, they don't know how to use hardware effectively, e.g. RROD. They HAVE gone casual, and PC will never be defeated by crapbox. You can't have Avatar graphics. EVER. Unless you use the same techniques they used, which is more motion capture than rendering. But I doubt you have any idea what those terms mean, just like most console gamers who listen to the hype crap the consoles throw at you.

Dude, Battlefield 2142 looks better than Gears of War 1 on 360. High draw distance, 64 players, 8xAA, 16xAF. Technically better than Gears of War 1 on 360. reach3 loses.

Looks like a PS2 game in super high res. Gears may be lower res, but infinitely better technical graphics.

Jebus213

Looks like a PS2 game in super high res. Gears may be lower res, but infinitely better technical graphics.

[QUOTE="Jebus213"]Dude, Battlefield 2142 looks better than Gears of War 1 on 360. High draw distance, 64 players, 8xAA, 16xAF. Technically better than Gears of War 1 on 360. reach3 loses.

reach3

[QUOTE="reach3"]Looks like a PS2 game in super high res. Gears may be lower res, but infinitely better technical graphics.

[QUOTE="Jebus213"]Dude, Battlefield 2142 looks better than Gears of War 1 on 360. High draw distance, 64 players, 8xAA, 16xAF. Technically better than Gears of War 1 on 360. reach3 loses.

Jebus213

[QUOTE="Jebus213"][QUOTE="reach3"] Looks like a PS2 game in super high res. Gears may be lower res, but infinitely better technical graphics.

High draw distance, giant maps, 64 players (BF2 has 128 player servers), 8xAA, 16xAF. Technically better than linear Gears of War 1 on 360. You do know poly count and textures aren't the only technical things, right? Without AF, Gears textures look like sh!t.

And yet Gears' textures are far better, with a far higher poly count.

Oh, and by how far?

reach3

[QUOTE="Jebus213"] High draw distance, giant maps, 64 players (BF2 has 128 player servers), 8xAA, 16xAF. Technically better than linear Gears of War 1 on 360. You do know poly count and textures aren't the only technical things, right? Without AF, Gears textures look like sh!t.

And yet Gears' textures are far better, with a far higher poly count.

Oh, and by how far?

Judging by pics, light years.

[QUOTE="reach3"]And yet Gears' textures are far better, with a far higher poly count.

Oh, and by how far?

Judging by pics, light years. And in some ways, Crysis 1 on 360 buries the PC version.

[QUOTE="Jebus213"] High draw distance, giant maps, 64 players (BF2 has 128 player servers), 8xAA, 16xAF. Technically better than linear Gears of War 1 on 360. You do know poly count and textures aren't the only technical things, right? Without AF, Gears textures look like sh!t.

Jebus213

Better lighting than pc version

Oh, and by how far?

Judging by pics, light years. And in some ways, Crysis 1 on 360 buries the PC version.

[QUOTE="Jebus213"][QUOTE="reach3"] And yet Gears' textures are far better, with a far higher poly count.

reach3

Better lighting than pc version

It doesn't have better lighting. They reduced the graphics and put a blue photo filter over the 360 version.

[QUOTE="reach3"]Judging by pics, light years. And in some ways, Crysis 1 on 360 buries the PC version.

[QUOTE="Jebus213"] Oh, and by how far?

Jebus213

Better lighting than pc version

It doesn't have better lighting. They reduced the graphics and put a blue photo filter over the 360 version.

Looks far better than the PC version's dull lighting, why not admit it? Even Crytek admits the 360 version looks better than the PC version: http://www.nowgamer.com/news/1073013/crytek_crysis_on_console_looks_better_than_pc_version.html

"We asked Yerli if the finished product on consoles ran at the specs he would like. He told us, "Yeah, it runs at the same fidelity as Crysis 2, but the frame rate is a solid 25-30, it's not a 60fps game. It feels very fluid on consoles, and we've also made an effort in remastering the content for consoles." Yerli added, "What I mean by that is we've given it a whole new lighting path, quite a few new special effects, we've added the Nanosuit 2 controls, and all of this has added to, as well as improved the experience." But how does it compare to the PC version of Crysis? "I want to be clear: when I say the console versions look better than Crysis on PC, I mean that as a factual thing""

So CRYTEK themselves agree with me and confirmed the 360 version of Crysis looks far better than the PC version, and it's fact.

@reach3 - I thought we talked about this before and you came to your senses. Nothing on any current console even comes close to games on the PC graphically. To suggest anything different only highlights you as delusional in the extreme.

Secondly, the article you posted says nothing about a 7990. 4x-6x current Xbox power is around a 6850, which is a cheap, low-end gaming PC card by today's standards.

You have no idea what you are talking about, do you?

And yet the article said it will be 7000 series, obviously referring to the 7990.

@reach3 - I thought we talked about this before and you came to your senses. Nothing on any current console even comes close to games on the PC graphically. To suggest anything different only highlights you as delusional in the extreme.

Secondly, the article you posted says nothing about a 7990. 4x-6x current Xbox power is around a 6850, which is a cheap, low-end gaming PC card by today's standards.

You have no idea what you are talking about, do you?

iamrob7
