@tormentos:

Colonel Ron's very busy and he just wanted me to tell you....

Lololololol :P

Let the games begin!

Would have liked a Scorpio. Sadly, it came a little too late. My PC is far more powerful and has most of the games I'd want on the Scorpio.

I already know Battlefront II will run and look better on Scorpio than the VAST majority of PCs on Steam, native 4K @ 60 FPS.

Microsoft is directing devs to use DX12 for games, and BF2 is going to be a DX12 game. Of course, most PlayStation fans ignore that the Xbox One got more 60fps games. The PS4 mostly got 30fps games despite having better hardware.

@gordonfreeman: Yeah, I know, which is why I used the quotation marks, but that's a good point about the custom DirectX 12 integration into the hardware. That should help with processing load.

@ronvalencia: Good point. Advances in AI are not moving as fast, and the game still has to be made for the base consoles as well. I'm not too sure about offloading tasks to the GPU. Would running a game like BF2 at 4K with high to medium asset quality be maxing out the GPU? I guess DirectX could help with this?

Each GCN CU has a 64-bit scalar integer processor, which can take over some of the CPU's rendering logic.

The AMD GCN CU's 64-bit scalar unit is to be enabled under Shader Model 6.0. Shader Model 6 has been planned since the first GCN part, i.e. the Radeon HD 7970.

PS4 has 18 CUs, hence there are 18 scalar processors at 800 MHz.

PS4 Pro has 36 CUs, hence there are 36 scalar processors at 911 MHz.

XBO has 12 CUs, hence there are 12 scalar processors at 853 MHz.

Scorpio has 40 CUs, hence there are 40 scalar processors at 1172 MHz.

The AMD GCN CU's 64-bit scalar unit maps to an x86-64 CPU's 64-bit scalar integer units, i.e. the 64-bit scalar data type is the same.

The GCN CU's 64-bit scalar unit is like a small 64-bit CPU with 8 KB of registers (1024 x 64-bit).
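The per-console scalar numbers above fit in a small table. This is a rough sketch. It assumes, as a simplification, that each CU's scalar unit retires at most one scalar instruction per clock. It uses 853 MHz for the Xbox One, which is that console's published GPU clock, and the 1024 x 64-bit register figure from the post. The function and constant names are illustrative.

```python
# Rough peak scalar throughput per console, assuming (hypothetically) one
# scalar ALU instruction per CU per clock. Real issue rates vary with the
# instruction mix and wavefront scheduling.
CONSOLES = {
    # name: (compute units, GPU clock in MHz)
    "PS4": (18, 800),
    "PS4 Pro": (36, 911),
    "Xbox One": (12, 853),
    "Scorpio": (40, 1172),
}


def scalar_instr_per_second(cus: int, clock_mhz: int) -> float:
    """Peak scalar instructions per second: one per CU per clock (assumed)."""
    return cus * clock_mhz * 1e6


# Scalar register file size as described in the post: 1024 registers x 64 bits.
SGPR_BYTES = 1024 * 64 // 8  # 8192 bytes = 8 KB

for name, (cus, mhz) in CONSOLES.items():
    rate = scalar_instr_per_second(cus, mhz)
    print(f"{name}: {cus} scalar units, ~{rate / 1e9:.1f} G scalar instr/s")
print(f"Scalar register file per CU: {SGPR_BYTES // 1024} KB")
```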

EA DICE's Frostbite 3.x XBO/PS4 builds already make advanced use of the GPU to reduce CPU load, i.e. there's no secret sauce. EA DICE co-wrote the Mantle API with AMD, so they should know AMD GCN's specs.

Shader Model 6 is coming to Xbox One. A jump in graphics quality for the XB1?

I'll ask again. Can anyone provide a few examples of this Jez person being right about his insider info besides the DF reveal?

He can't post fake information; he writes about MS products. And he's revealed a lot of info on other stuff that turned out to be true.

His insiders are Microsoft employees; most of the stuff is controlled leaks.

"And hes revealed lot info that turned out to be true., on other stuff."

This is what I'm asking about. What has he revealed that turned out to be true? Can you provide a small list of examples?

I'm not a fanboy of his; I would have to check. He leaked a video of the dev kit before DF showed it; that's one leak I can remember.

His job is not to write about Xbox. His job is to write about Microsoft products.

It won't be 60 fps, especially in 64-player matches. It MIGHT achieve native 4K at 60 fps in smaller matches such as 16 vs 16 and in single player. But I have huge doubts that 64-player matches will be 60 fps.

They'll probably sit in the 30s like on the current Xbone, constantly dropping below 30 in 64-player matches.

Read a ton of bullshit regarding PC to XB1 comparisons in terms of platform benefits... The original post really wasn't explicitly saying that all those PC gamers who can't run BF2 as well as a Scorpio can should get a Scorpio. Maybe some of them would just want to? Maybe someone can own a Scorpio and a PC... physically possible. Most PCs don't have 1080s and such, and Scorpio is running the game at native 4K 60, a massive upgrade over original X1 performance. Anyone who denies that it's a massive upgrade, or tries to twist that simple news into "yeah, but PC!.. no one on PC should be interested in an Xbox-branded, affordable 4K gaming console", is missing the point or in denial.

Anyone who turns this simple news into such a contest is definitely seeking to downplay the upgrade from X1 to Scorpio for this game.

Shader Model 6 is coming to Xbox One. A jump in graphics quality for the XB1?

No. The scalar processor is used for logic processing, not for data processing. EA DICE is moving more CPU rendering logic to the GPU.

The GPU's vector units mostly deal with graphics data.

Going to proceed with caution on this hype. It's already known that Scorpio outperforms most PCs on Steam, but at what level? This might be the game that supports Ron's assertion, but will the PC version be at E3 for comparison? Also, be cautious: games at E3 don't always run the way they're represented. People here will prematurely pounce on it when they see fps in the 40s, for example.

As long as a 1.6 GHz CPU can drive 60 Hz physics/AI update rates, it should be fine on Scorpio. A straight increase to 2.3 GHz gives about 43 percent more headroom over the 1.6 GHz version, and the lower-latency improvements yield additional headroom.
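The clock-scaling claim above works out as 2.3 / 1.6 = 1.4375, which is about 43.75 percent more throughput. The sketch below assumes a purely CPU-bound step whose time shrinks in inverse proportion to clock. It deliberately ignores the latency improvements the post mentions. The "step that exactly fills the 60 Hz budget" is a hypothetical input.

```python
BASELINE_CPU_GHZ = 1.6   # baseline clock used in the post
SCORPIO_CPU_GHZ = 2.3
UPDATE_RATE_HZ = 60      # physics/AI tick rate from the post


def clock_headroom_percent(old_ghz: float, new_ghz: float) -> float:
    """Extra throughput from a pure clock increase, in percent."""
    return (new_ghz / old_ghz - 1.0) * 100.0


def scaled_step_ms(step_ms_at_old: float, old_ghz: float, new_ghz: float) -> float:
    """Step time after clock scaling, assuming time is inversely proportional to clock."""
    return step_ms_at_old * old_ghz / new_ghz


budget_ms = 1000.0 / UPDATE_RATE_HZ  # ~16.67 ms per 60 Hz update
headroom = clock_headroom_percent(BASELINE_CPU_GHZ, SCORPIO_CPU_GHZ)
# Hypothetical step that exactly fills the 60 Hz budget at 1.6 GHz
faster = scaled_step_ms(budget_ms, BASELINE_CPU_GHZ, SCORPIO_CPU_GHZ)

print(f"Clock headroom: {headroom:.2f}%")
print(f"Budget per update: {budget_ms:.2f} ms; same step at 2.3 GHz: {faster:.2f} ms")
```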

Scorpio's custom command processor reduces the CPU workload (GPU command list building) by up to half.

AMD Jaguar's L2 cache has a 26-cycle access latency.

Intel Sandy Bridge/Haswell's L2 cache has an access latency of ~11 cycles.

http://www.anandtech.com/show/6355/intels-haswell-architecture/9 "Feeding the beast"

Compared with Jaguar, Sandy Bridge's L2 cache has 57 percent less latency and twice the width (16 bytes vs 32 bytes).
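The 57 percent figure is 1 - 11/26. Here it is computed alongside the width ratio. The comparison is in core cycles, so it ignores the different clock speeds of the two designs. The `cycles_to_ns` helper, shown with Scorpio's 2.3 GHz CPU clock as a hypothetical input, converts cycles to wall-clock time.

```python
# L2 access latency and per-cycle width figures quoted in the post.
JAGUAR_L2_LATENCY_CYCLES = 26
SANDY_BRIDGE_L2_LATENCY_CYCLES = 11
JAGUAR_L2_BYTES_PER_CYCLE = 16
SANDY_BRIDGE_L2_BYTES_PER_CYCLE = 32


def percent_less(baseline: float, improved: float) -> float:
    """How much smaller `improved` is than `baseline`, in percent."""
    return (1.0 - improved / baseline) * 100.0


def cycles_to_ns(cycles: float, clock_ghz: float) -> float:
    """Convert a latency in core cycles to nanoseconds at a given clock."""
    return cycles / clock_ghz


latency_cut = percent_less(JAGUAR_L2_LATENCY_CYCLES, SANDY_BRIDGE_L2_LATENCY_CYCLES)
width_ratio = SANDY_BRIDGE_L2_BYTES_PER_CYCLE / JAGUAR_L2_BYTES_PER_CYCLE
print(f"Latency reduction: {latency_cut:.1f}%")
print(f"Width ratio: {width_ratio:.0f}x")
# Hypothetical: Jaguar's 26 cycles at Scorpio's 2.3 GHz CPU clock
print(f"26 cycles at 2.3 GHz: {cycles_to_ns(26, 2.3):.1f} ns")
```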

16 bytes = 128 bits, i.e. one 128-bit SSE value, half of a 256-bit AVX value, or two smaller 64-bit data types (Jaguar has two x86-64 decoders).

32 bytes = 256 bits, i.e. two 128-bit SSE values, one 256-bit AVX value, or four smaller 64-bit data types (Ryzen/Sandy Bridge has four x86-64 decoders).

64 bytes = 512 bits, i.e. two 128-bit SSE values (dual SSE port limit for Haswell), two 256-bit AVX values (dual AVX port limit for Haswell), or four smaller 64-bit data types (Haswell's four x86-64 decoder limit).
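The widths above can be checked with a tiny helper that counts how many values of a given width fit in one transfer. It computes raw capacity only. That is why it reports four SSE values, two AVX values and eight 64-bit values for 64 bytes. The post's lower 64-byte figures come from port and decoder limits, which this sketch does not model.

```python
def values_per_transfer(transfer_bytes: int, value_bits: int) -> float:
    """Raw number of values of `value_bits` width that fit in one transfer."""
    return transfer_bytes * 8 / value_bits


WIDTHS = {"128-bit SSE": 128, "256-bit AVX": 256, "64-bit scalar": 64}

for transfer in (16, 32, 64):
    parts = ", ".join(
        f"{values_per_transfer(transfer, bits):g} x {name}"
        for name, bits in WIDTHS.items()
    )
    print(f"{transfer} bytes = {transfer * 8} bits: {parts}")
```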

Ryzen's 128-bit SSE units have 4 ports, i.e. both the L1 and L2 caches can feed them.

Jaguar's 128-bit SSE units have 2 ports, i.e. both the L1 and L2 caches can feed them (in theory).

MS claims lower latency as part of their CPU improvements, hence roughly halfway to Sandy Bridge.

We will see what settings were used and how stable the framerate is.

I have a system better than the Scorpio, and at 4K I cannot get a comfortable 60FPS in BF1 at Ultra settings.... On High I get 60-80FPS; on Ultra it can drop to 50FPS.

I'll assume that the game will be running at a mixture of settings to get the 60FPS.

Is it true native 4K?

Yeah, and what resolution scale? And what settings?

Here.

I already know Battlefront II will run and look better on Scorpio than the VAST majority of PCs on Steam, native 4K @ 60 FPS.

Well, most PCs on Steam aren't even stronger than the Pro, so Scorpio being even higher doesn't say much. I want to see a head-to-head comparison with the GTX 1070, both frame-rate and quality-wise.

http://www.eurogamer.net/articles/digitalfoundry-2017-project-scorpio-tech-revealed

Scorpio GPU's 2MB L2 cache was used to reach 4K in addition to "more than 300 GB/s memory bandwidth" GPU allocation.

Existing AMD GPUs' pixel engines are not even connected to the L2 cache, i.e. the RX-580 wastes its 2 MB L2 cache since it's not connected to the Pixel Engines.

1. The secret sauce strikes again..

2. Forza is a shittily optimized game and is not impressive in any way. Wait until BF2 arrives and see how it fares vs the 1070, so you can make more shitty posts.

3. You don't need to quadruple the cache to hit 4K; many GPUs do it already. This is nothing more than PR from MS, just like ESRAM was, and the 209GB/s you rode many times that did nothing for the Xbox One.

1. It's NVIDIA's secret sauce. It's part of NVIDIA's tile-cache rendering hardware, and it's a near-guaranteed win for NVIDIA in workloads that use the Pixel Engine path, e.g. Unreal Engine 4 games such as Tekken 7, which has plenty of alpha/blend/semi-transparent effects.

2. Your argument doesn't address the missing features in current AMD GPUs' Pixel Engine path. Forza's wet track has tons of alpha/blend/semi-transparent effects, which inherently go through the Pixel Engine path, i.e. it's OK on GTX 1070 GPUs with 256-bit GDDR5-8000 and terrible on AMD GPUs such as the RX-480 OC with the same 256-bit GDDR5-8000.

The R9-390X has neither DCC to reduce extra memory bandwidth load nor a 2 MB L2 cache to reduce latency and external memory hit rates.

MS used Scorpio's 2 MB L2 cache + more than 300 GBps memory bandwidth for their Forza run.

3. What you haven't grasped is that IF NVIDIA has it, it's a winning GPU performance booster. You can't handle the truth. The GTX 1070 has a BOM cost close to the RX-580's, yet the GTX 1070 delivers superior results and NVIDIA makes a healthy profit. This is why I dumped my AMD desktop PC GPUs.

XBO's ESRAM is garbage, with a 109 GBps memory write limit and a 32 MB storage size, but it enabled XBO's Snake Pass to gain extra foliage over the PS4 version. ESRAM does NOT address the shader ALU-bound issue. Your argument is a red herring.

@Grey_Eyed_Elf: What are your specs?

Better than a Scorpio... It's why this 4K hype is a joke. I KNOW neither the Pro nor Scorpio is really enough unless it's an easy-to-run game. For the majority of games I have to run medium, and if I'm lucky high, settings to get 60FPS. Ultra?... Nope, not unless I drop to 1440p.

Scorpio will undoubtedly be running games at 30FPS.

We will see what settings were used and how stable the framerate is.

I have a system better than the Scorpio and at 4K I cannot get a comfortable 60FPS in BF1 at Ultra settings.... On High I get 60-80FPS, Ultra it can drop to 50FPS.

I'll assume that the game will be running at a mixture of settings to get the 60FPS.

Try overclocking your GPU's memory further. The GTX 1070 is equipped with 256-bit GDDR5-8000, while the GTX 1080 has 256-bit GDDR5X-10000. Newer GTX 1070s have GDDR5-9000 memory modules.

Your MSI GTX 1070 Gaming X has a GDDR5-8100 setup.

http://www.guru3d.com/articles_pages/msi_geforce_gtx_1070_gaming_x_review,30.html

Guru3D overclocked their MSI GTX 1070 Gaming X's memory to GDDR5-9100. Try 8500, then work your memory overclock up to 9000.
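The memory-overclock advice above comes down to one formula: peak bandwidth = bus width in bytes x effective data rate. This minimal sketch treats the number after "GDDR5-" or "GDDR5X-" as the effective rate in MT/s per pin. The configuration labels are just for illustration. Real sustained bandwidth is lower than these peak numbers.

```python
def peak_bandwidth_gbs(bus_width_bits: int, effective_mtps: int) -> float:
    """Peak GB/s = (bus width in bytes) x (effective MT/s) / 1000."""
    return bus_width_bits / 8 * effective_mtps / 1000


CONFIGS = [
    ("GTX 1070, GDDR5-8000", 256, 8000),
    ("MSI GTX 1070 Gaming X, GDDR5-8100", 256, 8100),
    ("Memory overclocked to 9000", 256, 9000),
    ("GTX 1080, GDDR5X-10000", 256, 10000),
]

for label, bus, rate in CONFIGS:
    print(f"{label}: {peak_bandwidth_gbs(bus, rate):.1f} GB/s")
```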

MS used Scorpio's 2 MB L2 cache + more than 300 GBps memory bandwidth for their Forza run, i.e. NVIDIA GPUs don't have a 2 MB L2 cache advantage over Scorpio. Scorpio's Forza result is a no-brainer, i.e. MS copied Maxwell's cache/memory setup.

GP104 has a 2 MB L2 cache connected to its Pixel Engines.

I followed Guru3D's MSI GTX 980 Ti Gaming X overclock settings and it works fine on my MSI GTX 980 Ti Gaming X.

Did you run BF1 in overclock condition?

They made an entire article off a single Tweet? From an argument, no less. Of course Scorpio would perform better than the majority of PCs, but there's no actual source for the article.

I have had that overclock since I got the card and benched it.

BF1 on Ultra at 4K... It will drop to 40-50FPS. It's playable, yes, but I like my MINIMUM to be as close to 60 as possible for FPS games.

The reason I mention this is that there's no way whatsoever that BF2 will be running 4K 60FPS on Ultra... Scorpio, regardless of its memory bandwidth advantage, will be bottlenecked by the raw performance of Polaris.

All of Ron's rambling about bandwidth for the Scorpio GPU is moot. An RX 580 overclocked by 210 MHz, with its memory overclocked to an effective 9000 MHz, saw only a 7% increase over a stock RX 580 at 1440p. At 9 GHz effective memory, the RX 580 is running at 288 GB/s, which is only 12-15 GB/s off the Scorpio GPU's ~300 GB/s. It's not going to make a sizable difference if the GPU can't pump out the pixels. The GPU changes MS described are said to provide 2.7x the fill rate of the X1 S, which would be around 39 Gpixels/s.

I.e. the Scorpio GPU is looking to be around RX 580 performance or slightly more. Then we have to take into account that the GPU won't have access to a full 8 GB of VRAM. Scorpio is going to have to make sacrifices in graphics quality to achieve 4K/60 fps.
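The fill-rate figure above is ROPs x clock. This sketch assumes one pixel per ROP per clock and 16 ROPs at 914 MHz for the Xbox One S. For Scorpio it uses the 32-ROP count given elsewhere in the thread. It shows where the ~39 Gpixels/s number comes from (2.7x the X1 S), and what raw ROPs x clock gives for Scorpio.

```python
def pixel_fill_rate_gpix(rops: int, clock_mhz: int) -> float:
    """Peak pixel fill rate in Gpixels/s, assuming one pixel per ROP per clock."""
    return rops * clock_mhz / 1000


XBOX_ONE_S = pixel_fill_rate_gpix(16, 914)  # 16 ROPs at 914 MHz
SCORPIO = pixel_fill_rate_gpix(32, 1172)    # 32 ROPs at 1172 MHz (thread's figure)

print(f"Xbox One S: {XBOX_ONE_S:.2f} Gpix/s")
print(f"2.7x Xbox One S: {XBOX_ONE_S * 2.7:.1f} Gpix/s")
print(f"Scorpio (32 ROPs x 1172 MHz): {SCORPIO:.2f} Gpix/s")
```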

@Grey_Eyed_Elf: What are your specs?

Better than a Scorpio... It's why this 4K hype is a joke. I KNOW neither the Pro nor Scorpio is really enough unless it's an easy-to-run game. For the majority of games I have to run medium, and if I'm lucky high, settings to get 60FPS. Ultra?... Nope, not unless I drop to 1440p.

Scorpio will undoubtedly be running games at 30FPS.

The Scorpio has faster RAM.

Which means squat if it can't pump out pixel rates beyond RX 580-level performance.

Faster RAM...

System RAM means absolutely ZERO to gaming performance, and VRAM is measured in memory bandwidth and capacity, which can both BE bottlenecks and BE bottlenecked by the GPU... which, in the Scorpio's case, it is.

Just look at the Fury X and the 980 Ti...

Battlefield 1 at 4K (benchmark chart):

Memory Bandwidth doesn't mean a thing if the raw horsepower can't keep up.

BF1 uses 3.6-3.8GB of VRAM, so VRAM isn't the bottleneck that's stopping the Fury X... It's the chip.

300GB/s on a Polaris chip doesn't change a thing, a GTX 1070 at stock will rape it.

Which means squat if it can't pump out pixel rates beyond RX 580-level performance.

Pixel rate is linked to memory bandwidth.

RGBA16F: RX-580's 1340 MHz * 32 ROPs * 8 bytes = 343 GB/s <--- smashes into 256-bit GDDR5-8000's 256 GB/s wall. Polaris ROPs don't have an L2 cache link.

RGBA16F: Scorpio's 1172 MHz * 32 ROPs * 8 bytes = 300 GB/s

I haven't factored in memory subsystem inefficiency or DCC (delta color compression).

Any ROP-bound limitation can be worked around with TMUs.
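The ROP arithmetic above can be checked with a short script. Like the post, it counts only ROP write traffic for an RGBA16F target (8 bytes per pixel). It ignores DCC, memory-subsystem efficiency and blend reads. The 326.4 GB/s Scorpio figure is Microsoft's stated 384-bit, 6.8 GHz configuration, and 256 GB/s is the RX-580's 256-bit GDDR5-8000. The "memory-bound"/"ROP-bound" labels are a simplification for illustration.

```python
RGBA16F_BYTES_PER_PIXEL = 8  # four 16-bit channels


def rop_write_demand_gbs(clock_mhz: int, rops: int,
                         bytes_per_pixel: int = RGBA16F_BYTES_PER_PIXEL) -> float:
    """Peak ROP write traffic in GB/s: clock x ROPs x bytes per pixel."""
    return clock_mhz * 1e6 * rops * bytes_per_pixel / 1e9


# (name, GPU clock MHz, ROPs, peak memory bandwidth GB/s)
GPUS = [
    ("RX-580", 1340, 32, 256.0),   # 256-bit GDDR5-8000
    ("Scorpio", 1172, 32, 326.4),  # 384-bit at 6.8 GHz signalling
]

for name, mhz, rops, mem_gbs in GPUS:
    demand = rop_write_demand_gbs(mhz, rops)
    # If the ROPs could write faster than memory accepts, memory is the limit.
    limit = "memory-bound" if demand > mem_gbs else "ROP-bound"
    print(f"{name}: ROPs want {demand:.1f} GB/s, memory offers {mem_gbs} GB/s -> {limit}")
```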

Faster RAM...

System RAM means absolutely ZERO to gaming performance, and VRAM is measured in memory bandwidth and capacity, which can both BE bottlenecks and BE bottlenecked by the GPU... which, in the Scorpio's case, it is.

Just look at the Fury X and the 980 Ti...

Battlefield 1 at 4K (benchmark chart):

Memory Bandwidth doesn't mean a thing if the raw horsepower can't keep up.

BF1 uses 3.6-3.8GB of VRAM, so VRAM isn't the bottleneck that's stopping the Fury X... It's the chip.

300GB/s on a Polaris chip doesn't change a thing, a GTX 1070 at stock will rape it.

Computer Science 101

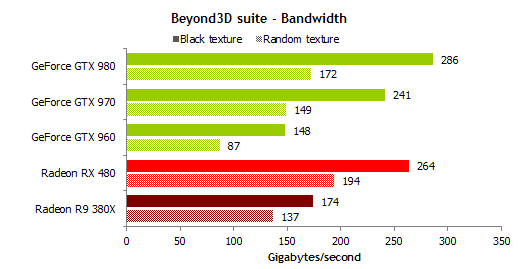

My 1st claim, Fury X does NOT get 512 GB/s memory bandwidth.

Notice the compressed effective memory bandwidth from GTX 980 Ti is similar to Fury X's compressed effective memory bandwidth.

Memory subsystem inefficiency comes back to bite you.

Furthermore, the GTX 980 Ti's Pixel Engines have an ultra-fast, ultra-low-latency L2 cache link, while the Fury X's Pixel Engines run into an HBM memory controller bottleneck.

The gap between 980 Ti and Fury X mirrors Beyond3D's texture benchmark.

All of Ron's rambling about bandwidth for the Scorpio GPU is moot. An RX 580 overclocked by 210 MHz, with its memory overclocked to an effective 9000 MHz, saw only a 7% increase over a stock RX 580 at 1440p. At 9 GHz effective memory, the RX 580 is running at 288 GB/s, which is only 12-15 GB/s off the Scorpio GPU's ~300 GB/s. It's not going to make a sizable difference if the GPU can't pump out the pixels. The GPU changes MS described are said to provide 2.7x the fill rate of the X1 S, which would be around 39 Gpixels/s.

I.e. the Scorpio GPU is looking to be around RX 580 performance or slightly more. Then we have to take into account that the GPU won't have access to a full 8 GB of VRAM. Scorpio is going to have to make sacrifices in graphics quality to achieve 4K/60 fps.

Hahahahah. Try 4K resolution instead of 1440p to load up the memory bandwidth factor.

Polaris 10's compression/decompression rate has a limit of ~256 GB/s. Note that this is why AMD didn't equip the RX-580 with faster memory modules.

For Polaris, it's better to add more memory controllers with more DCC units.

That's exactly why you should stop posting about specifications.

On paper the Fury X is rated at 512GB/s of bandwidth... but it achieves 387GB/s.

Now the Polaris chip in the Scorpio is rated at 300GB/s... yet you are using that as a solid number in every thread about Scorpio.

Polaris memory subsystem efficiency: 194 GBps / 256 GBps = ~75.7 percent efficient, which is similar to the PS4's memory subsystem efficiency.

Polaris memory compression ratio: 264 / 193 = 1.368X

Scaling to Scorpio's 326 GBps physical bandwidth: 75.7 percent x 326 GBps = 246.782 GBps. Applying the 1.368X compression yields ~337.6 GBps, hence it's already in the Fury X's range.

Polaris has better memory compression when compared to Fury X.
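The efficiency and compression estimate above can be reproduced from the figures quoted in the thread alone. The unrounded ratio 194/256 is about 75.8 percent, so the estimate lands near 338 GB/s rather than 337.6. The comments describing what each measured number represents are an interpretation of the post, not confirmed benchmark definitions. The Fury X line uses the 512 GB/s rated vs 387 GB/s achieved figures mentioned earlier in the thread.

```python
# Figures quoted in the thread (GB/s); labels are an interpretation of the post.
POLARIS_PEAK = 256.0
POLARIS_MEASURED = 194.0          # measured figure used for efficiency
POLARIS_COMPRESSED = 264.0        # measured effective figure with compression
POLARIS_COMPRESSION_BASE = 193.0  # baseline used for the compression ratio
SCORPIO_PEAK = 326.0
FURY_X_PEAK, FURY_X_MEASURED = 512.0, 387.0

efficiency = POLARIS_MEASURED / POLARIS_PEAK
compression = POLARIS_COMPRESSED / POLARIS_COMPRESSION_BASE
scorpio_estimate = SCORPIO_PEAK * efficiency * compression

print(f"Polaris efficiency: {efficiency:.1%}")
print(f"Polaris compression ratio: {compression:.3f}x")
print(f"Scorpio effective estimate: {scorpio_estimate:.1f} GB/s")
print(f"Fury X measured: {FURY_X_MEASURED} GB/s ({FURY_X_MEASURED / FURY_X_PEAK:.1%} of peak)")
```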

For Scorpio's Forza run:

http://www.eurogamer.net/articles/digitalfoundry-2017-project-scorpio-tech-revealed

"We quadrupled the GPU L2 cache size, again for targeting the 4K performance."

The XBO version already uses a tiled-rendering game engine, and the Scorpio GPU's 2MB L2 cache was used to reach 4K, whereas the R9-390X's Pixel Engine has no L2 cache connection.

The R9-390X's L2 cache is 1 MB, and its Pixel Engine is NOT connected to that 1 MB L2 cache. Fury's and Polaris's 2MB L2 caches are NOT connected to their Pixel Engines either, hence they are more dependent on the external memory subsystem.

The Scorpio GPU's 2MB L2 cache can also act as a buffer against the CPU's memory usage, i.e. it reduces main memory hit rates for the GPU.
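The size gap that makes tiling matter can be shown with a hypothetical calculation. A full 4K RGBA16F render target is far larger than a 2 MB L2, so a tiled renderer works on pieces that fit. The sketch assumes, unrealistically, that the entire L2 holds one color tile, and it picks a power-of-two square tile. It is an illustration of the size relationship, not how Microsoft or Turn 10 actually partition the cache.

```python
import math

WIDTH, HEIGHT = 3840, 2160      # 4K render target
BYTES_PER_PIXEL = 8             # RGBA16F, chosen for illustration
L2_BYTES = 2 * 1024 * 1024      # Scorpio GPU's 2 MB L2


def largest_pow2_square_tile(cache_bytes: int, bytes_per_pixel: int) -> int:
    """Largest power-of-two square tile whose pixels fit in `cache_bytes`."""
    side = math.isqrt(cache_bytes // bytes_per_pixel)
    return 1 << (side.bit_length() - 1)


def tile_count(width: int, height: int, tile: int) -> int:
    """Number of tiles needed to cover the target (partial edge tiles count)."""
    return math.ceil(width / tile) * math.ceil(height / tile)


full_target_bytes = WIDTH * HEIGHT * BYTES_PER_PIXEL
tile = largest_pow2_square_tile(L2_BYTES, BYTES_PER_PIXEL)
print(f"Full 4K RGBA16F target: {full_target_bytes / 2**20:.1f} MiB")
print(f"Largest square tile in 2 MB: {tile}x{tile}, {tile_count(WIDTH, HEIGHT, tile)} tiles")
```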

--------------------

Reduce to high settings for 4K / 60 fps.

-------------------------

If Scorpio has insufficient data-element processing power, the Polaris IP has a dual-subword FP16 feature.

With it, Polaris processes data elements at double rate while keeping the math-operations-per-cycle rate as is.

This is similar to Vega's double-rate FP16, but the Polaris version doesn't increase the math-operations-per-cycle rate.
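The "dual subword" idea above is about data layout: two 16-bit floats share one 32-bit register, so each register holds twice as many data elements. This sketch shows only that packing, using Python's `struct` half-precision (`e`) format. It does not model Polaris ALU behaviour or throughput, and the helper names are hypothetical.

```python
import struct


def pack_half2(lo: float, hi: float) -> int:
    """Pack two IEEE 754 half-precision values into one 32-bit word (lo in bits 0-15)."""
    lo_bits, hi_bits = struct.unpack("<2H", struct.pack("<2e", lo, hi))
    return lo_bits | (hi_bits << 16)


def unpack_half2(word: int) -> tuple:
    """Split a 32-bit word back into its two half-precision values (lo, hi)."""
    return struct.unpack("<2e", struct.pack("<I", word))


word = pack_half2(1.5, -2.0)
print(f"packed word: 0x{word:08X}")
print("unpacked:", unpack_half2(word))
```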

@xboxiphoneps3:

You have to include what the CPU and other devices will be using as well. So the GPU will have 300 GB/s or a little less.

Not only that: by far the biggest games played today, which rake in by far the most money, are PC games; consoles are not even close. E-sports is a real thing and completely dominated by PC gaming.

Wrong. Mobile gaming has surpassed PC in revenue. Hot girls and non-gamers playing Candy Crush, Angry Birds, etc. bring in more $ than those dropping dead in Asian internet cafes who are addicted to MMOs. The funny part is that right before you said this, you even mentioned mobile gaming.

I cant wait til Sunday!

The spin's already starting.

'Bu bu bu it's 4k60 on MEDIUM'

lawl

This...exactly. Ever since Scorpio's announce trailer, the goalposts from Sony fanboys and PC elitists have continually shifted to something else as issues x, y, z have come up and Scorpio has proven itself beyond expectations a, b, c.

But it's never good enough or impressive for them. Rarely do any of these Xbox hater posters here have any credibility whatsoever. Well, only MS 1st party will see any upgrade. Well, it's only a little bit more powerful than Pro, so maybe 1440 or 1800p checkerboard with some more frames......well, yeah it'll do 4k native with this 3rd party engine cause the engine's really optimized......oh, well yeah it'll do 4k/60fps but LOWEST settings........to now....well ok 4k 60fps but ONLY medium settings and none higher.

What is the point of all this stupid bullshit? Most of these idiots would've looked a lot smarter if they'd kept their damn mouths shut since last E3 and just waited for all the specs and proven performance to come to light, but they couldn't resist the urge to talk shit hoping it was underpowered.

Scorpio dwarfs Pro in specs, fucking deal with it and accept it. Yep, you don't like the game lineup, we know. So don't buy it. But others care. These haters try so hard to make us not care....I guess? As if we can be dissuaded or admit the greatness of their holy Roachstations? Scorpio is going to be a great, affordable 4k gaming machine with great value for those who want a 4k console. Someone with a sub-1050 GPU PC might wanna pick one up to supplement their gaming....and many X1 users want to upgrade.

@xboxiphoneps3:

You have to include what the CPU and other devices will be using as well. So the GPU will have 300 GB/s or a little less.

1. XBO has a fusion link to reduce external memory hit rates, which doesn't exist on desktop PCs with discrete GPUs.

2. The Kinect DSPs have their own smaller ESRAM, which is separate from the 32 MB ESRAM. It's unknown for Scorpio.

3. The Scorpio GPU has a 2MB L2 cache as a 4K performance booster for Forza's wet track, which contains tons of alpha/semi-transparent effects. Current AMD GPUs' Pixel Engines (which handle alpha/semi-transparent effects) don't have an L2 cache connection.

http://www.eurogamer.net/articles/digitalfoundry-2017-project-scorpio-tech-revealed

"We quadrupled the GPU L2 cache size, again for targeting the 4K performance."

The XBO version is already a tiled-render game, and the GPU's 2MB L2 cache was used to reach 4K.

Existing AMD GPUs' pixel engines are not even connected to the L2 cache.

The Scorpio GPU's 2MB L2 cache is designed to reduce external memory hit rates and lower latency.

http://www.eurogamer.net/articles/digitalfoundry-2017-the-scorpio-engine-in-depth

"For 4K assets, textures get larger and render targets get larger as well. This means a couple of things - you need more space, you need more bandwidth," explains Nick Baker. "The question though was how much? We'd hate to build this GPU and then end up having to be memory-starved. All the analysis that Andrew was talking about, we were able to look at the effect of different memory bandwidths, and it quickly led us to needing more than 300GB/s memory bandwidth. In the end we ended up choosing 326GB/s. On Scorpio we are using a 384-bit GDDR5 interface - that is 12 channels. Each channel is 32 bits, and then 6.8GHz on the signalling so you multiply those up and you get the 326GB/s."

The Scorpio GPU was allocated more than 300 GB/s of memory bandwidth.

4. The custom command processor, with DirectX 12 baked in and micro-coding (mini-CPU) support, reduces the CPU workload by up to half.
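Nick Baker's 326GB/s figure in the Digital Foundry quote above multiplies out as channels x channel width x signalling rate. This minimal sketch follows exactly the numbers in that quote: 384 bits in 32-bit channels at 6.8 GHz. The constant names are illustrative.

```python
BUS_WIDTH_BITS = 384
CHANNEL_WIDTH_BITS = 32
DATA_RATE_GBPS_PER_PIN = 6.8  # "6.8GHz on the signalling"

channels = BUS_WIDTH_BITS // CHANNEL_WIDTH_BITS                     # 12 channels
per_channel_gbs = CHANNEL_WIDTH_BITS * DATA_RATE_GBPS_PER_PIN / 8   # bits -> bytes
total_gbs = channels * per_channel_gbs

print(f"{channels} channels x {per_channel_gbs:.1f} GB/s = {total_gbs:.1f} GB/s")
```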

@Ghost120x: Nintendo did the same thing with the "seal of approval" because, prior to the internet, there was no good way for the common consumer to filter out the junk.

Ironically, some, and I agree with them, now mock that Nintendo seal of approval, cause that was on so many games...that were in fact, absolute garbage. AVGN level garbage.

But hey, someone tested it in an NES and it has a start menu and is semi-functional, it gets the seal! As long as the check for Nintendo for licensing clears...

Ah...Nintendo, you will never get my money again.

For the changes they made to the GPU, they stated a 2.7x fill rate increase compared to the X1, which most likely means we are looking at under 40 Gpixels/s from a GPU that is still largely Polaris-based. The GPU will not see the full 326 GB/s, so suggesting the 300 GB/s area is not out of the realm of probability.

Also, the command processor is not a true saving grace that cuts CPU-to-GPU workloads in half.

"Microsoft's Andrew Goossen has been in touch to clarify that D3D12 support at the hardware level is actually a part of the existing Xbox One and Xbox One S too." "The amount of win is dependent on the game engine and content, and not all games will see that size of improvement."
